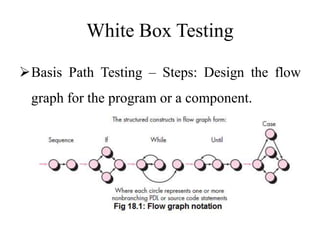

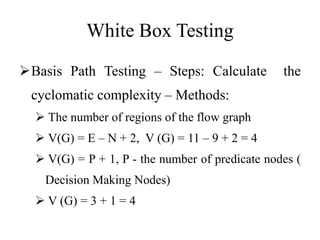

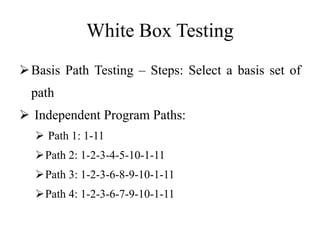

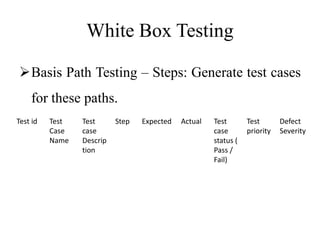

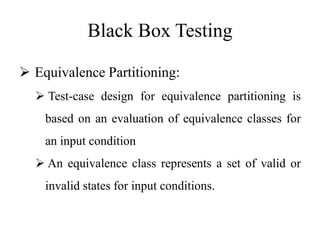

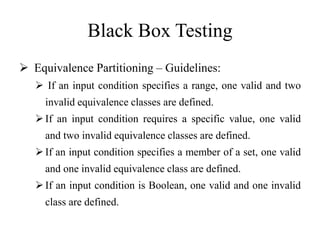

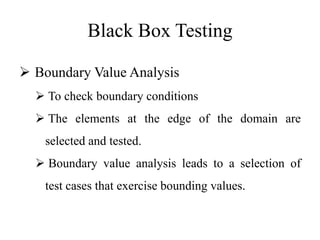

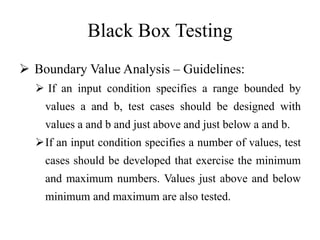

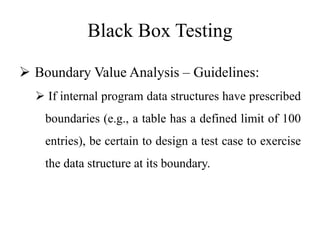

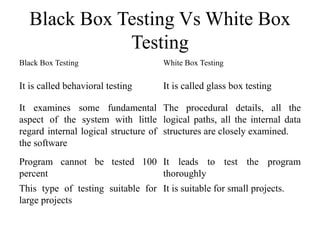

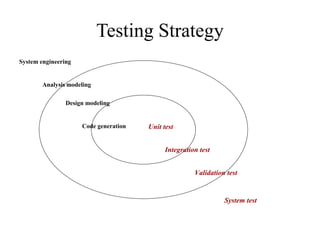

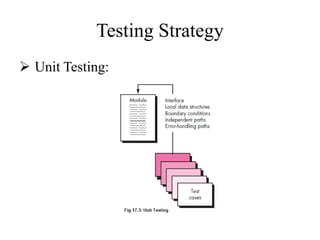

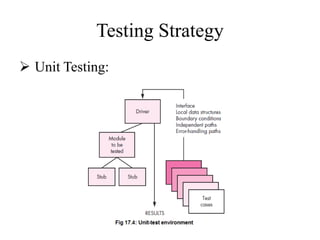

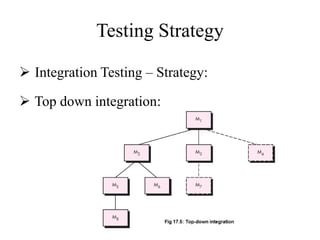

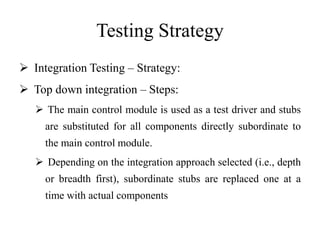

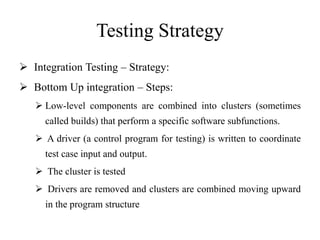

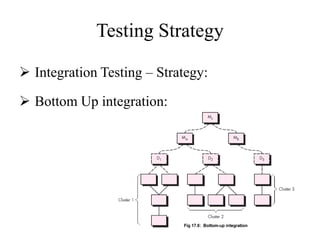

Testing means executing a program with the intent of finding errors, and it is carried out at several stages. Unit testing exercises individual program modules in isolation; integration testing combines units and tests their interactions; regression testing re-executes previous tests to confirm that changes have not introduced errors. Two key approaches are white box testing, which derives test cases from the program's internal logic, and black box testing, which ignores the internals and checks only that given inputs produce the expected outputs.
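
The distinctions above can be made concrete with a small sketch. Everything in it is hypothetical and chosen only for illustration: `apply_discount` stands in for a unit, `cart_total` for a component that integrates it, and `run_regression_suite` for re-running the existing tests after a change. It is one possible arrangement, not a prescribed method.

```python
def apply_discount(price: float, percent: float) -> float:
    """Hypothetical unit under test: reduce price by percent (0 to 100)."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


def cart_total(prices: list, percent: float) -> float:
    """Hypothetical caller that combines apply_discount with summation."""
    return round(sum(apply_discount(p, percent) for p in prices), 2)


def test_unit_black_box():
    # Black box: cases come from the specification (input -> expected output),
    # with no reference to how apply_discount is written.
    assert apply_discount(200.0, 25) == 150.0
    assert apply_discount(80.0, 0) == 80.0


def test_unit_white_box():
    # White box: cases come from reading the code, here to make sure the
    # range-check branch is executed on both sides of the valid interval.
    for bad_percent in (-1, 101):
        try:
            apply_discount(100.0, bad_percent)
        except ValueError:
            continue
        raise AssertionError("expected ValueError for out-of-range percent")


def test_integration():
    # Integration: checks that the two units work correctly together.
    assert cart_total([100.0, 50.0], 10) == 135.0


def run_regression_suite() -> int:
    # Regression: after any change, re-execute every existing test.
    # Returns the number of tests that ran without raising.
    tests = (test_unit_black_box, test_unit_white_box, test_integration)
    for test in tests:
        test()
    return len(tests)


if __name__ == "__main__":
    print(f"{run_regression_suite()} tests passed")
```

In practice a test runner such as `unittest` or `pytest` would discover and run these functions; the explicit `run_regression_suite` loop is kept here only to make the "re-execute previous tests" idea visible.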