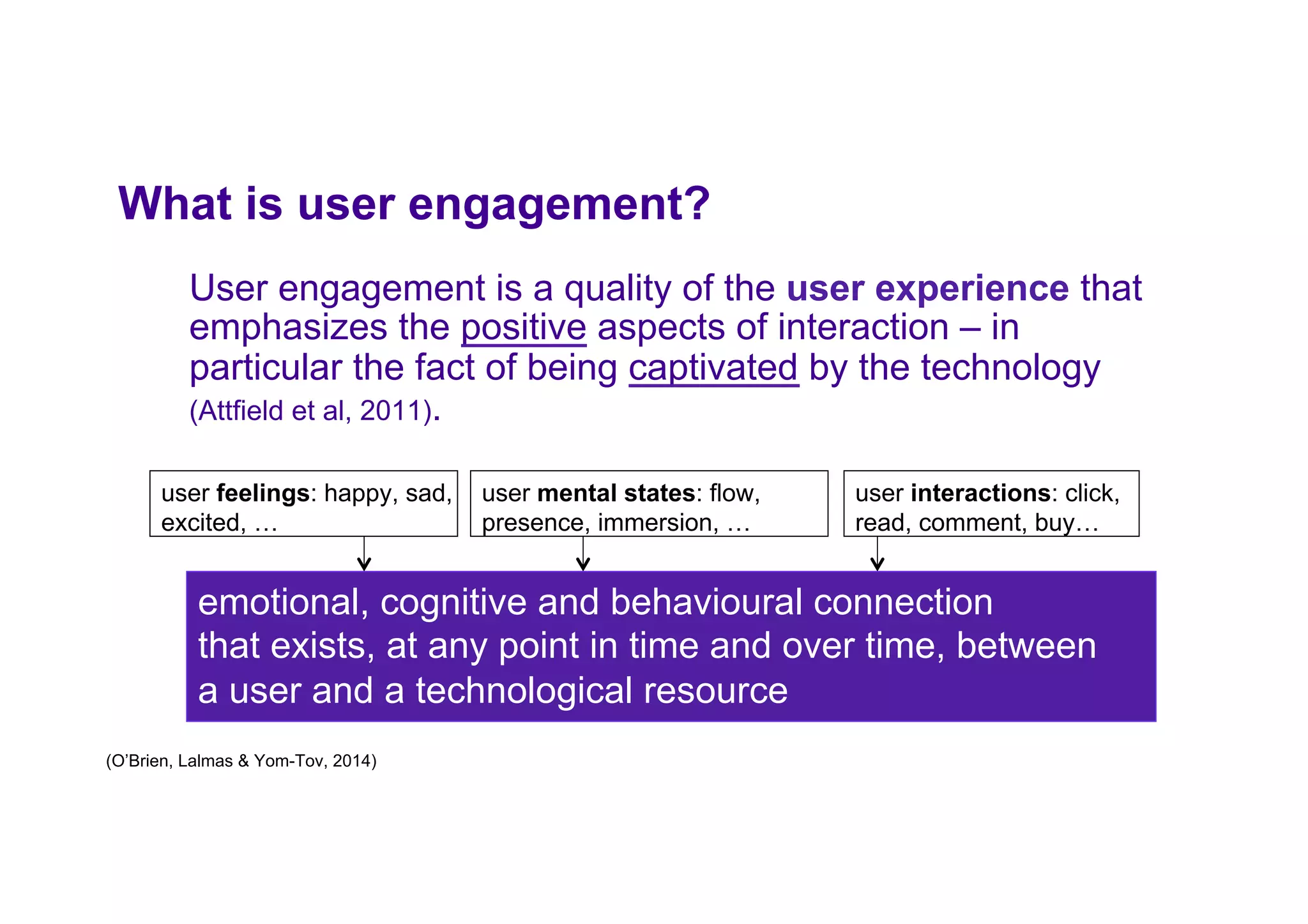

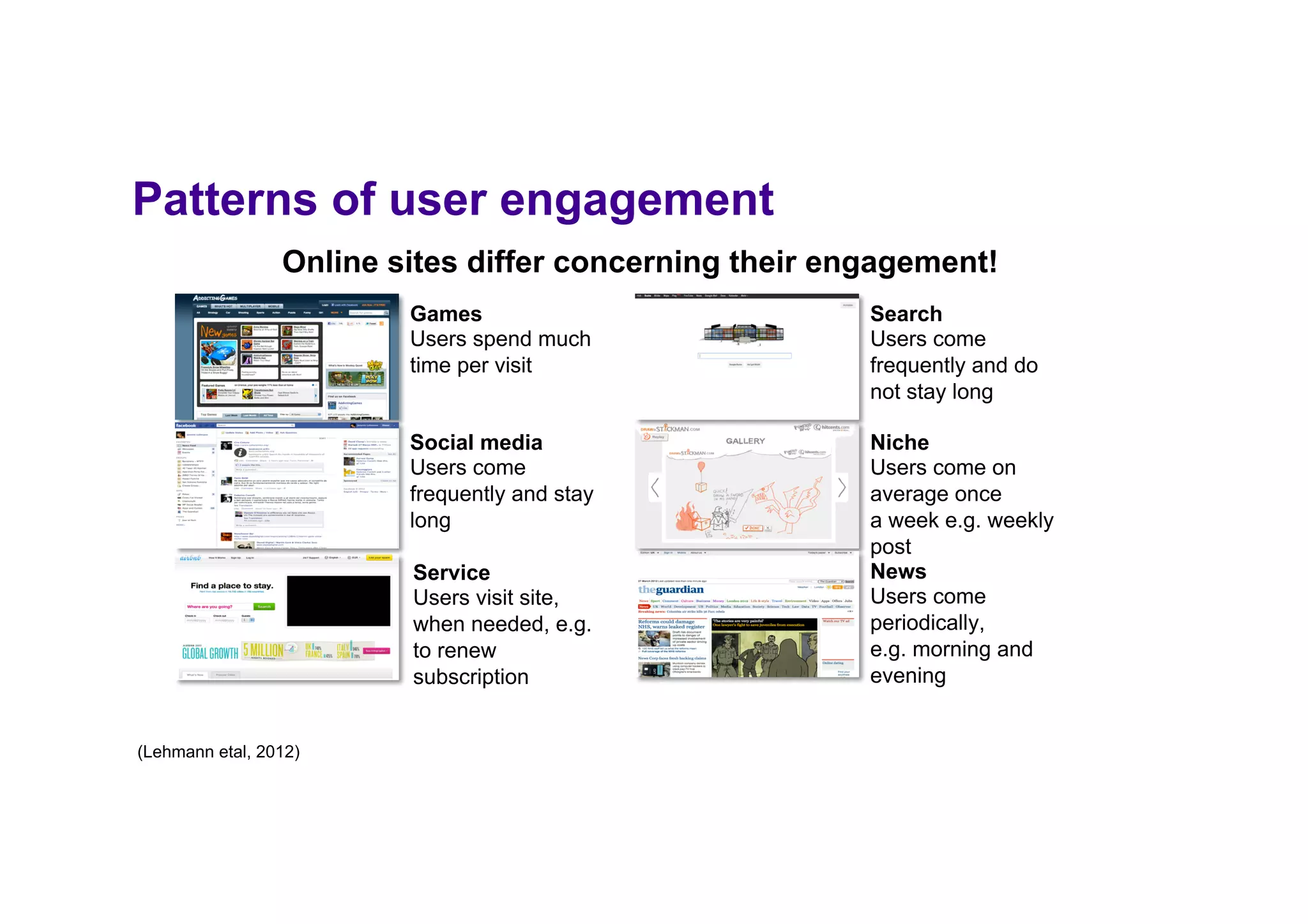

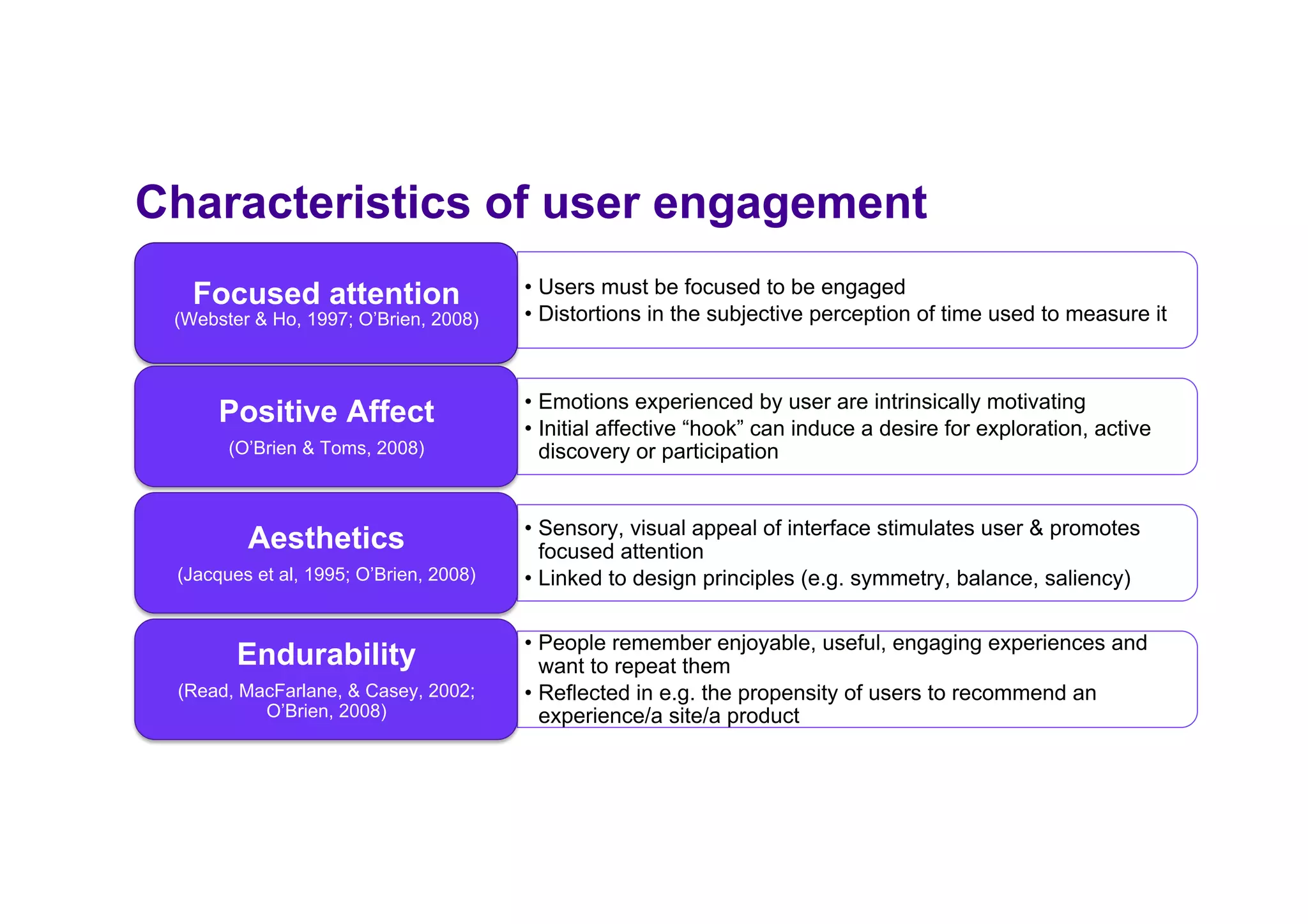

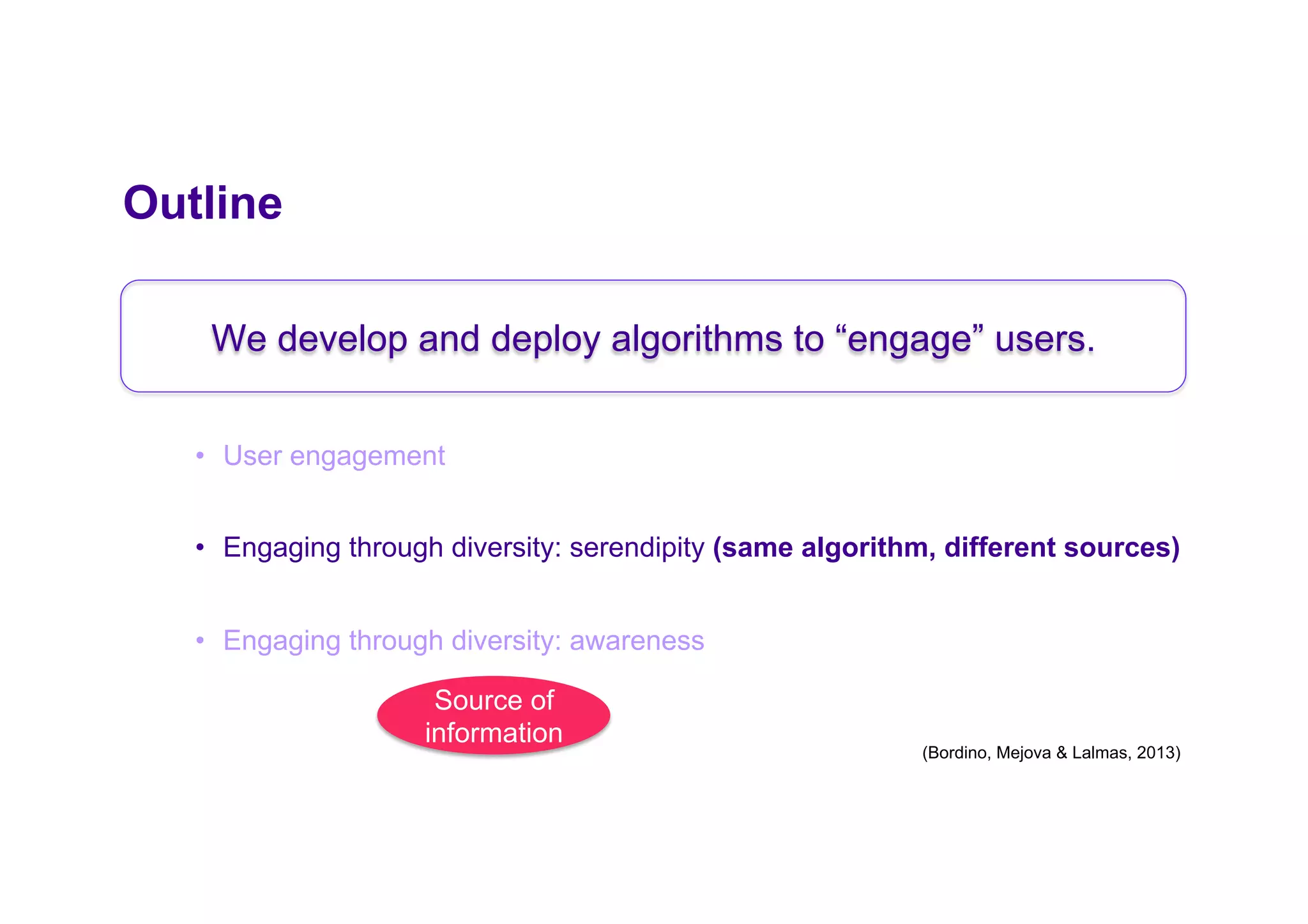

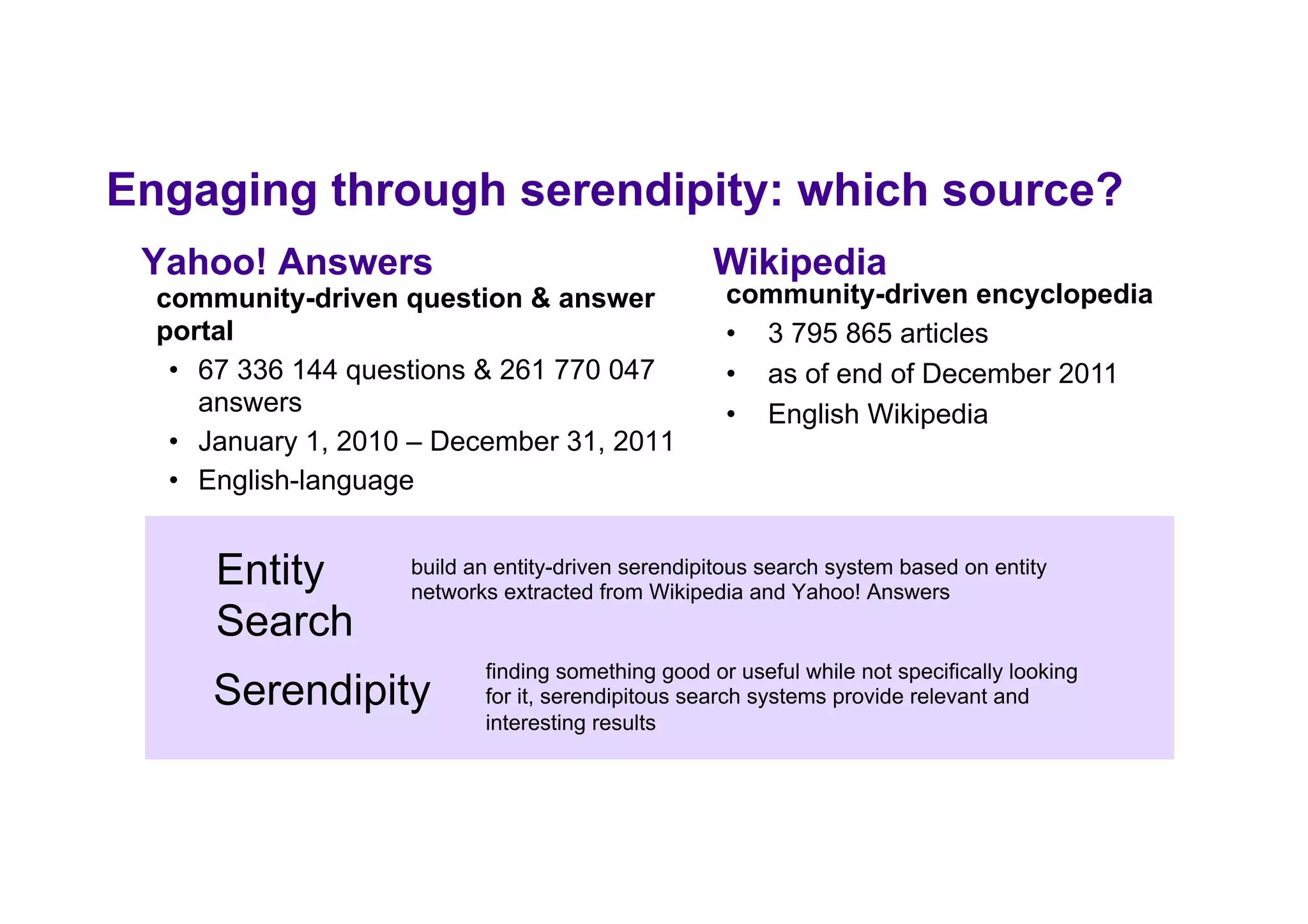

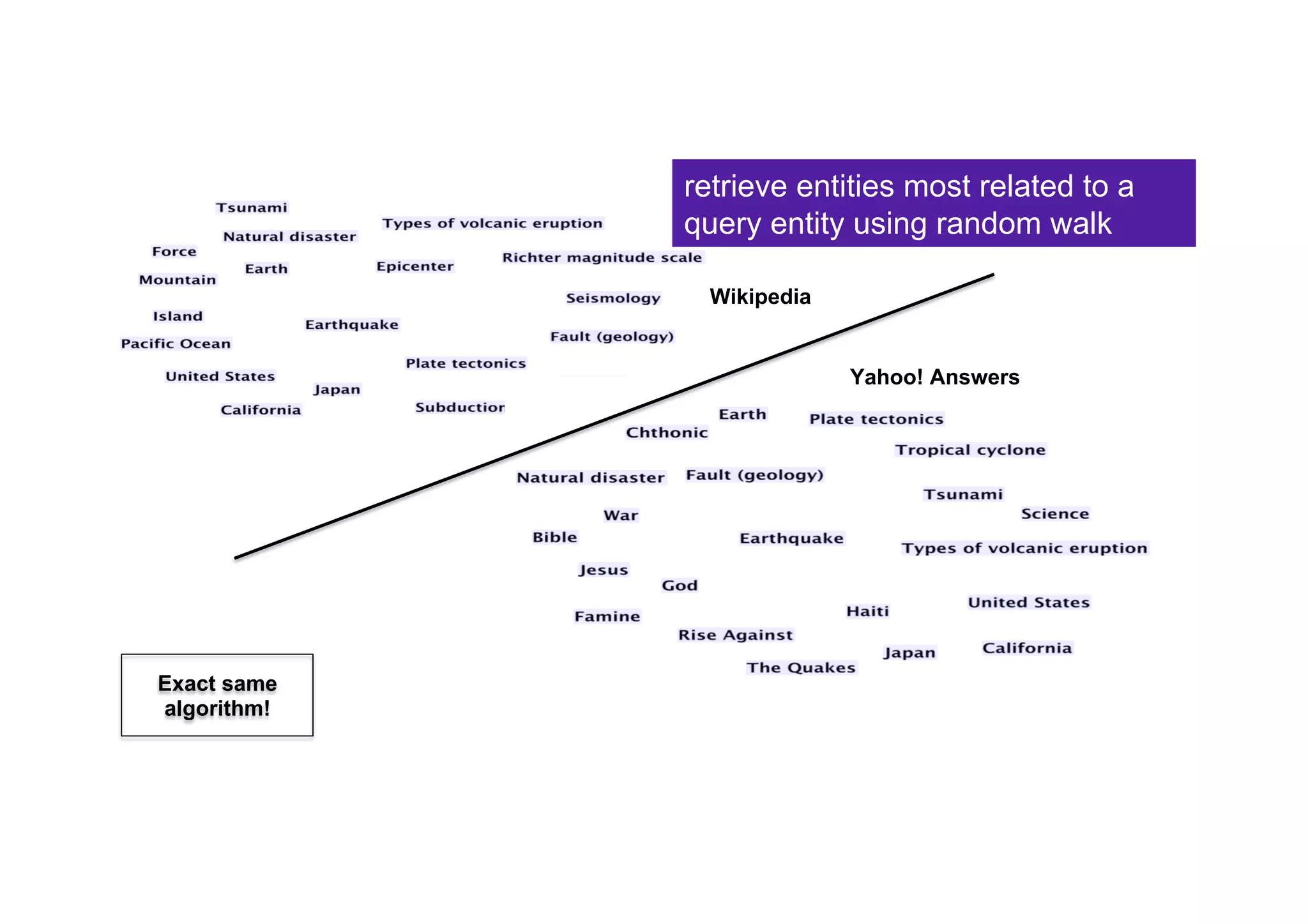

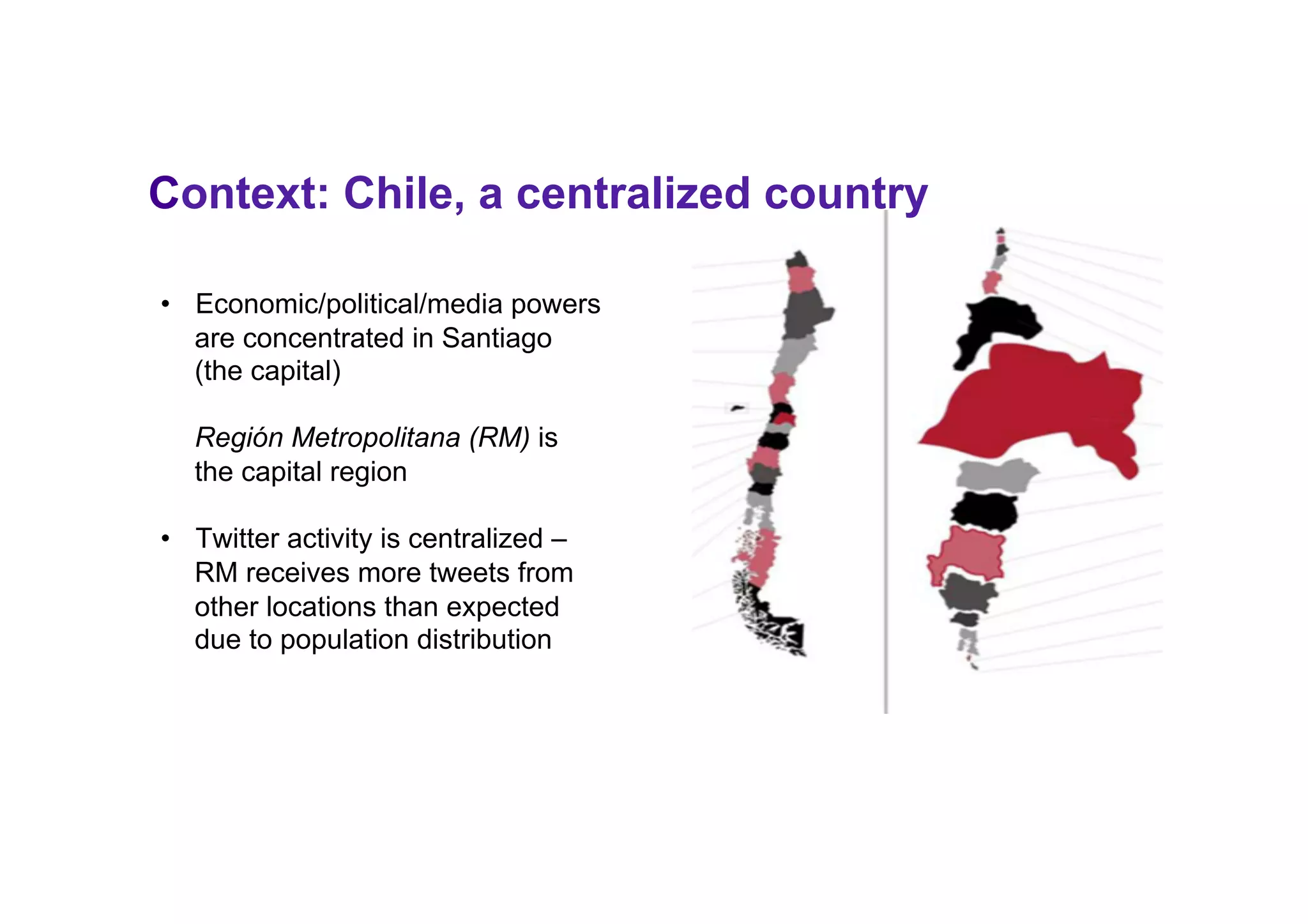

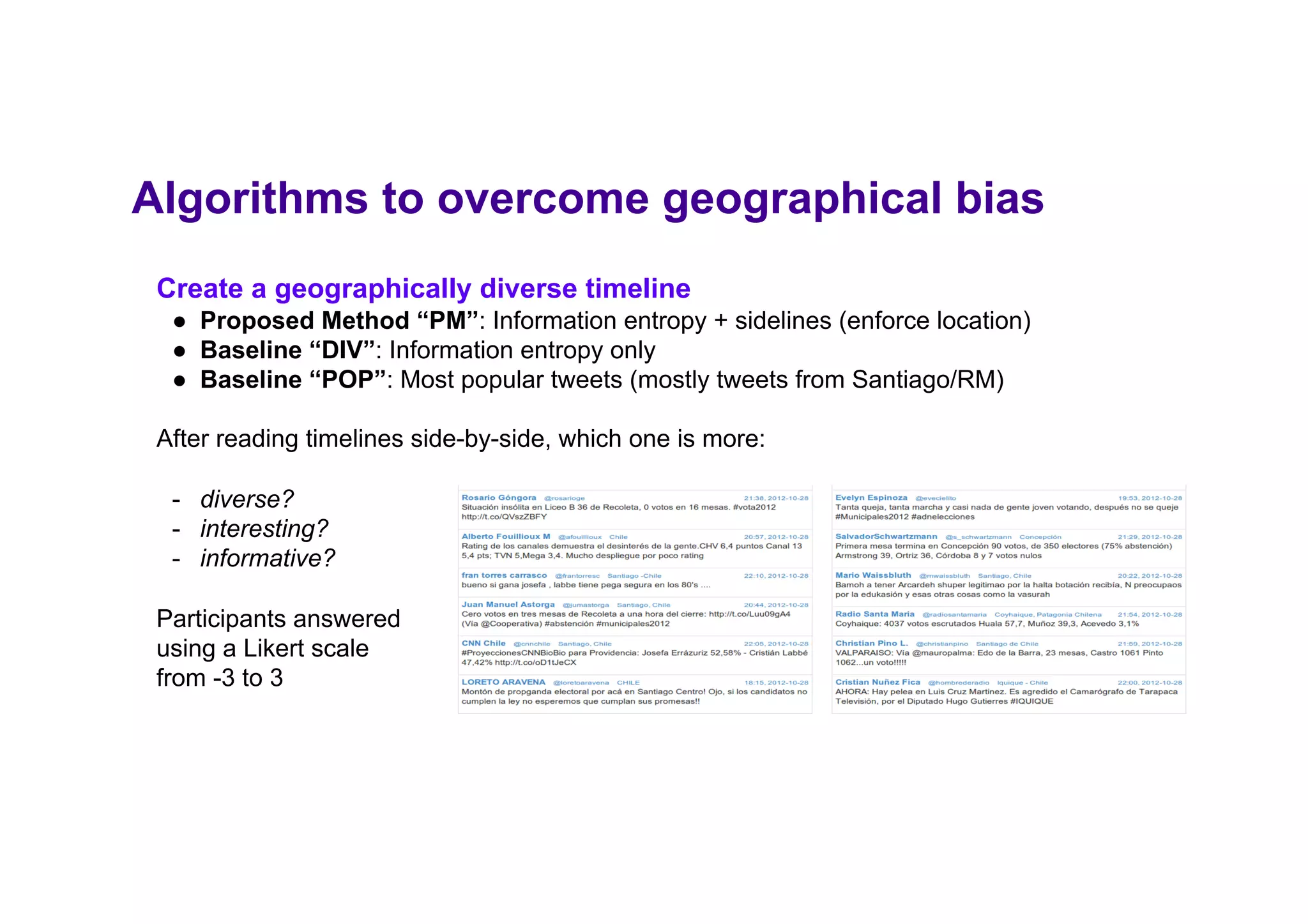

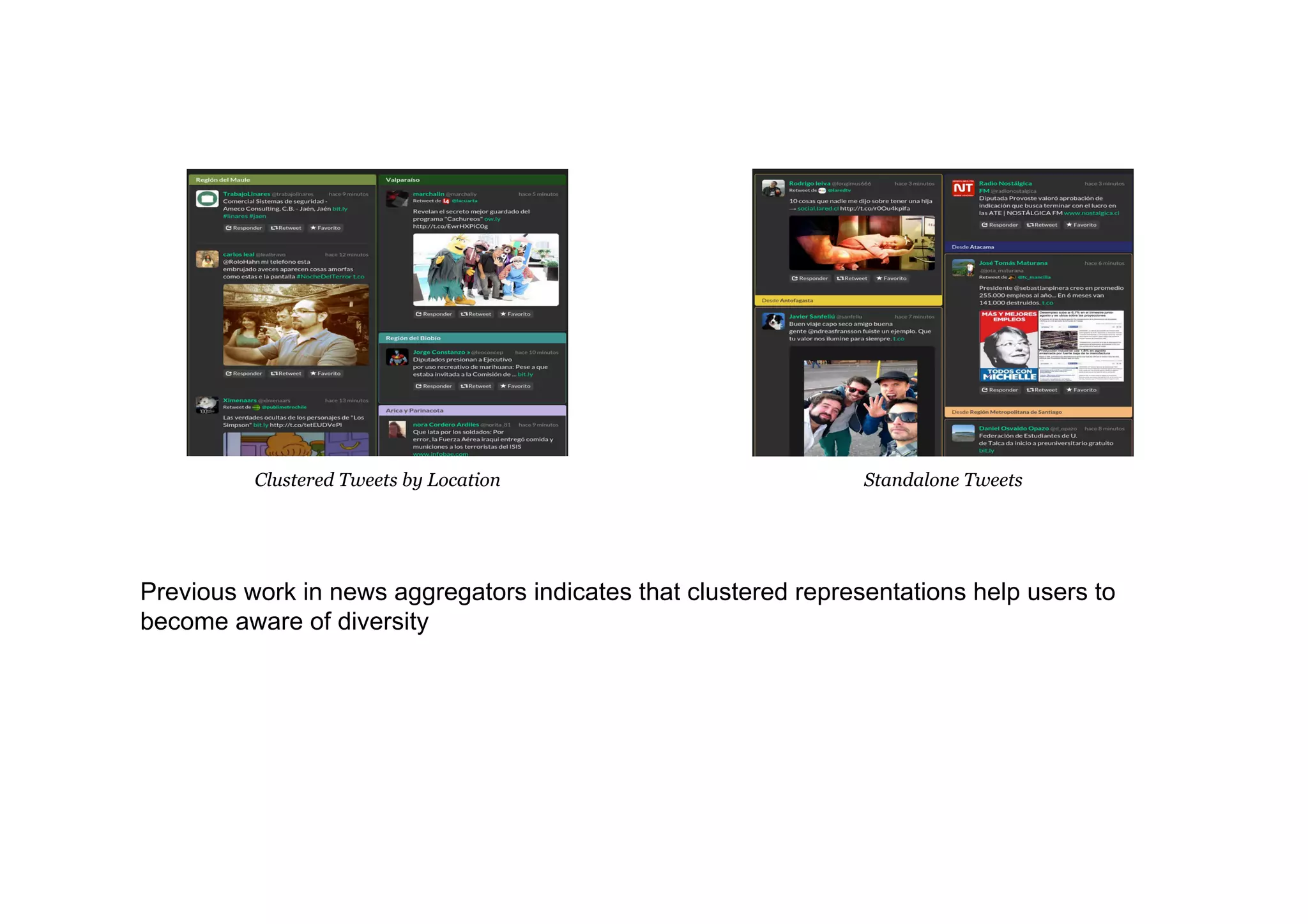

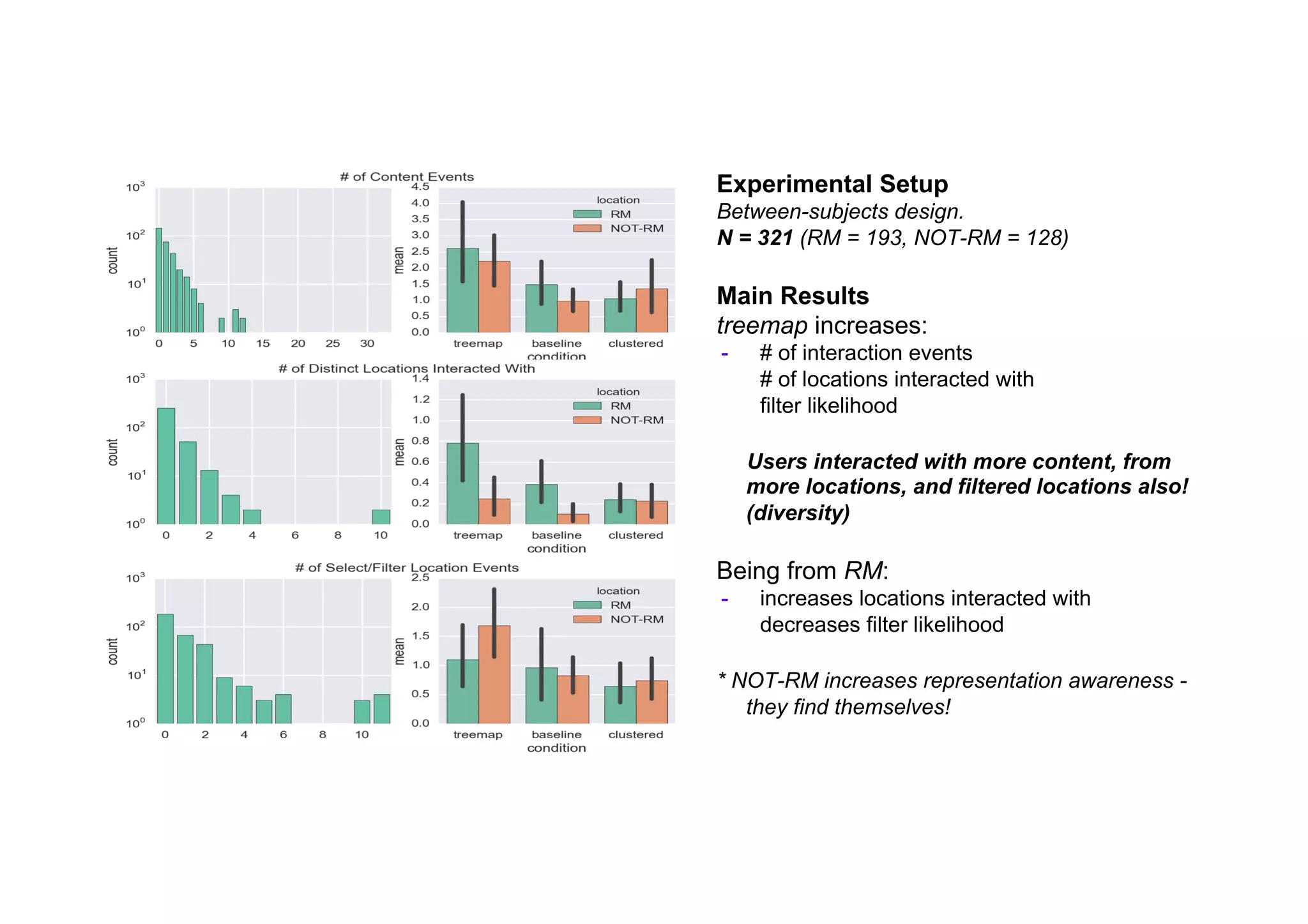

The document discusses the importance of user engagement in social media and AI systems, emphasizing how algorithms can improve user interactions by promoting diversity and serendipity. It notes that engagement is shaped by several factors, including emotions, attention, and trust, and reviews techniques for measuring and interpreting it. It argues that raising user awareness of diversity and representation in content is crucial, particularly in centralized environments, and presents algorithms for mitigating cognitive biases in user engagement.