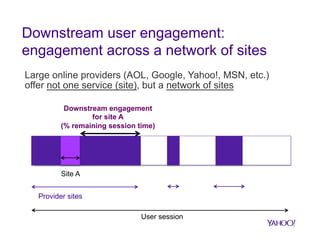

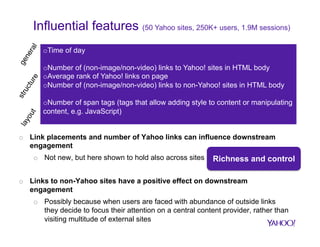

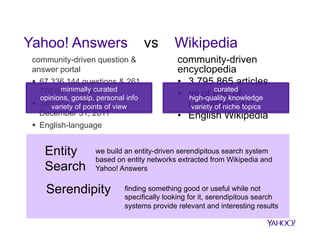

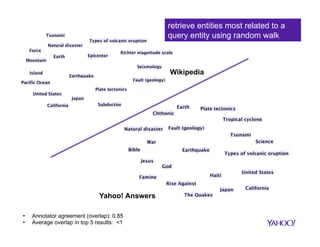

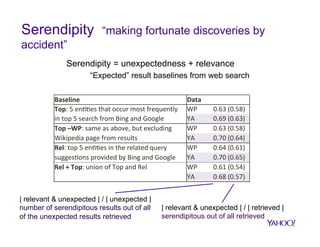

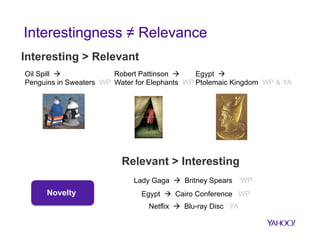

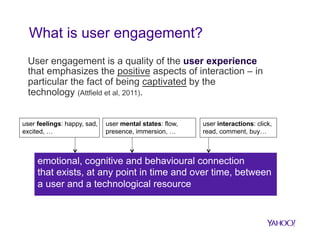

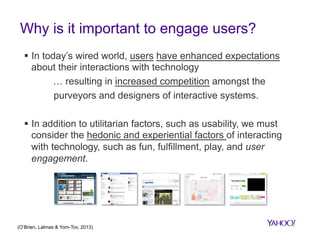

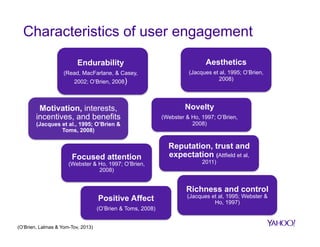

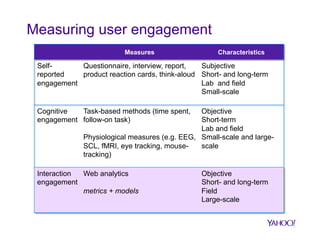

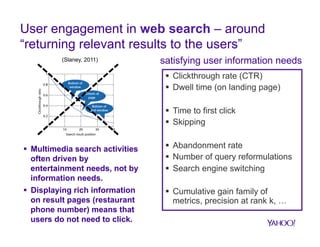

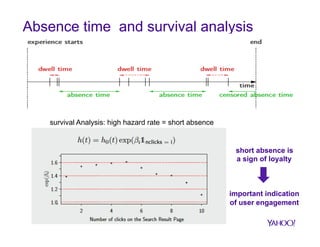

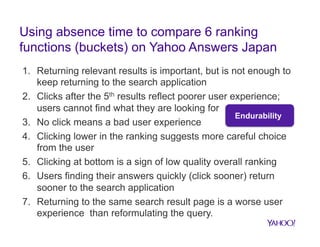

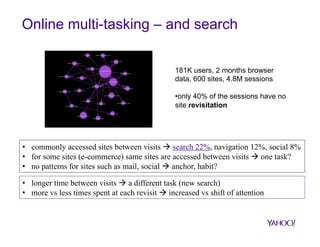

This talk discusses user engagement in web search: what characterizes it, how it is measured, and what it implies for search applications. It emphasizes positive user experience and the emotional, cognitive, and behavioral connections between users and technology. The studies described in the talk examine inter-session engagement, online multi-tasking, and serendipity across different web applications.

![Revisitation patterns](https://image.slidesharecdn.com/engagingclick-131126065000-phpapp01/85/An-Engaging-Click-or-how-can-user-engagement-measurement-inform-web-search-evaluation-15-320.jpg)

Revisitation patterns

Panels: average attention; mail sites [decreasing attention]; search sites [increasing attention]; auction sites [complex attention]. The x-axis of each plot is k [kth visit on site], from 1 to 9. The y-axes are proportion of users, % of total page views on site, and % of navigation type (hyperlinking, teleporting, backpaging). Panel annotations: m = -0.288, m = 0.063, m = 0.142; p-value < 0.05 (three times), p-value = 0.24 (once). Callout: motivation, interests, incentives, and benefits.

Percentage series for k = 1 to 9, in the order they appear on the slide:

100% 54% 36% 26% 20% 17% 14% 12% 10%

100% 62% 41% 29% 21% 16% 13% 10% 8%

100% 69% 54% 44% 38% 33% 29% 26% 23%

100% 67% 54% 46% 41% 35% 31% 29% 26%

§ 10% of users accessed a site 9+ times (23% for search sites); 28% at least four times (44% for search sites)

§ 48% of sites visited at least 9 times

§ Revisitation “level” depends on site

§ Activity on site decreases with each revisit, but activity on many search sites increases
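As a rough illustration of the quantities on this slide, the sketch below computes a revisitation curve (the share of user and site pairs that reach a kth visit) and a least-squares slope of activity against k. It is not the method used in the talk: the function names `revisit_curve` and `least_squares_slope`, the toy visit log, and the toy activity values are all assumptions made for the example.

```python
from collections import Counter


def revisit_curve(visits, max_k=9):
    """Share of (user, site) pairs with at least k visits, for k = 1..max_k.

    `visits` is an iterable of (user_id, site_id) tuples, one per visit.
    This input format is a hypothetical one chosen for the sketch.
    """
    counts = Counter(visits)   # number of visits per (user, site) pair
    total = len(counts)        # every pair has at least one visit
    return [
        sum(1 for c in counts.values() if c >= k) / total
        for k in range(1, max_k + 1)
    ]


def least_squares_slope(xs, ys):
    """Slope of the ordinary least-squares line fitted through (xs, ys)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx


# Toy visit log (made-up data): u1 visits four times, u2 twice, u3 and u4 once.
toy_visits = (
    [("u1", "search")] * 4
    + [("u2", "search")] * 2
    + [("u3", "search")]
    + [("u4", "search")]
)
toy_curve = revisit_curve(toy_visits, max_k=4)

# Toy activity per kth visit (made-up values); a negative slope means
# activity drops with each revisit.
toy_activity = [10.0, 9.0, 8.5, 7.5]
toy_slope = least_squares_slope([1, 2, 3, 4], toy_activity)

print(toy_curve)
print(toy_slope)
```

A negative slope would correspond to the "decreasing attention" label and a positive one to "increasing attention". Testing whether a slope differs significantly from zero, as the p-values on the slide suggest was done, would need a regression test that this sketch leaves out.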