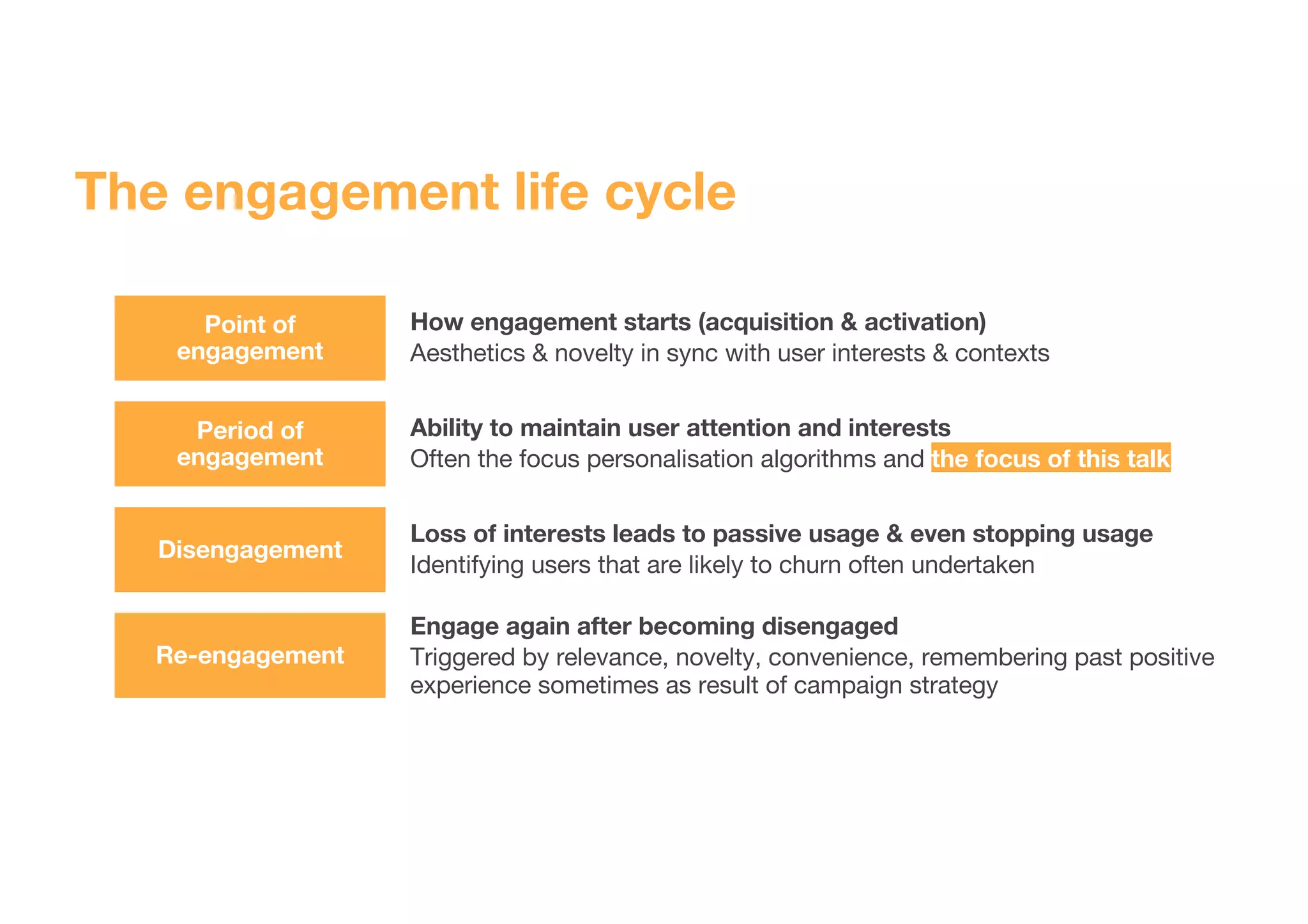

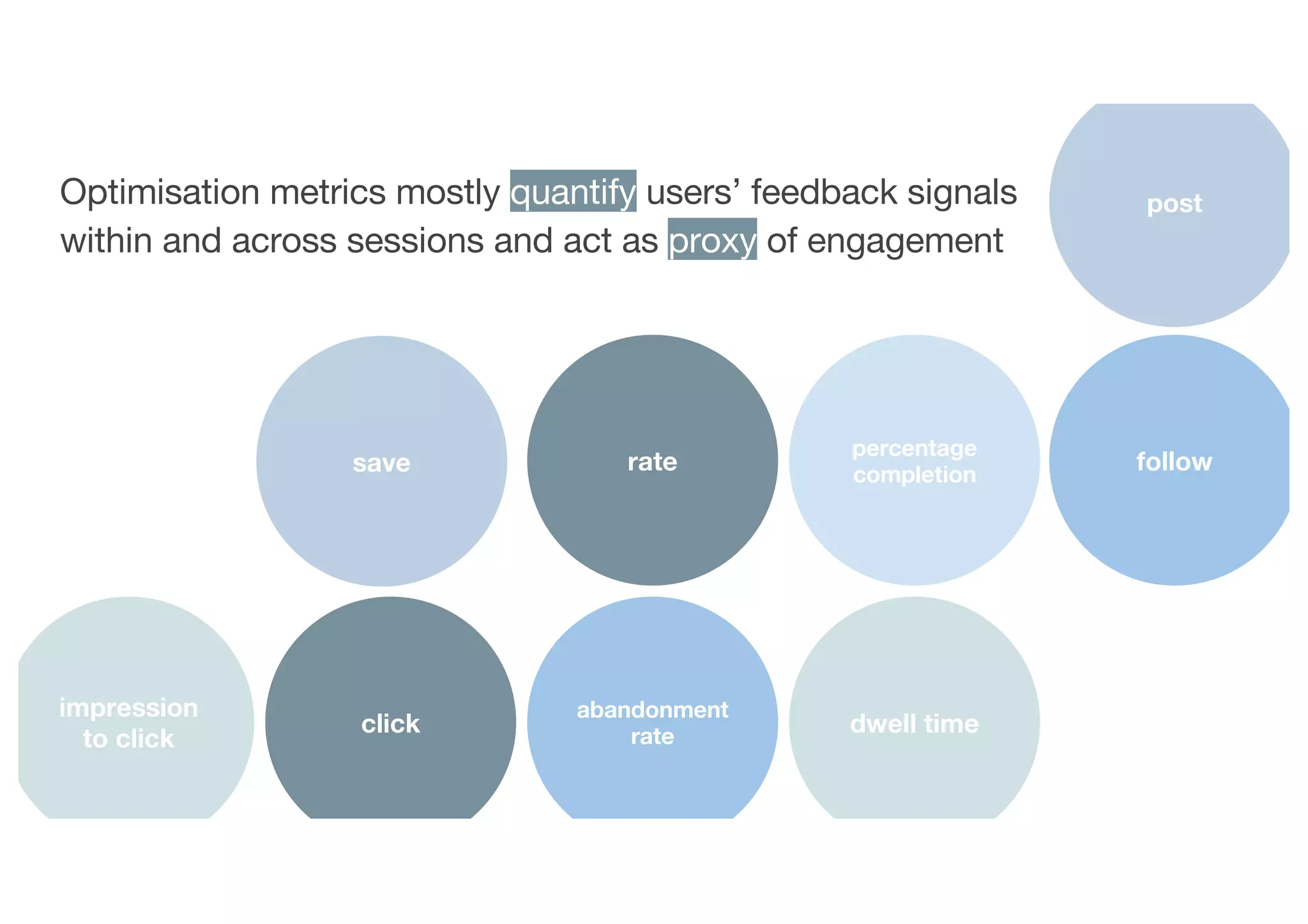

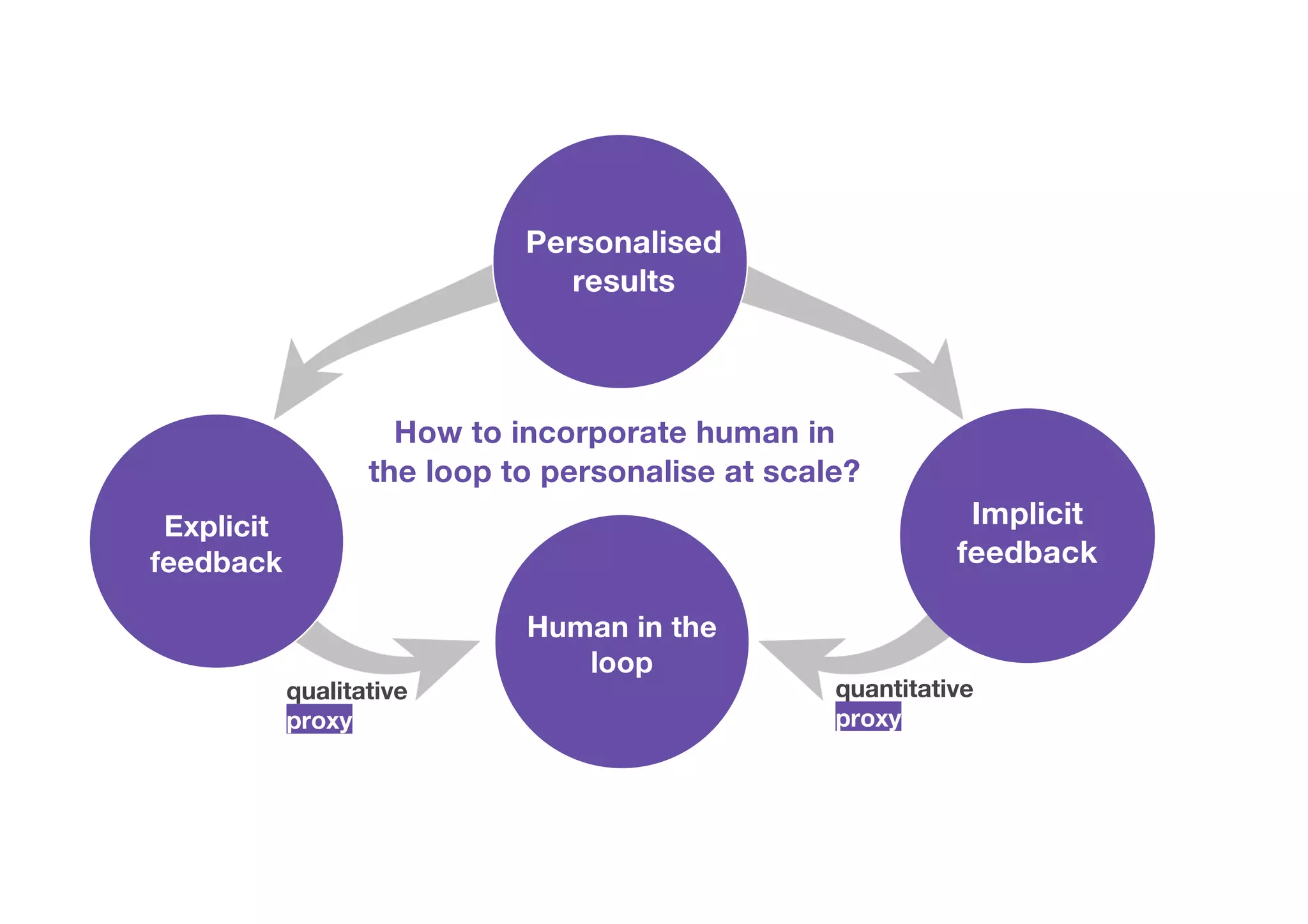

The document discusses Spotify's mission to enhance human creativity through personalized music experiences, with an emphasis on user engagement metrics and 'human-in-the-loop' models. It outlines four strategies for personalizing at scale: understanding user intents, optimizing for the right metric, acting on user segmentation, and considering diversity in content recommendations. Throughout, it shows how different metrics can gauge user experience and engagement across the lifecycle of a user's interaction with Spotify.

![What do users want on Home?

Knowing user intent on Home helps interpret users' implicit feedback

Passively Listening

- quickly access playlists or saved music (2)

- play music matching mood or activity (4)

- find music to play in background (6)

Other

Home is default screen (1)

Actively Engaging

- discover new music to listen to now (3)

- to find X (5)

- save new music or follow new playlists for later (7)

- explore artists or albums more deeply (8)

Considering intent and learning across intents improves ability to infer user satisfaction by 20%

[1] R Mehrotra, M Lalmas, D Kenney, T Lim-Meng & G Hashemian. Jointly Leveraging Intent and Interaction Signals to Predict User Satisfaction with Slate Recommendations. WWW 2019.](https://image.slidesharecdn.com/hcomp2020-201026215915/75/Engagement-Metrics-Personalisation-at-Scale-25-2048.jpg)

![What do users want from Search?

FOCUSED

One specific thing in mind

● Find it or not

● Quickest/easiest path to results is important

OPEN

A seed of an idea in mind

● From nothing good enough, to good enough, to better than good enough

● Willing to try things out

● But still want to fulfil their intent

EXPLORATORY

A path to explore

● Difficult for users to assess how it went

● May be able to answer in relative terms

● Users expect to be active when in an exploratory mindset

● Effort is expected

How users think about results relates to how they use Spotify tabs

[2] C Hosey, L Vujović, B St. Thomas, J Garcia-Gathright & J Thom. Just Give Me What I Want: How People Use and Evaluate Music Search. CHI 2019.

[3] A Li, J Thom, P Ravichandran, C Hosey, B St. Thomas & J Garcia-Gathright. Search Mindsets: Understanding Focused and Non-Focused Information Seeking in Music Search. WWW 2019.](https://image.slidesharecdn.com/hcomp2020-201026215915/75/Engagement-Metrics-Personalisation-at-Scale-26-2048.jpg)

![How are users satisfied with Search?

Users evaluate their search experience in terms of effort and success

TYPE

User communicates with us

CONSIDER

User evaluates what we show them

DECIDE

User ends the search session

EFFORT

Depends on user mindset: focused, open, exploratory

SUCCESS

Depends on user goal: listen, organize, share

Success rate, a composite metric of all success-related behaviors, is more sensitive than click-through rate

(Chart comparing Success and Click-through)

[4] P Chandar, J Garcia-Gathright, C Hosey, B St. Thomas & J Thom. Developing Evaluation Metrics for Instant Search Using Mixed Methods. SIGIR 2019.](https://image.slidesharecdn.com/hcomp2020-201026215915/75/Engagement-Metrics-Personalisation-at-Scale-29-2048.jpg)
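To make the contrast between the two metrics concrete, here is a minimal sketch of how a composite success rate could sit next to click-through rate. The event names (`click`, `stream`, `save`, `add_to_playlist`, `share`) and the `SearchSession` type are hypothetical, chosen only to mirror the listen, organize, and share goals named on the slide; the definition used in [4] may differ.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical event names mapped to the goals on the slide:
# listen -> "stream", organize -> "save" / "add_to_playlist", share -> "share".
SUCCESS_EVENTS = {"stream", "save", "add_to_playlist", "share"}


@dataclass
class SearchSession:
    """One search session, reduced to the event types it logged."""
    events: List[str] = field(default_factory=list)


def click_through_rate(sessions: List[SearchSession]) -> float:
    """Fraction of sessions that contain at least one click."""
    if not sessions:
        return 0.0
    return sum("click" in s.events for s in sessions) / len(sessions)


def success_rate(sessions: List[SearchSession]) -> float:
    """Fraction of sessions that contain any success-related behavior."""
    if not sessions:
        return 0.0
    hits = sum(any(e in SUCCESS_EVENTS for e in s.events) for s in sessions)
    return hits / len(sessions)
```

In this sketch a session that ends in a save or a share without any click still counts as a success, which is one way a composite metric can pick up satisfaction that click-through rate misses.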

![How are users satisfied with playlists?

Using playlist consumption time to inform the metric to optimise for playlist satisfaction on Home

Optimizing for mean consumption time led to +22.24% in predicted stream rate. Defining it per user × playlist cluster led to a further +13%

(Figure labels: mean of consumption time; co-clustering user group × playlist type)

[5] P Dragone, R Mehrotra & M Lalmas. Deriving User- and Content-specific Rewards for Contextual Bandits. WWW 2019.](https://image.slidesharecdn.com/hcomp2020-201026215915/75/Engagement-Metrics-Personalisation-at-Scale-30-2048.jpg)
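One reading of this slide is that a playlist stream counts as satisfying when its consumption time reaches the mean for its (user group, playlist type) cluster rather than a single global mean. The sketch below illustrates that reading only. The cluster labels are assumed to be given (the co-clustering step itself is not shown), and the function names and the binary form of the reward are assumptions, not details confirmed by the slide.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Interaction = Tuple[str, str, float]  # (user_group, playlist_type, seconds consumed)


def cluster_mean_times(interactions: Iterable[Interaction]) -> Dict[Tuple[str, str], float]:
    """Mean consumption time for each (user_group, playlist_type) cluster."""
    totals = defaultdict(lambda: [0.0, 0])  # cluster -> [sum of seconds, count]
    for user_group, playlist_type, seconds in interactions:
        totals[(user_group, playlist_type)][0] += seconds
        totals[(user_group, playlist_type)][1] += 1
    return {cluster: total / count for cluster, (total, count) in totals.items()}


def binary_reward(user_group: str, playlist_type: str, seconds: float,
                  means: Dict[Tuple[str, str], float], global_mean: float) -> int:
    """1 if consumption time reaches the cluster mean, else 0.

    Falls back to the global mean for clusters with no history.
    """
    threshold = means.get((user_group, playlist_type), global_mean)
    return 1 if seconds >= threshold else 0
```

With a per-cluster threshold, a 100-second stream of a short workout playlist can count as a success while a 700-second stream of a long sleep playlist does not, which a single global mean would get the other way round.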

![What music do users listen to?

Despite access to a global catalog, countries are increasingly streaming their own, local music

Global music trade is strongly shaped by language and geography

Used Gravity Modeling to study how these relationships are changing over time, around the world

Local music is on the rise

[6] S Way, J Garcia-Gathright & H Cramer. Local Trends in Global Music Streaming. ICWSM 2020.](https://image.slidesharecdn.com/hcomp2020-201026215915/75/Engagement-Metrics-Personalisation-at-Scale-33-2048.jpg)

![How do users listen to music?

Generalists and specialists exhibit different retention and conversion behaviors

(Figure axis: Generalist-Specialist Score (GS), Specialist to Generalist)

Generalists churn less and convert more than specialists

[7] A Anderson, L Maystre, R Mehrotra, I Anderson & M Lalmas. Algorithmic Effects on the Diversity of Consumption on Spotify. WWW 2020.](https://image.slidesharecdn.com/hcomp2020-201026215915/75/Engagement-Metrics-Personalisation-at-Scale-34-2048.jpg)
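The slide names the Generalist-Specialist Score without defining it. A minimal sketch, assuming the score is the play-count-weighted average cosine similarity between each track's embedding and the listener's weighted centroid, could look like this. The embeddings, the weighting by play count, and the function name `gs_score` are assumptions made for illustration.

```python
import math
from typing import Sequence


def gs_score(track_vectors: Sequence[Sequence[float]], play_counts: Sequence[float]) -> float:
    """Weighted mean cosine similarity between tracks and the listener's centroid.

    Under this assumed definition, values near 1 mean listening is concentrated
    around one point in the embedding space; lower values mean it is spread out.
    """
    total = sum(play_counts)
    if not track_vectors or total <= 0:
        raise ValueError("need at least one track with a positive play count")
    dim = len(track_vectors[0])
    # Play-count-weighted centroid of the listener's tracks.
    centroid = [
        sum(w * v[i] for v, w in zip(track_vectors, play_counts)) / total
        for i in range(dim)
    ]

    def cosine(a: Sequence[float], b: Sequence[float]) -> float:
        norm_a = math.sqrt(sum(x * x for x in a))
        norm_b = math.sqrt(sum(x * x for x in b))
        if norm_a == 0.0 or norm_b == 0.0:
            return 0.0  # treat a zero vector as uninformative
        return sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)

    return sum(w * cosine(v, centroid) for v, w in zip(track_vectors, play_counts)) / total
```

A listener whose plays all point in the same direction gets a score of 1, while one who splits plays evenly between two orthogonal directions gets a lower score.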

![How to consider diversity in user satisfaction?

Optimizing for multiple satisfaction objectives together performs better than single metric optimisation

Optimising for multiple satisfaction metrics performs better for each metric than directly optimising that metric

● clicks

● streaming time

● total number of tracks played

(Chart labels: single objective models; multi-objective model)

Learning more relevant patterns of user satisfaction with more optimisation metrics

● positive correlation between objectives

● holistic view of user experience

[8] R Mehrotra, N Xue & M Lalmas. Bandit based Optimization of Multiple Objectives on a Music Streaming Platform. KDD 2020.](https://image.slidesharecdn.com/hcomp2020-201026215915/75/Engagement-Metrics-Personalisation-at-Scale-37-2048.jpg)
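The slide does not say how the objectives are combined into one reward. As an illustration only, the sketch below uses a generalized Gini aggregation: the objective values are sorted from worst to best and the worst gets the largest weight, so an arm scores well only when all objectives do reasonably well. The choice of aggregation, the weights, and the assumption that each objective is already scaled to [0, 1] are not confirmed by the slide.

```python
from typing import Sequence


def generalized_gini(values: Sequence[float], weights: Sequence[float]) -> float:
    """Aggregate several objective values into one score.

    Values are sorted in ascending order and paired with non-increasing
    weights, so the weakest objective contributes most to the score.
    """
    if len(values) != len(weights):
        raise ValueError("values and weights must have the same length")
    if any(earlier < later for earlier, later in zip(weights, weights[1:])):
        raise ValueError("weights must be non-increasing")
    return sum(w * v for w, v in zip(weights, sorted(values)))
```

For example, with weights `[1.0, 0.5, 0.25]` a recommendation scoring 0.4 on clicks, streaming time, and tracks played is ranked above one scoring 0.9, 0.1, and 0.2, even though both have the same mean.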

![How to consider diversity of content?

Personalisation algorithms need to explicitly optimise for content diversity

As personalisation algorithms increase in complexity, they improve satisfaction but at the cost of content diversity

Choice of personalisation algorithm is more important when considering diversity compared to considering satisfaction only

[9] C Hansen, R Mehrotra, C Hansen, B Brost, L Maystre & M Lalmas. Shifting Consumption towards Diverse Content on Music Streaming Platforms. WSDM 2021.](https://image.slidesharecdn.com/hcomp2020-201026215915/75/Engagement-Metrics-Personalisation-at-Scale-38-2048.jpg)
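To show what "explicitly optimising for diversity" can mean in practice, here is a minimal sketch of a greedy re-ranker that adds a bonus for categories not yet in the slate. This is a generic technique swapped in for illustration, not the method of [9]; the `rerank` function, the candidate tuple layout, and the use of a category label as the diversity signal are all assumptions.

```python
from typing import List, Tuple

Candidate = Tuple[str, float, str]  # (item_id, predicted relevance, category)


def rerank(candidates: List[Candidate], k: int, diversity_weight: float) -> List[str]:
    """Greedily build a slate of up to k items.

    Each step picks the item with the highest relevance plus a bonus of
    `diversity_weight` if its category is not yet in the slate.
    """
    remaining = list(candidates)
    slate: List[str] = []
    seen_categories = set()
    while remaining and len(slate) < k:
        best = max(
            remaining,
            key=lambda c: c[1] + (diversity_weight if c[2] not in seen_categories else 0.0),
        )
        slate.append(best[0])
        seen_categories.add(best[2])
        remaining.remove(best)
    return slate
```

With `diversity_weight=0.0` the slate is ordered purely by relevance; raising it trades some predicted satisfaction for broader category coverage, which is the tension the slide describes.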

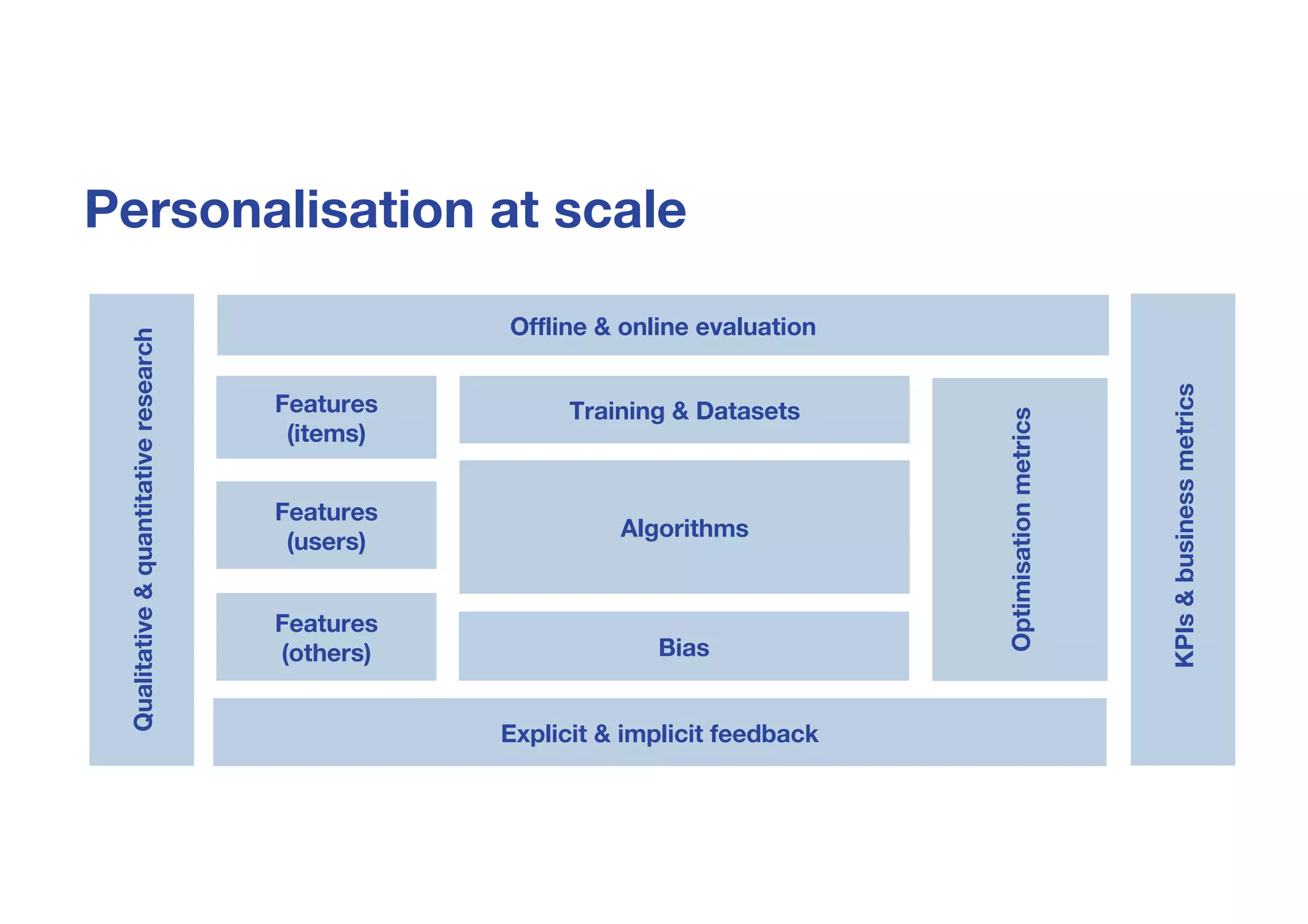

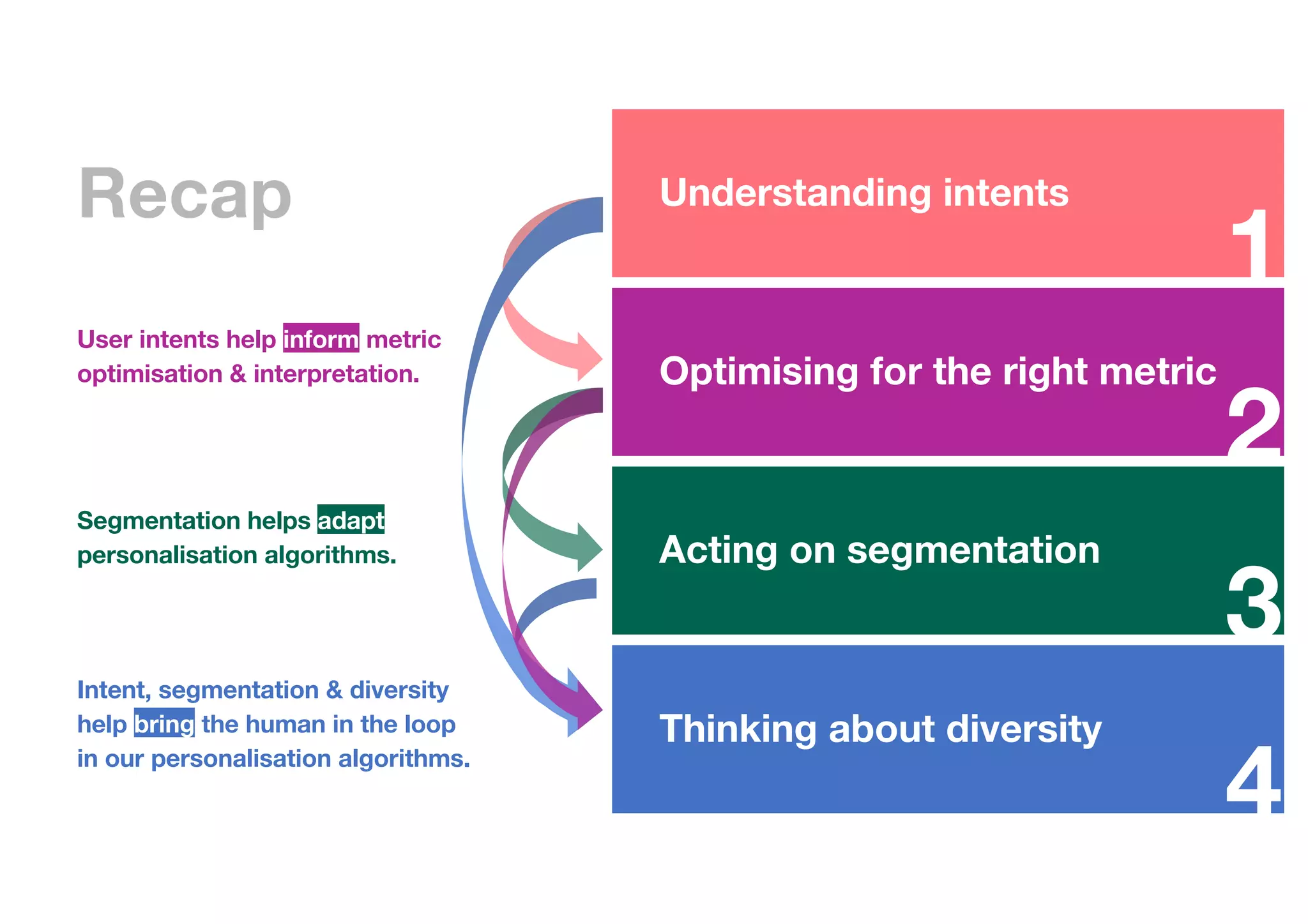

![Incorporating human in the loop to personalise at scale

Qualitative & quantitative research

KPIs & business metrics

Algorithms

Training & Datasets

Optimization metrics

Offline & online evaluation

Explicit & implicit feedback

Features (items)

Features (users)

Features (others)

Bias

Understanding intents [1,2,3]

Acting on segmentation [6,7]

Optimising for the right metric [4,5]

Thinking about diversity [8,9]](https://image.slidesharecdn.com/hcomp2020-201026215915/75/Engagement-Metrics-Personalisation-at-Scale-42-2048.jpg)