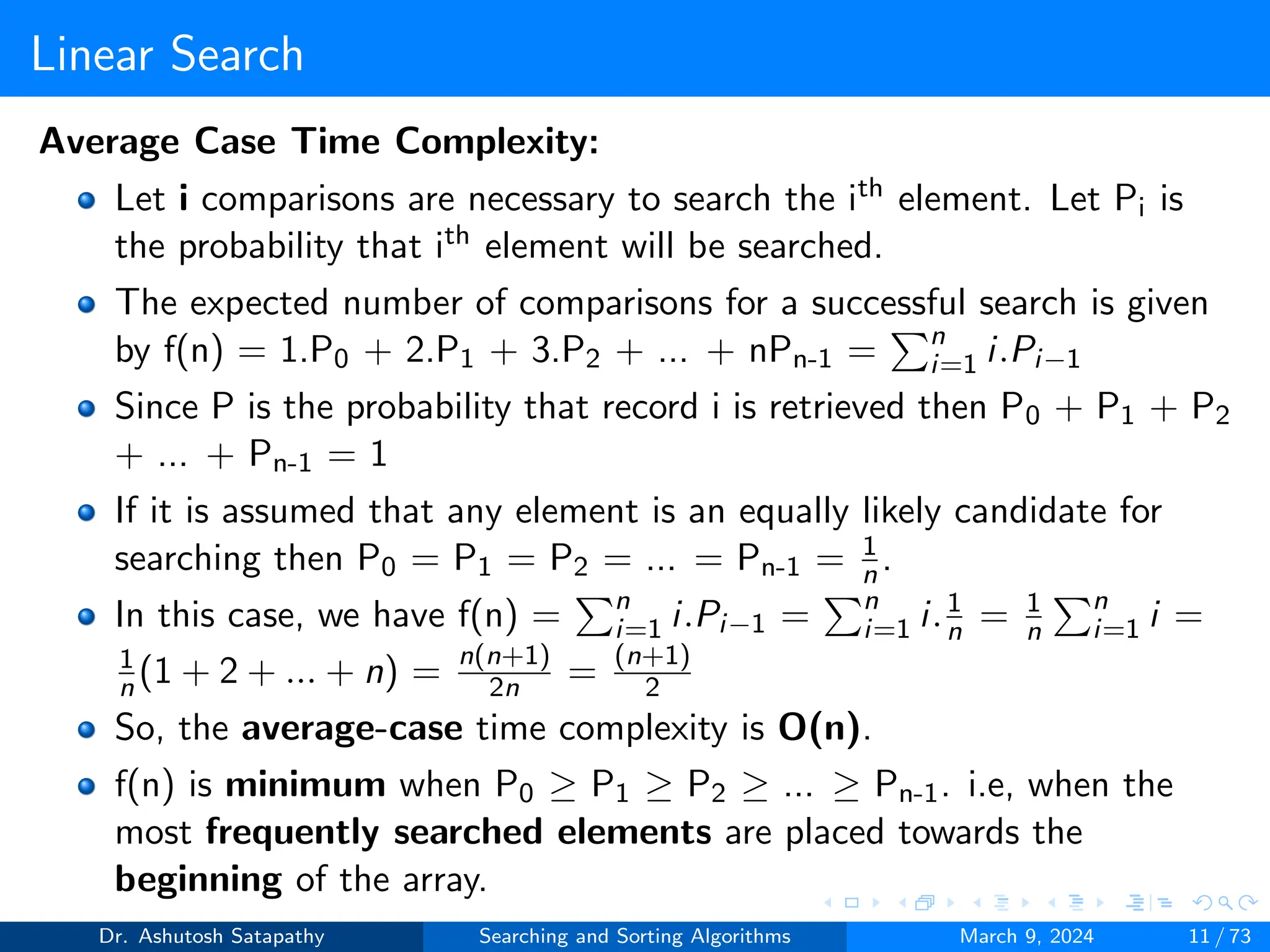

The document provides an overview of searching and sorting algorithms, including linear and binary search methods, as well as various sorting techniques such as insertion sort, selection sort, and bubble sort. It discusses time and space complexities associated with these algorithms, explaining best, worst, and average case scenarios. The content is structured as a lecture by Dr. Ashutosh Satapathy, delivered on March 9, 2024, highlighting the importance of efficient data retrieval and manipulation in computer science.

![Linear Search

The simplest way to search is to begin at one end of the list and scan it until either the desired key is found or the other end is reached.

Let a be an array of n keys, a[0] through a[n-1], and let key be the element being searched for.

The process starts by comparing the first element, a[0], with key. As long as a comparison does not succeed, the algorithm compares the next element of a with key. The process terminates when a comparison succeeds or the list is exhausted. This method of searching is known as linear search.

Dr. Ashutosh Satapathy Searching and Sorting Algorithms March 9, 2024 6 / 73](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-6-2048.jpg)
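The following is a minimal Python sketch of the scan just described. It assumes the keys are held in a Python sequence and compared with `==`. The function name `linear_search` is illustrative and does not come from the lecture.

```python
from typing import Sequence, TypeVar

T = TypeVar("T")


def linear_search(a: Sequence[T], key: T) -> int:
    """Return the index of the first element equal to key, or -1 if absent."""
    for i, value in enumerate(a):  # scan from a[0] towards a[n-1]
        if value == key:
            return i  # the first match wins
    return -1  # list exhausted without a match
```

For example, `linear_search([7, 3, 9, 3], 3)` returns 1, the first matching index, and searching the same list for 5 returns -1.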

![Linear Search

Algorithm 1 Linear search

1: procedure linearsearch(a, n, key)

2: for i ← 0 to n − 1 do

3: if (key = a[i]) then

4: return i

5: end if

6: end for

7: return -1

8: end procedure

The function examines each key in turn. When it finds one that matches the search argument, it returns that index. If no match is found, it returns -1.

When the values of a are not distinct, the function returns the first (smallest) index i for which a[i] = key.

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-7-2048.jpg)

![Linear Search

Best Case Time Complexity: the searched element is at a[0].

Algorithm 2 Linear search best case analysis

1: procedure linearsearch(a, n, key) ▷ Frequency count is 1

2: for i ← 0 to n − 1 do ▷ Frequency count is 1

3: if (key = a[i]) then ▷ Frequency count is 1

4: return i ▷ Frequency count is 1

5: end if ▷ Frequency count is 0

6: end for ▷ Frequency count is 0

7: return -1 ▷ Frequency count is 0

8: end procedure ▷ Frequency count is 0

Total frequency count f(n) is 4. So, the best-case time complexity is O(1).

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-8-2048.jpg)

![Linear Search

Worst Case Time Complexity: the searched element is at a[n-1].

Algorithm 3 Linear search worst case analysis

1: procedure linearsearch(a, n, key) ▷ Frequency count is 1

2: for i ← 0 to n − 1 do ▷ Frequency count is n

3: if (key = a[i]) then ▷ Frequency count is n

4: return i ▷ Frequency count is 1

5: end if ▷ Frequency count is 0

6: end for ▷ Frequency count is 0

7: return -1 ▷ Frequency count is 0

8: end procedure ▷ Frequency count is 0

Total frequency count f(n) is 2n+2. So, the worst-case time complexity is O(n).

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-9-2048.jpg)

![Linear Search

Worst Case Time Complexity: the searched element is not present in the array.

Algorithm 4 Linear search worst case analysis

1: procedure linearsearch(a, n, key) ▷ Frequency count is 1

2: for i ← 0 to n − 1 do ▷ Frequency count is n+1

3: if (key = a[i]) then ▷ Frequency count is n

4: return i ▷ Frequency count is 0

5: end if ▷ Frequency count is 0

6: end for ▷ Frequency count is n

7: return -1 ▷ Frequency count is 1

8: end procedure ▷ Frequency count is 1

Total frequency count f(n) is 3n+4. So, the worst-case time complexity is O(n).

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-10-2048.jpg)
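The frequency counts above tally every pseudocode line. A simpler check counts only the key comparisons on line 3, which dominate the running time. The Python sketch below does this. The helper name `linear_search_comparisons` and the sample array are hypothetical.

```python
from typing import List, Tuple


def linear_search_comparisons(a: List[int], key: int) -> Tuple[int, int]:
    """Return (index, comparisons), counting evaluations of key == a[i]."""
    comparisons = 0
    for i in range(len(a)):
        comparisons += 1  # one evaluation of the test on line 3
        if key == a[i]:
            return i, comparisons
    return -1, comparisons


n = 8
a = list(range(10, 10 + n))  # distinct sample keys 10..17
print(linear_search_comparisons(a, a[0]))      # key at a[0]
print(linear_search_comparisons(a, a[n - 1]))  # key at a[n-1]
print(linear_search_comparisons(a, 99))        # key absent
```

The comparison count is 1 when the key is at a[0]. It is n both when the key is at a[n-1] and when it is absent, which is the O(1) versus O(n) distinction drawn above.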

![Binary Search

Algorithm 5 Binary search

1: procedure binarysearch(a, n, key)

2: low ← 0

3: high ← n − 1

4: while (low ≤ high) do

5: mid ← ⌈(low + high)/2⌉

6: if (key = a[mid]) then

7: return mid

8: else if (key < a[mid]) then

9: high ← mid − 1

10: else

11: low ← mid + 1

12: end if

13: end while

14: return -1

15: end procedure

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-15-2048.jpg)
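Binary search is only correct when a is sorted in ascending order. Below is a Python sketch of Algorithm 5 under that assumption. For non-negative indices, `(low + high + 1) // 2` computes the ceiling ⌈(low + high)/2⌉ used on line 5. The function name is illustrative.

```python
from typing import Sequence


def binary_search(a: Sequence[int], key: int) -> int:
    """Return an index i with a[i] == key, or -1. Assumes a is sorted ascending."""
    low, high = 0, len(a) - 1
    while low <= high:
        mid = (low + high + 1) // 2  # ceil((low + high) / 2), as in Algorithm 5
        if key == a[mid]:
            return mid
        elif key < a[mid]:
            high = mid - 1  # key can only lie in a[low..mid-1]
        else:
            low = mid + 1   # key can only lie in a[mid+1..high]
    return -1
```

For example, on `[2, 5, 8, 12, 16, 23]` a search for 12 returns 3 on the first probe, while a search for 7 returns -1. Python integers do not overflow, so `low + high` needs no special care here. In fixed-width languages, `low + (high - low + 1) / 2` is the usual safer way to compute the same ceiling.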

![Binary Search

Algorithm 6 Binary search best case time complexity - O(1)

1: procedure binarysearch(a, n, key) ▷ Frequency count is 1

2: low ← 0 ▷ Frequency count is 1

3: high ← n − 1 ▷ Frequency count is 1

4: while (low ≤ high) do ▷ Frequency count is 1

5: mid ← ⌈(low + high)/2⌉ ▷ Frequency count is 1

6: if (key = a[mid]) then ▷ Frequency count is 1

7: return mid ▷ Frequency count is 1

8: else if (key < a[mid]) then ▷ Frequency count is 0

9: high ← mid − 1 ▷ Frequency count is 0

10: else ▷ Frequency count is 0

11: low ← mid + 1 ▷ Frequency count is 0

12: end if ▷ Frequency count is 0

13: end while ▷ Frequency count is 0

14: return -1 ▷ Frequency count is 0

15: end procedure ▷ Frequency count is 0

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-17-2048.jpg)
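The best case above costs one iteration of the while loop. The sketch below counts executions of the loop body for other inputs as well. It is a hypothetical instrumented copy of Algorithm 5 that assumes a sorted list of distinct integers.

```python
from typing import List


def binary_search_iterations(a: List[int], key: int) -> int:
    """Run Algorithm 5 and return how many times the while-loop body executes."""
    low, high, iterations = 0, len(a) - 1, 0
    while low <= high:
        iterations += 1
        mid = (low + high + 1) // 2  # ceiling of the midpoint, as in Algorithm 5
        if key == a[mid]:
            break
        elif key < a[mid]:
            high = mid - 1
        else:
            low = mid + 1
    return iterations


for n in (1, 7, 8, 100, 1000):
    a = list(range(n))  # sorted, distinct keys 0..n-1
    best = binary_search_iterations(a, a[n // 2])  # key at the first midpoint
    worst = binary_search_iterations(a, -1)        # key smaller than every element
    print(n, best, worst, n.bit_length())
```

In this sketch, a key equal to the first midpoint a[n // 2] always needs one iteration. A key smaller than every element needs n.bit_length() = ⌊log₂ n⌋ + 1 iterations, which is the familiar O(log n) worst case of binary search.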

![Insertion Sort

Insertion sort is an efficient algorithm for sorting a small number of elements. It sorts a set of values by inserting each value into an existing sorted list.

The method resembles the way a card player arranges a hand: the player picks up the cards one at a time and inserts each one into its required position.

Thus, at every step, we insert an item into its proper place in an already ordered list.

Suppose an array a with n elements a[0], a[1], ..., a[n-1] is in memory. Insertion sort scans a from a[0] to a[n-1], inserting each element a[k] into its proper position in the previously sorted sub-array a[0], a[1], ..., a[k-1].

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-25-2048.jpg)

![Insertion Sort

Algorithm 7 Insertion Sort

1: procedure insertion-sort(A, n)

2: for j ← 1 to n − 1 do

3: key ← A[j]

4: i ← j-1

5: while (i ≥ 0 and A[i] > key) do

6: A[i+1] ← A[i]

7: i ← i-1

8: end while

9: A[i+1] ← key

10: end for

11: end procedure

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-26-2048.jpg)
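Below is a direct Python translation of Algorithm 7. It is a sketch that assumes A is a mutable list of mutually comparable values, and the function name is illustrative.

```python
from typing import List


def insertion_sort(A: List[int]) -> None:
    """Sort A in place in ascending order (Algorithm 7, 0-based indices)."""
    n = len(A)
    for j in range(1, n):  # j = 1 .. n-1
        key = A[j]
        i = j - 1
        # Shift elements of the sorted prefix A[0..j-1] that are greater than key.
        while i >= 0 and A[i] > key:
            A[i + 1] = A[i]
            i -= 1
        A[i + 1] = key  # drop key into the gap left by the shifts
```

For example, after `data = [5, 2, 4, 6, 1, 3]; insertion_sort(data)`, `data` is `[1, 2, 3, 4, 5, 6]`. The loop uses the strict test `A[i] > key`, so equal elements are never moved past each other and the sort is stable.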

![Insertion Sort

Best Case Time Complexity (array already sorted, so the while-loop test on line 5 fails immediately for every j):

Algorithm 8 Insertion Sort Best Case Analysis

1: procedure insertion-sort(A, n) ▷ Frequency count is 1

2: for j ← 1 to n − 1 do ▷ Frequency count is n

3: key ← A[j] ▷ Frequency count is n-1

4: i ← j-1 ▷ Frequency count is n-1

5: while (i ≥ 0 and A[i] > key) do ▷ Frequency count is n-1

6: A[i+1] ← A[i] ▷ Frequency count is 0

7: i ← i-1 ▷ Frequency count is 0

8: end while ▷ Frequency count is 0

9: A[i+1] ← key ▷ Frequency count is n-1

10: end for ▷ Frequency count is n-1

11: end procedure ▷ Frequency count is 1

Total frequency count f(n) is 6n-3. So, the best-case time complexity is O(n).

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-28-2048.jpg)
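The best case is an already sorted array, where the while-loop test on line 5 fails at once for each j. The hypothetical instrumented version below counts evaluations of that test and executions of the shift on line 6. This lets the best case (sorted input) be compared with reverse-sorted input.

```python
from typing import Iterable, Tuple


def insertion_sort_counts(values: Iterable[int]) -> Tuple[int, int]:
    """Sort a copy of values with Algorithm 7; return (while_tests, shifts)."""
    A = list(values)
    while_tests = shifts = 0
    for j in range(1, len(A)):
        key, i = A[j], j - 1
        while True:
            while_tests += 1  # one evaluation of the condition on line 5
            if not (i >= 0 and A[i] > key):
                break
            A[i + 1] = A[i]
            shifts += 1       # one execution of line 6
            i -= 1
        A[i + 1] = key
    return while_tests, shifts


n = 10
print(insertion_sort_counts(range(n)))         # already sorted
print(insertion_sort_counts(range(n, 0, -1)))  # reverse sorted
```

For n = 10, the sorted input gives 9 tests and 0 shifts (n-1 and 0). The reversed input gives 54 tests and 45 shifts, that is n(n+1)/2 - 1 and n(n-1)/2.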

![Insertion Sort

Best Case Time Complexity Analysis - Big Oh Notation

f(n) = 6n-3 ≤ cn

Assume c = 6, then f(n) = 6n-3 ≤ 6n

⇒ 3 ≤ 6 [at n = n0 = 1]

For all n ≥ n0 = 1, f(n) ≤ 6n.

So, the best-case time complexity is O(n), with n0 = 1 and c = 6.

Worst Case Time Complexity Analysis - Big Oh Notation

f(n) = 2n²+4n-3 ≤ cn²

Assume c = 3, then f(n) = 2n²+4n-3 ≤ 3n²

⇒ 27 ≤ 27 [at n = n0 = 3]

For all n ≥ n0 = 3, f(n) ≤ 3n².

So, the worst-case time complexity is O(n²), with n0 = 3 and c = 3.

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-30-2048.jpg)

![Insertion Sort

Best Case Time Complexity Analysis - Big Omega Notation

f(n) = 6n-3 ≥ cn

Assume c = 5, then f(n) = 6n-3 ≥ 5n

⇒ 15 ≥ 15 [at n = n0 = 3]

For all n ≥ n0 = 3, f(n) ≥ 5n.

So, the best-case time complexity is Ω(n), with n0 = 3 and c = 5.

Worst Case Time Complexity Analysis - Big Omega Notation

f(n) = 2n²+4n-3 ≥ cn²

Assume c = 2, then f(n) = 2n²+4n-3 ≥ 2n²

⇒ 3 ≥ 2 [at n = n0 = 1]

For all n ≥ n0 = 1, f(n) ≥ 2n².

So, the worst-case time complexity is Ω(n²), with n0 = 1 and c = 2.

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-31-2048.jpg)

![Insertion Sort

Best Case Time Complexity Analysis - Little Oh Notation

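f(n) = 6n-3 < cn

Assume c = 6, then f(n) = 6n-3 < 6n

⇒ 3 < 6 [at n = n0 = 1]

For all n ≥ n0 = 1, f(n) < 6n.

A single constant is not enough for little oh. f(n) = o(g(n)) requires f(n) < c·g(n) for every c > 0 (equivalently, f(n)/g(n) → 0). Since 6n-3 ≥ 5n for all n ≥ 3, the choice c = 5 fails. So the best case is O(n) but not o(n).

Worst Case Time Complexity Analysis - Little Oh Notation

f(n) = 2n²+4n-3 < cn²

Assume c = 3, then f(n) = 2n²+4n-3 < 3n²

⇒ 45 < 48 [at n = n0 = 4]

For all n ≥ n0 = 4, f(n) < 3n².

Likewise, 2n²+4n-3 ≥ 2n² for all n ≥ 1, so c = 2 fails. The worst case is therefore O(n²) but not o(n²).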

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-32-2048.jpg)
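A quick numerical way to tell these notations apart is to watch the ratio f(n)/g(n) as n grows. By the standard limit characterization, the ratio tends to 0 when f(n) = o(g(n)) and grows without bound when f(n) = ω(g(n)). A limit that is a positive finite constant implies f(n) = Θ(g(n)). The sketch below uses the insertion sort counts from the slides; the function names are illustrative.

```python
def best(n: int) -> int:
    return 6 * n - 3  # insertion sort, best case


def worst(n: int) -> int:
    return 2 * n**2 + 4 * n - 3  # insertion sort, worst case


for n in (10, 1_000, 100_000):
    # f(n)/g(n) with g(n) = n for the best case and g(n) = n^2 for the worst case
    print(n, best(n) / n, worst(n) / n**2)
```

The ratios settle near 6 and 2 rather than tending to 0 or growing without bound, which is consistent with Θ(n) and Θ(n²).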

![Insertion Sort

Best Case Time Complexity Analysis - Little Omega Notation

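f(n) = 6n-3 > cn

Assume c = 5, then f(n) = 6n-3 > 5n

⇒ 21 > 20 [at n = n0 = 4]

For all n ≥ n0 = 4, f(n) > 5n.

This does not make the best case ω(n). Little omega requires f(n) > c·g(n) for every c > 0 (equivalently, f(n)/g(n) → ∞). Since 6n-3 < 6n for all n, the choice c = 6 fails. So the best case is Ω(n) but not ω(n).

Worst Case Time Complexity Analysis - Little Omega Notation

f(n) = 2n²+4n-3 > cn²

Assume c = 2, then f(n) = 2n²+4n-3 > 2n²

⇒ 3 > 2 [at n = n0 = 1]

For all n ≥ n0 = 1, f(n) > 2n².

Likewise, 2n²+4n-3 < 3n² for all n ≥ 4, so c = 3 fails. The worst case is therefore Ω(n²) but not ω(n²).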

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-33-2048.jpg)

![Insertion Sort

Best Case Time Complexity Analysis - Theta Notation

c1·n ≤ f(n) = 6n-3 ≤ c2·n

Assume c1 = 5 and c2 = 6, then 5n ≤ 6n-3 ≤ 6n

⇒ 15 ≤ 15 ≤ 18 [at n = n0 = 3]

For all n ≥ n0 = 3, 5n ≤ f(n) ≤ 6n.

So, the best-case time complexity is Θ(n), with n0 = 3, c1 = 5 and c2 = 6.

Worst Case Time Complexity Analysis - Theta Notation

c1·n² ≤ f(n) = 2n²+4n-3 ≤ c2·n²

Assume c1 = 2 and c2 = 3, then 2n² ≤ 2n²+4n-3 ≤ 3n²

⇒ 18 ≤ 27 ≤ 27 [at n = n0 = 3]

For all n ≥ n0 = 3, 2n² ≤ f(n) ≤ 3n².

So, the worst-case time complexity is Θ(n²), with n0 = 3, c1 = 2 and c2 = 3.

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-34-2048.jpg)

![Selection Sort

Selection sort is also known as push-down sort.

The sort consists entirely of a selection phase, in which the smallest of the remaining elements is repeatedly placed in its proper position.

Let a be an array of n elements. Find the position i of the smallest element in the list of n elements a[0], a[1], ..., a[n-1], and interchange a[i] with a[0]. Then a[0] is sorted.

Find the position i of the smallest element in the sub-list of n-1 elements a[1], a[2], ..., a[n-1], and interchange a[i] with a[1]. Then a[0] and a[1] are sorted.

Find the position i of the smallest element in the sub-list of n-2 elements a[2], a[3], ..., a[n-1], and interchange a[i] with a[2]. Then a[0], a[1] and a[2] are sorted.

Continue in this way until the last pass, which finds the position i of the smaller of a[n-2] and a[n-1] and interchanges a[i] with a[n-2]. The array a is then sorted.

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-37-2048.jpg)

![Selection Sort

Algorithm 9 Selection Sort

1: procedure Selection-sort(A, n)

2: for i ← 0 to n − 2 do

3: min ← i

4: for j ← i + 1 to n − 1 do

5: if A[j] < A[min] then

6: min ← j // index of the ith smallest element.

7: end if

8: end for

9: t ← A[min]

10: A[min] ← A[i]

11: A[i] ← t

12: end for

13: end procedure

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-38-2048.jpg)
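Below is a Python sketch of Algorithm 9. It assumes A is a mutable list of comparable values, and the function name is illustrative.

```python
from typing import List


def selection_sort(A: List[int]) -> None:
    """Sort A in place in ascending order (Algorithm 9, 0-based indices)."""
    n = len(A)
    for i in range(n - 1):  # i = 0 .. n-2
        min_idx = i
        for j in range(i + 1, n):  # j = i+1 .. n-1
            if A[j] < A[min_idx]:
                min_idx = j  # index of the smallest element seen so far
        A[i], A[min_idx] = A[min_idx], A[i]  # the swap done through t on lines 9-11
```

The index variable is called `min_idx` rather than `min` so that it does not shadow Python's built-in `min`. The three-line swap through t becomes a single tuple assignment.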

![Selection Sort

Best Case Time Complexity (array already sorted, so line 6 never executes): total freq. count f(n) is 1.5n²+5.5n-4.

Algorithm 10 Selection Sort

1: procedure Selection-sort(A, n) ▷ Frequency count is 1

2: for i ← 0 to n − 2 do ▷ Frequency count is n

3: min ← i ▷ Frequency count is n-1

4: for j ← i + 1 to n − 1 do ▷ Freq. count is n(n+1)/2 -1

5: if A[j] < A[min] then ▷ Freq. count is n(n-1)/2

6: min ← j ▷ Frequency count is 0

7: end if ▷ Frequency count is 0

8: end for ▷ Freq. count is n(n-1)/2

9: t ← A[min] ▷ Frequency count is n-1

10: A[min] ← A[i] ▷ Frequency count is n-1

11: A[i] ← t ▷ Frequency count is n-1

12: end for ▷ Frequency count is n-1

13: end procedure ▷ Frequency count is 1

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-40-2048.jpg)

![Selection Sort

Worst Case Time Complexity (an upper bound that counts line 6 as executed on every comparison): total freq. count f(n) is 2.5n²+4.5n-4.

Algorithm 11 Selection Sort

1: procedure Selection-sort(A, n) ▷ Frequency count is 1

2: for i ← 0 to n − 2 do ▷ Frequency count is n

3: min ← i ▷ Frequency count is n-1

4: for j ← i + 1 to n − 1 do ▷ Freq. count is n(n+1)/2 -1

5: if A[j] < A[min] then ▷ Freq. count is n(n-1)/2

6: min ← j ▷ Freq. count is n(n-1)/2

7: end if ▷ Freq. count is n(n-1)/2

8: end for ▷ Freq. count is n(n-1)/2

9: t ← A[min] ▷ Frequency count is n-1

10: A[min] ← A[i] ▷ Frequency count is n-1

11: A[i] ← t ▷ Frequency count is n-1

12: end for ▷ Frequency count is n-1

13: end procedure ▷ Frequency count is 1

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-41-2048.jpg)
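Selection sort performs the same comparisons whatever the input order. Only the number of executions of line 6 changes. The hypothetical counter below shows this by sorting an ordered list and a reverse-ordered list.

```python
from typing import Iterable, Tuple


def selection_sort_counts(values: Iterable[int]) -> Tuple[int, int]:
    """Sort a copy of values with Algorithm 9; return (comparisons, min_updates)."""
    A = list(values)
    n = len(A)
    comparisons = min_updates = 0
    for i in range(n - 1):
        min_idx = i
        for j in range(i + 1, n):
            comparisons += 1  # line 5: A[j] < A[min]
            if A[j] < A[min_idx]:
                min_idx = j
                min_updates += 1  # line 6: min <- j
        A[i], A[min_idx] = A[min_idx], A[i]
    return comparisons, min_updates


n = 10
print(selection_sort_counts(range(n)))         # sorted input
print(selection_sort_counts(range(n, 0, -1)))  # reverse-sorted input
```

For n = 10, both inputs need n(n-1)/2 = 45 comparisons, which is why the best and worst cases are both quadratic. On the reversed input, line 6 runs only 25 times, fewer than 45. This is why the worst-case count on the slide is an upper bound rather than an exact count for that input.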

![Selection Sort

Best Case Time Complexity Analysis - Big Oh Notation

f(n) = 1.5n²+5.5n-4 ≤ cn²

Assume c = 2, then f(n) = 1.5n²+5.5n-4 ≤ 2n²

⇒ 238 ≤ 242 [at n = n0 = 11]

For all n ≥ n0 = 11, f(n) ≤ 2n².

So, the best-case time complexity is O(n²), with n0 = 11 and c = 2.

Worst Case Time Complexity Analysis - Big Oh Notation

f(n) = 2.5n²+4.5n-4 ≤ cn²

Assume c = 3, then f(n) = 2.5n²+4.5n-4 ≤ 3n²

⇒ 192 ≤ 192 [at n = n0 = 8]

For all n ≥ n0 = 8, f(n) ≤ 3n².

So, the worst-case time complexity is O(n²), with n0 = 8 and c = 3.

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-42-2048.jpg)
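Constants such as n0 = 11 are easy to get wrong. The hypothetical helper below scans downward from a finite limit. It reports the smallest n0 from which f(n) ≤ c·g(n) holds all the way up to that limit. This is a numerical sanity check, not a proof.

```python
from typing import Callable, Optional


def smallest_n0(f: Callable[[int], float], c: float, g: Callable[[int], float],
                n_max: int = 10_000) -> Optional[int]:
    """Smallest n0 with f(n) <= c*g(n) for every n in [n0, n_max], or None."""
    n0 = None
    for n in range(n_max, 0, -1):  # scan downwards from n_max
        if f(n) <= c * g(n):
            n0 = n
        else:
            break  # the first failure seen from the top ends the valid run
    return n0


def best(n: int) -> float:
    return 1.5 * n**2 + 5.5 * n - 4  # selection sort, best case


def worst(n: int) -> float:
    return 2.5 * n**2 + 4.5 * n - 4  # selection sort, worst case


def square(n: int) -> int:
    return n * n


print(smallest_n0(best, 2, square))   # c = 2
print(smallest_n0(worst, 3, square))  # c = 3
```

It reports 11 for the best case with c = 2, because f(10) = 201 > 200. It reports 8 for the worst case with c = 3, because f(7) = 150 > 147. Both values match the slide.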

![Selection Sort

Best Case Time Complexity Analysis - Big Omega Notation

f(n) = 1.5n²+5.5n-4 ≥ cn²

Assume c = 1, then f(n) = 1.5n²+5.5n-4 ≥ n²

⇒ 3 ≥ 1 [at n = n0 = 1]

For all n ≥ n0 = 1, f(n) ≥ n².

So, the best-case time complexity is Ω(n²), with n0 = 1 and c = 1.

Worst Case Time Complexity Analysis - Big Omega Notation

f(n) = 2.5n²+4.5n-4 ≥ cn²

Assume c = 2, then f(n) = 2.5n²+4.5n-4 ≥ 2n²

⇒ 3 ≥ 2 [at n = n0 = 1]

For all n ≥ n0 = 1, f(n) ≥ 2n².

So, the worst-case time complexity is Ω(n²), with n0 = 1 and c = 2.

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-43-2048.jpg)

![Selection Sort

Best Case Time Complexity Analysis - Little Oh Notation

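f(n) = 1.5n²+5.5n-4 < cn²

Assume c = 2, then f(n) = 1.5n²+5.5n-4 < 2n²

⇒ 238 < 242 [at n = n0 = 11]

For all n ≥ n0 = 11, f(n) < 2n².

This bound alone does not make the best case o(n²). Little oh requires f(n) < c·g(n) for every c > 0 (equivalently, f(n)/g(n) → 0). Since 1.5n²+5.5n-4 ≥ n² for all n ≥ 1, the choice c = 1 fails. So the best case is O(n²) but not o(n²).

Worst Case Time Complexity Analysis - Little Oh Notation

f(n) = 2.5n²+4.5n-4 < cn²

Assume c = 3, then f(n) = 2.5n²+4.5n-4 < 3n²

⇒ 239 < 243 [at n = n0 = 9]

For all n ≥ n0 = 9, f(n) < 3n².

Likewise, 2.5n²+4.5n-4 ≥ 2n² for all n ≥ 1, so c = 2 fails. The worst case is therefore O(n²) but not o(n²).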

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-44-2048.jpg)

![Selection Sort

Best Case Time Complexity Analysis - Little Omega Notation

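f(n) = 1.5n²+5.5n-4 > cn²

Assume c = 1, then f(n) = 1.5n²+5.5n-4 > n²

⇒ 3 > 1 [at n = n0 = 1]

For all n ≥ n0 = 1, f(n) > n².

This does not make the best case ω(n²). Little omega requires f(n) > c·g(n) for every c > 0 (equivalently, f(n)/g(n) → ∞). Since 1.5n²+5.5n-4 ≤ 2n² for all n ≥ 11, the choice c = 2 fails. So the best case is Ω(n²) but not ω(n²).

Worst Case Time Complexity Analysis - Little Omega Notation

f(n) = 2.5n²+4.5n-4 > cn²

Assume c = 2, then f(n) = 2.5n²+4.5n-4 > 2n²

⇒ 3 > 2 [at n = n0 = 1]

For all n ≥ n0 = 1, f(n) > 2n².

Likewise, 2.5n²+4.5n-4 ≤ 3n² for all n ≥ 8, so c = 3 fails. The worst case is therefore Ω(n²) but not ω(n²).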

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-45-2048.jpg)

![Selection Sort

Best Case Time Complexity Analysis - Theta Notation

c1·n² ≤ f(n) = 1.5n²+5.5n-4 ≤ c2·n²

Assume c1 = 1 and c2 = 2, then n² ≤ 1.5n²+5.5n-4 ≤ 2n²

⇒ 121 ≤ 238 ≤ 242 [at n = n0 = 11]

For all n ≥ n0 = 11, n² ≤ f(n) ≤ 2n².

So, the best-case time complexity is Θ(n²), with n0 = 11, c1 = 1 and c2 = 2.

Worst Case Time Complexity Analysis - Theta Notation

c1·n² ≤ f(n) = 2.5n²+4.5n-4 ≤ c2·n²

Assume c1 = 2 and c2 = 3, then 2n² ≤ 2.5n²+4.5n-4 ≤ 3n²

⇒ 128 ≤ 192 ≤ 192 [at n = n0 = 8]

For all n ≥ n0 = 8, 2n² ≤ f(n) ≤ 3n².

So, the worst-case time complexity is Θ(n²), with n0 = 8, c1 = 2 and c2 = 3.

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-46-2048.jpg)

![Bubble Sort

Bubble sort scans the list from left to right. Whenever it finds a pair of adjacent keys out of order, it swaps those items.

This process repeats until all the elements of the list are in sorted order.

Let a be an array of n integers whose elements are to be sorted so that a[i] ≤ a[j] for 0 ≤ i < j < n.

The basic idea of bubble sort is to pass through the list sequentially several times.

Each pass compares each element in the list with its successor and interchanges the two elements if they are not in the proper order.

In Pass 1, compare a[0] and a[1] and arrange them so that a[0] ≤ a[1]. Then compare a[1] and a[2] and arrange them so that a[1] ≤ a[2].

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-49-2048.jpg)

![Bubble Sort

Continue until a[n-2] and a[n-1] have been compared and arranged so that a[n-2] ≤ a[n-1]. Pass 1 involves n-1 comparisons, and the largest element ends up at index n-1.

In Pass 2, repeat the above process with one fewer comparison, i.e., stop after comparing, and possibly rearranging, a[n-3] and a[n-2].

Pass 2 involves n-2 comparisons, and the second largest element ends up at index n-2. The process continues: after pass i, index n-i holds the ith largest element.

In pass n-1, compare a[0] and a[1] and arrange them so that a[0] ≤ a[1].

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-50-2048.jpg)

![Bubble Sort

Algorithm 12 Bubble Sort

1: procedure Bubble-sort(A, n)

2: for i ← 0 to n − 2 do

3: for j ← 0 to n − i − 2 do

4: // compare adjacent elements

5: if A[j] > A[j + 1] then

6: t ← A[j]

7: A[j] ← A[j + 1]

8: A[j + 1] ← t

9: end if

10: end for

11: end for

12: end procedure

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-51-2048.jpg)
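Below is a Python sketch of Algorithm 12. It assumes A is a mutable list of comparable values, and the function name is illustrative.

```python
from typing import List


def bubble_sort(A: List[int]) -> None:
    """Sort A in place in ascending order (Algorithm 12, 0-based indices)."""
    n = len(A)
    for i in range(n - 1):  # pass i + 1
        for j in range(n - i - 1):  # j = 0 .. n-i-2
            if A[j] > A[j + 1]:  # adjacent pair out of order
                A[j], A[j + 1] = A[j + 1], A[j]
```

`range(n - i - 1)` yields j = 0, ..., n-i-2, the same bounds as line 3 of Algorithm 12. The strict `>` never swaps equal neighbours, so the sort is stable.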

![Bubble Sort

Best Case Time Complexity (array already sorted, so no swaps occur):

Algorithm 13 Bubble Sort

1: procedure Bubble-sort(A, n) ▷ Frequency count is 1

2: for i ← 0 to n − 2 do ▷ Frequency count is n

3: for j ← 0 to n − i − 2 do ▷ Freq. count is n(n+1)/2 -1

4: if A[j] > A[j + 1] then ▷ Freq. count is n(n-1)/2

5: t ← A[j] ▷ Frequency count is 0

6: A[j] ← A[j + 1] ▷ Frequency count is 0

7: A[j + 1] ← t ▷ Frequency count is 0

8: end if ▷ Frequency count is 0

9: end for ▷ Freq. count is n(n-1)/2

10: end for ▷ Frequency count is n-1

11: end procedure ▷ Frequency count is 1

Total frequency count f(n) is 1.5n²+1.5n. So, the best-case time complexity is O(n²).

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-54-2048.jpg)

![Bubble Sort

Worst Case Time Complexity (array in reverse order, so every comparison causes a swap):

Algorithm 14 Bubble Sort

1: procedure Bubble-sort(A, n) ▷ Frequency count is 1

2: for i ← 0 to n − 2 do ▷ Frequency count is n

3: for j ← 0 to n − i − 2 do ▷ Freq. count is n(n+1)/2 -1

4: if A[j] > A[j + 1] then ▷ Freq. count is n(n-1)/2

5: t ← A[j] ▷ Freq. count is n(n-1)/2

6: A[j] ← A[j + 1] ▷ Freq. count is n(n-1)/2

7: A[j + 1] ← t ▷ Freq. count is n(n-1)/2

8: end if ▷ Freq. count is n(n-1)/2

9: end for ▷ Freq. count is n(n-1)/2

10: end for ▷ Frequency count is n-1

11: end procedure ▷ Frequency count is 1

Total frequency count f(n) is 3.5n²-0.5n. So, the worst-case time complexity is O(n²).

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-55-2048.jpg)
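The two analyses differ only in how often the swap lines run. The hypothetical counter below sorts a copy of its input with Algorithm 12 and reports comparisons (line 4) and swaps (lines 5 to 7) for sorted and reverse-sorted input.

```python
from typing import Iterable, Tuple


def bubble_sort_counts(values: Iterable[int]) -> Tuple[int, int]:
    """Sort a copy of values with Algorithm 12; return (comparisons, swaps)."""
    A = list(values)
    n = len(A)
    comparisons = swaps = 0
    for i in range(n - 1):
        for j in range(n - i - 1):
            comparisons += 1  # line 4
            if A[j] > A[j + 1]:
                A[j], A[j + 1] = A[j + 1], A[j]
                swaps += 1  # lines 5-7
    return comparisons, swaps


n = 10
print(bubble_sort_counts(range(n)))         # sorted input
print(bubble_sort_counts(range(n, 0, -1)))  # reverse-sorted input
```

For n = 10, both inputs need 45 comparisons. The sorted input makes 0 swaps and the reversed input makes 45, one per comparison. Because the number of comparisons never shrinks, this unoptimized version is quadratic even on sorted input.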

![Bubble Sort

Best Case Time Complexity Analysis - Big Oh Notation

f(n) = 1.5n²+1.5n ≤ cn²

Assume c = 2, then f(n) = 1.5n²+1.5n ≤ 2n²

⇒ 18 ≤ 18 [at n = n0 = 3]

For all n ≥ n0 = 3, f(n) ≤ 2n².

So, the best-case time complexity is O(n²), with n0 = 3 and c = 2.

Worst Case Time Complexity Analysis - Big Oh Notation

f(n) = 3.5n²-0.5n ≤ cn²

Assume c = 4, then f(n) = 3.5n²-0.5n ≤ 4n²

⇒ 3 ≤ 4 [at n = n0 = 1]

For all n ≥ n0 = 1, f(n) ≤ 4n².

So, the worst-case time complexity is O(n²), with n0 = 1 and c = 4.

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-56-2048.jpg)

![Bubble Sort

Best Case Time Complexity Analysis - Big Omega Notation

f(n) = 1.5n²+1.5n ≥ cn²

Assume c = 1, then f(n) = 1.5n²+1.5n ≥ n²

⇒ 3 ≥ 1 [at n = n0 = 1]

For all n ≥ n0 = 1, f(n) ≥ n².

So, the best-case time complexity is Ω(n²), with n0 = 1 and c = 1.

Worst Case Time Complexity Analysis - Big Omega Notation

f(n) = 3.5n²-0.5n ≥ cn²

Assume c = 3, then f(n) = 3.5n²-0.5n ≥ 3n²

⇒ 3 ≥ 3 [at n = n0 = 1]

For all n ≥ n0 = 1, f(n) ≥ 3n².

So, the worst-case time complexity is Ω(n²), with n0 = 1 and c = 3.

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-57-2048.jpg)

![Bubble Sort

Best Case Time Complexity Analysis - Little Oh Notation

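f(n) = 1.5n²+1.5n < cn²

Assume c = 2, then f(n) = 1.5n²+1.5n < 2n²

⇒ 30 < 32 [at n = n0 = 4]

For all n ≥ n0 = 4, f(n) < 2n².

This bound alone does not make the best case o(n²). Little oh requires f(n) < c·g(n) for every c > 0 (equivalently, f(n)/g(n) → 0). Since 1.5n²+1.5n ≥ n² for all n ≥ 1, the choice c = 1 fails. So the best case is O(n²) but not o(n²).

Worst Case Time Complexity Analysis - Little Oh Notation

f(n) = 3.5n²-0.5n < cn²

Assume c = 4, then f(n) = 3.5n²-0.5n < 4n²

⇒ 3 < 4 [at n = n0 = 1]

For all n ≥ n0 = 1, f(n) < 4n².

Likewise, 3.5n²-0.5n ≥ 3n² for all n ≥ 1, so c = 3 fails. The worst case is therefore O(n²) but not o(n²).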

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-58-2048.jpg)

![Bubble Sort

Best Case Time Complexity Analysis - Little Omega Notation

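f(n) = 1.5n²+1.5n > cn²

Assume c = 1, then f(n) = 1.5n²+1.5n > n²

⇒ 3 > 1 [at n = n0 = 1]

For all n ≥ n0 = 1, f(n) > n².

This does not make the best case ω(n²). Little omega requires f(n) > c·g(n) for every c > 0 (equivalently, f(n)/g(n) → ∞). Since 1.5n²+1.5n ≤ 2n² for all n ≥ 3, the choice c = 2 fails. So the best case is Ω(n²) but not ω(n²).

Worst Case Time Complexity Analysis - Little Omega Notation

f(n) = 3.5n²-0.5n > cn²

Assume c = 2, then f(n) = 3.5n²-0.5n > 2n²

⇒ 3 > 2 [at n = n0 = 1]

For all n ≥ n0 = 1, f(n) > 2n².

Likewise, 3.5n²-0.5n < 4n² for all n ≥ 1, so c = 4 fails. The worst case is therefore Ω(n²) but not ω(n²).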

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-59-2048.jpg)

![Bubble Sort

Best Case Time Complexity Analysis - Theta Notation

c1·n² ≤ f(n) = 1.5n²+1.5n ≤ c2·n²

Assume c1 = 1 and c2 = 2, then n² ≤ 1.5n²+1.5n ≤ 2n²

⇒ 9 ≤ 18 ≤ 18 [at n = n0 = 3]

For all n ≥ n0 = 3, n² ≤ f(n) ≤ 2n².

So, the best-case time complexity is Θ(n²), with n0 = 3, c1 = 1 and c2 = 2.

Worst Case Time Complexity Analysis - Theta Notation

c1·n² ≤ f(n) = 3.5n²-0.5n ≤ c2·n²

Assume c1 = 3 and c2 = 4, then 3n² ≤ 3.5n²-0.5n ≤ 4n²

⇒ 3 ≤ 3 ≤ 4 [at n = n0 = 1]

For all n ≥ n0 = 1, 3n² ≤ f(n) ≤ 4n².

So, the worst-case time complexity is Θ(n²), with n0 = 1, c1 = 3 and c2 = 4.

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-60-2048.jpg)

![Optimized Bubble Sort

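The optimized bubble sort adds a flag, swapped, that records whether a pass performed any interchange. If a complete pass makes no swaps, the array is already sorted and the algorithm stops early.

The primary advantage of this optimization is speed on inputs that become sorted after only a few passes. On an array that is already sorted, the algorithm finishes after a single pass of n-1 comparisons. The worst case remains O(n²).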

Algorithm 15 Optimized Bubble Sort

1: procedure Bubble-sort(A, n)

2: for i ← 0 to n − 2 do

3: swapped ← 0

4: for j ← 0 to n − i − 2 do

5: if A[j] > A[j + 1] then

6: t ← A[j]

7: A[j] ← A[j + 1]

8: A[j + 1] ← t

9: swapped ← 1

10: end if

11: end for

12: if (swapped = 0) then

13: break

14: end if

15: end for

16: end procedure

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-63-2048.jpg)
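Below is a Python sketch of Algorithm 15 that also reports how many passes and comparisons it performed, to make the early exit visible. The return value and the function name are assumptions added for illustration; Algorithm 15 itself returns nothing.

```python
from typing import List, Tuple


def optimized_bubble_sort(A: List[int]) -> Tuple[int, int]:
    """Sort A in place (Algorithm 15); return (passes, comparisons) performed."""
    n = len(A)
    passes = comparisons = 0
    for i in range(n - 1):
        swapped = False
        passes += 1
        for j in range(n - i - 1):
            comparisons += 1
            if A[j] > A[j + 1]:
                A[j], A[j + 1] = A[j + 1], A[j]
                swapped = True
        if not swapped:  # no interchange in this pass, so A is sorted
            break
    return passes, comparisons
```

On `list(range(10))`, the sketch stops after 1 pass and 9 comparisons (n-1). On `list(range(10, 0, -1))`, it still needs 9 passes and 45 comparisons.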

![Optimized Bubble Sort

Best Case Time Complexity Analysis - Big Oh Notation

f(n) = 3n+4 ≤ cn

Assume c = 4, then f(n) = 3n+4 ≤ 4n

⇒ 16 ≤ 16 [at n = n0 = 4]

For all n ≥ n0 = 4, f(n) ≤ 4n.

So, the best-case time complexity is O(n), with n0 = 4 and c = 4.

Worst Case Time Complexity Analysis - Big Oh Notation

f(n) = 4n²+n-2 ≤ cn²

Assume c = 5, then f(n) = 4n²+n-2 ≤ 5n²

⇒ 3 ≤ 5 [at n = n0 = 1]

For all n ≥ n0 = 1, f(n) ≤ 5n².

So, the worst-case time complexity is O(n²), with n0 = 1 and c = 5.

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-66-2048.jpg)

![Optimized Bubble Sort

Best Case Time Complexity Analysis - Big Omega Notation

f(n) = 3n+4 ≥ cn

Assume c = 3, then f(n) = 3n+4 ≥ 3n

⇒ 7 ≥ 3 [at n = n0 = 1]

For all n ≥ n0 = 1, f(n) ≥ 3n.

So, the best-case time complexity is Ω(n), with n0 = 1 and c = 3.

Worst Case Time Complexity Analysis - Big Omega Notation

f(n) = 4n²+n-2 ≥ cn²

Assume c = 4, then f(n) = 4n²+n-2 ≥ 4n²

⇒ 16 ≥ 16 [at n = n0 = 2]

For all n ≥ n0 = 2, f(n) ≥ 4n².

So, the worst-case time complexity is Ω(n²), with n0 = 2 and c = 4.

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-67-2048.jpg)

![Optimized Bubble Sort

Best Case Time Complexity Analysis - Little Oh Notation

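f(n) = 3n+4 < cn

Assume c = 4, then f(n) = 3n+4 < 4n

⇒ 19 < 20 [at n = n0 = 5]

For all n ≥ n0 = 5, f(n) < 4n.

This shows O(n), not o(n). Little oh requires f(n) < c·g(n) for every c > 0 (equivalently, f(n)/g(n) → 0), and 3n+4 ≥ 3n for every n, so c = 3 fails. The best case is therefore not o(n). It is o(n²), since (3n+4)/n² → 0.

Worst Case Time Complexity Analysis - Little Oh Notation

f(n) = 4n²+n-2 < cn²

Assume c = 5, then f(n) = 4n²+n-2 < 5n²

⇒ 3 < 5 [at n = n0 = 1]

For all n ≥ n0 = 1, f(n) < 5n².

Likewise, 4n²+n-2 ≥ 4n² for all n ≥ 2, so c = 4 fails. The worst case is therefore O(n²) but not o(n²).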

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-68-2048.jpg)

![Optimized Bubble Sort

Best Case Time Complexity Analysis - Little Omega Notation

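f(n) = 3n+4 > cn

Assume c = 3, then f(n) = 3n+4 > 3n

⇒ 7 > 3 [at n = n0 = 1]

For all n ≥ n0 = 1, f(n) > 3n.

This does not make the best case ω(n). Little omega requires f(n) > c·g(n) for every c > 0 (equivalently, f(n)/g(n) → ∞). Since 3n+4 ≤ 4n for all n ≥ 4, the choice c = 4 fails. So the best case is Ω(n) but not ω(n).

Worst Case Time Complexity Analysis - Little Omega Notation

f(n) = 4n²+n-2 > cn²

Assume c = 4, then f(n) = 4n²+n-2 > 4n²

⇒ 37 > 36 [at n = n0 = 3]

For all n ≥ n0 = 3, f(n) > 4n².

Likewise, 4n²+n-2 < 5n² for all n ≥ 1, so c = 5 fails. The worst case is therefore Ω(n²) but not ω(n²).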

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-69-2048.jpg)

![Optimized Bubble Sort

Best Case Time Complexity Analysis - Theta Notation

c1·n ≤ f(n) = 3n+4 ≤ c2·n

Assume c1 = 3 and c2 = 4, then 3n ≤ 3n+4 ≤ 4n

⇒ 12 ≤ 16 ≤ 16 [at n = n0 = 4]

For all n ≥ n0 = 4, 3n ≤ f(n) ≤ 4n.

So, the best-case time complexity is Θ(n), with n0 = 4, c1 = 3 and c2 = 4.

Worst Case Time Complexity Analysis - Theta Notation

c1·n² ≤ f(n) = 4n²+n-2 ≤ c2·n²

Assume c1 = 4 and c2 = 5, then 4n² ≤ 4n²+n-2 ≤ 5n²

⇒ 16 ≤ 16 ≤ 20 [at n = n0 = 2]

For all n ≥ n0 = 2, 4n² ≤ f(n) ≤ 5n².

So, the worst-case time complexity is Θ(n²), with n0 = 2, c1 = 4 and c2 = 5.

](https://image.slidesharecdn.com/searchingandsortingalgorithmsandtheircomplexities-240309142853-cf6c60fe/75/Searching-and-Sorting-Algorithms-70-2048.jpg)