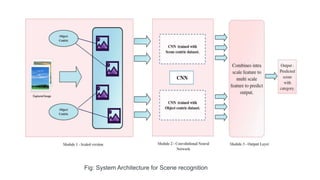

The document discusses scene recognition using convolutional neural networks (CNNs). It begins with an abstract stating that scene recognition provides context for object recognition. Object recognition has improved thanks to large datasets and CNNs, but scene recognition has not reached the same level of success. The document then describes Places, a new scene-centric database of over 7 million images, and its use to train CNNs for scene recognition. This approach establishes new state-of-the-art results on several scene datasets. Visualizing the network responses also shows how object-centric and scene-centric representations differ.

![8

Literature

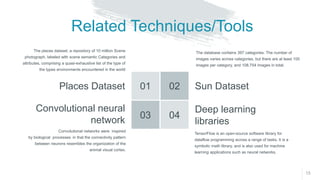

In [1], They measured relative densities and diversities between SUN, IMAGENET and PLACES using

AMT(Automated Mechanical Transmission).They intoduced PLACES as a new dataset containing 7 million

images from 476 places.

In [2], They implemented dataset bias of IMAGENET and PLACES to increases the accuracy upto 70%.

In [3], They stated that, Convolutional neural network helps us to simulate human vision which is amazing

at scene recognition.

In [4], They improved the PLACES dataset with adding extra 3 million images, containing 900 different

Categeries.](https://image.slidesharecdn.com/scenerec-200926053428/85/Scene-recognition-using-Convolutional-Neural-Network-8-320.jpg)
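Reference [1] compares datasets by their relative density and diversity. As we understand the definitions in [1], Den_B(A) = p(d(a1, a2) < d(b1, b2)), where a1 and b1 are drawn at random and a2 and b2 are their nearest neighbours in A and B. Div_B(A) = 1 - p(d(a1, a2) < d(b1, b2)), where both pairs are drawn at random. In [1] the comparison between the two pairs was judged by AMT workers. The minimal sketch below substitutes Euclidean distance between feature vectors for those human judgements, which is an assumption. The function names and toy data are hypothetical.

```python
import math
import random
from typing import Callable, Optional, Sequence

Vector = Sequence[float]
Distance = Callable[[Vector, Vector], float]


def _nn_distance(i: int, data: Sequence[Vector], dist: Distance) -> float:
    """Distance from data[i] to its nearest neighbour among the other items in data."""
    return min(dist(data[i], data[j]) for j in range(len(data)) if j != i)


def relative_density(A: Sequence[Vector], B: Sequence[Vector], trials: int = 1000,
                     dist: Distance = math.dist,
                     rng: Optional[random.Random] = None) -> float:
    """Monte Carlo estimate of Den_B(A) = p(d(a1, a2) < d(b1, b2)).

    a1 and b1 are drawn at random; a2 and b2 are their nearest neighbours in A and B.
    Assumption: Euclidean distance stands in for the AMT similarity judgements of [1].
    """
    if rng is None:
        rng = random.Random(0)  # fixed seed so results are reproducible
    wins = 0
    for _ in range(trials):
        i = rng.randrange(len(A))
        k = rng.randrange(len(B))
        if _nn_distance(i, A, dist) < _nn_distance(k, B, dist):
            wins += 1
    return wins / trials


def relative_diversity(A: Sequence[Vector], B: Sequence[Vector], trials: int = 1000,
                       dist: Distance = math.dist,
                       rng: Optional[random.Random] = None) -> float:
    """Monte Carlo estimate of Div_B(A) = 1 - p(d(a1, a2) < d(b1, b2)).

    Both pairs are drawn at random (two distinct items from A, two from B).
    """
    if rng is None:
        rng = random.Random(0)
    wins = 0
    for _ in range(trials):
        a1, a2 = rng.sample(range(len(A)), 2)  # two distinct indices into A
        b1, b2 = rng.sample(range(len(B)), 2)  # two distinct indices into B
        if dist(A[a1], A[a2]) < dist(B[b1], B[b2]):
            wins += 1
    return 1 - wins / trials


if __name__ == "__main__":
    gen = random.Random(42)
    # Hypothetical toy "datasets": a tight cluster versus a widely spread cloud of 2-D features.
    tight = [(gen.gauss(0, 0.1), gen.gauss(0, 0.1)) for _ in range(50)]
    spread = [(gen.gauss(0, 1.0), gen.gauss(0, 1.0)) for _ in range(50)]
    print("Den_spread(tight) =", relative_density(tight, spread))
    print("Div_spread(tight) =", relative_diversity(tight, spread))
```

The tight cluster's nearest neighbours and random pairs are much closer together than those of the spread cloud. Its relative density should therefore come out near 1 and its relative diversity near 0. With real datasets, the vectors would be image features, and both functions need at least two items per set.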

References

Research Papers

[1] Bolei Zhou, Agata Lapedriza, Jianxiong Xiao, Antonio Torralba, and Aude Oliva, “Learning Deep Features for Scene Recognition using Places Database”. Massachusetts Institute of Technology, Princeton University. (2015)

[2] Luis Herranz, Shuqiang Jiang, Xiangyang Li, “Scene recognition with CNNs: objects, scales and dataset bias”. IEEE Conference on Computer Vision and Pattern Recognition. (2016)

[3] Bavin Ondieki, “Convolutional Neural Networks for Scene Recognition”. Stanford University. (2016)

[4] Bolei Zhou, Agata Lapedriza, Aditya Khosla, et al., “Places: A 10 million image database for scene recognition”. IEEE Transactions on Pattern Analysis and Machine Intelligence. (2017)