Sccg Many Projects Layout03

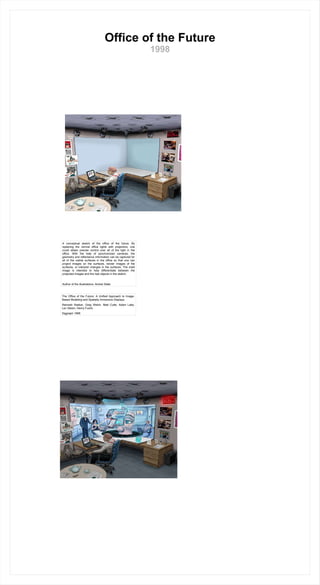

- 1. Office of the Future (1998). A conceptual sketch of the office of the future. By replacing the normal office lights with projectors, one could obtain precise control over all of the light in the office. With the help of synchronized cameras, the geometry and reflectance information can be captured for all of the visible surfaces in the office, so that one can project images on the surfaces, render images of the surfaces, or interpret changes in the surfaces. The inset image is intended to help differentiate between the projected images and the real objects in the sketch. Illustrations by Andrei State. Paper: The Office of the Future: A Unified Approach to Image-Based Modeling and Spatially Immersive Displays. Ramesh Raskar, Greg Welch, Matt Cutts, Adam Lake, Lev Stesin, Henry Fuchs. SIGGRAPH 1998.

- 2. Shader Lamps: Taj Mahal (2001). We are used to looking at the output of a computer graphics program on a monitor or on a screen. But how can we see the "special effects" directly in our environment? For example, how can we make a clay vase sitting on a table look as if it were made of gold, with fine details? The appearance of an object is a function of illumination, surface reflectance and viewer location. Hence, we can rearrange these factors along the optical path and reproduce the equivalent desired appearance. We have recently introduced a new paradigm and related techniques to graphically animate physical objects with projectors. Because the approach effectively "lifts" the visual properties of the object into the projector, we call the projectors shader lamps. We address the central issue of complete illumination of non-trivial physical objects using multiple projectors and present a set of new techniques that make the process of illumination practical. We show some results and describe the new challenges in graphics, geometry, vision and user interfaces. (Abstract: www.cs.unc.edu/~raskar/Shaderlamps/abstract.txt) Paper: Raskar, R.; Welch, G.; Low, K-L.; Bandyopadhyay, D., "Shader Lamps: Animating Real Objects with Image Based Illumination", Eurographics Workshop on Rendering, June 2001.
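
The idea of moving surface appearance into the projector can be illustrated numerically. The snippet below is a minimal sketch, assuming a purely Lambertian (diffuse) surface and a projector whose output is normalized to [0, 1]; the helper `projector_intensity` and its parameters are hypothetical and not taken from the Shader Lamps implementation. Under that assumption the observed radiance is proportional to reflectance × projector intensity × cos θ / d², so reaching a target appearance means scaling the projector output by d² / (reflectance × cos θ).

```python
def projector_intensity(desired, reflectance, distance, cos_theta, max_intensity=1.0):
    """Projector intensity needed for a diffuse point to show `desired` radiance.

    Hypothetical helper assuming a Lambertian model:
        observed = reflectance * intensity * cos_theta / distance**2
    """
    if reflectance <= 0.0 or cos_theta <= 0.0:
        # The point is black or faces away from the projector: it cannot be lit.
        return 0.0
    required = desired * distance ** 2 / (reflectance * cos_theta)
    # A real projector cannot exceed its maximum output, so clamp.
    return min(required, max_intensity)


# Same target appearance on two points of a clay vase seen at different angles.
print(projector_intensity(0.2, reflectance=0.5, distance=1.0, cos_theta=0.8))  # -> 0.5
print(projector_intensity(0.2, reflectance=0.5, distance=1.0, cos_theta=0.2))  # -> 1.0 (clamped)
```

The clamp shows why complete illumination with multiple projectors matters: a point lit too obliquely by one projector may need to be covered by another.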

- 3. Image Fusion (2004). Figure panels: Night Image, Glass World, Day Image. We present a class of image fusion techniques to automatically combine images of a scene captured under different illumination. Beyond providing digital tools for artists to create surrealist images and videos, the methods can also be used for practical applications. For example, the non-realistic appearance can be used to enhance the context of nighttime traffic videos so that they are easier to understand. The context is automatically captured from a fixed camera and inserted from a daytime image of the same scene. Our approach is based on a gradient domain technique that preserves important local perceptual cues while avoiding traditional problems such as aliasing, ghosting and haloing. We present several results in generating surrealistic videos and in increasing the information density of low-quality nighttime videos. Figure captions: Context-enhanced image. Flowchart for asymmetric fusion: the importance image is derived from only the nighttime image, and the mixed gradient field is created by linearly blending intensity gradients. Stylization by mosaicing vertical strips of a day-to-night sequence: (left) naive algorithm, (right) the output of our algorithm. Automatic context enhancement of a nighttime scene: the image is reconstructed from a gradient field that is a linear blend of the intensity gradients of the nighttime image and a corresponding daytime image of the same scene. Paper: Image Fusion for Context Enhancement and Video Surrealism. R Raskar, A Ilie, J Yu. ACM Non-Photorealistic Animation and Rendering (NPAR) 2004, Annecy, France.
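
The mixed-gradient step of the image fusion entry can be illustrated on a single scanline, where reconstructing a signal from its gradient field reduces to a running sum. This is a minimal 1D sketch, assuming per-sample importance weights in [0, 1] are already given (the entry says they are derived from the nighttime image; that derivation is not shown); `fuse_scanline` is a hypothetical helper. In 2D a blended gradient field is generally not integrable, so reconstruction becomes a least-squares Poisson solve rather than a cumulative sum.

```python
def fuse_scanline(night, day, importance):
    """Blend the gradients of two registered scanlines and re-integrate.

    importance[i] in [0, 1] weights the night gradient between samples i and i+1;
    (1 - importance[i]) weights the day gradient. The last weight is unused.
    """
    if not (len(night) == len(day) == len(importance)):
        raise ValueError("inputs must have the same length")
    fused = [night[0]]  # anchor the integration constant to the night image
    for i in range(len(night) - 1):
        g_night = night[i + 1] - night[i]
        g_day = day[i + 1] - day[i]
        w = importance[i]
        mixed = w * g_night + (1.0 - w) * g_day  # linear blend of gradients
        fused.append(fused[-1] + mixed)          # 1D reconstruction: integrate
    return fused


night = [0.1, 0.1, 0.9, 0.9, 0.1]   # dark street with one bright headlight
day = [0.4, 0.6, 0.6, 0.3, 0.5]     # same view in daylight, full context
importance = [0.0, 1.0, 1.0, 0.0, 0.0]
print(fuse_scanline(night, day, importance))
```

Where the importance is high the night structure (the headlight) is kept; elsewhere the daytime gradients supply the context.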

- 4. RFIG Application Examples (2004). Finding the millimeter-precise location of an RFID tag using a handheld RF reader and a pocket projector, without RF collision: a photosensor is embedded in the RFID tag, and coded illumination from the pocket projector locates the tag. Computer-generated labels are then projected onto the object, creating augmented reality (AR). (Left) Detecting an obstruction, such as a person on the tracks near a platform, a disabled vehicle at a railroad intersection, or suspicious material on the tracks. Identifying an obstruction with a camera-based system is difficult because it requires complex image analysis under unknown lighting conditions. RFIG tags can be sprinkled along the tracks and illuminated with a fixed or steered beam of temporally modulated light (not necessarily a projector). Tags respond with the status of their reception of the modulated light. Lack of reception indicates an obstruction; a notice can then be sent to a central monitoring facility where a railroad traffic controller observes the scene, perhaps using a pan-tilt-zoom surveillance camera. (Middle) Books in a library. RF-tagged books make it easy to generate a list of titles within the RF range. However, incomplete location information makes it difficult to determine which books are out of alphabetical order, and inadequate information about book orientation makes it difficult to detect whether books are placed upside down. With RFIG and a handheld projector, the librarian can identify each book's title as well as its physical location and orientation. Based on a mismatch between the order by title and the order by location, the system provides instant visual feedback and instructions (shown here as red arrows indicating the original positions). (Right) Laser-guided robot. To guide a robot to pick a certain object from a pile of objects on a moving conveyor belt, the projector locates the RFIG-tagged object and illuminates it with an easily identifiable temporal pattern.
A camera attached to the robot arm locks onto this pattern, enabling the robot to home in on the object. We describe how to instrument the physical world so that objects become self-describing, communicating their identity, geometry, and other information such as history or user annotation. The enabling technology is a wireless tag that acts as a radio frequency identity and geometry (RFIG) transponder. We show how adding a photosensor to a wireless tag significantly extends its functionality to allow geometric operations, such as finding the 3D position of a tag or detecting a change in the shape of a tagged object. Tag data is presented to the user by direct projection using a handheld, locale-aware mobile projector. We introduce a novel technique that we call interactive projection, which allows a user to interact with projected information, e.g., to navigate or update it. The work was motivated by the advent of unpowered passive RFID, a technology that promises to have significant impact in real-world applications. We discuss how our current prototypes could evolve to passive RFID in the future. Paper: RFIG Lamps: Interacting with Self-describing World via Photosensing Wireless Tags and Projectors. R Raskar, P Beardsley, J van Baar, Y Wang, P Dietz, J Lee, D Leigh, T Willwacher. SIGGRAPH 2004.
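
Locating a photosensing tag with coded illumination can be sketched with binary-reflected Gray codes, a common choice for temporally coded projector patterns. This is a minimal sketch, assuming the projector shows one bit plane per frame across its columns and the tag's photosensor reports lit or unlit for each frame; all helper names are hypothetical and the actual RFIG protocol is not reproduced here. Repeating the same sequence over projector rows would give the second coordinate.

```python
def gray_encode(n):
    """Binary-reflected Gray code: adjacent integers differ in exactly one bit."""
    return n ^ (n >> 1)


def gray_decode(g):
    """Invert gray_encode by XOR-folding all right shifts of g."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


def frames_seen_by_sensor(column, num_bits):
    """Lit (1) / unlit (0) readings of a photosensor under projector `column`,
    one frame per bit plane, most significant bit first (hypothetical helper)."""
    code = gray_encode(column)
    return [(code >> (num_bits - 1 - k)) & 1 for k in range(num_bits)]


def decode_column(readings):
    """Recover the projector column from the sensor's per-frame readings."""
    code = 0
    for bit in readings:
        code = (code << 1) | bit
    return gray_decode(code)


# A 1024-column projector needs only 10 frames to address every column.
readings = frames_seen_by_sensor(column=637, num_bits=10)
print(readings, decode_column(readings))
```

Gray codes are preferred over plain binary here because a sensor sitting on the boundary between two columns can misread at most one bit, which shifts the decoded position by at most one column.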

- 5. Multiflash Edge Detection (2004). Glass of wine after work. Imagine a camera, no larger than existing digital cameras, that can directly find depth edges. A flash to the left of a camera creates a sliver of shadow to the right of each silhouette (depth discontinuity) in the image. We add a flash on the right, which creates a sliver of shadow to the left of each silhouette, and flashes at the top and bottom. By observing the shadows, one can robustly find all the pixels corresponding to shape boundaries (depth discontinuities). This is a strikingly simple way of calculating depth edges. The image is a screenshot from a research submission video created in March 2007, showing a glass filled with wine with a flower in front; the light transport through the glass is calculated in real time. Paper: Non-photorealistic Camera: Depth Edge Detection and Stylized Rendering using Multi-Flash Imaging. R Raskar, K Tan, R Feris, J Yu, M Turk. SIGGRAPH 2004.
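
The shadow-based reasoning of the multiflash entry can be sketched on a single scanline with one flash on each side of the lens. This is a minimal sketch, assuming registered, ambient-subtracted flash images and a fixed ratio threshold; the function name and threshold are hypothetical, and a full implementation would process 2D images, scanning away from each flash's position rather than along one row. Dividing each flash image by the pixel-wise maximum cancels surface albedo, so texture edges drop out while shadow boundaries remain.

```python
def detect_depth_edges(flash_scanlines, walk_directions, threshold=0.5):
    """Find depth-edge pixels on one scanline from multi-flash images.

    flash_scanlines: one list of intensities per flash (ambient already removed).
    walk_directions: +1 if that flash is left of the lens, -1 if right,
                     i.e. the direction pointing away from the flash.
    """
    n = len(flash_scanlines[0])
    # The pixel-wise maximum approximates an image without flash shadows.
    i_max = [max(img[p] for img in flash_scanlines) for p in range(n)]
    edges = set()
    for img, step in zip(flash_scanlines, walk_directions):
        # Ratio is ~1 where lit and ~0 inside this flash's shadow; albedo cancels.
        ratio = [img[p] / i_max[p] if i_max[p] > 0 else 0.0 for p in range(n)]
        order = range(n) if step > 0 else range(n - 1, -1, -1)
        prev = None
        for p in order:
            # Entering a shadow while walking away from the flash is a negative step;
            # the last lit pixel before it lies on the occluding silhouette.
            if prev is not None and ratio[prev] - ratio[p] > threshold:
                edges.add(prev)
            prev = p
    return sorted(edges)


# Background of albedo 0.5 (darker texture at pixel 9); object on pixels 3..6.
left = [0.5, 0.5, 0.5, 0.8, 0.8, 0.8, 0.8, 0.05, 0.05, 0.2]   # shadow right of object
right = [0.5, 0.05, 0.05, 0.8, 0.8, 0.8, 0.8, 0.5, 0.5, 0.2]  # shadow left of object
print(detect_depth_edges([left, right], [+1, -1]))  # -> [3, 6]
```

The texture change at pixel 9 appears in both flash images, so its ratio stays near 1 and it is not reported as a depth edge.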

- 6. Non-photorealistic Camera (2004). Texture de-emphasized rendering. We present a non-photorealistic rendering approach to capture and convey shape features of real-world scenes. We use a camera with multiple flashes that are strategically positioned to cast shadows along depth discontinuities in the scene. The projective-geometric relationship of the camera-flash setup is then exploited to detect depth discontinuities and distinguish them from intensity edges due to material discontinuities. We introduce depiction methods that use the detected edge features to generate stylized static and animated images. We can highlight the detected features, suppress unnecessary details or combine features from multiple images. The resulting images more clearly convey the 3D structure of the imaged scenes. Our approach to capturing geometric features of a scene is very different from traditional approaches that require reconstructing a 3D model, and it yields a method that is both surprisingly simple and computationally efficient. The entire hardware/software setup can conceivably be packaged into a self-contained device no larger than existing digital cameras. Paper: Non-photorealistic Camera: Depth Edge Detection and Stylized Rendering using Multi-Flash Imaging. R Raskar, K Tan, R Feris, J Yu, M Turk. SIGGRAPH 2004. Figure captions: color assignment; (a) attenuation map, (b) attenuated image, (c) colored edges on de-emphasized texture.

- 7. Qualitative Depth (2005). We use a single multi-flash camera to derive a qualitative depth map based on two important measurements: the shadow width, which encodes relative object distances, and the sign of each depth edge pixel, which indicates which side of the edge is foreground and which is background. Based on these measurements, we create a depth gradient field and integrate it by solving a Poisson equation. The resulting map effectively segments objects in the scene, providing depth-order relations. Paper: Discontinuity preserving stereo with small baseline multi-flash illumination. Feris, R.; Raskar, R.; Chen, L.; Tan, K.-H.; Turk, M. International Conference on Computer Vision (ICCV), 2005.
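
The integration step of the qualitative depth entry can be sketched as a least-squares solve of the discrete Poisson equation using Gauss-Seidel sweeps. This is a minimal sketch, assuming the depth gradient field has already been built; constructing it from shadow widths and edge signs is not shown, and here it is synthesized from a known toy depth map so the result can be checked. `integrate_gradients` is a hypothetical helper; a practical solver on full-resolution images would use a multigrid or FFT-based method.

```python
def integrate_gradients(gx, gy, iterations=500):
    """Least-squares integration of a gradient field (discrete Poisson equation
    with Neumann boundaries), solved with Gauss-Seidel sweeps.

    gx[y][x] approximates d[y][x + 1] - d[y][x]  (h rows of w - 1 values)
    gy[y][x] approximates d[y + 1][x] - d[y][x]  (h - 1 rows of w values)
    The result is defined up to a constant; it is shifted so d[0][0] == 0.
    """
    h, w = len(gx), len(gx[0]) + 1
    d = [[0.0] * w for _ in range(h)]
    for _ in range(iterations):
        for y in range(h):
            for x in range(w):
                total, count = 0.0, 0
                # Each neighbour predicts d[y][x] from its own value and the target difference.
                if x + 1 < w:
                    total += d[y][x + 1] - gx[y][x]
                    count += 1
                if x > 0:
                    total += d[y][x - 1] + gx[y][x - 1]
                    count += 1
                if y + 1 < h:
                    total += d[y + 1][x] - gy[y][x]
                    count += 1
                if y > 0:
                    total += d[y - 1][x] + gy[y - 1][x]
                    count += 1
                if count:
                    d[y][x] = total / count  # in-place update makes this Gauss-Seidel
    offset = d[0][0]
    return [[v - offset for v in row] for row in d]


# Toy scene: a sloped background with a box-shaped depth step on it.
h, w = 5, 5
depth = [[0.1 * x + (1.0 if 2 <= y <= 3 and 2 <= x <= 3 else 0.0) for x in range(w)]
         for y in range(h)]
gx = [[depth[y][x + 1] - depth[y][x] for x in range(w - 1)] for y in range(h)]
gy = [[depth[y + 1][x] - depth[y][x] for x in range(w)] for y in range(h - 1)]
for row in integrate_gradients(gx, gy):
    print([round(v, 3) for v in row])
```

Because the toy gradients are exactly integrable, the recovered map matches the original up to the fixed offset; with a gradient field estimated from shadows, the same solve returns the closest integrable depth map in the least-squares sense.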

- 8. Flash and Ambient Images (2005). Flash images are known to suffer from several problems: saturation of nearby objects, poor illumination of distant objects, reflections of objects strongly lit by the flash, and strong highlights caused by glossy surfaces reflecting the flash itself. We propose to use a flash and no-flash (ambient) image pair to produce better flash images. We present a novel gradient projection scheme based on a gradient coherence model that allows removal of reflections and highlights from flash images. We also present a brightness-ratio based algorithm that compensates for the falloff in flash image brightness with depth. In several practical scenarios, the quality of flash/no-flash images may be limited by dynamic range. In such cases, we advocate using several images taken under different flash intensities and exposures. We analyze the flash intensity-exposure space and propose a method for adaptively sampling this space so as to minimize the number of captured images for any given scene. We present several experimental results that demonstrate the ability of our algorithms to produce improved flash images. Paper: Removing Photography Artifacts Using Gradient Projection and Flash-Exposure Sampling. A Agrawal, R Raskar, S Nayar, Y Li. SIGGRAPH 2005.
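
The gradient projection idea in the flash/ambient entry rests on a simple per-pixel vector operation: projecting one image's gradient onto the orientation of the other's, so that structure present in both survives and components seen in only one image are isolated. The snippet below is a minimal sketch of that operation only; the function names are hypothetical, which image supplies the reference orientation depends on the artifact being removed, and the projected gradient field would then have to be reintegrated (for example with a Poisson solver) to obtain an image.

```python
def project(a, b):
    """Component of 2D vector a along the direction of 2D vector b."""
    bx, by = b
    norm2 = bx * bx + by * by
    if norm2 == 0.0:
        return (0.0, 0.0)  # no reference orientation at this pixel
    s = (a[0] * bx + a[1] * by) / norm2
    return (s * bx, s * by)


def split_gradient(flash_grad, ambient_grad):
    """Split a flash-image gradient into the part coherent with the ambient
    gradient's orientation and the orthogonal residual (candidate artifact)."""
    coherent = project(flash_grad, ambient_grad)
    residual = (flash_grad[0] - coherent[0], flash_grad[1] - coherent[1])
    return coherent, residual


# A highlight adds a vertical gradient that the ambient image does not have.
print(split_gradient(flash_grad=(3.0, 4.0), ambient_grad=(2.0, 0.0)))
# -> ((3.0, 0.0), (0.0, 4.0))
```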

- 9. Image Refocusing (2007). Figure panels: input blurred image; sharpened in Photoshop; input blurred image; refocused image. An Encoded Blur Camera, i.e., one with a mask in the aperture, can preserve high spatial frequencies in the defocus blur. Notice the glint in the eye. In the misfocused photo on the left, the bright spot appears blurred with the bokeh of the chosen aperture (shown in the inset). In the deblurred result on the right, the details of the eye are correctly recovered. Figure panels: refocused image on person; captured blurred photo. Paper: Dappled Photography: Mask Enhanced Cameras for Heterodyned Light Fields and Coded Aperture Refocusing. Ashok Veeraraghavan, Ramesh Raskar, Amit Agrawal, Ankit Mohan and Jack Tumblin. ACM SIGGRAPH 2007.
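
Why a mask in the aperture helps deblurring can be illustrated in 1D: deconvolution divides by the blur's frequency response, so any frequency at which that response is zero is lost for good. This minimal sketch, assuming a toy 1D slice of the aperture and a hypothetical 4-element binary mask (not the mask used in the paper), compares the spectrum of an open aperture with that of a coded one.

```python
import cmath


def dft_magnitudes(kernel, n):
    """|DFT| of a 1D blur kernel zero-padded to length n."""
    padded = list(kernel) + [0.0] * (n - len(kernel))
    return [
        abs(sum(v * cmath.exp(-2j * cmath.pi * k * t / n) for t, v in enumerate(padded)))
        for k in range(n)
    ]


open_aperture = [1, 1, 1, 1]   # fully open slit: a box-shaped defocus blur
coded_aperture = [1, 1, 0, 1]  # hypothetical binary mask pattern

for name, kernel in [("open", open_aperture), ("coded", coded_aperture)]:
    print(name, round(min(dft_magnitudes(kernel, 16)), 4))
```

The box kernel has exact spectral zeros (at the nonzero multiples of n/4 here), while the coded kernel's response, the polynomial 1 + z + z³ evaluated on the unit circle, has no roots there, so every frequency remains recoverable. The price is that the mask blocks a quarter of the light.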

- 10. The Poor Man's Palace (2005). What are the potential great challenges and research topics in interactive computer graphics? Today, great advances in photorealistic image synthesis allow us to enjoy special effects, but they remain on flat screens in movies and video games. In the future, will special effects have some bearing on the daily life of an average person? Can we develop new computer graphics techniques and algorithms, as well as capture, interface and display devices, that will empower humans every second of their lives? We must strive to bring those special effects into the real world. The challenge in bringing those visual effects into the real world is to make the experience aesthetic, seamless and natural. One may classify this problem as a futuristic 'Augmented Reality', and the VR and AR community has made big strides in solving pieces of the puzzle. Recently we have seen a range of practical solutions using Spatially Augmented Reality (SAR). In place of eye-worn or hand-held displays, SAR methods exploit video projectors, cameras, radio frequency tags such as RFID, large optical elements, holograms and tracking technologies. So far, SAR research has taken only baby steps in supporting programmable reflectance, virtual illumination, synthetic motion and untethered interaction. Emerging display technologies, innovations in sensors and advances in materials science have the potential to enable broader applications. But the next big challenge for computer graphics and HCI is to exploit these innovations and deliver daily benefits to the common man through a powerful infusion of synthetic elements into the real world.