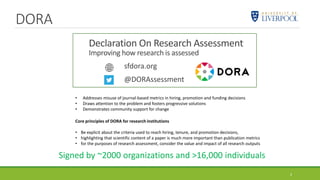

The document discusses the San Francisco Declaration on Research Assessment (DORA), an initiative that aims to improve research assessment by addressing the misuse of publication metrics in hiring and funding decisions. It stresses combining a range of metrics with expert judgment rather than relying solely on traditional bibliometrics such as journal impact factors. It also calls for a cultural shift within research institutions toward valuing research quality over quantity, supported by responsible metrics practices and more inclusive assessment approaches.