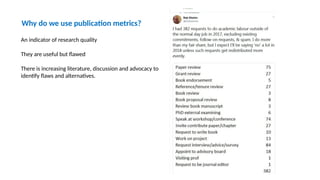

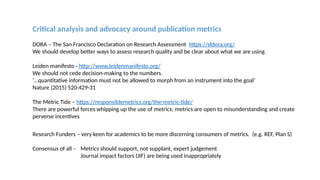

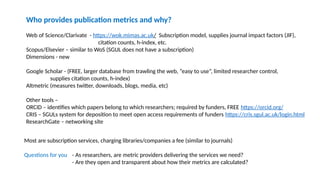

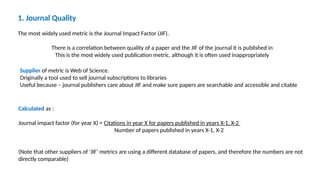

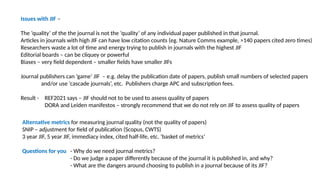

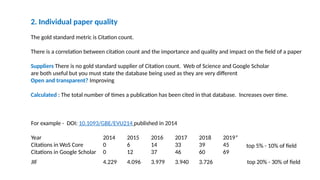

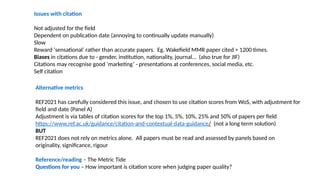

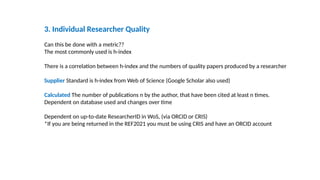

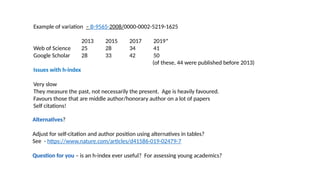

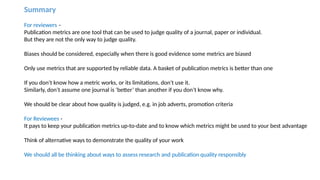

The session covers the responsible use of publication metrics when assessing research quality, particularly in hiring and grant review. It highlights the flaws and limitations of popular metrics such as the journal impact factor (JIF), citation counts, and the h-index, and advocates a more discerning, informed approach to applying them. It references key initiatives such as the San Francisco Declaration on Research Assessment (DORA) and the Leiden Manifesto, and urges a balance between quantitative measures and expert judgment.