1. Research instruments are needed to systematically collect and measure the data relevant to the research problem or research questions.

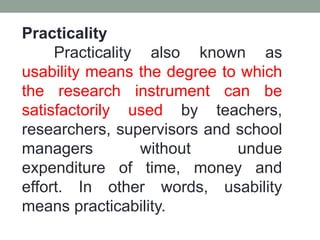

2. The key qualities of a good research instrument are validity, reliability, and usability. Validity is the degree to which an instrument measures what it is intended to measure. Reliability is the degree to which an instrument produces consistent results when it is used repeatedly under the same conditions. Usability is the degree to which an instrument is practical to administer, score, and interpret.

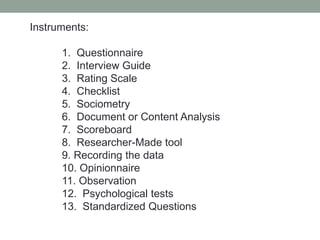

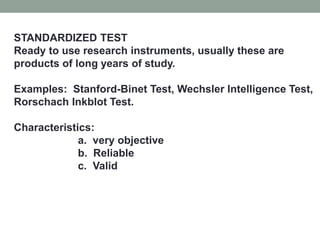

3. Common types of instruments include questionnaires, interviews, checklists, tests, and observations. Quantitative instruments such as questionnaires typically use closed-ended questions, whereas qualitative instruments such as interviews use open-ended questions. Standardized tests are published instruments whose validity and reliability have been established over time, whereas researcher-made instruments must be validated before use.