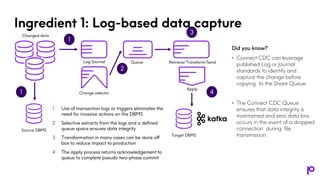

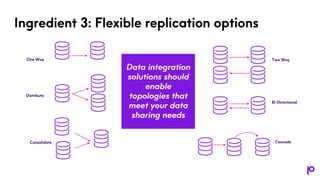

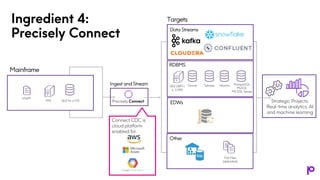

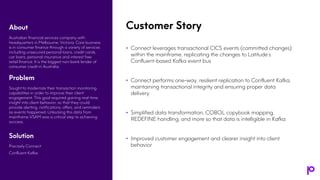

The document outlines strategies for transforming mainframe data for the cloud with Apache Kafka, focusing on three areas: application transformation, cloud connectivity, and real-time data pipelines. It identifies three key ingredients for a successful migration: log-based data capture, flexible replication options, and resilient data delivery. A case study describes how an Australian financial services company improved client engagement by modernizing its transaction monitoring, integrating Kafka to deliver real-time insights.