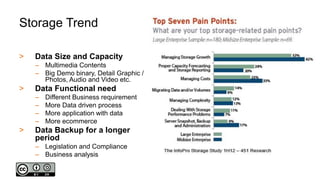

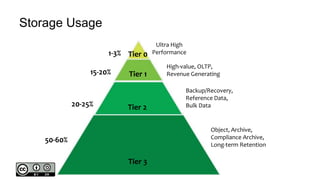

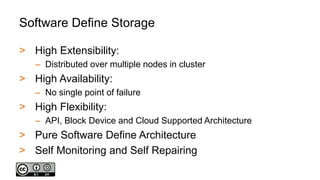

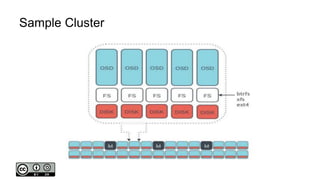

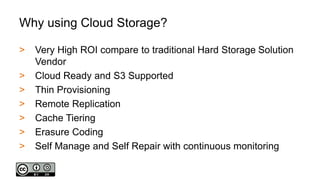

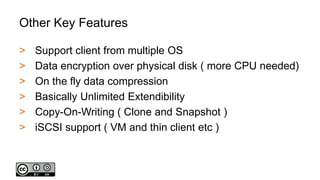

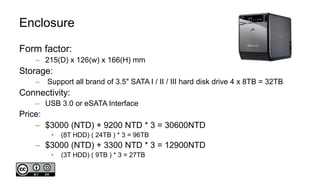

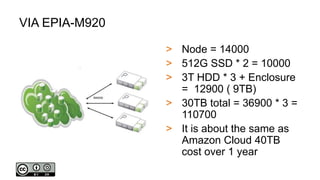

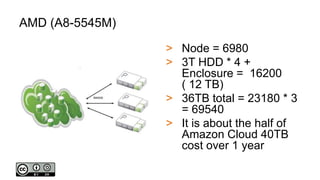

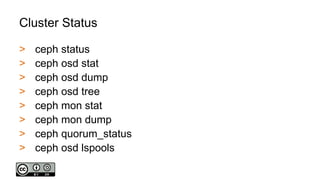

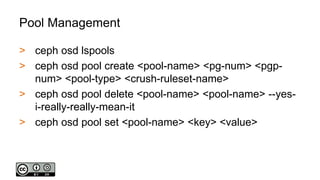

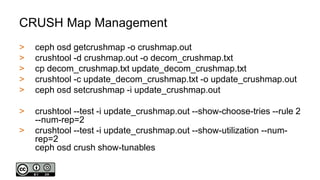

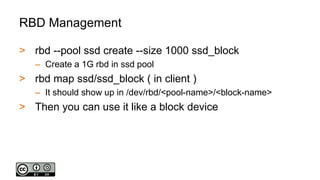

This document introduces cloud storage: the trends driving increased data storage needs, how cloud storage is tiered and used, how software-defined storage works, and the advantages of using cloud storage. It also presents example hardware configurations and costs for setting up a small private cloud storage cluster using Ceph, along with basic management of the Ceph cluster, its pools, and RBD block storage. The demonstration configures a 3-node Ceph cluster on inexpensive hardware that can provide over 30 TB of storage for roughly the same cost as, or as little as half the cost of, one year of a commercial cloud storage service.