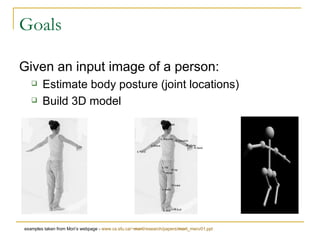

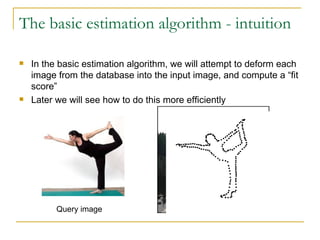

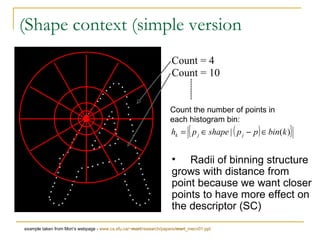

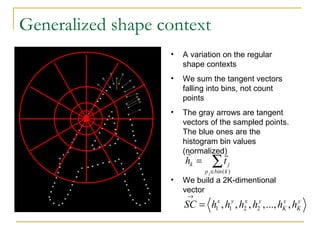

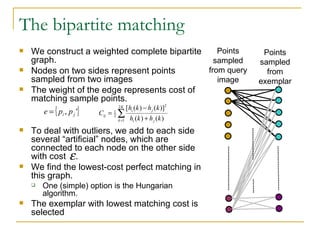

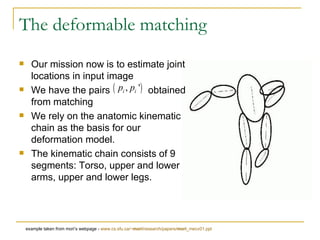

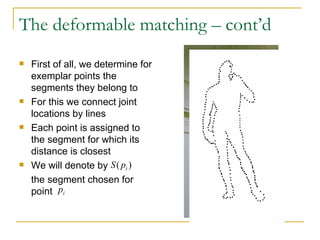

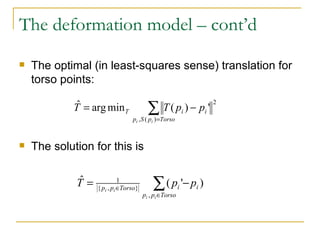

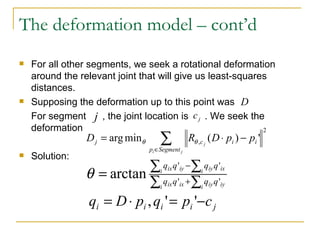

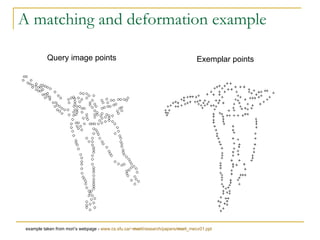

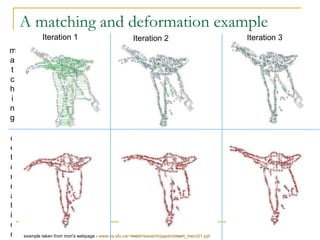

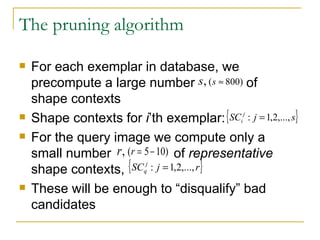

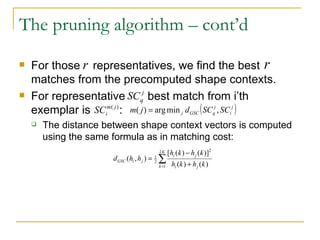

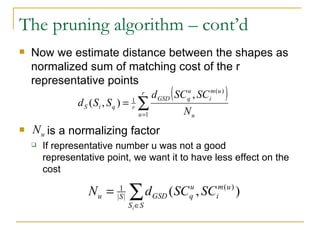

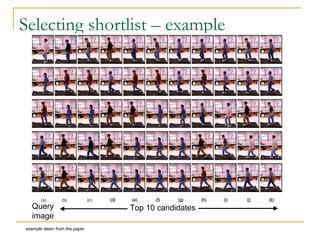

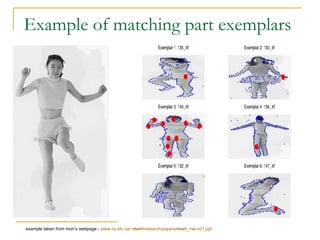

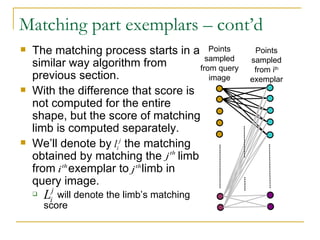

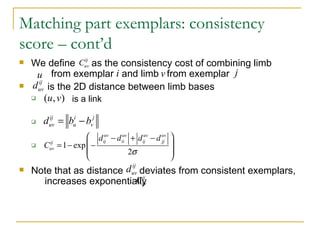

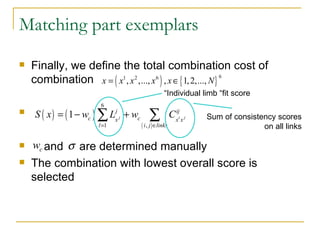

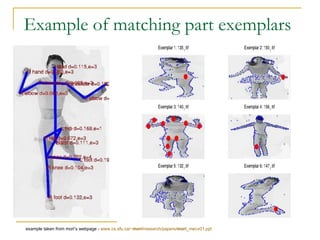

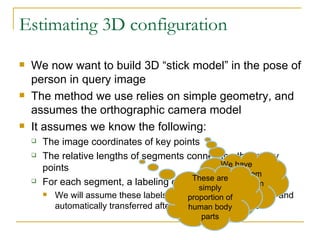

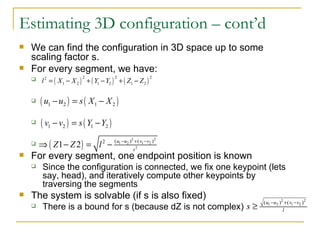

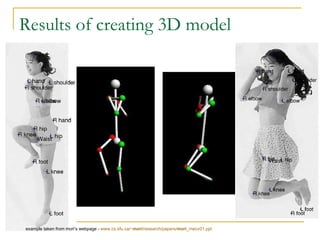

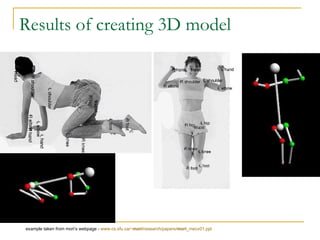

The document summarizes a method for estimating 3D human body pose from a single image. The method compares the input image against a database of example images with labeled joint locations. It uses shape context descriptors to perform this matching and estimates the joint locations in the input through an iterative deformation process. Two steps are key. First, shape contexts allow the set of candidate examples to be pruned quickly when matching against a large database. Second, body parts are matched independently, possibly to different examples, and then assembled into a full pose.
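The descriptor, pruning, and part-assembly steps can be sketched in a few lines of Python. This is a minimal, pure-Python illustration built on stated assumptions. It is not the method from the slides. It uses the common shape context setup: 5 log-radius × 12 angle bins spanning 1/8 to 2 times the mean pairwise point distance, compared with a chi-squared cost. It prunes the database with a "representative shape contexts" style shortlist, in which a few sampled query descriptors are each matched to their nearest exemplar descriptor. It then assembles a pose by picking the lowest-cost exemplar for each body part. The function names, parameters, and toy shapes are hypothetical, and the iterative deformation that transfers joint locations is not shown.

```python
import math
import random
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]
Descriptor = List[float]


def shape_contexts(points: Sequence[Point], n_r: int = 5, n_theta: int = 12,
                   r_inner: float = 0.125, r_outer: float = 2.0) -> List[Descriptor]:
    """One normalized log-polar histogram per point (hypothetical parameters)."""
    pts = list(points)
    # Normalize radii by the mean pairwise distance so descriptors are scale-invariant.
    dists = [math.dist(p, q) for i, p in enumerate(pts) for q in pts[i + 1:]]
    mean_d = (sum(dists) / len(dists) if dists else 0.0) or 1.0
    log_in, log_out = math.log(r_inner), math.log(r_outer)
    descs = []
    for i, (xi, yi) in enumerate(pts):
        hist = [0.0] * (n_r * n_theta)
        for j, (xj, yj) in enumerate(pts):
            if i == j:
                continue
            r = math.hypot(xj - xi, yj - yi) / mean_d
            if r == 0.0:
                continue  # skip duplicate points
            lr = math.log(r)
            if not (log_in <= lr < log_out):
                continue  # outside the log-polar window
            r_bin = min(int((lr - log_in) / (log_out - log_in) * n_r), n_r - 1)
            theta = math.atan2(yj - yi, xj - xi) % (2 * math.pi)
            t_bin = int(theta / (2 * math.pi) * n_theta) % n_theta
            hist[r_bin * n_theta + t_bin] += 1.0
        total = sum(hist)
        descs.append([h / total for h in hist] if total else hist)
    return descs


def chi2_cost(g: Descriptor, h: Descriptor) -> float:
    """Chi-squared distance between two normalized histograms."""
    return 0.5 * sum((a - b) ** 2 / (a + b) for a, b in zip(g, h) if a + b > 0)


def prune_candidates(query: List[Descriptor], database: Dict[str, List[Descriptor]],
                     n_samples: int = 5, k: int = 3, seed: int = 0) -> List[str]:
    """Hypothetical shortlist: score exemplars using only a few query descriptors."""
    rng = random.Random(seed)
    sample = rng.sample(query, min(n_samples, len(query)))
    scored = []
    for name, ex_descs in database.items():
        # Each sampled query descriptor is matched to its nearest exemplar descriptor.
        cost = sum(min(chi2_cost(q, e) for e in ex_descs) for q in sample)
        scored.append((cost, name))
    scored.sort()
    return [name for _, name in scored[:k]]


def assemble_pose(part_costs: Dict[str, Dict[str, float]]) -> Dict[str, str]:
    """For each body part, pick the exemplar with the lowest matching cost."""
    return {part: min(costs, key=costs.get) for part, costs in part_costs.items()}


if __name__ == "__main__":
    # Toy "edge point" sets standing in for sampled silhouette points.
    l_shape = [(0.0, 0.0), (0.0, 1.0), (0.0, 2.0), (0.0, 3.0),
               (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
    line = [(float(i), 0.0) for i in range(7)]
    db = {"l_exemplar": shape_contexts(l_shape), "line_exemplar": shape_contexts(line)}
    print(prune_candidates(shape_contexts(l_shape), db, k=1))  # → ['l_exemplar']
```

Only `n_samples` descriptors per query are compared, so the shortlist is cheap to compute. The more expensive full matching and deformation would then run only on the `k` surviving exemplars.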