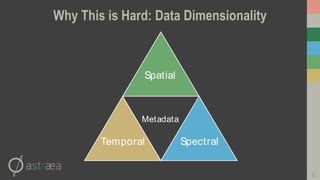

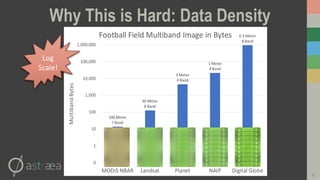

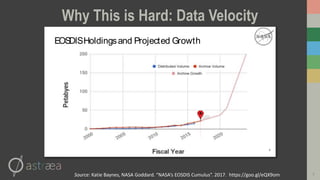

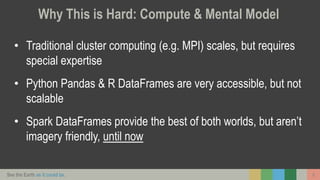

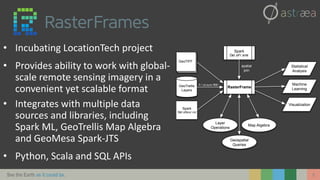

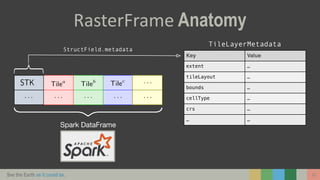

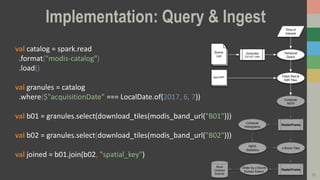

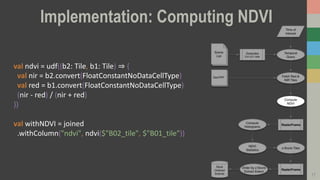

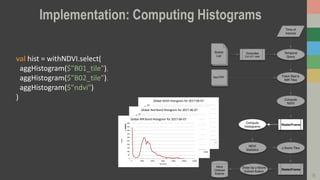

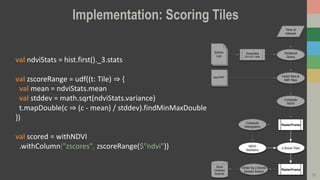

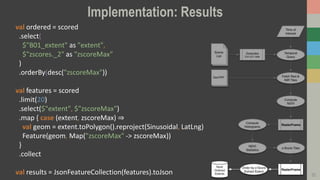

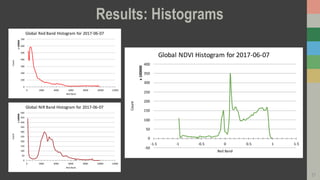

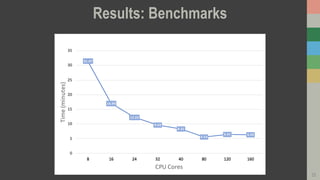

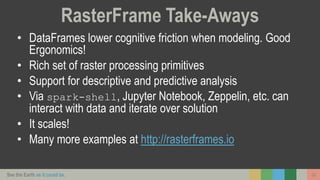

This document introduces RasterFrames, an open-source project that enables global-scale geospatial machine learning. RasterFrames provides scalable tools for working with large remote sensing datasets in a convenient format, and it integrates with Apache Spark, GeoTrellis, and other libraries. The document demonstrates RasterFrames by computing NDVI (Normalized Difference Vegetation Index) values from MODIS data and finding the locations with the highest NDVI worldwide on a given day. Performance benchmarks show that RasterFrames can scale to large datasets across multiple CPU cores.
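To make the NDVI demonstration concrete, here is a minimal sketch in plain Python of the standard formula, NDVI = (NIR - Red) / (NIR + Red), followed by a search for the highest-valued cell. It does not use the RasterFrames API: the function names (`ndvi`, `ndvi_grid`, `argmax_ndvi`) are hypothetical, and the small reflectance grids are made-up illustrative values, not MODIS data.

```python
from typing import List, Optional, Tuple

Grid = List[List[float]]


def ndvi(red: float, nir: float) -> Optional[float]:
    """Standard NDVI for one cell; None where NIR + Red is zero (no data)."""
    total = nir + red
    if total == 0:
        return None
    return (nir - red) / total


def ndvi_grid(red: Grid, nir: Grid) -> List[List[Optional[float]]]:
    """Apply the NDVI formula cell by cell to two equally shaped band grids."""
    return [
        [ndvi(r, n) for r, n in zip(red_row, nir_row)]
        for red_row, nir_row in zip(red, nir)
    ]


def argmax_ndvi(
    values: List[List[Optional[float]]],
) -> Optional[Tuple[int, int, float]]:
    """Return (row, col, value) of the highest NDVI cell, skipping no-data cells."""
    best: Optional[Tuple[int, int, float]] = None
    for i, row in enumerate(values):
        for j, v in enumerate(row):
            if v is not None and (best is None or v > best[2]):
                best = (i, j, v)
    return best


# Illustrative reflectance values only (assumed, not real MODIS measurements).
red = [[0.10, 0.20], [0.05, 0.0]]
nir = [[0.30, 0.25], [0.45, 0.0]]

grid = ndvi_grid(red, nir)
best = argmax_ndvi(grid)
print(best)
```

In a distributed setting the same per-cell arithmetic would be applied tile by tile, with the per-tile maxima then reduced to a single global maximum; the sketch above only shows the single-grid case.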