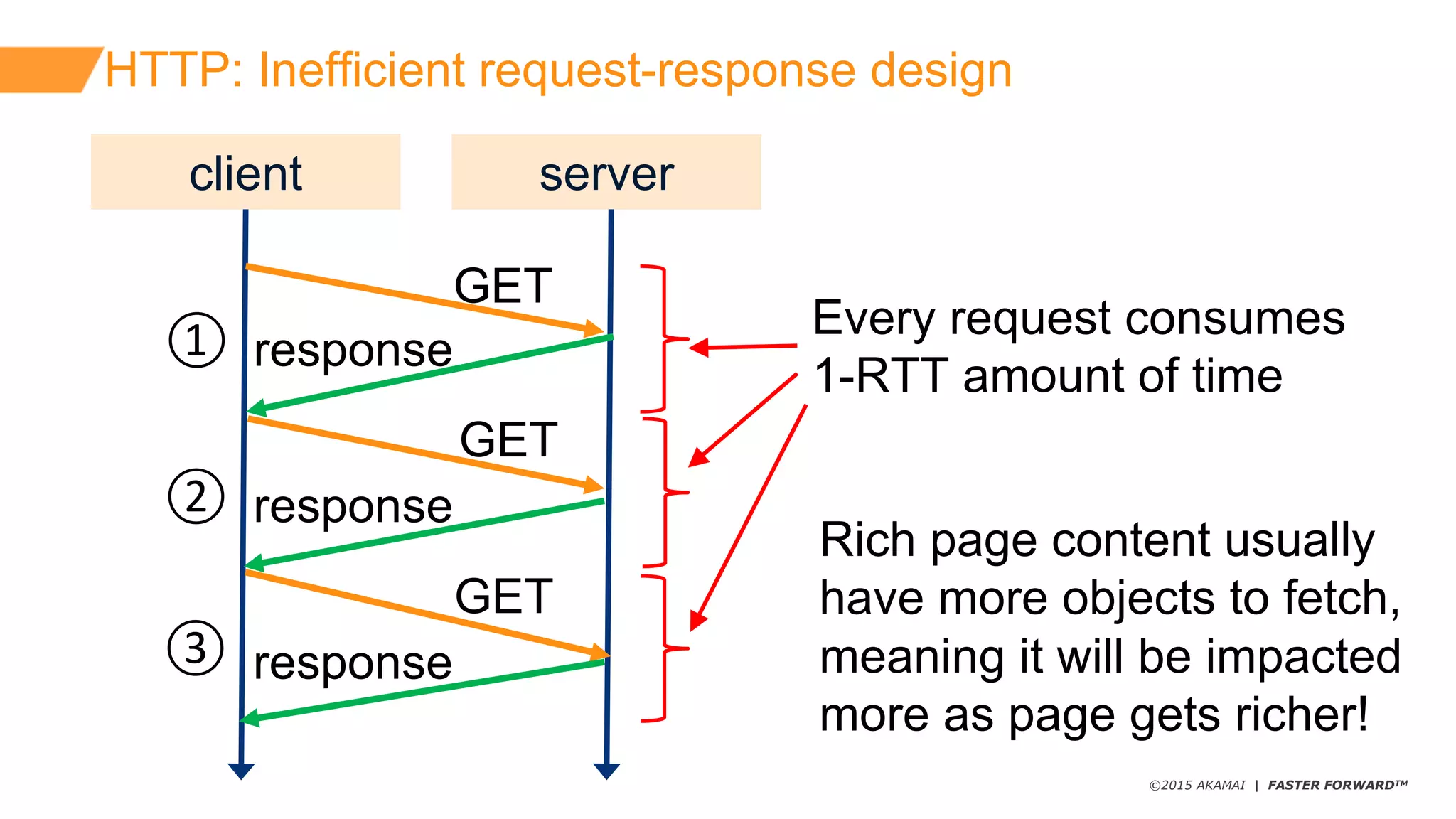

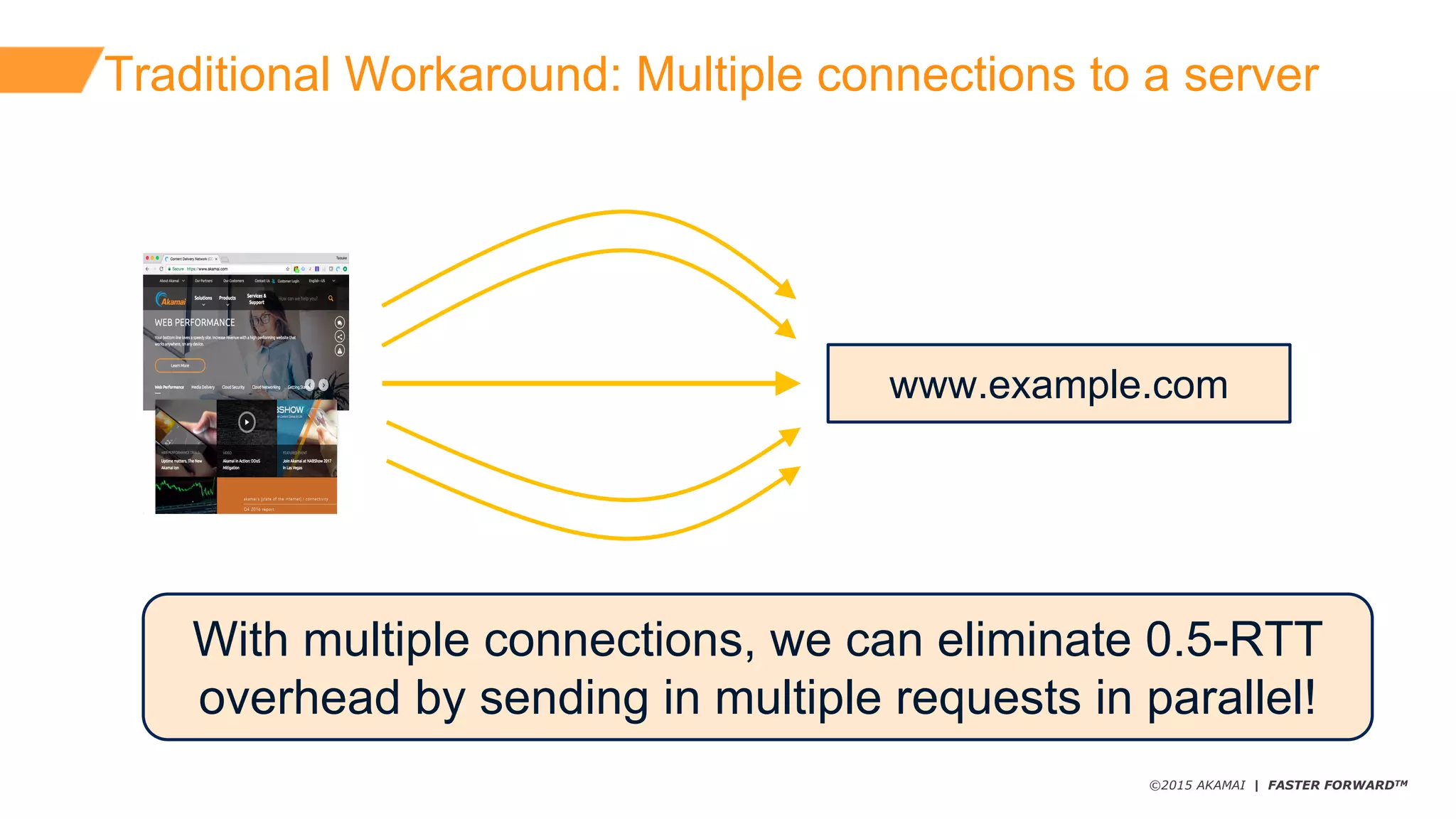

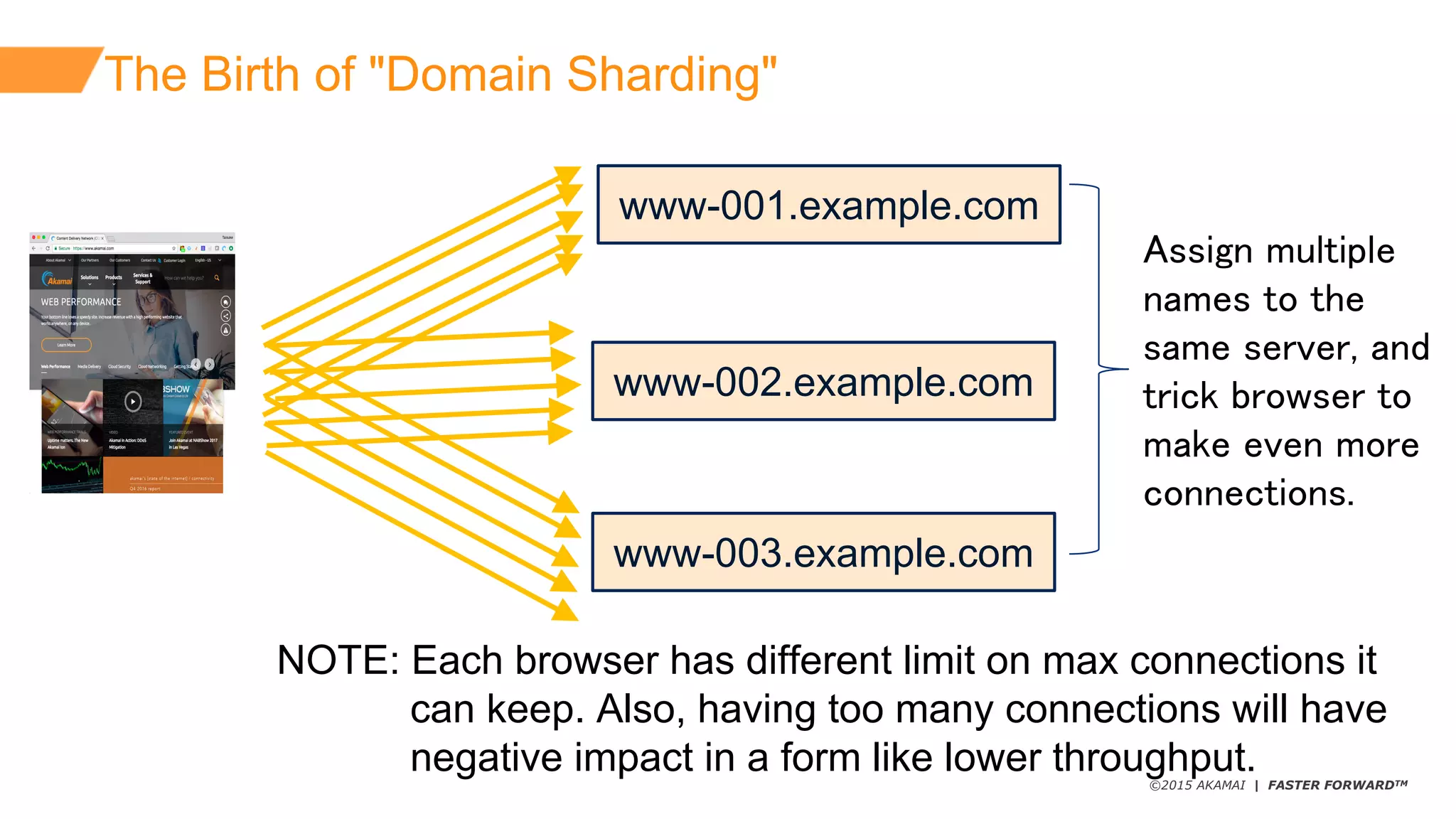

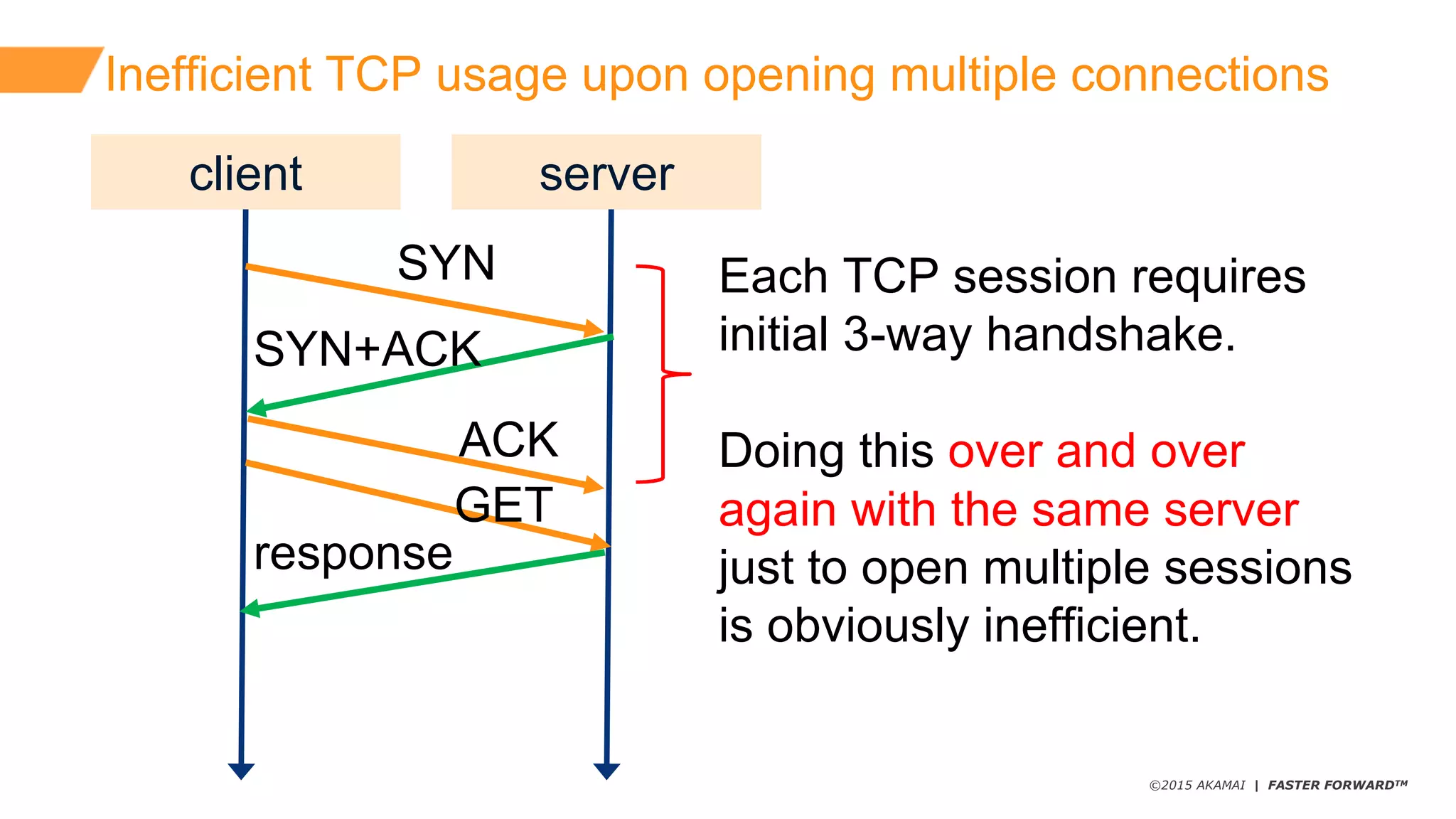

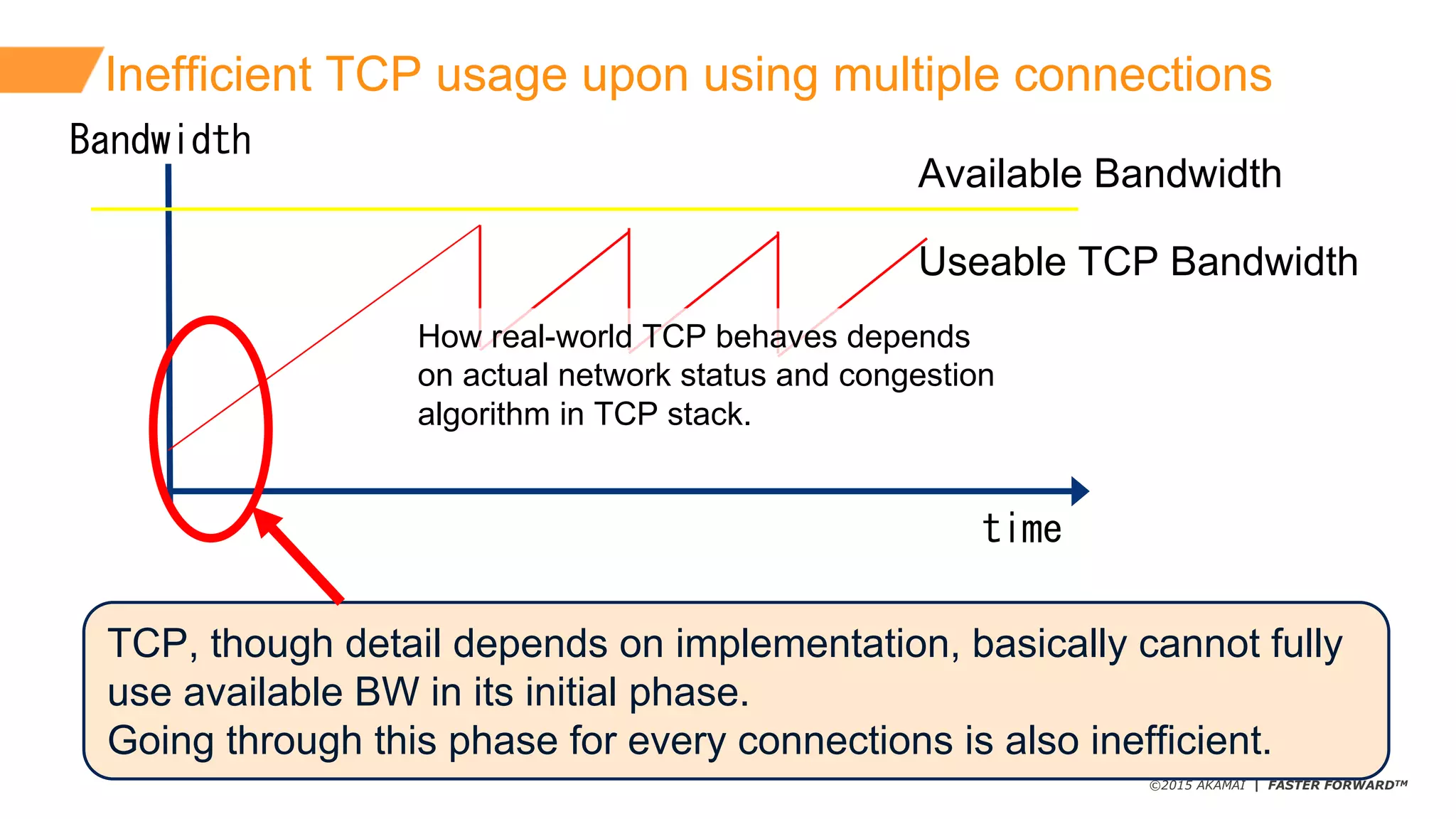

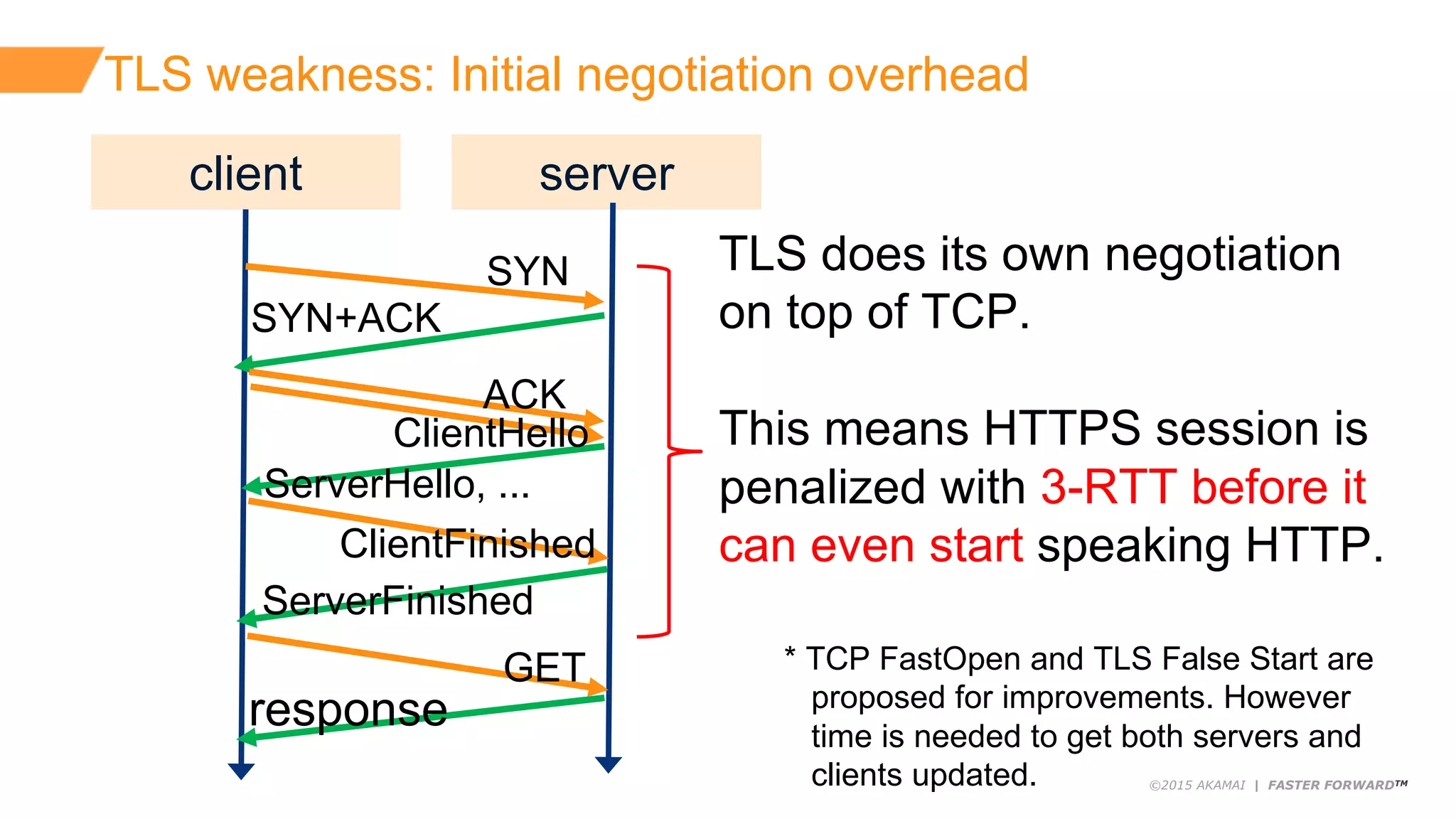

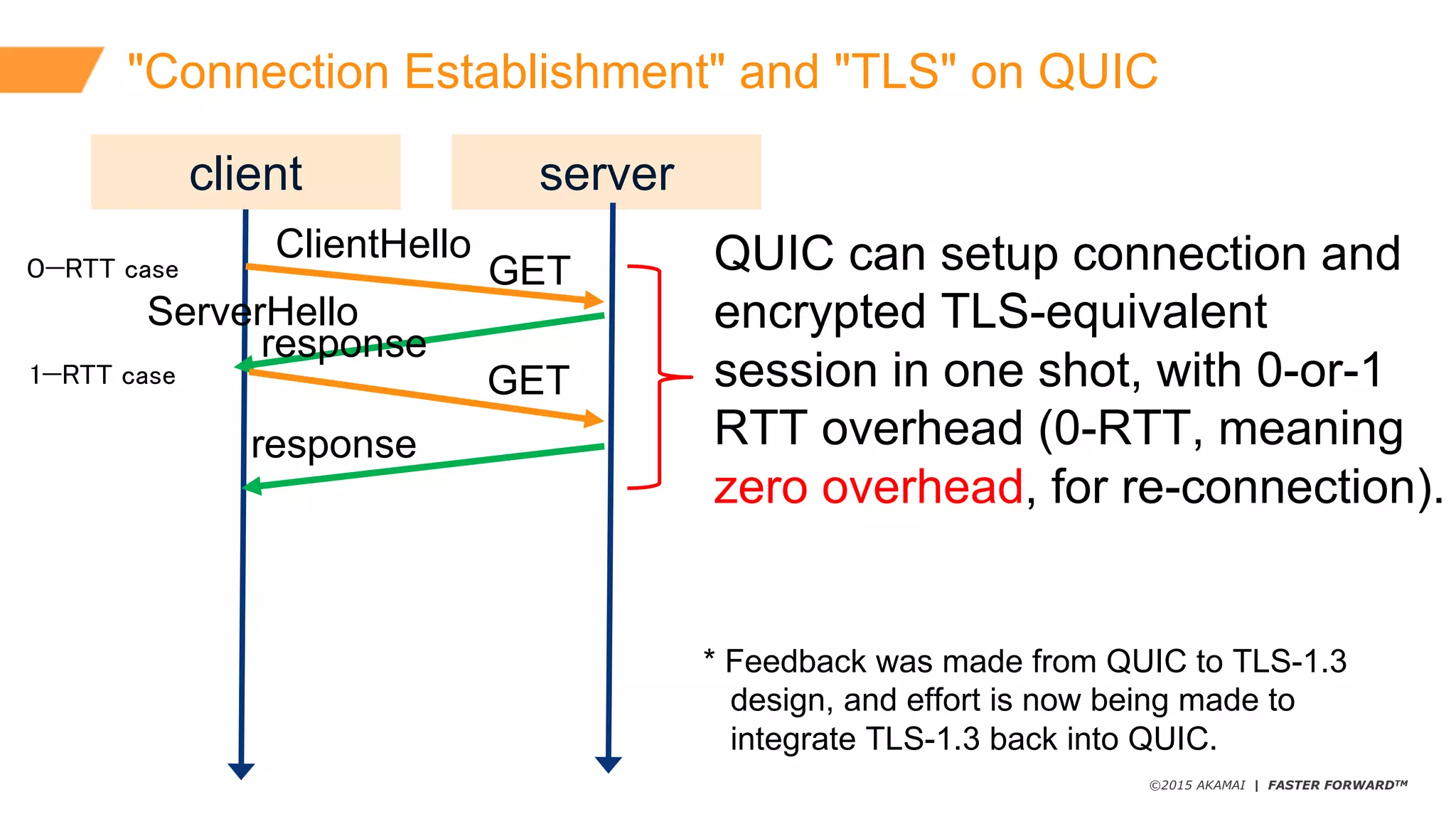

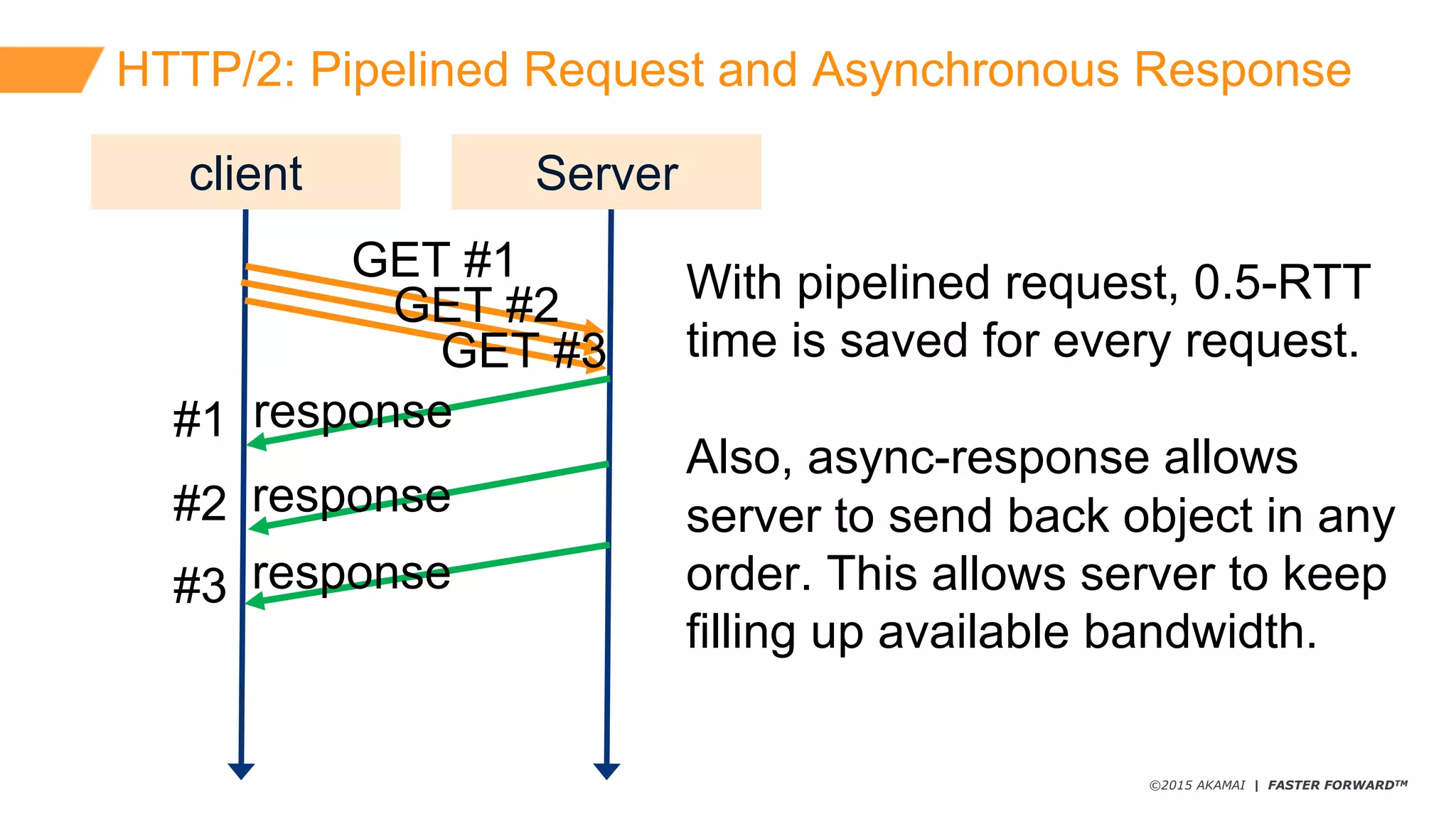

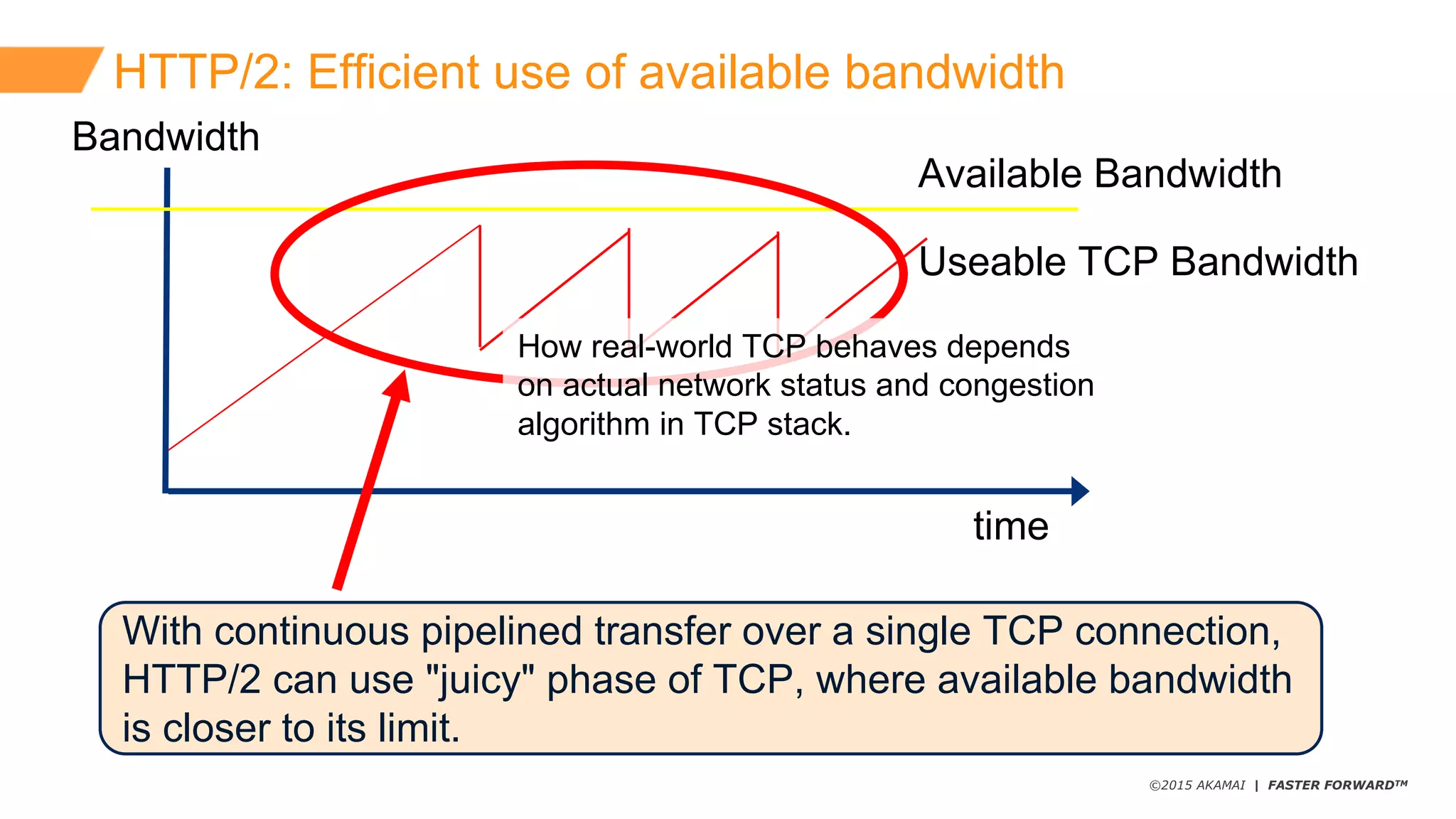

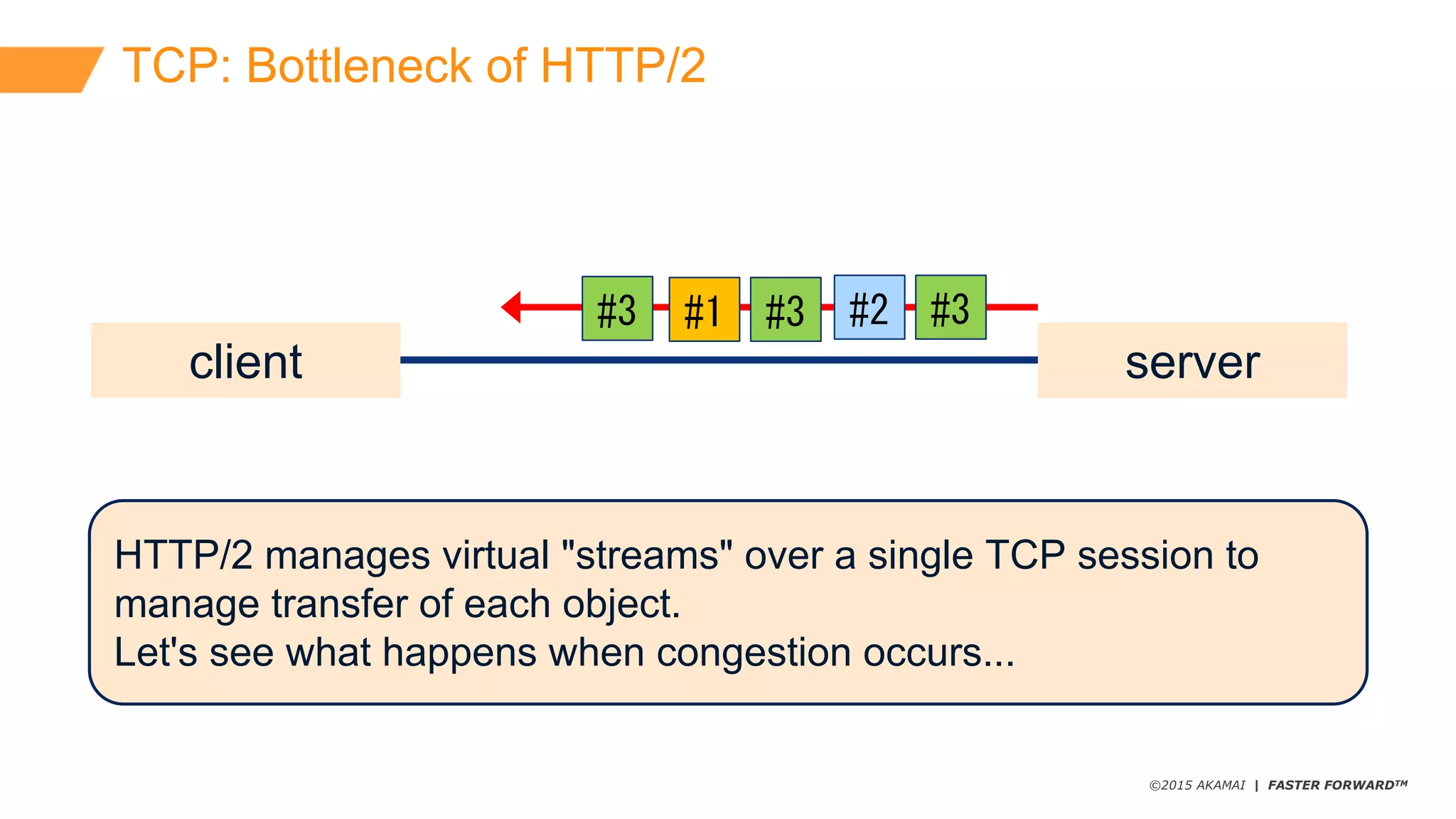

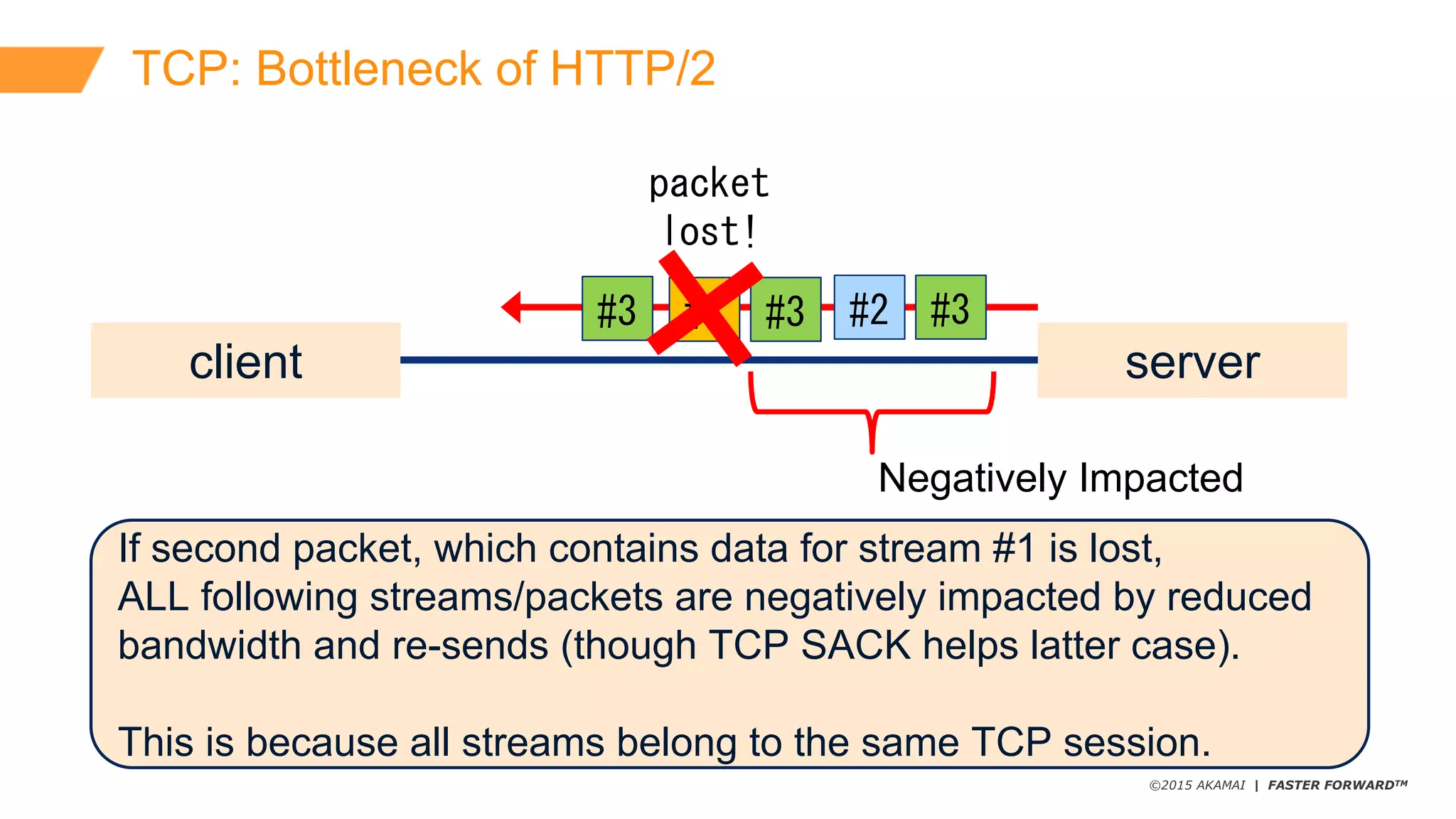

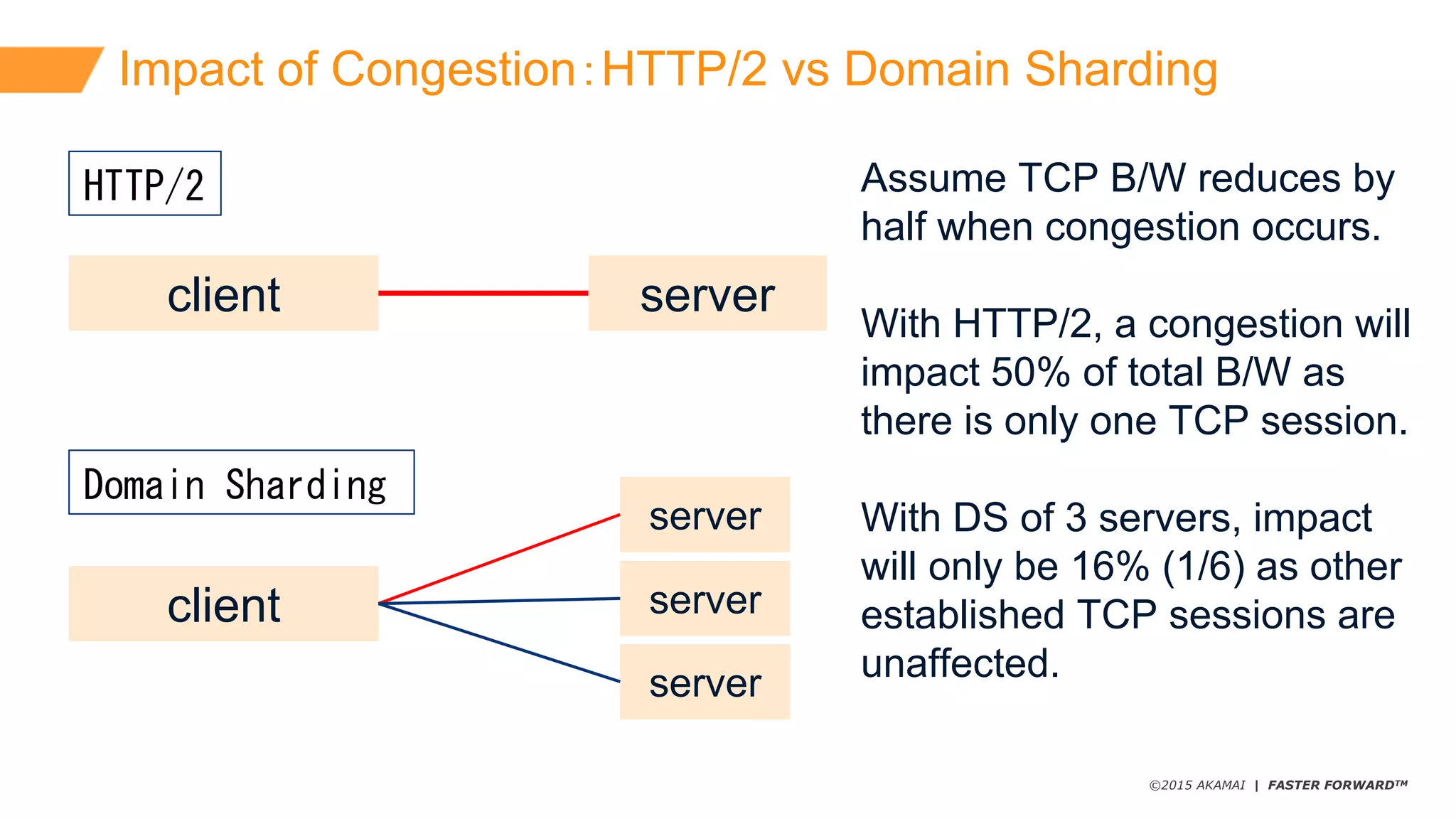

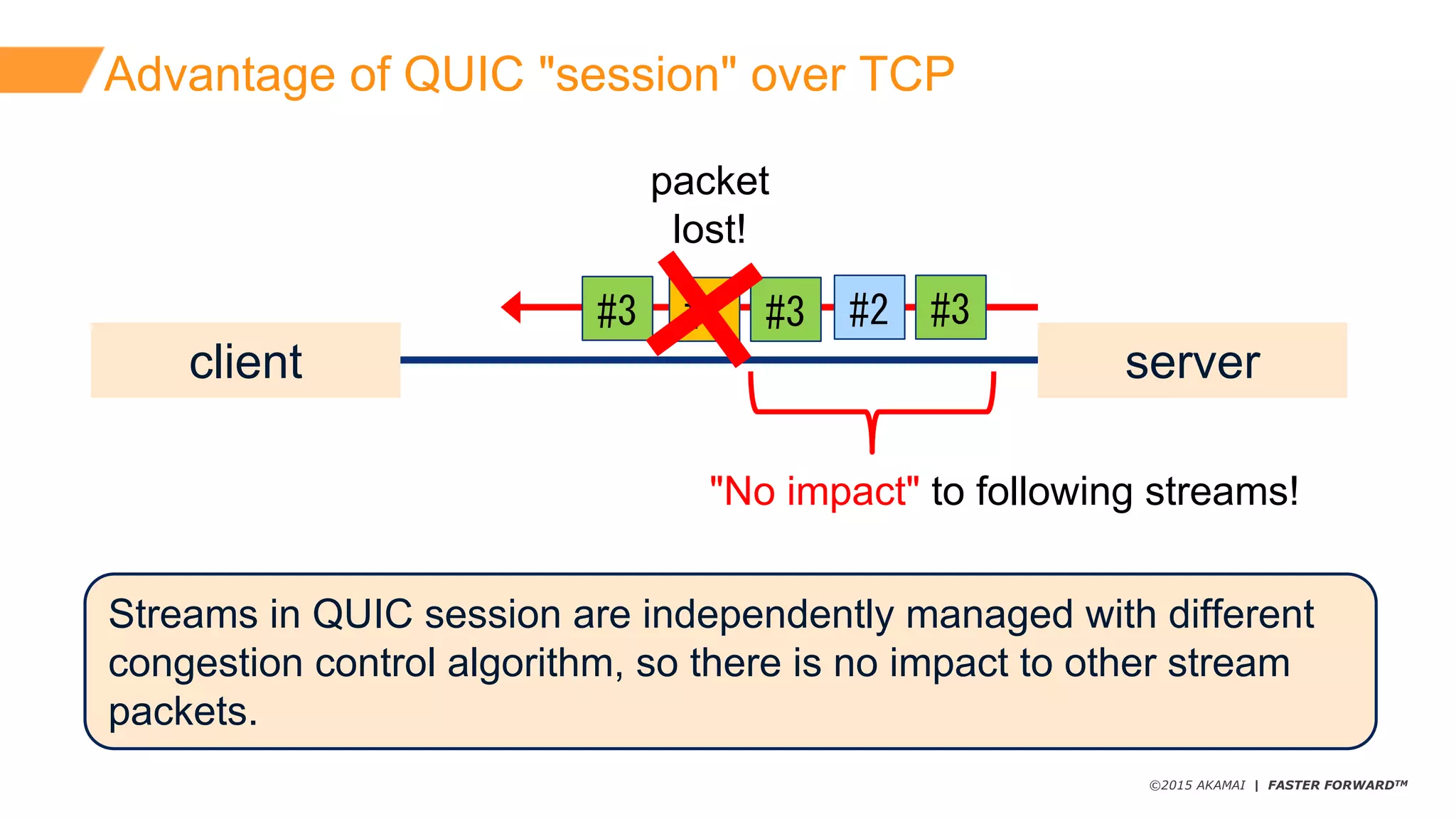

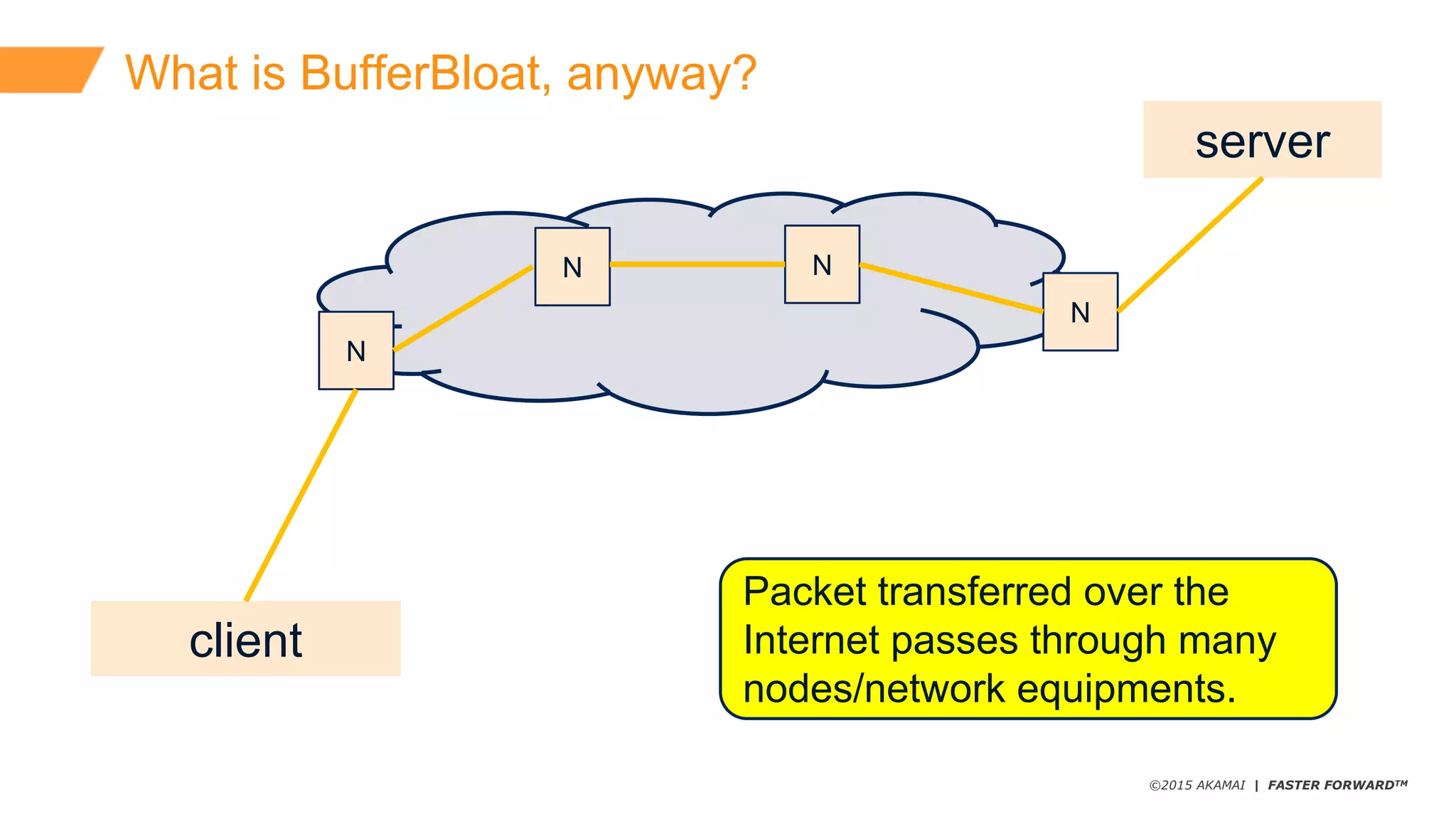

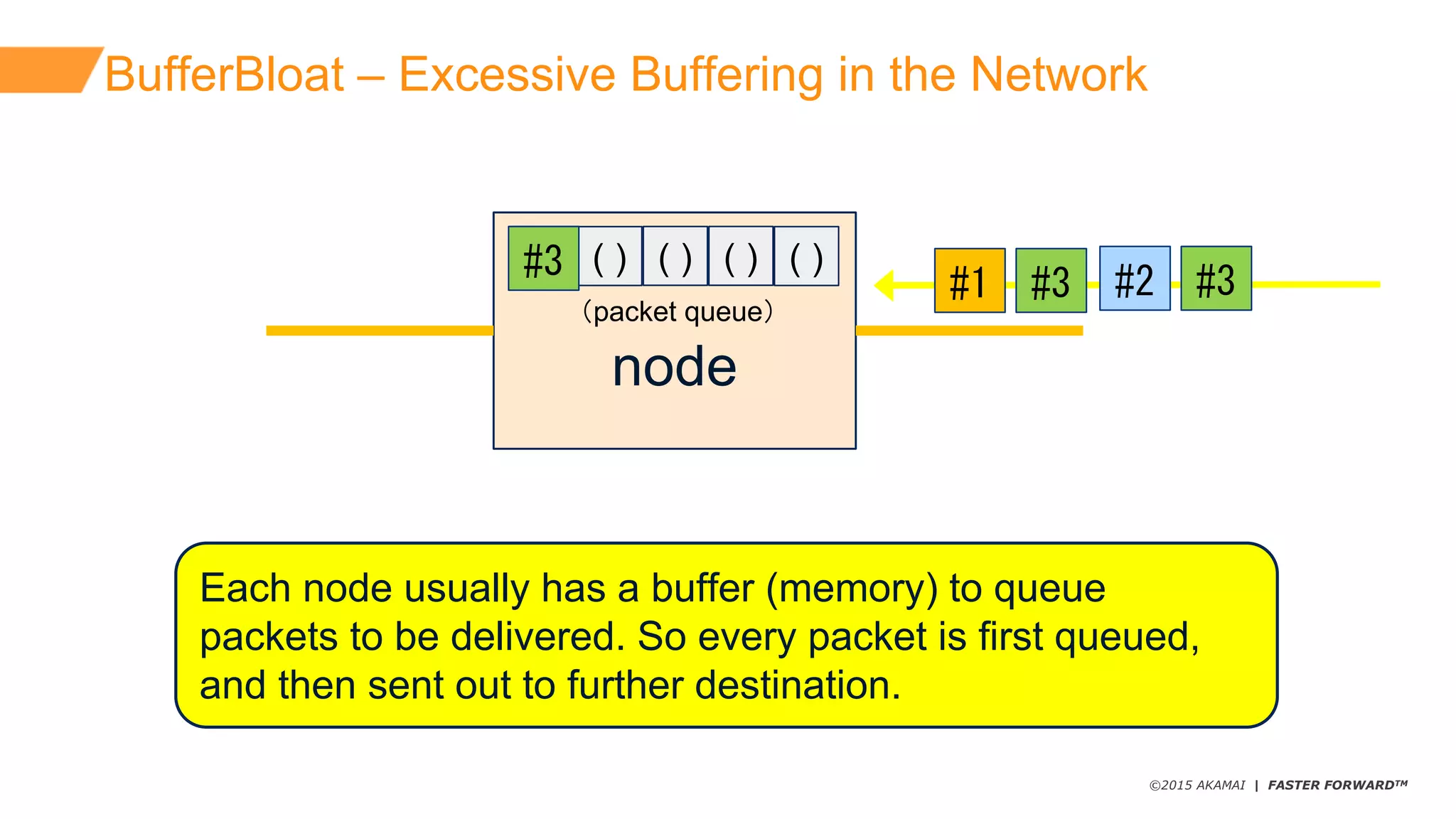

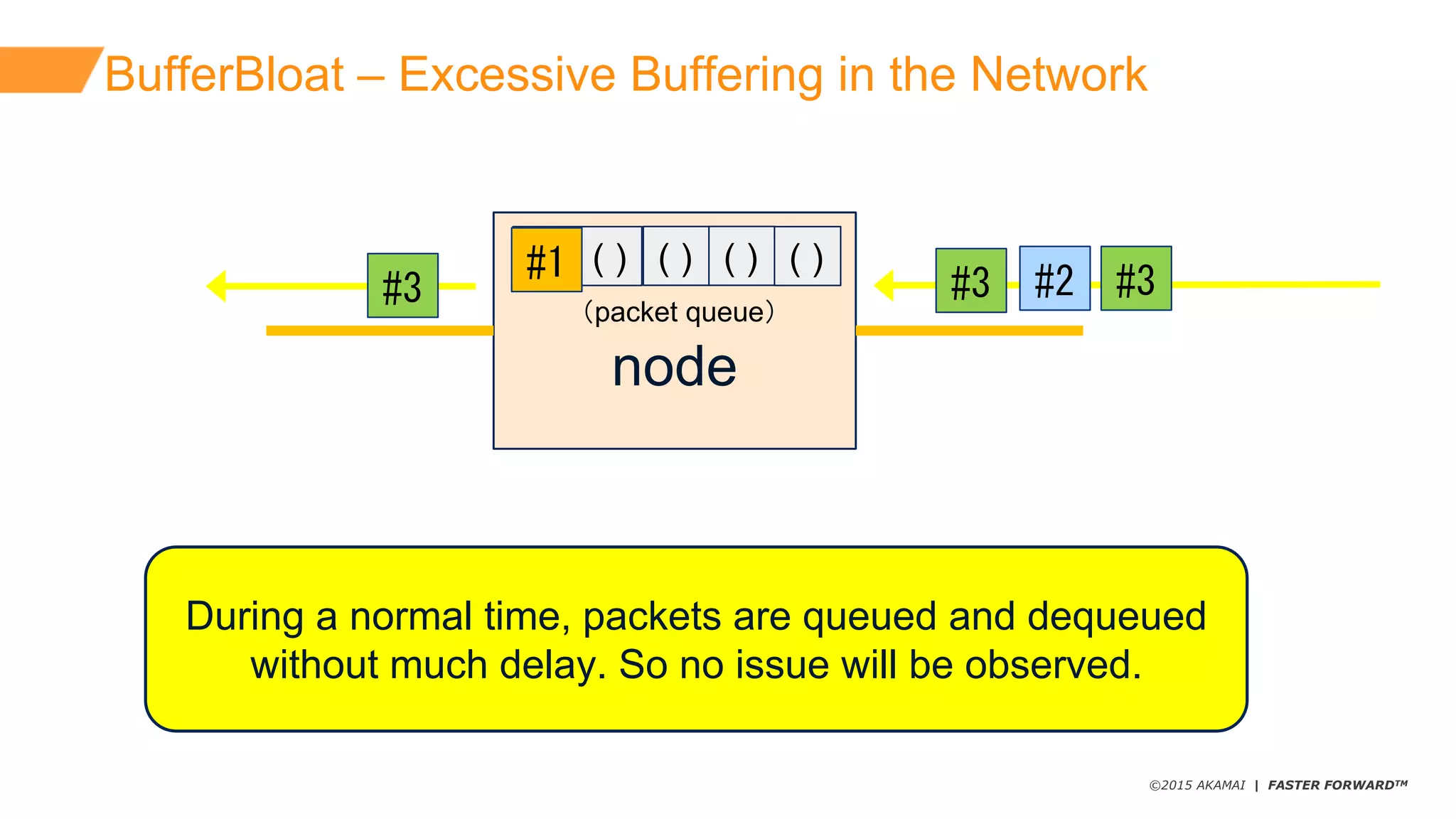

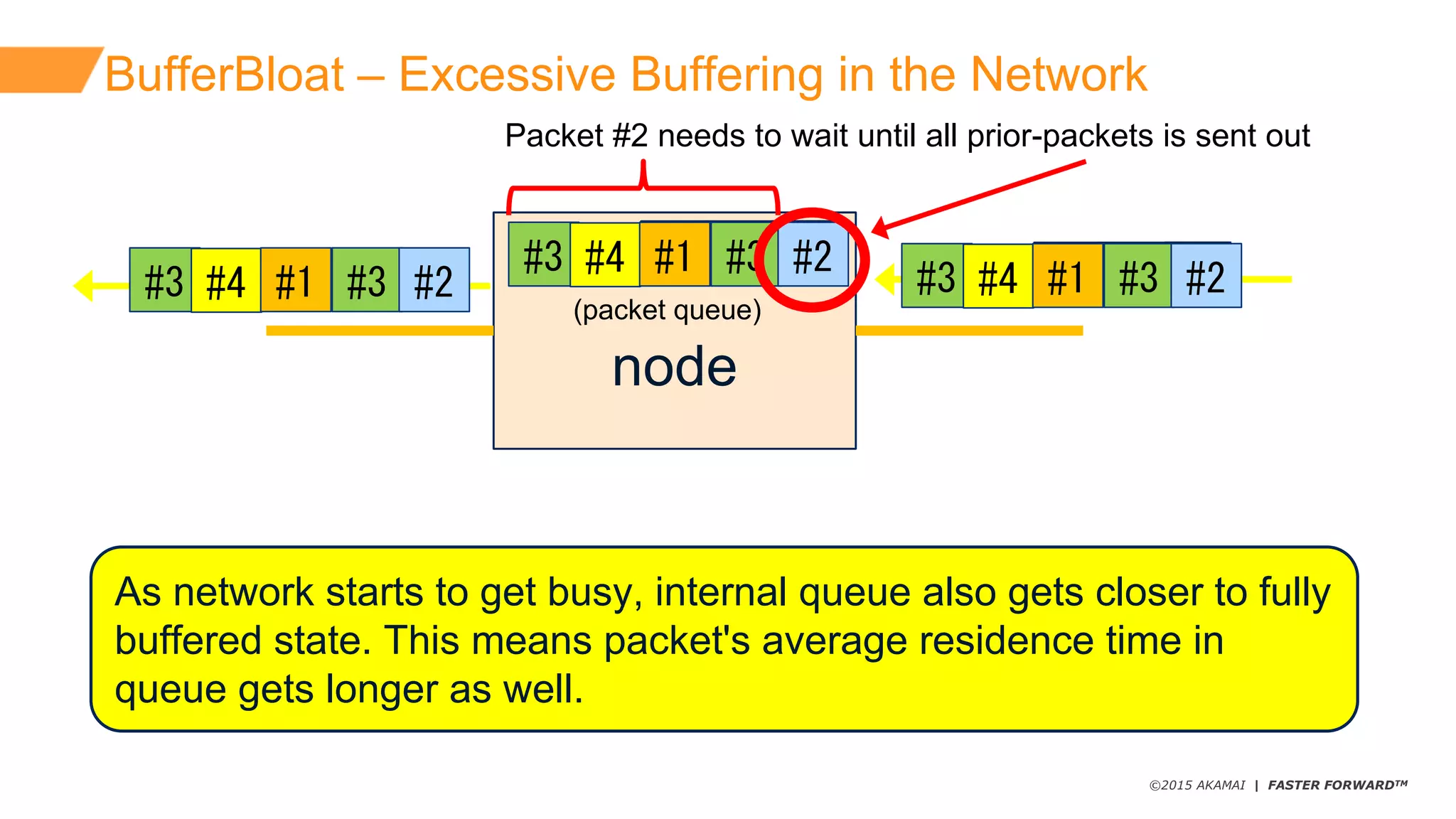

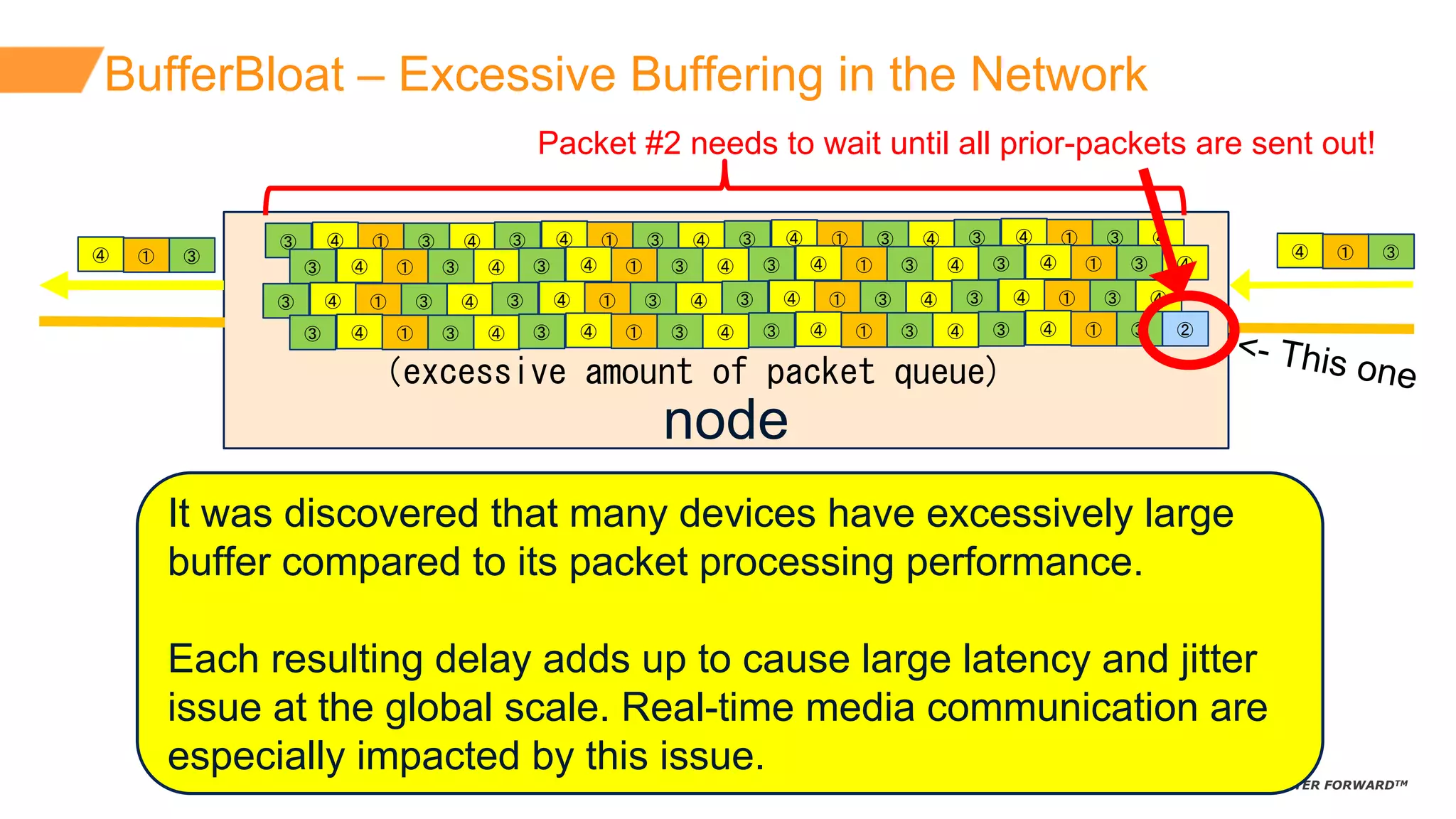

This document summarizes a presentation about the QUIC protocol. It begins with an overview of QUIC and its goal of eliminating the overhead that comes from strictly layering HTTP on top of TLS on top of TCP. It then discusses the problems of that traditional stack: the multiple round trips needed before an HTTP request can be answered, the cost of the TCP handshake, and inefficient use of the available bandwidth. QUIC aims to address these problems by running over UDP and combining connection establishment and encryption setup with sending and receiving data, so that data can flow after one round trip or less. The presentation also covers how earlier protocols such as SPDY and HTTP/2 improved performance but remained bottlenecked by their reliance on TCP. It concludes with an explanation of bufferbloat, in which excessive buffering in network nodes increases latency and jitter.
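The round-trip savings and the bufferbloat effect described above can be made concrete with some back-of-the-envelope arithmetic. The sketch below is illustrative only and is not taken from the presentation. It assumes idealized conditions: no packet loss, no DNS lookup, no TCP Fast Open, and zero server processing time. The per-step round-trip counts are the standard textbook values (1 RTT for the TCP handshake, 2 for a full TLS 1.2 handshake, 1 for TLS 1.3, 1 for a first QUIC connection, 0 for QUIC 0-RTT resumption). The function names, the 100 ms RTT, and the 125 kB buffer are hypothetical.

```python
"""Back-of-the-envelope latency arithmetic (illustrative sketch).

Assumptions, not taken from the presentation:
- Idealized handshakes: no packet loss, no DNS lookup, no TCP Fast Open,
  and zero server processing time.
- Every round trip costs exactly one RTT.
"""

# Round trips each setup step costs before the client can send its request.
HANDSHAKE_RTTS = {
    "tcp": 1,        # SYN / SYN-ACK; client may send data once SYN-ACK arrives
    "tls1.2": 2,     # full TLS 1.2 handshake, after the TCP handshake
    "tls1.3": 1,     # full TLS 1.3 handshake, after the TCP handshake
    "quic": 1,       # combined transport + crypto handshake, first contact
    "quic-0rtt": 0,  # resumed QUIC connection: request sent with first flight
}

HTTP_REQUEST_RTTS = 1  # one round trip for the request and its response


def time_to_first_byte_ms(steps: list[str], rtt_ms: float) -> float:
    """Time until the first response byte arrives, in milliseconds."""
    setup = sum(HANDSHAKE_RTTS[step] for step in steps)
    return (setup + HTTP_REQUEST_RTTS) * rtt_ms


def queueing_delay_ms(buffer_bytes: int, link_bits_per_s: float) -> float:
    """Extra delay a packet sees behind a full FIFO buffer (bufferbloat).

    A full buffer must drain at the link rate before a newly queued
    packet is transmitted, so delay = buffered bits / link rate.
    """
    return buffer_bytes * 8 / link_bits_per_s * 1000


if __name__ == "__main__":
    rtt = 100.0  # ms, hypothetical path
    for label, steps in [
        ("TCP + TLS 1.2", ["tcp", "tls1.2"]),
        ("TCP + TLS 1.3", ["tcp", "tls1.3"]),
        ("QUIC, first connection", ["quic"]),
        ("QUIC, 0-RTT resumption", ["quic-0rtt"]),
    ]:
        print(f"{label}: {time_to_first_byte_ms(steps, rtt):.0f} ms")
    # Hypothetical 125 kB buffer in front of a 1 Mbit/s uplink.
    print(f"queueing delay: {queueing_delay_ms(125_000, 1_000_000):.0f} ms")
```

With a 100 ms RTT this prints 400, 300, 200, and 100 ms for the four setups. The full 125 kB buffer adds 1000 ms of queueing delay on its own, which is why an oversized buffer can erase the handshake savings entirely.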