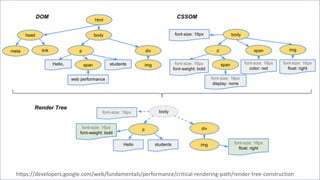

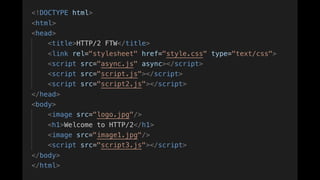

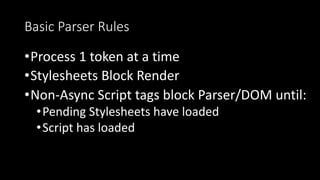

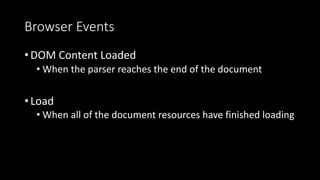

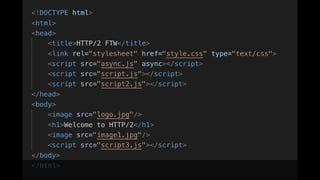

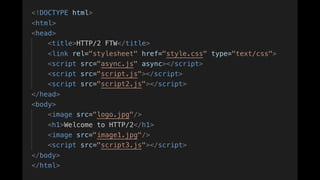

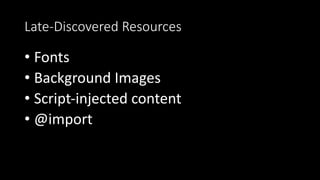

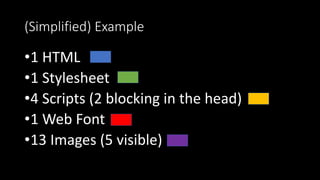

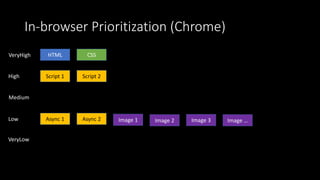

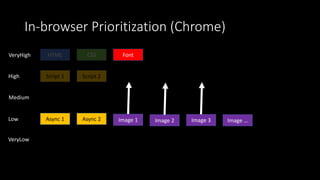

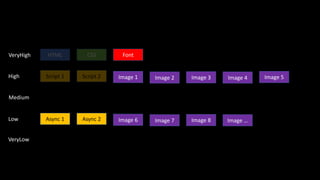

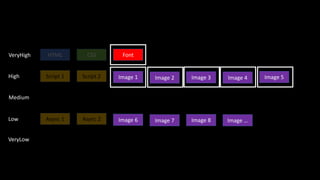

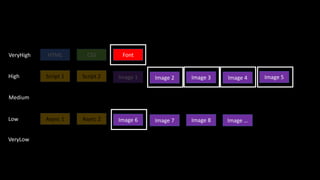

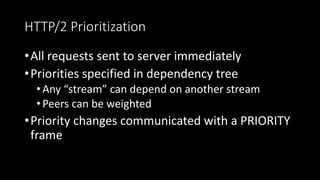

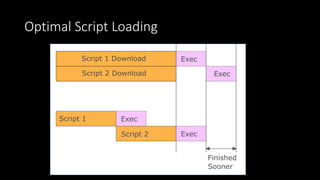

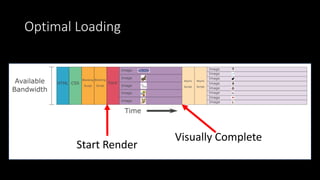

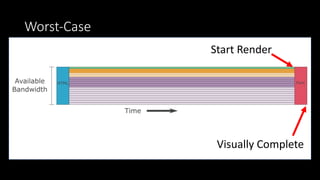

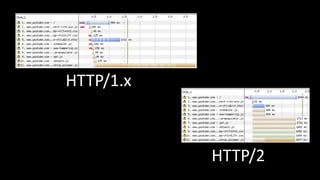

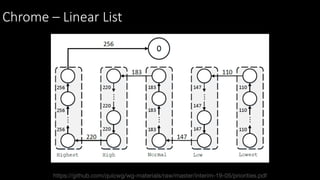

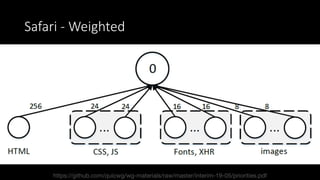

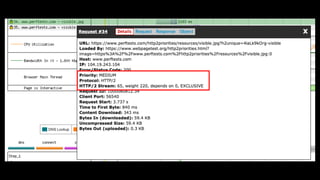

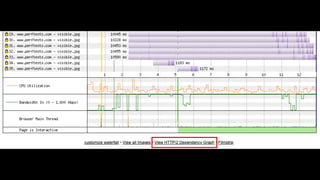

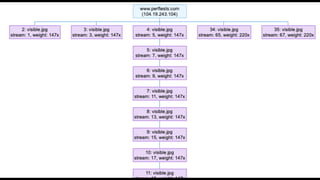

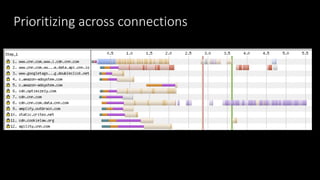

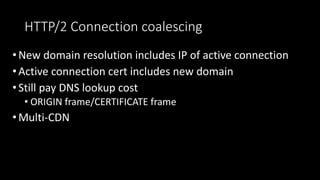

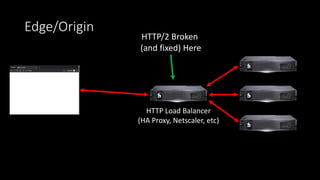

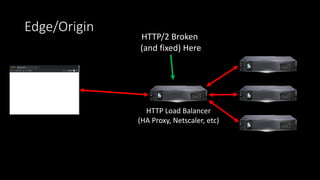

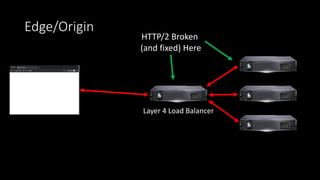

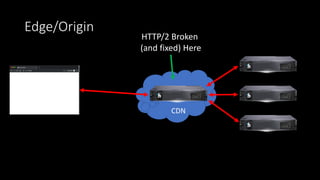

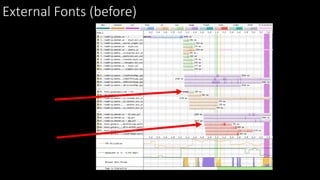

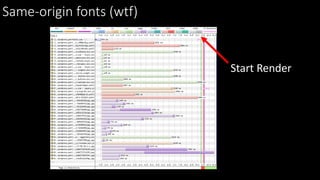

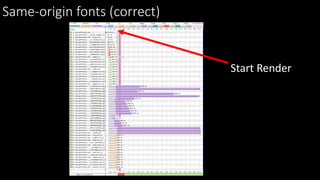

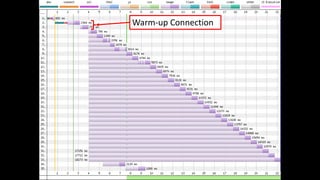

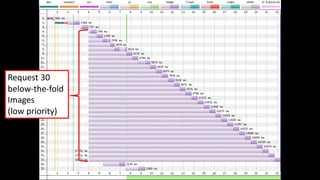

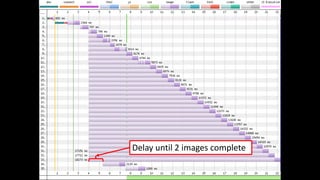

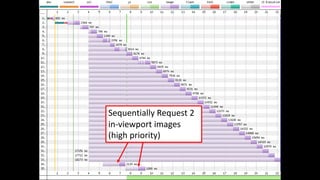

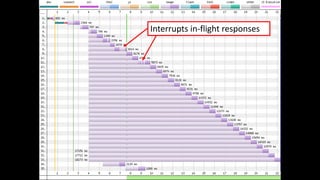

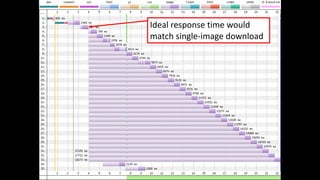

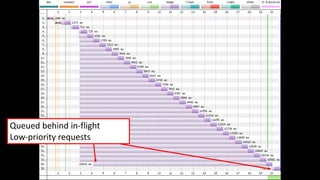

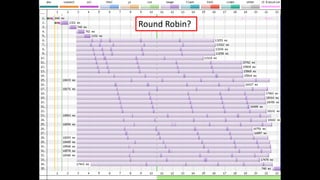

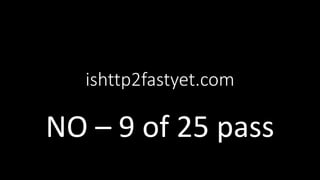

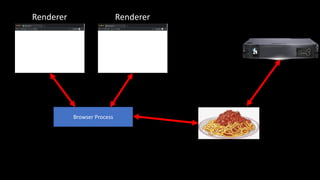

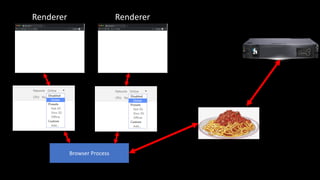

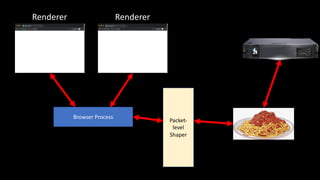

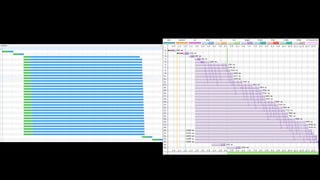

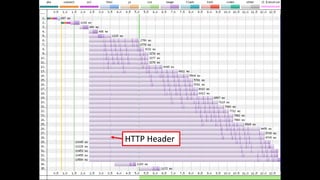

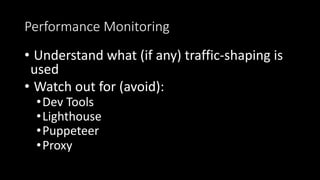

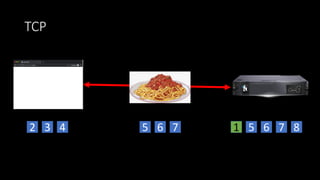

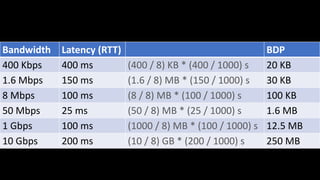

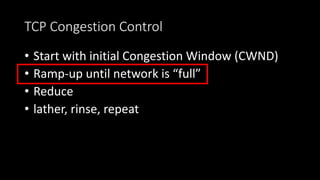

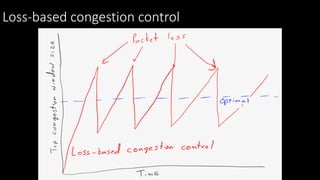

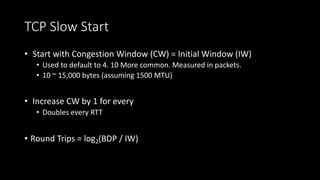

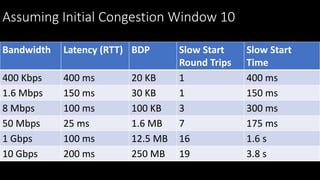

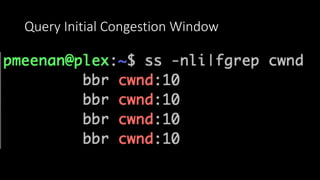

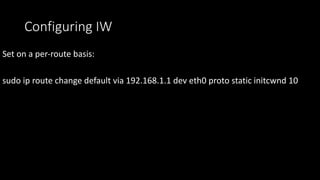

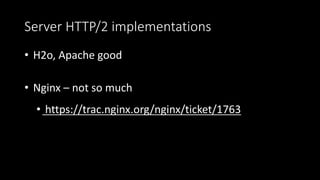

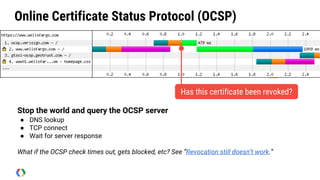

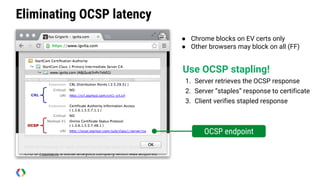

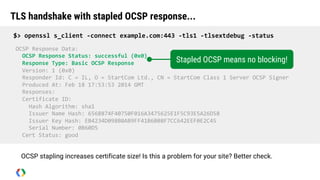

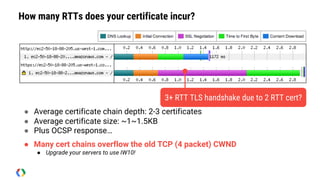

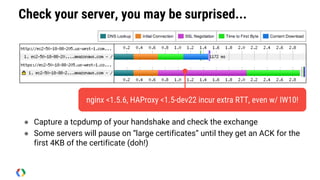

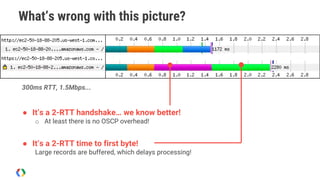

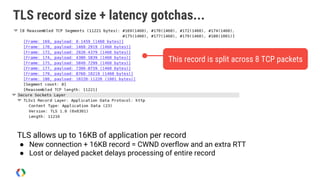

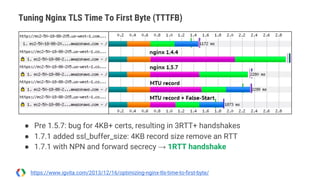

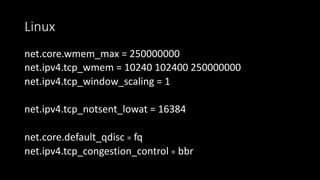

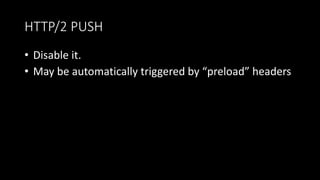

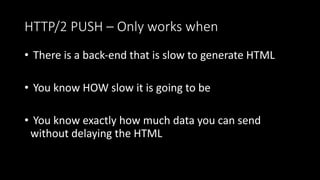

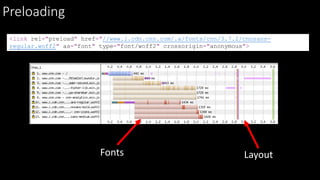

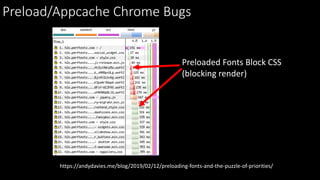

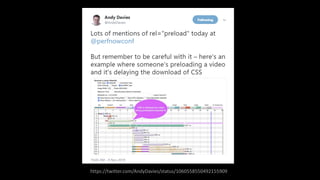

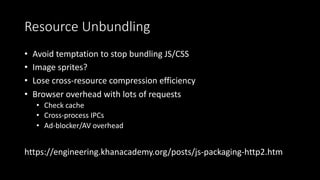

The document compares HTTP/2 with HTTP/1.x and the optimizations HTTP/2 makes possible, focusing on resource prioritization, the browser rendering process, and the handling of fonts and images. It examines how browser behavior, server configuration, and TCP mechanisms affect performance, and it addresses challenges such as TCP congestion control and OCSP latency. It also stresses choosing a suitable content delivery network (CDN) and tuning server settings to improve web performance.