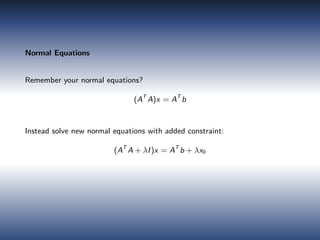

This document discusses an iterative approach to Tikhonov regularization for inverting ill-conditioned matrices, drawing on the singular value decomposition (SVD). It outlines how adding a regularization term stabilizes the solution of a linear system, compares Tikhonov regularization with truncated SVD, and emphasizes that the quality of the result depends on choosing an appropriate regularization parameter λ. Practical applications include image reconstruction in various fields, and code references are provided for implementing the methods discussed.
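To make the comparison concrete, here is a minimal NumPy sketch of the three ideas named above. It is an illustration under stated assumptions, not the document's own implementation: the function names (`tikhonov`, `truncated_svd`, `iterated_tikhonov`) are hypothetical, the penalty is assumed to take the form λ²‖x‖², and the iterative variant shown is the standard "iterated Tikhonov" refinement, which may differ from the scheme the document develops.

```python
import numpy as np


def tikhonov(A, b, lam):
    """Minimize ||A x - b||^2 + lam^2 ||x||^2 using SVD filter factors."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    # Each inverse singular value 1/s is damped to s / (s^2 + lam^2).
    damped_inverse = s / (s**2 + lam**2)
    return Vt.T @ (damped_inverse * (U.T @ b))


def truncated_svd(A, b, k):
    """Invert using only the k largest singular values; discard the rest."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    return Vt[:k].T @ ((U[:, :k].T @ b) / s[:k])


def iterated_tikhonov(A, b, lam, n_iter):
    """Repeatedly apply a Tikhonov correction to the current residual.

    Assumed variant: x <- x + (A^T A + lam^2 I)^{-1} A^T (b - A x), from x = 0.
    """
    n = A.shape[1]
    M = A.T @ A + lam**2 * np.eye(n)
    x = np.zeros(n)
    for _ in range(n_iter):
        x = x + np.linalg.solve(M, A.T @ (b - A @ x))
    return x


if __name__ == "__main__":
    # Hilbert matrix: a classic ill-conditioned test case (assumed example).
    n = 8
    idx = np.arange(n)
    A = 1.0 / (idx[:, None] + idx[None, :] + 1.0)
    x_true = np.ones(n)
    # Small deterministic perturbation standing in for measurement noise.
    b = A @ x_true + 1e-6 * np.sin(idx)

    candidates = {
        "naive solve": np.linalg.solve(A, b),
        "Tikhonov": tikhonov(A, b, 1e-4),
        "truncated SVD": truncated_svd(A, b, 4),
        "iterated Tikhonov": iterated_tikhonov(A, b, 1e-4, 5),
    }
    for name, x in candidates.items():
        print(f"{name:18s} error = {np.linalg.norm(x - x_true):.3e}")
```

In this sketch, Tikhonov regularization damps the contribution of small singular values smoothly, while truncated SVD cuts them off at a hard threshold `k`. With a single iteration, the iterated variant reproduces the plain Tikhonov solution; further iterations reduce the bias that λ introduces, at the cost of letting more noise back in. The values of `lam` and `k` here are arbitrary choices for illustration, and in practice they need to be tuned to the noise level of the problem.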