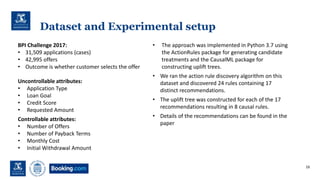

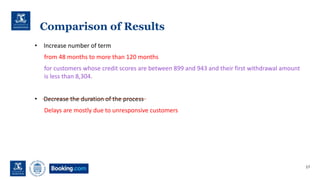

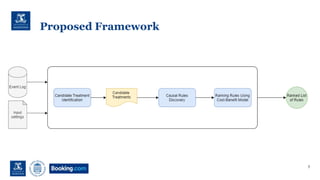

The document proposes a framework that combines process mining and causal machine learning to discover causal rules from event logs. It first identifies candidate treatments by applying action rule mining to event log data. It then uses uplift trees to find subgroups of cases in which a treatment has a high causal effect on the outcome, which addresses confounding. The approach was tested on a loan application dataset, where it discovered 8 causal rules recommending process changes that increase the likelihood of customers selecting a loan offer. Future work includes incorporating other recommendation types and handling unobserved confounding.

![Preliminaries

How do we measure causal effects?

Taking the difference between potential outcomes

Example: Loan application Process

Treatment/intervention: calling the customer after offer

Outcome: whether customer selects a loan offer or not

Diagram: World 1 and World 2, with expected outcomes E[Y1] and E[Y0]

Average Treatment Effect:

ATE = E[Y1 – Y0]

Conditional Average Treatment Effect:

CATE = E[Y1 – Y0 | X=x]

CATE is also known as uplift in the marketing literature.](https://image.slidesharecdn.com/icpm-causal-process-mining-201009144232/85/Process-Mining-Meets-Causal-Machine-Learning-Discovering-Causal-Rules-From-Event-Logs-4-320.jpg)
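The two definitions on this slide can be made concrete with a minimal sketch. It assumes a hypothetical toy table in which both potential outcomes of every case are known, which is never true of a real event log (only one world is observed per case). The covariate values and outcomes are invented for illustration.

```python
from statistics import mean

# Hypothetical toy data: y1 = outcome if treated, y0 = outcome if not treated.
# Knowing both per case is an assumption made purely for illustration.
cases = [
    {"x": "home_improvement", "y1": 1, "y0": 0},
    {"x": "home_improvement", "y1": 1, "y0": 1},
    {"x": "car", "y1": 0, "y0": 0},
    {"x": "car", "y1": 1, "y0": 0},
    {"x": "car", "y1": 0, "y0": 1},
]


def ate(rows):
    """Average Treatment Effect: E[Y1 - Y0] over all rows."""
    return mean(r["y1"] - r["y0"] for r in rows)


def cate(rows, x):
    """Conditional Average Treatment Effect: E[Y1 - Y0 | X = x]."""
    return ate([r for r in rows if r["x"] == x])


print("ATE:", ate(cases))
print("CATE (home_improvement):", cate(cases, "home_improvement"))
print("CATE (car):", cate(cases, "car"))
```

In this toy table the treatment helps on average, but the effect is concentrated in one subgroup, which is the situation CATE (uplift) is meant to expose.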

![Candidate Treatment Identification

Aim: generating recommendations automatically

Method: Action rule mining

• Extension of classification rules

• Suggests actions to change class label

• Based on support

Action Rule: r = [(a: a1) ∧ (b: b1 → b2)] ⇒ [y: y1 → y2]

Input: case attributes, each labeled controllable or uncontrollable](https://image.slidesharecdn.com/icpm-causal-process-mining-201009144232/85/Process-Mining-Meets-Causal-Machine-Learning-Discovering-Causal-Rules-From-Event-Logs-8-320.jpg)

![Candidate Treatment Identification

Example: Loan application process

Attributes: Loan Goal (uncontrollable), Number of terms (controllable)

Rule: r = [(LoanGoal: Home Improvement) ∧ (NumOfTerms: 48 → 84)] ⇒ [Selected: 0 → 1], with support = 0.05

Rule parts: pre-condition (LoanGoal: Home Improvement), action (NumOfTerms: 48 → 84), outcome (Selected: 0 → 1)

Interpretation: in loan applications where the loan goal is home improvement, the rule suggests that changing the number of payback months (terms) from 48 to 84 will increase the chance of the customer selecting the loan offer.](https://image.slidesharecdn.com/icpm-causal-process-mining-201009144232/85/Process-Mining-Meets-Causal-Machine-Learning-Discovering-Causal-Rules-From-Event-Logs-9-320.jpg)
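An action rule of this shape can be represented as a small data structure. The sketch below is an illustration only: the `ActionRule` class, its field names, and the toy cases are assumptions, not the authors' implementation, and it does not reproduce how support is computed during rule mining.

```python
from dataclasses import dataclass
from typing import Any, Dict, Optional


@dataclass
class ActionRule:
    """Hypothetical container for a rule [(a: a1) ∧ (b: b1 → b2)] ⇒ [y: y1 → y2]."""
    precondition: Dict[str, Any]  # uncontrollable attributes that must match
    attribute: str                # controllable attribute to change
    from_value: Any
    to_value: Any
    outcome: str                  # outcome attribute the rule aims to flip
    outcome_from: Any
    outcome_to: Any

    def applies_to(self, case: Dict[str, Any]) -> bool:
        """True if the case meets the pre-condition and still has the 'before' value."""
        matches = all(case.get(k) == v for k, v in self.precondition.items())
        return matches and case.get(self.attribute) == self.from_value

    def recommend(self, case: Dict[str, Any]) -> Optional[str]:
        """Return the suggested action as text, or None if the rule does not apply."""
        if not self.applies_to(case):
            return None
        return f"Change {self.attribute} from {self.from_value} to {self.to_value}"


# The example rule from the slide.
rule = ActionRule({"LoanGoal": "Home Improvement"}, "NumOfTerms", 48, 84, "Selected", 0, 1)

# Invented cases, for illustration only.
log = [
    {"LoanGoal": "Home Improvement", "NumOfTerms": 48},
    {"LoanGoal": "Home Improvement", "NumOfTerms": 84},
    {"LoanGoal": "Car", "NumOfTerms": 48},
]
for case in log:
    print(case, "->", rule.recommend(case))
```

Keeping the uncontrollable pre-condition separate from the controllable action mirrors the distinction the slide draws between the two kinds of attributes.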

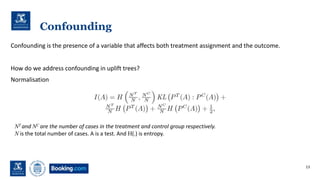

![Uplift Tree

Recall:

Uplift = E[Y1 | X=x] – E[Y0 | X=x] ≠ E[Y | T=1, X=x] – E[Y | T=0, X=x]

The reason is confounding.](https://image.slidesharecdn.com/icpm-causal-process-mining-201009144232/85/Process-Mining-Meets-Causal-Machine-Learning-Discovering-Causal-Rules-From-Event-Logs-12-320.jpg)
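The inequality on this slide can be illustrated with a small hand-built example. It assumes a hypothetical confounder `z` that is not part of `x`, and a treatment that has no effect at all. Every case shares the same `x`, and all numbers are invented.

```python
from collections import namedtuple
from statistics import mean

# Hypothetical cases: z drives both who gets treated (t) and the outcome,
# while the treatment itself changes nothing (y1 == y0 for every case).
Case = namedtuple("Case", "z t y0 y1")
rows = (
    [Case(1, 1, 1, 1)] * 3 + [Case(1, 0, 1, 1)]    # z=1: mostly treated, outcome 1
    + [Case(0, 1, 0, 0)] + [Case(0, 0, 0, 0)] * 3  # z=0: mostly untreated, outcome 0
)


def observed(c):
    """Only the potential outcome of the world that actually happened is seen."""
    return c.y1 if c.t == 1 else c.y0


def diff_in_means(sample):
    """E[Y | T=1] - E[Y | T=0] computed from observed outcomes only."""
    treated = [observed(c) for c in sample if c.t == 1]
    control = [observed(c) for c in sample if c.t == 0]
    return mean(treated) - mean(control)


true_uplift = mean(c.y1 - c.y0 for c in rows)  # E[Y1 - Y0]
naive = diff_in_means(rows)                    # ignores z
adjusted = sum(                                # stratify on z, weight by stratum size
    len(stratum) / len(rows) * diff_in_means(stratum)
    for stratum in ([c for c in rows if c.z == z] for z in (0, 1))
)
print(true_uplift, naive, adjusted)
```

The naive difference is far from the true uplift because `z` influences both treatment assignment and outcome. Comparing treated and untreated cases within each level of `z` removes the gap in this toy setting.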

![Example Causal Rule

Example rule:

• Action: [NumOfTerms: 48 → 84]

• Objective: [Selected: 0 → 1]

• Sub-group: customers for whom

a) the loan goal is not existing loan takeover AND

b) the credit score is less than 920 AND

c) the offer includes a monthly cost greater than 149 AND

d) the first withdrawal amount is less than 8304](https://image.slidesharecdn.com/icpm-causal-process-mining-201009144232/85/Process-Mining-Meets-Causal-Machine-Learning-Discovering-Causal-Rules-From-Event-Logs-14-320.jpg)
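The example rule can be read as a predicate over a case plus a recommended action. The sketch below assumes hypothetical attribute names (`LoanGoal`, `CreditScore`, `MonthlyCost`, `FirstWithdrawalAmount`, `NumOfTerms`) and an invented case. The thresholds are the ones shown on the slide.

```python
from typing import Any, Dict, Optional


def in_subgroup(case: Dict[str, Any]) -> bool:
    """Conditions a) to d) of the example rule; attribute names are assumed."""
    return (
        case["LoanGoal"] != "Existing loan takeover"
        and case["CreditScore"] < 920
        and case["MonthlyCost"] > 149
        and case["FirstWithdrawalAmount"] < 8304
    )


def recommend(case: Dict[str, Any]) -> Optional[str]:
    """Suggest the action only for subgroup members that currently have 48 terms."""
    if in_subgroup(case) and case["NumOfTerms"] == 48:
        return "Change NumOfTerms from 48 to 84"
    return None


# Invented case, for illustration only.
case = {
    "LoanGoal": "Home Improvement",
    "CreditScore": 850,
    "MonthlyCost": 200,
    "FirstWithdrawalAmount": 5000,
    "NumOfTerms": 48,
}
print(recommend(case))
```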