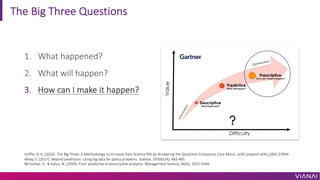

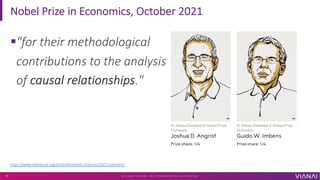

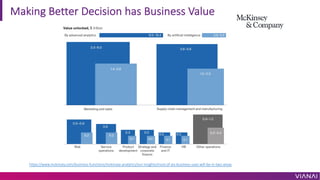

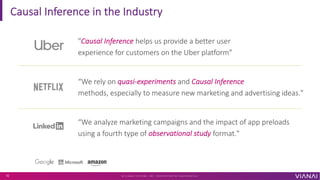

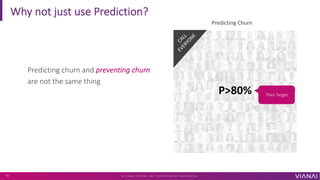

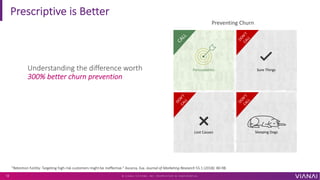

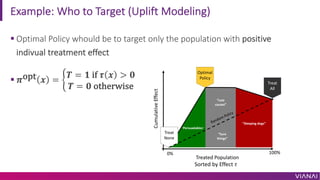

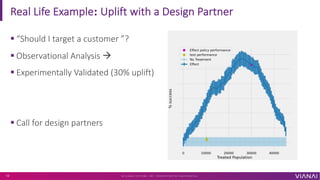

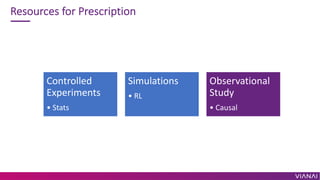

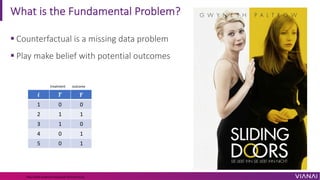

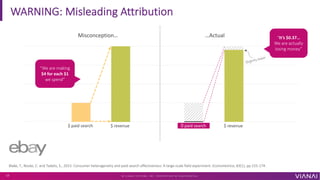

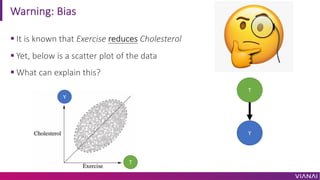

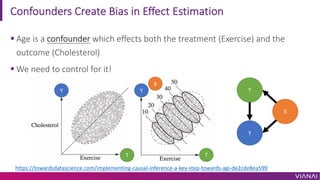

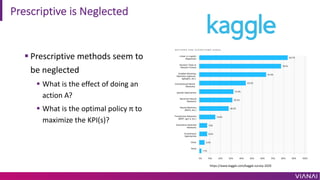

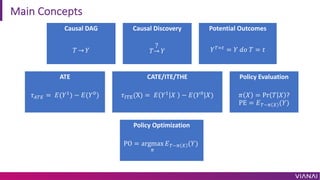

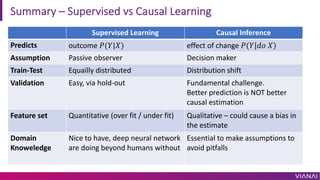

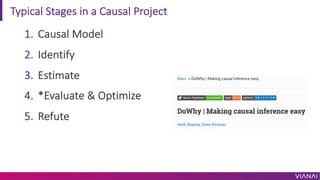

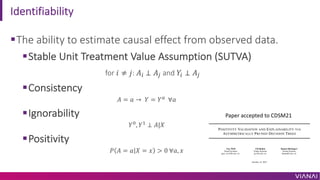

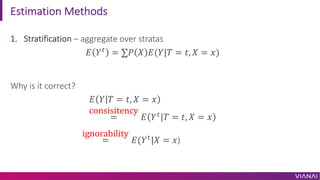

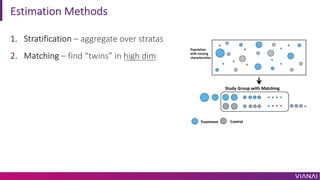

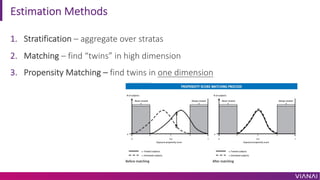

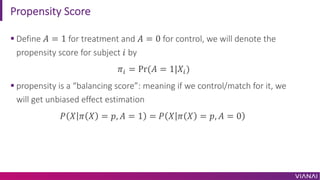

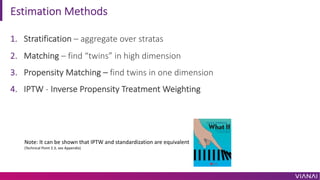

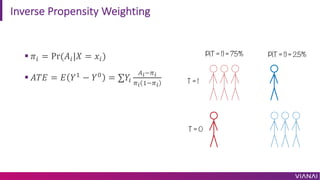

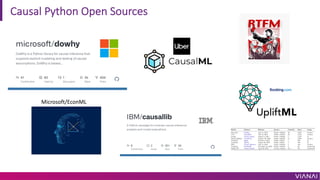

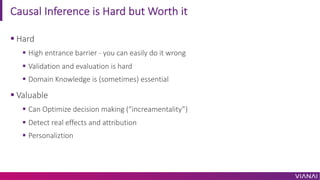

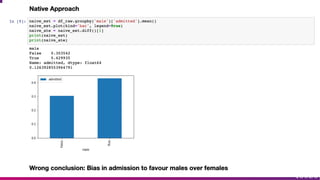

The document discusses causal inference and why it matters for business decision-making. It notes that causal inference lets companies make better decisions by using past data to estimate the causal effects of their actions. Those estimates help companies optimize outcomes, for example by targeting an intervention only at the individuals expected to benefit from it. The document outlines key causal inference concepts such as treatment effects and surveys methods for estimating causal relationships from data, including matching, weighting, and experiments.
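As an illustration that is not taken from the document, the toy Python sketch below contrasts a naive treated-versus-control comparison with two of the adjustment methods named above. The first is exact matching, which stratifies on a single observed covariate. The second is inverse propensity weighting. The names (`Unit`, `segment`, `matched_ate`, `ipw_ate`, `segments_to_target`), the cost threshold, and the numbers are all hypothetical. The adjusted estimates are only causal under the stated assumptions: `segment` is the sole confounder, and every segment contains both treated and control units.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Unit:
    segment: int    # observed covariate, ASSUMED to be the only confounder
    treated: bool   # whether the unit received the intervention
    outcome: float  # observed outcome (hypothetical metric, e.g. revenue)


def mean(values):
    return sum(values) / len(values)


def naive_difference(units):
    """Treated mean minus control mean, ignoring the covariate entirely."""
    treated = [u.outcome for u in units if u.treated]
    control = [u.outcome for u in units if not u.treated]
    if not treated or not control:
        raise ValueError("need both treated and control units (overlap)")
    return mean(treated) - mean(control)


def group_by_segment(units):
    groups = defaultdict(list)
    for u in units:
        groups[u.segment].append(u)
    return groups


def segment_effects(units):
    """Exact matching: compare treated and control units within each segment."""
    return {seg: naive_difference(members)
            for seg, members in group_by_segment(units).items()}


def matched_ate(units):
    """Average the per-segment effects, weighted by segment size."""
    groups = group_by_segment(units)
    effects = segment_effects(units)
    return sum(effects[seg] * len(members) / len(units)
               for seg, members in groups.items())


def ipw_ate(units):
    """Inverse propensity weighting, with propensities estimated per segment."""
    groups = group_by_segment(units)
    propensity = {seg: sum(u.treated for u in members) / len(members)
                  for seg, members in groups.items()}
    total = 0.0
    for u in units:
        p = propensity[u.segment]  # must lie strictly between 0 and 1
        # Up-weight each unit by the inverse probability of the arm it landed in.
        total += u.outcome / p if u.treated else -u.outcome / (1 - p)
    return total / len(units)


def segments_to_target(units, cost):
    """Segments whose estimated effect exceeds a hypothetical per-unit cost."""
    return sorted(seg for seg, effect in segment_effects(units).items()
                  if effect > cost)


# Made-up data: treated units cluster in segment 1, which also has higher
# baseline outcomes, so the naive comparison is confounded.
units = (
    [Unit(0, False, 1.0)] * 3 + [Unit(0, True, 2.0)]
    + [Unit(1, False, 5.0)] + [Unit(1, True, 7.0)] * 3
)

print("naive:", naive_difference(units))               # 3.75
print("matched:", matched_ate(units))                  # 1.5
print("ipw:", round(ipw_ate(units), 6))                # 1.5
print("target:", segments_to_target(units, cost=1.5))  # [1]
```

In this toy case the naive comparison (3.75) overstates the effect, because treated units sit mostly in the high-baseline segment. Both adjusted estimates recover the size-weighted average of the within-segment effects (1.5). Treating only the segments whose estimated effect exceeds the assumed cost is one simple reading of targeting interventions at the individuals expected to benefit. Real applications would typically model many covariates rather than a single discrete one.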