1) The document discusses key concepts in probability and random processes including random variables, probability density functions, mean and variance, joint distributions, and the central limit theorem.

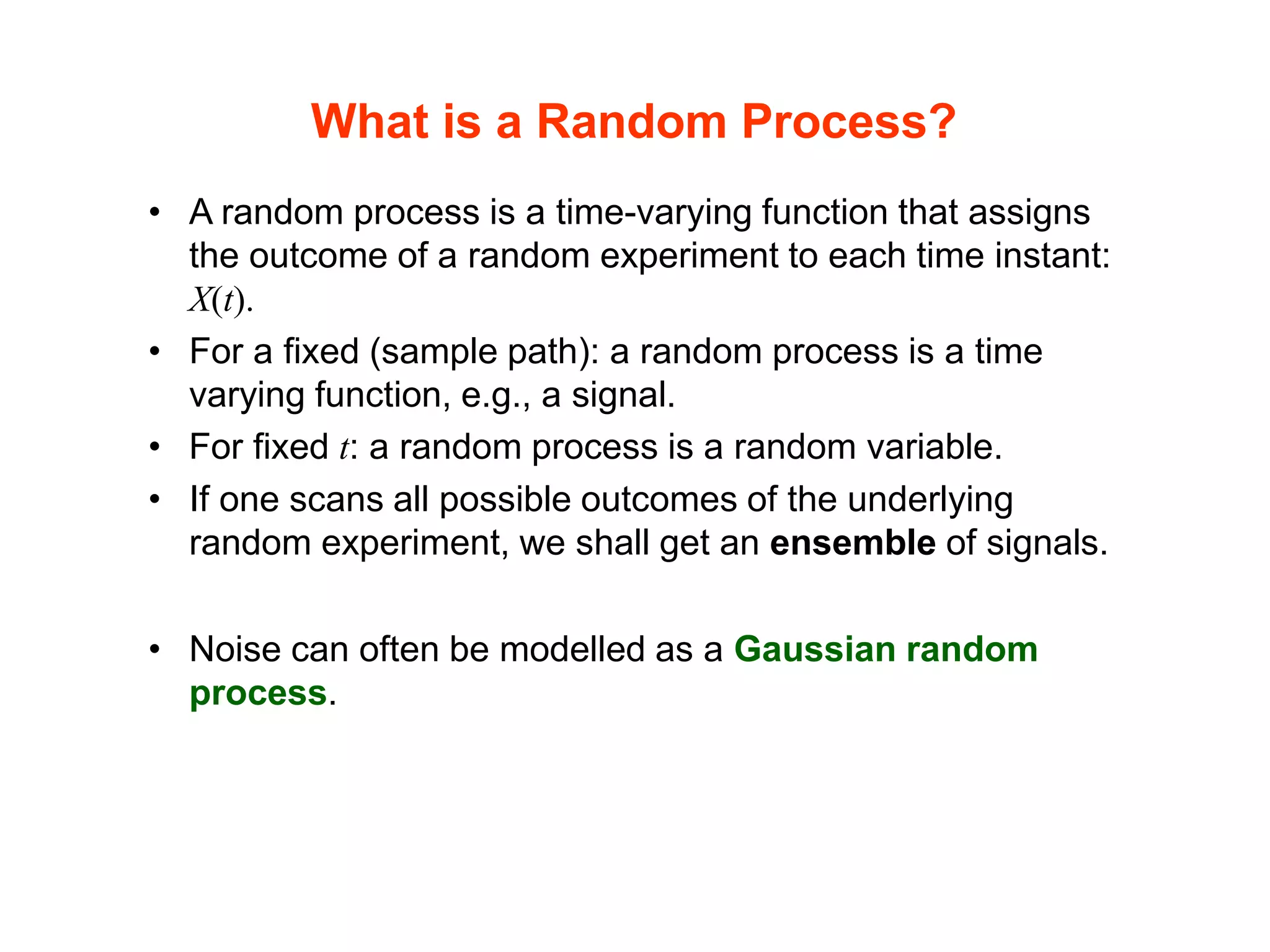

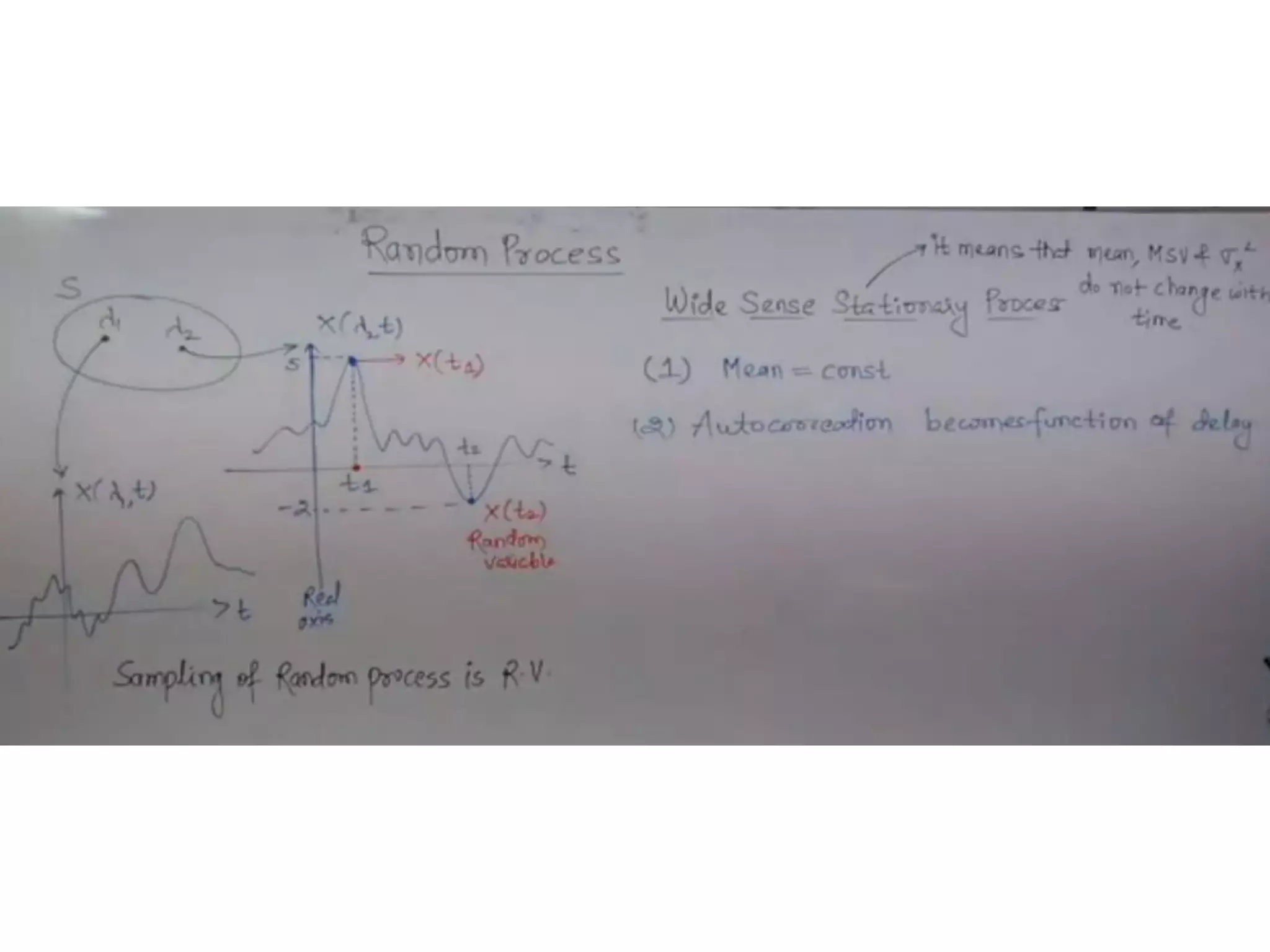

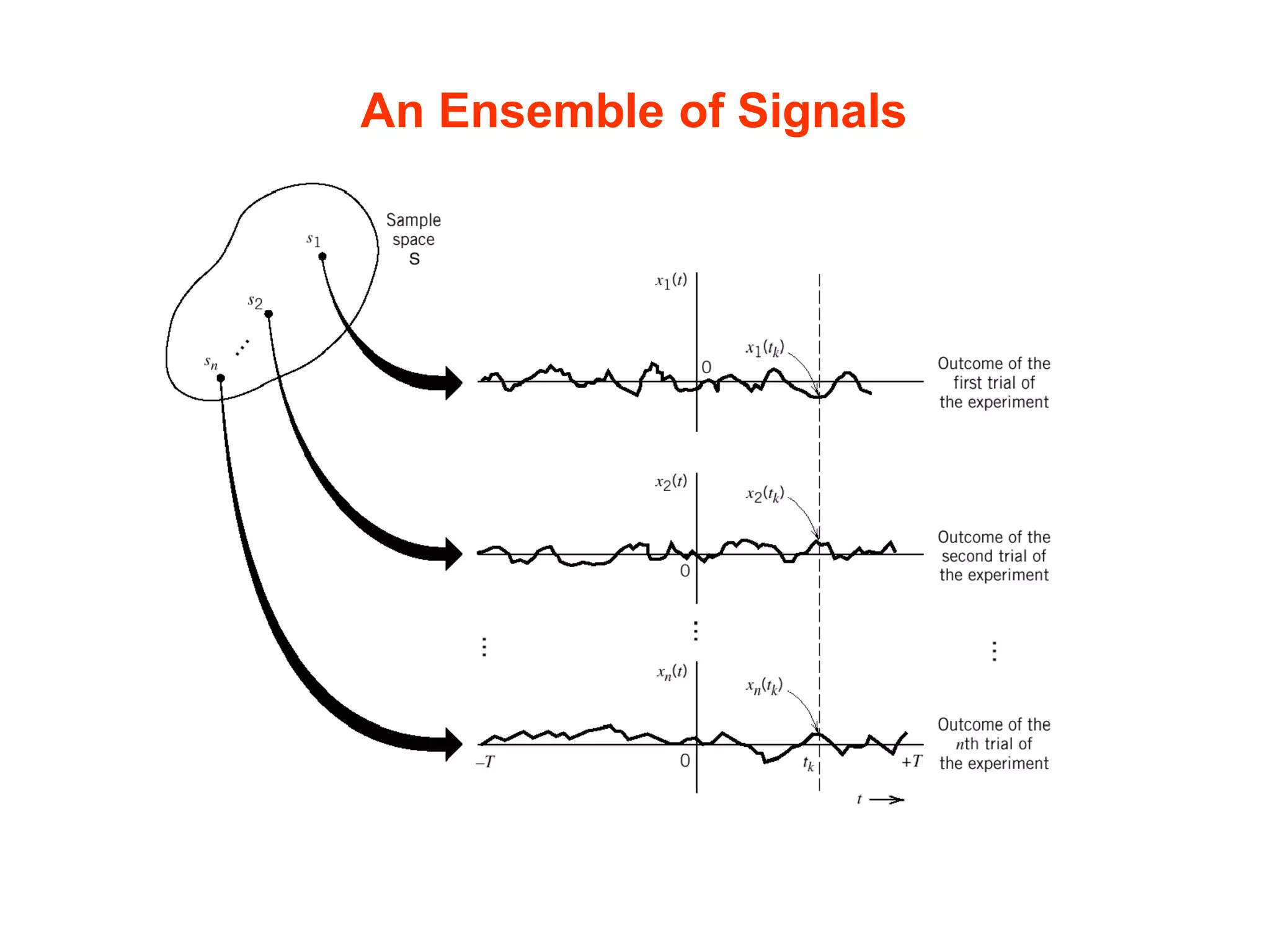

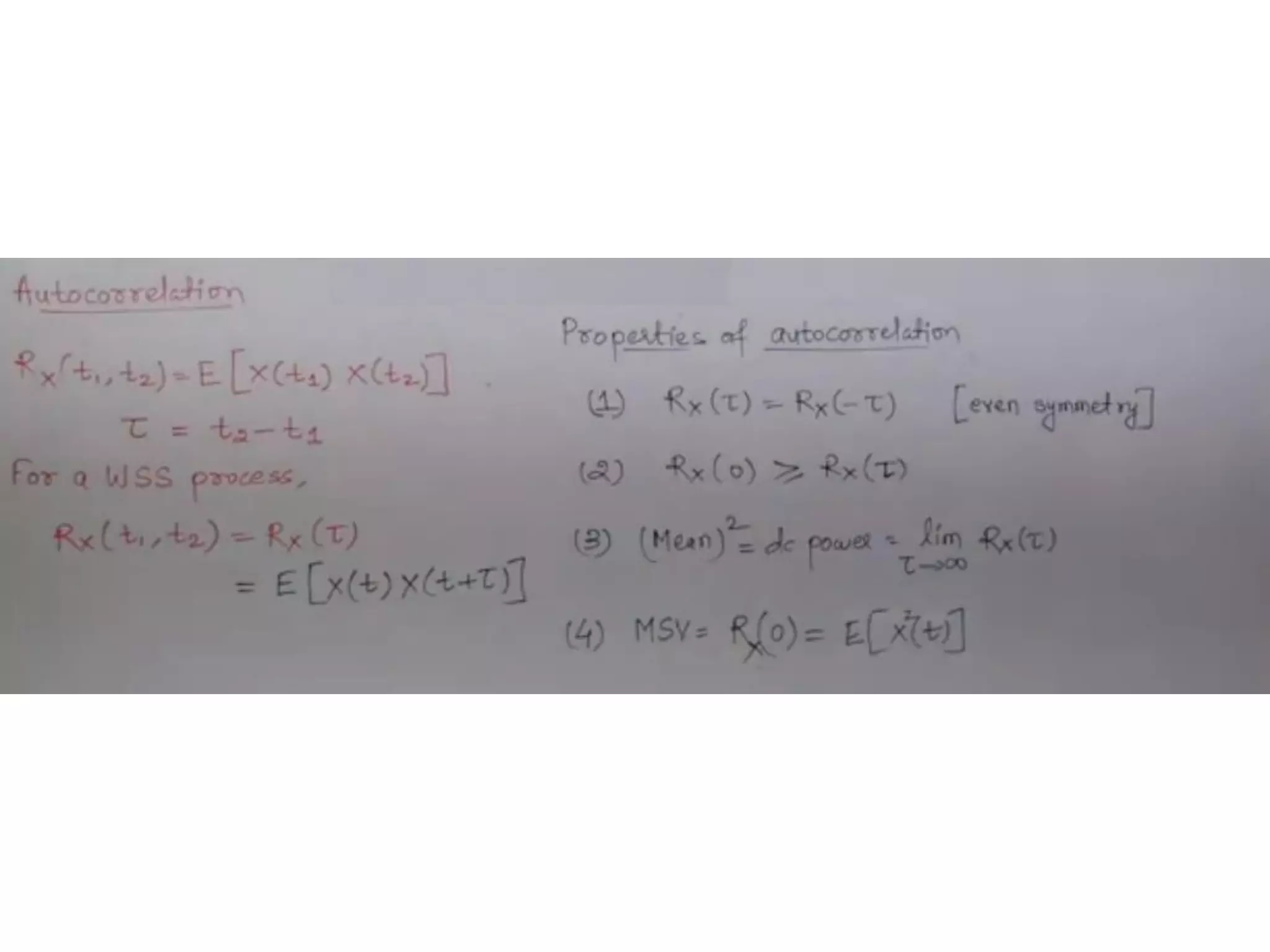

2) It defines a random process as a mapping that assigns a function of time to each outcome of a random experiment, so that the value at any fixed time instant is a random variable. Power spectral density measures how the power of a stationary random process is distributed over frequency.

3) Random processes are widely used to model noise and interference in communication systems, which often exhibit random behavior. Probability and stochastic models provide important mathematical tools for analyzing communication systems.

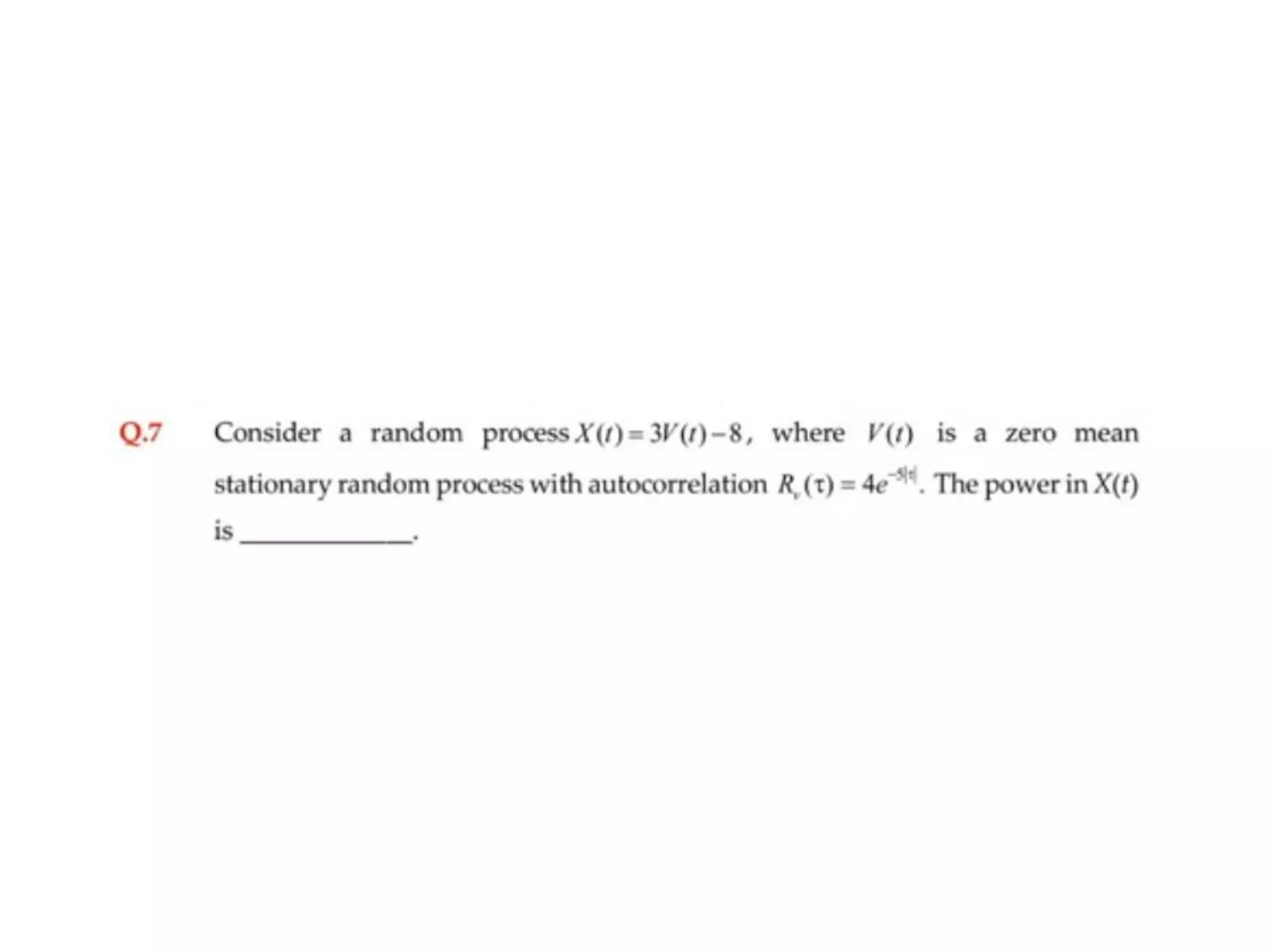

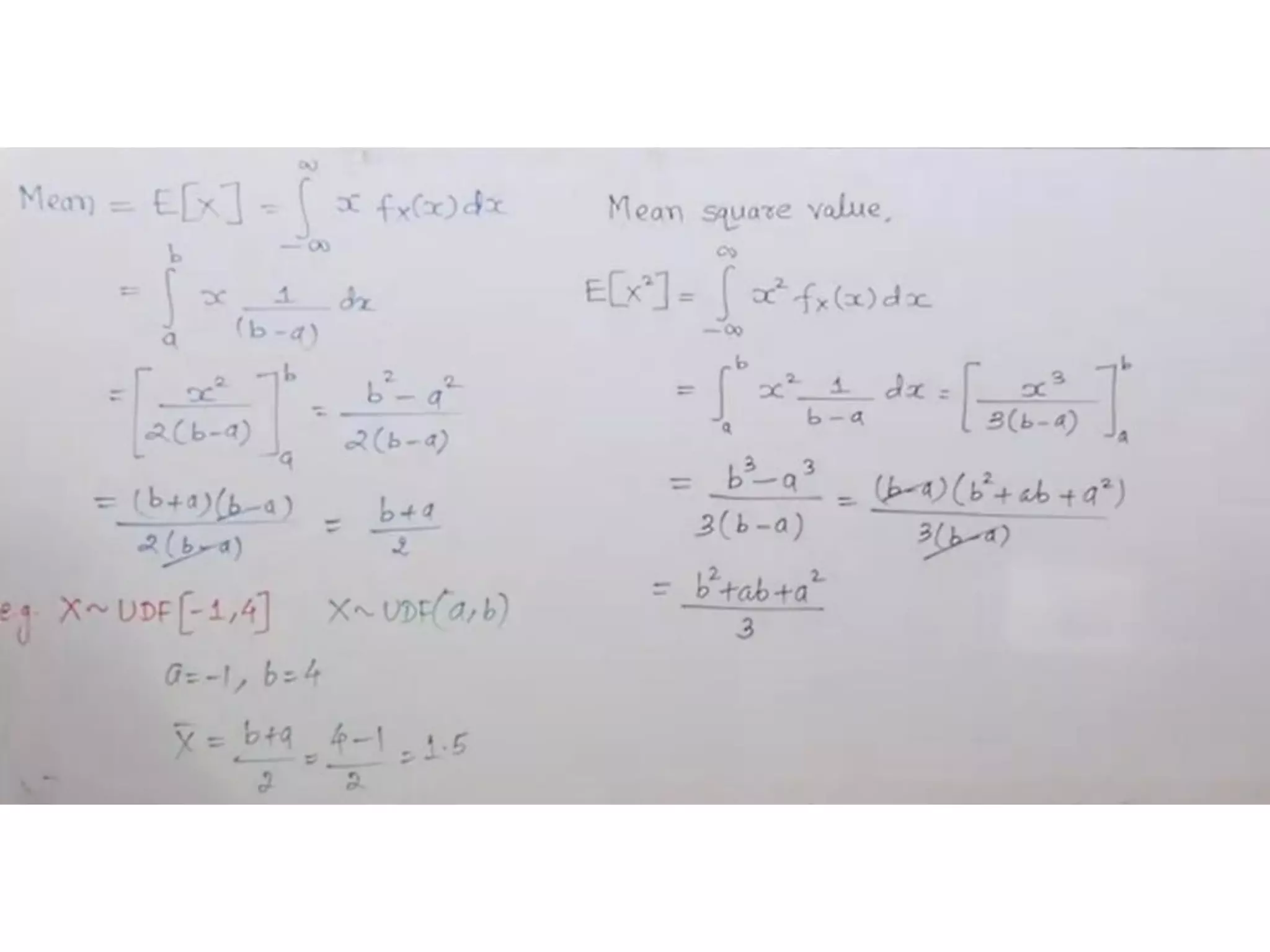

![Mean and Variance

X X

x f (x)dx

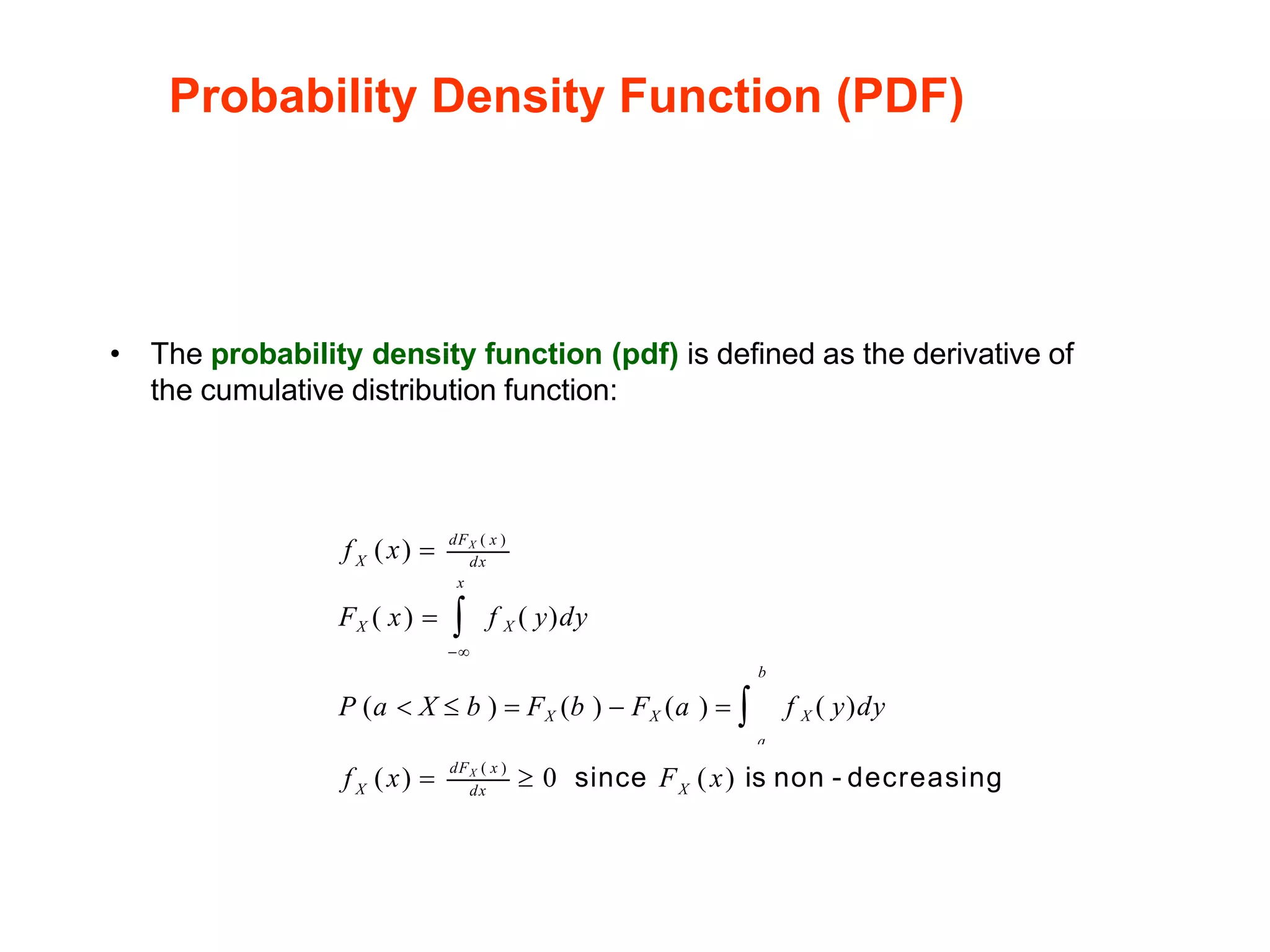

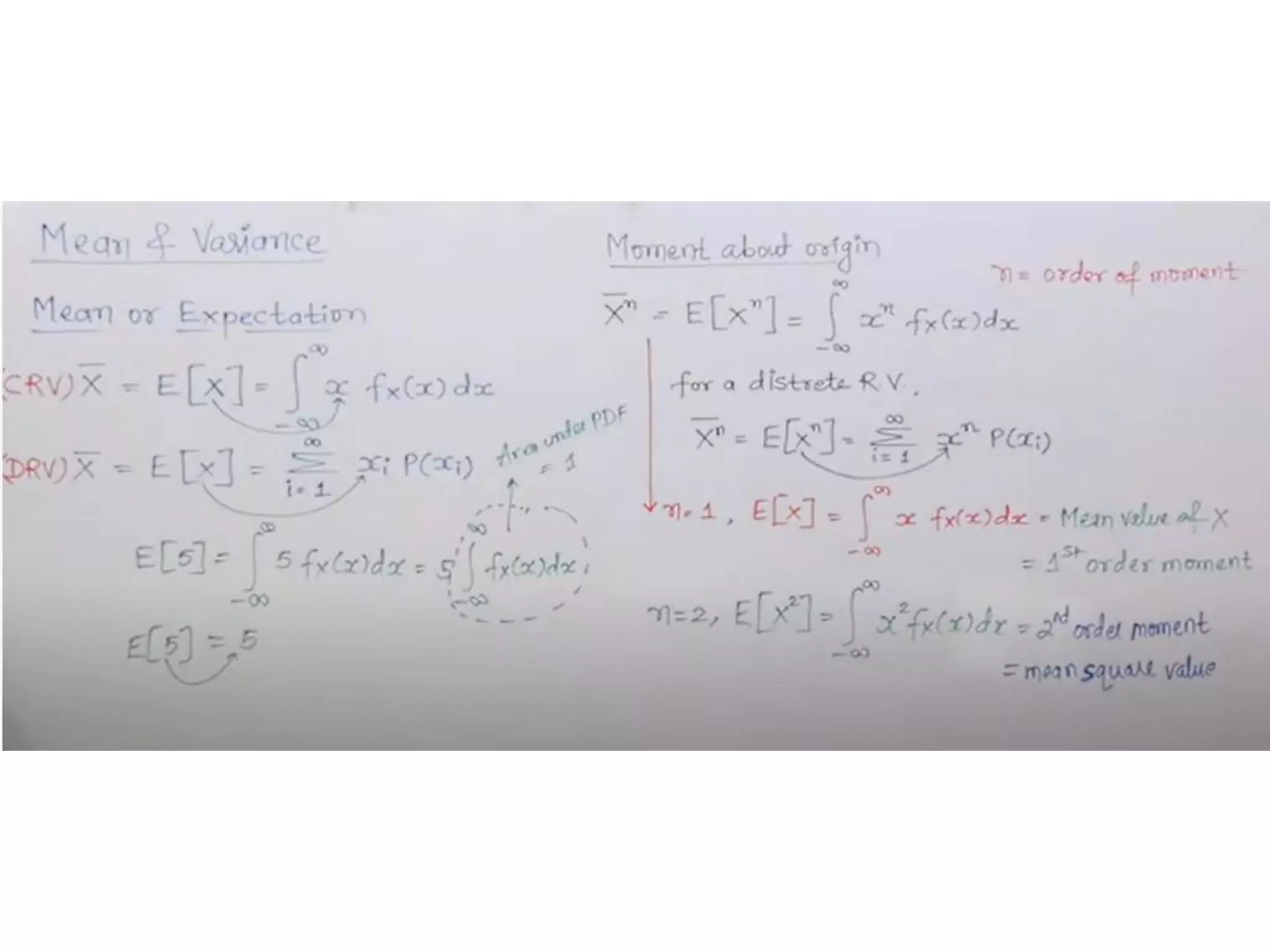

• Mean (or expected value DC level):

E[X ] = =

E[ ]: expectation operator

−](https://image.slidesharecdn.com/probabilityrvlecslides-221130150730-cb972974/75/Probability-RV-Lec-Slides-pdf-14-2048.jpg)
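As a quick numerical check of the mean defined on the slide above, the sketch below approximates the integral of x f_X(x) with a midpoint rule. The exponential density with rate 2 (whose mean is 1/2) and the helper names `expected_value` and `exp_pdf` are illustrative assumptions, not taken from the slides.

```python
import math

def expected_value(pdf, lo, hi, n=100_000):
    """Approximate E[X] = integral of x * pdf(x) over [lo, hi] (midpoint rule)."""
    dx = (hi - lo) / n
    total = 0.0
    for i in range(n):
        x = lo + (i + 0.5) * dx  # midpoint of the i-th subinterval
        total += x * pdf(x) * dx
    return total

def exp_pdf(x, rate=2.0):
    """Exponential density, chosen only as an example (assumption)."""
    return rate * math.exp(-rate * x) if x >= 0 else 0.0

# The density is negligible beyond x = 40, so the integral is truncated there.
mean_exp = expected_value(exp_pdf, 0.0, 40.0)
print(mean_exp)
```

The same helper works for any density that is negligible outside the chosen interval. For heavy-tailed densities the truncation point would need more care.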

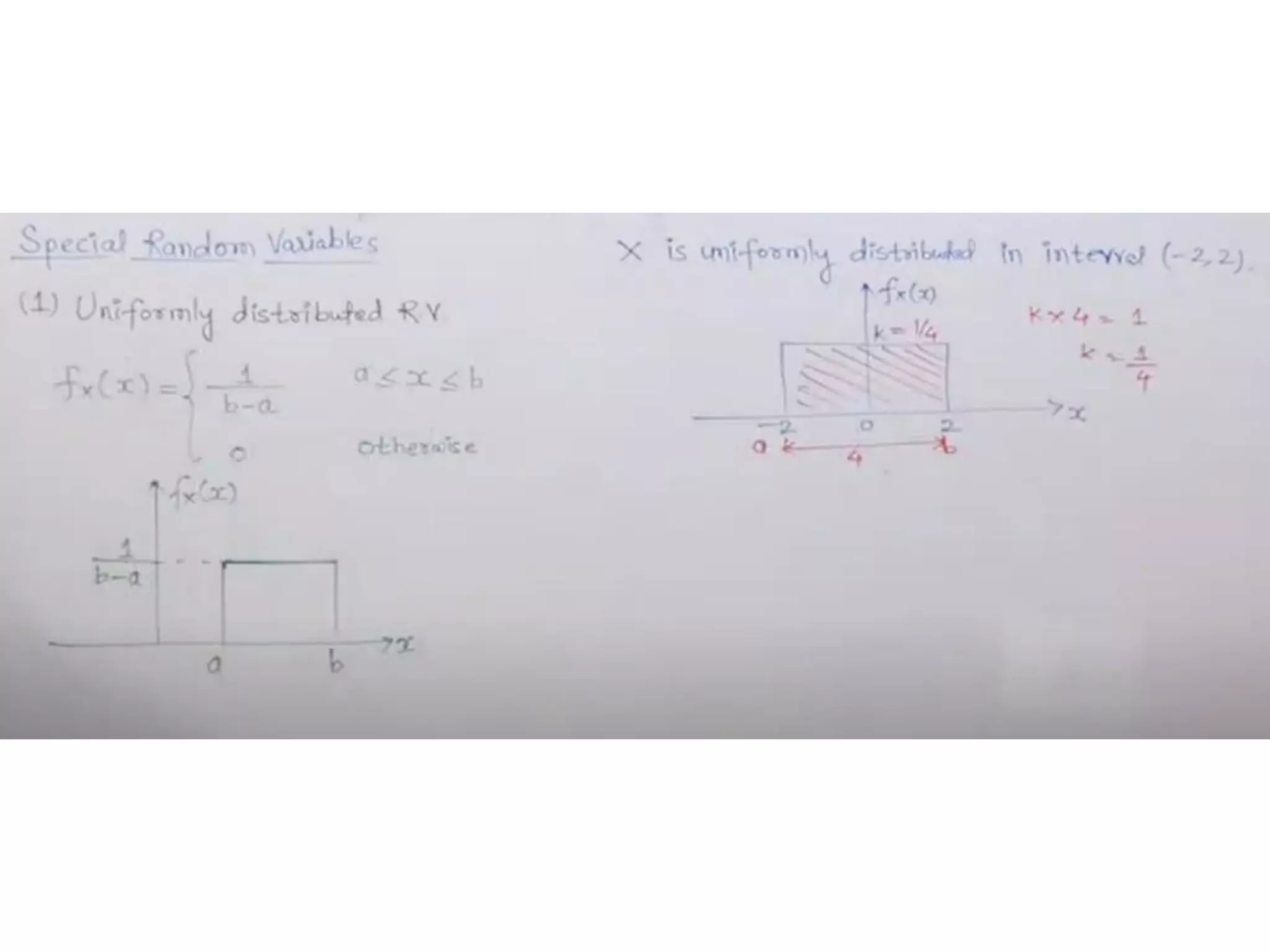

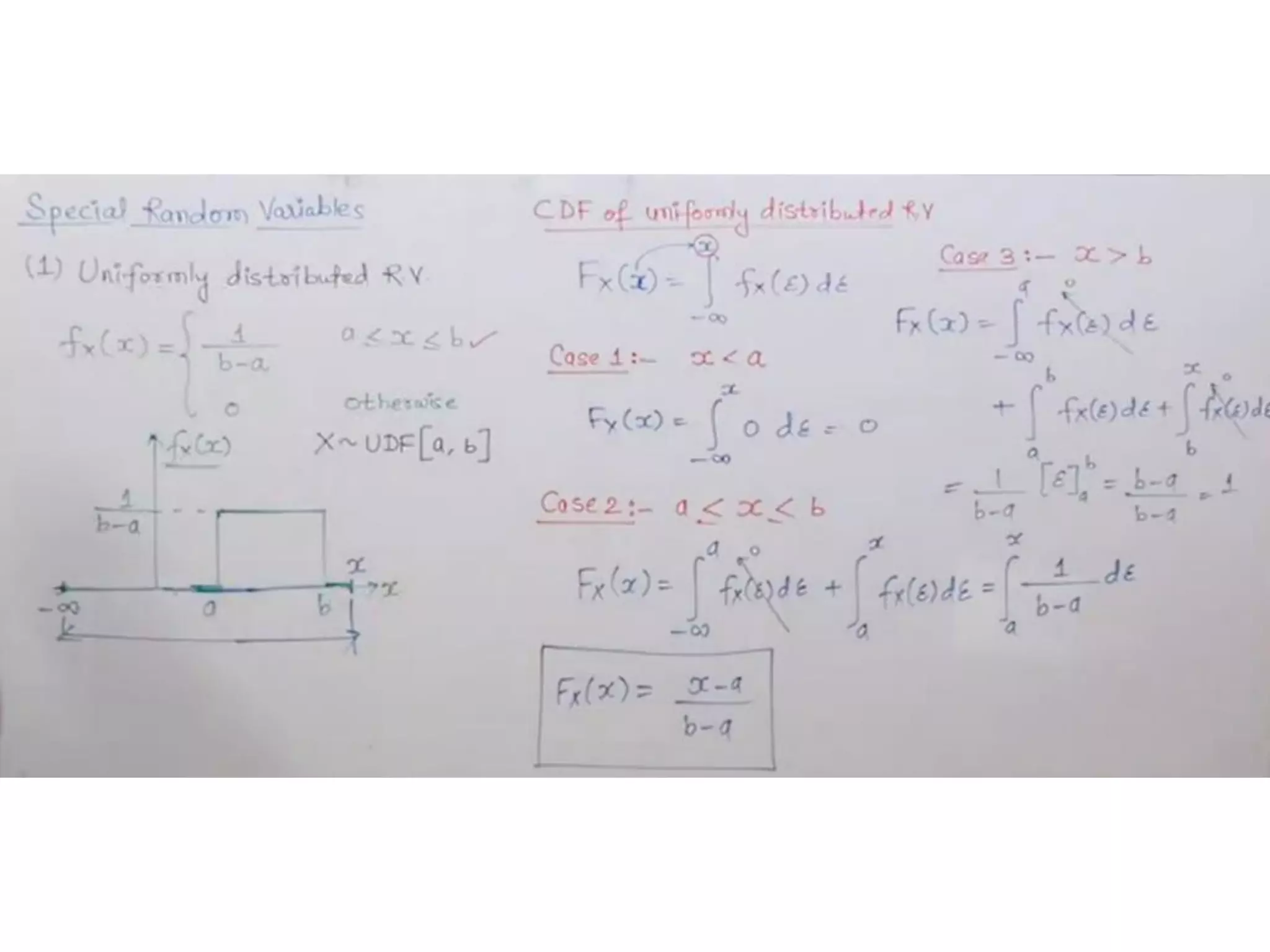

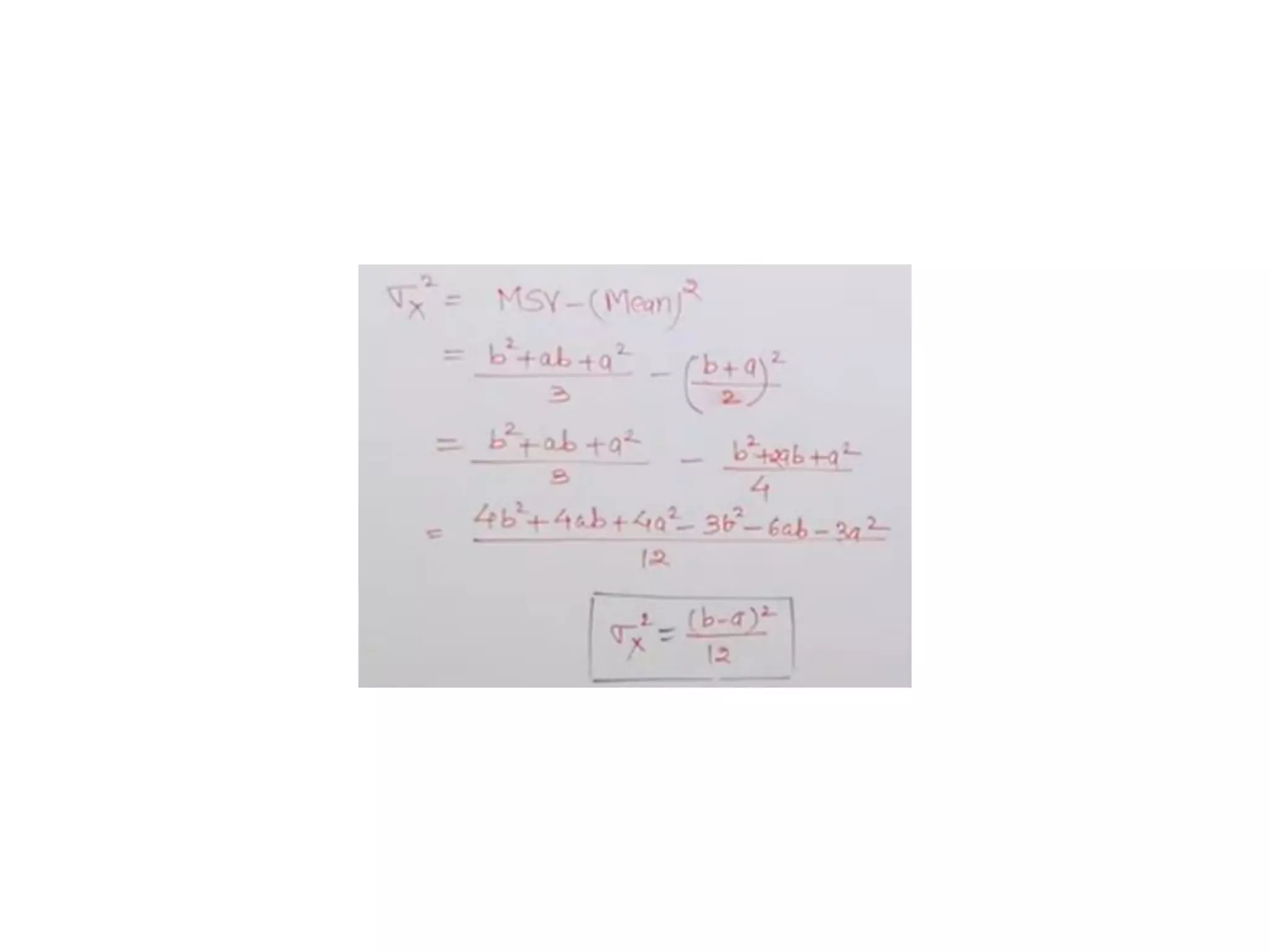

![Uniform Distribution

fX(x)

1

X

a x b

f (x) =

b − a

2

a + b

E [ X ]=

0

0

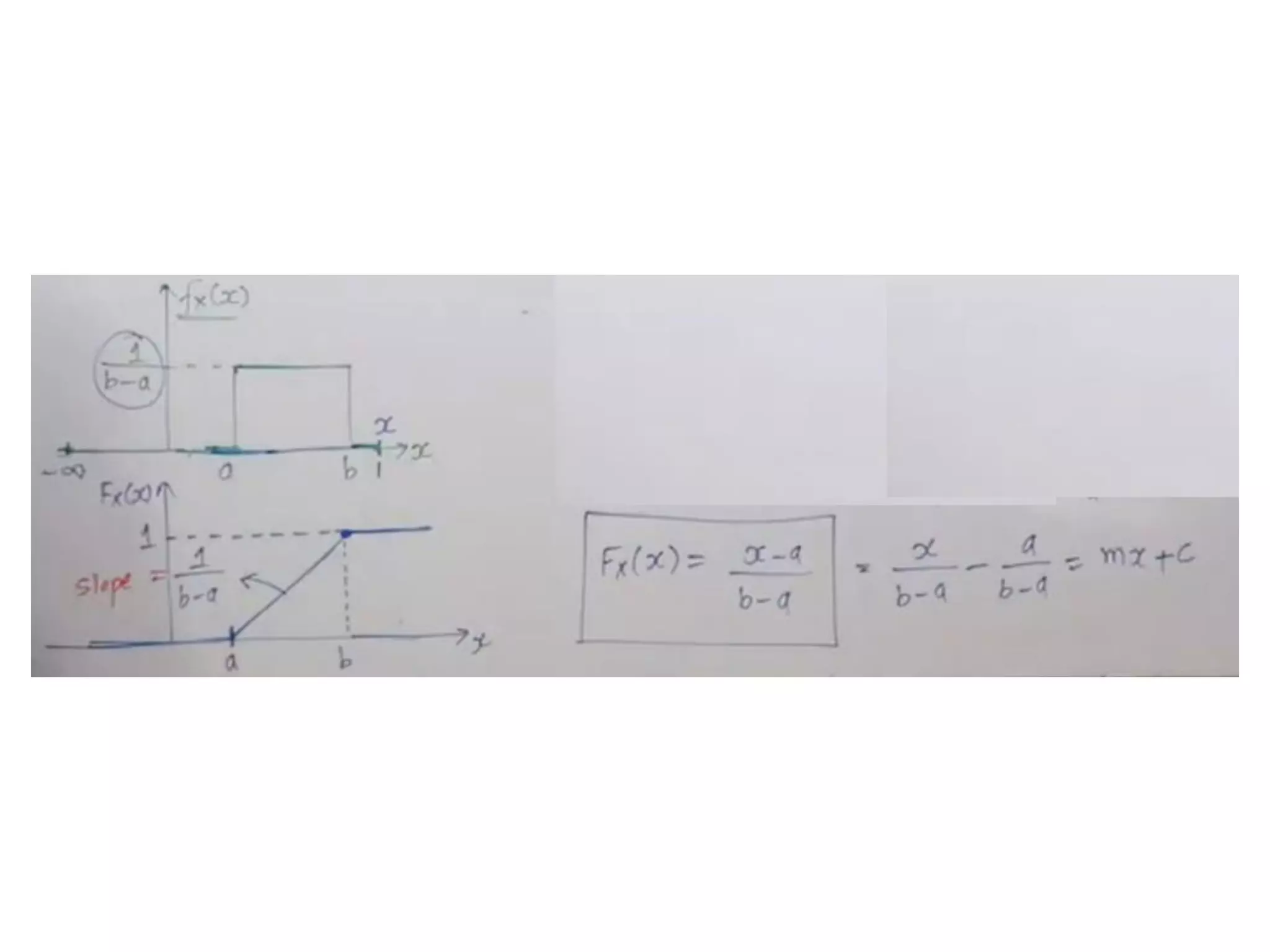

x − a

FX ( x) =

b −a

2

12

X

2

=

(b- a)

elsewhere

x a

a x b

x b

1](https://image.slidesharecdn.com/probabilityrvlecslides-221130150730-cb972974/75/Probability-RV-Lec-Slides-pdf-24-2048.jpg)
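The uniform-distribution formulas on the slide above can be checked against simulated samples. The following is a minimal sketch using only the standard library. The endpoints a = 2 and b = 6, the sample size, and the function names are assumptions made for illustration.

```python
import random

def uniform_pdf(x, a, b):
    """f_X(x) = 1/(b - a) on [a, b], 0 elsewhere."""
    return 1.0 / (b - a) if a <= x <= b else 0.0

def uniform_cdf(x, a, b):
    """F_X(x) = 0 for x <= a, (x - a)/(b - a) on [a, b], 1 for x >= b."""
    if x <= a:
        return 0.0
    if x >= b:
        return 1.0
    return (x - a) / (b - a)

a, b = 2.0, 6.0                      # illustrative endpoints (assumption)
theory_mean = (a + b) / 2            # E[X] = (a + b)/2
theory_var = (b - a) ** 2 / 12       # sigma_X^2 = (b - a)^2 / 12

rng = random.Random(0)               # fixed seed so the run is repeatable
samples = [rng.uniform(a, b) for _ in range(200_000)]
sample_mean = sum(samples) / len(samples)
sample_var = sum((s - sample_mean) ** 2 for s in samples) / len(samples)

print(theory_mean, sample_mean)
print(theory_var, sample_var)
```

With this many samples the empirical mean and variance should land close to the closed-form values, though the exact figures depend on the seed.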

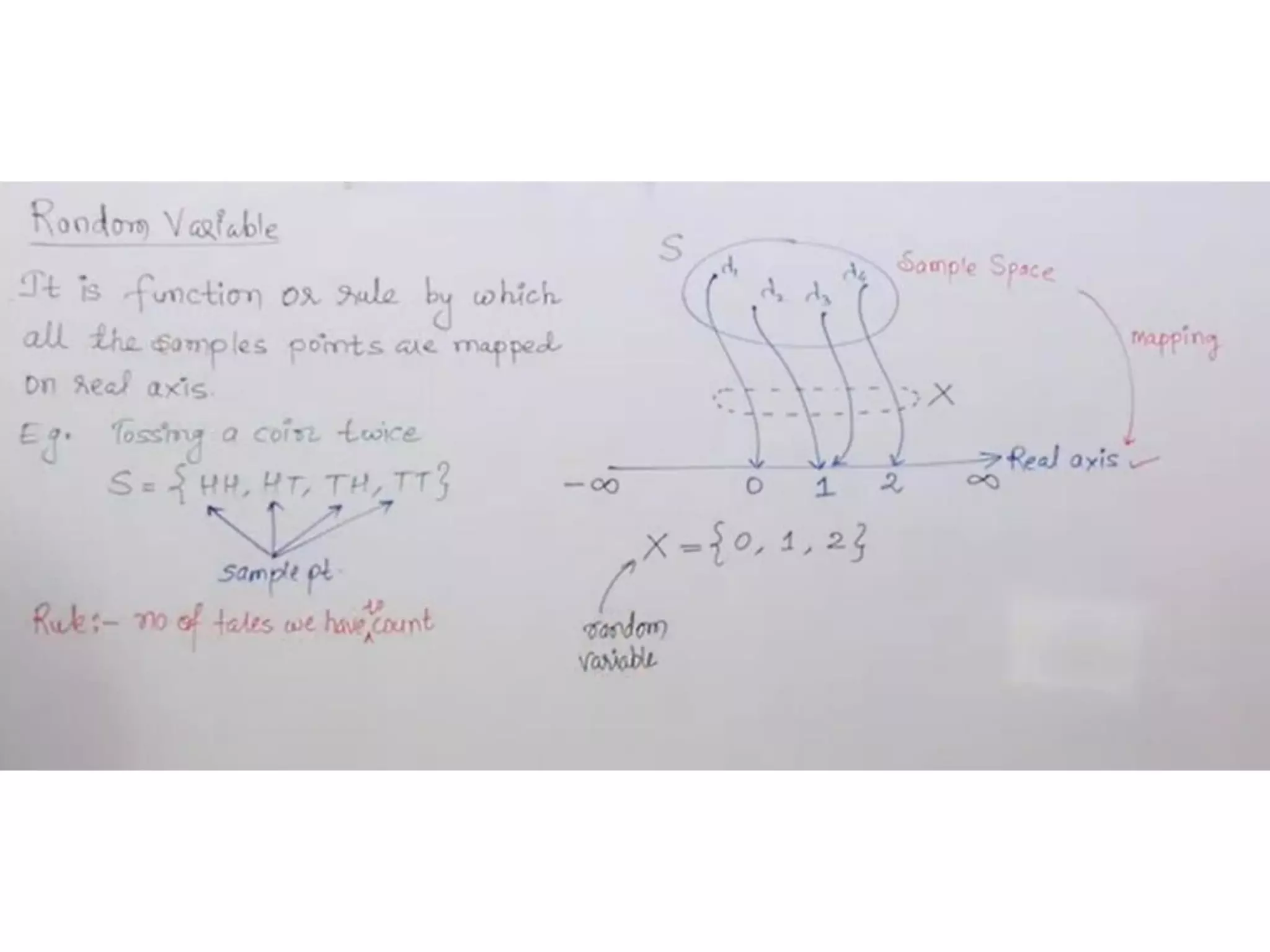

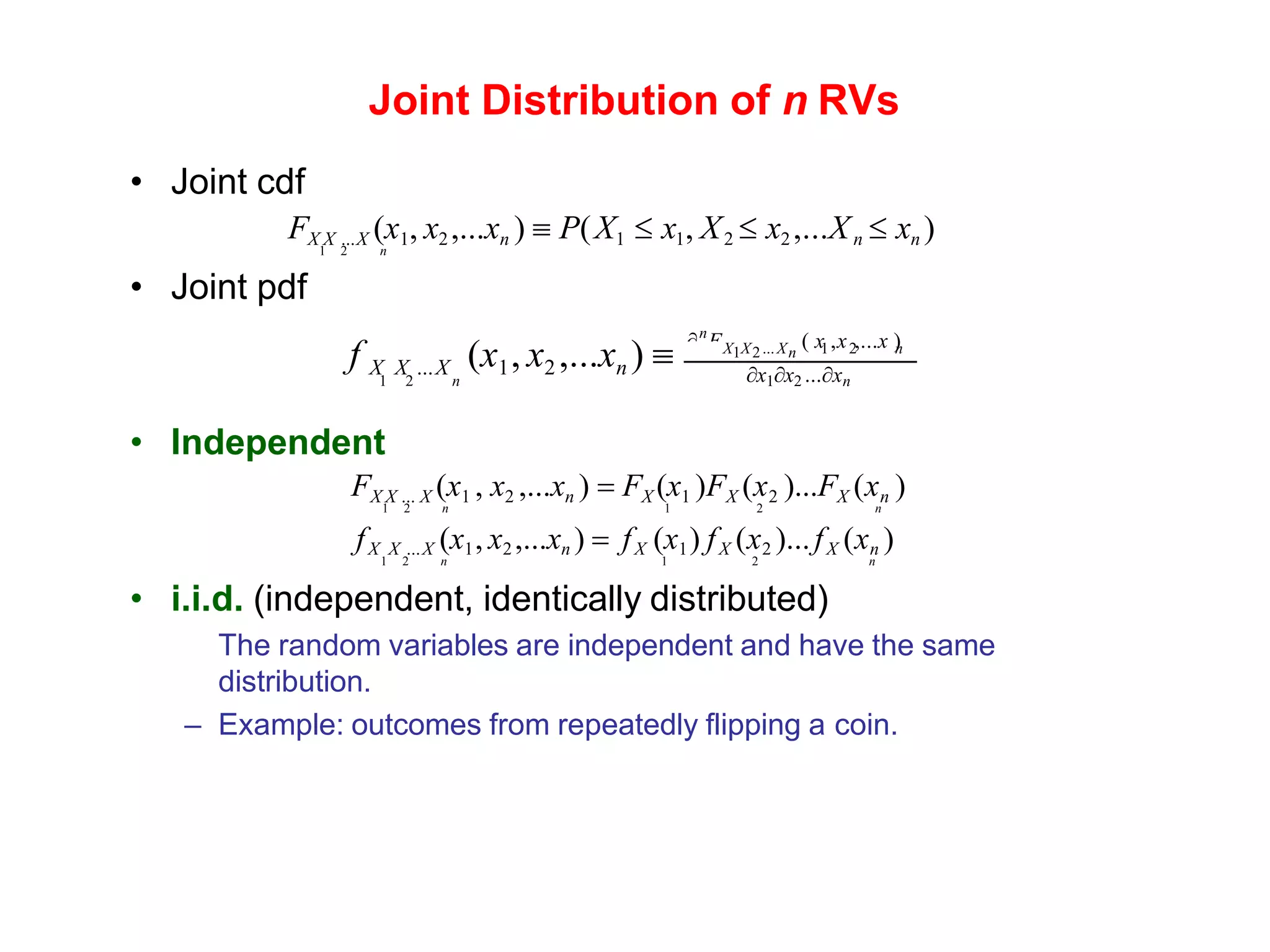

![Joint Distribution](https://image.slidesharecdn.com/probabilityrvlecslides-221130150730-cb972974/75/Probability-RV-Lec-Slides-pdf-30-2048.jpg)

• Joint distribution function for two random variables X and Y

$$F_{XY}(x, y) = P(X \le x,\, Y \le y)$$

• Joint probability density function

$$f_{XY}(x, y) = \frac{\partial^2 F_{XY}(x, y)}{\partial x\, \partial y}$$

• Properties

1) $F_{XY}(\infty, \infty) = \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} f_{XY}(u, v)\, du\, dv = 1$

2) $f_X(x) = \int_{y=-\infty}^{\infty} f_{XY}(x, y)\, dy$

3) $f_Y(y) = \int_{x=-\infty}^{\infty} f_{XY}(x, y)\, dx$

4) X, Y are independent $\Leftrightarrow f_{XY}(x, y) = f_X(x)\, f_Y(y)$

5) X, Y are uncorrelated $\Leftrightarrow E[XY] = E[X]\,E[Y]$

![Joint Distribution](https://image.slidesharecdn.com/probabilityrvlecslides-221130150730-cb972974/75/Probability-RV-Lec-Slides-pdf-31-2048.jpg)
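Properties 4 and 5 of the joint distribution are easy to confuse, since independence implies uncorrelatedness but not the other way round. The sketch below illustrates this with a small discrete example, using a joint probability mass function as a stand-in for the joint density (sums replace the integrals). The choice of X uniform on {-1, 0, 1} with Y = X² and the helper name `marginal` are assumptions for illustration, not part of the slides.

```python
from fractions import Fraction

third = Fraction(1, 3)
# Joint pmf of (X, Y) with X uniform on {-1, 0, 1} and Y = X**2 (assumed example).
joint = {(-1, 1): third, (0, 0): third, (1, 1): third}

def marginal(joint, axis):
    """Sum the joint pmf over the other variable (discrete analogue of properties 2 and 3)."""
    out = {}
    for pair, p in joint.items():
        out[pair[axis]] = out.get(pair[axis], 0) + p
    return out

p_x = marginal(joint, 0)
p_y = marginal(joint, 1)

e_x = sum(x * p for x, p in p_x.items())
e_y = sum(y * p for y, p in p_y.items())
e_xy = sum(x * y * p for (x, y), p in joint.items())

# Property 5: uncorrelated when E[XY] = E[X] E[Y].
uncorrelated = (e_xy == e_x * e_y)

# Property 4: independent when the joint pmf factors into the marginals everywhere.
independent = all(
    joint.get((x, y), 0) == p_x[x] * p_y[y]
    for x in p_x
    for y in p_y
)

print(uncorrelated, independent)
```

Exact fractions are used so that the equality tests are not affected by floating-point rounding. Here Y is completely determined by X, yet the two are uncorrelated.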

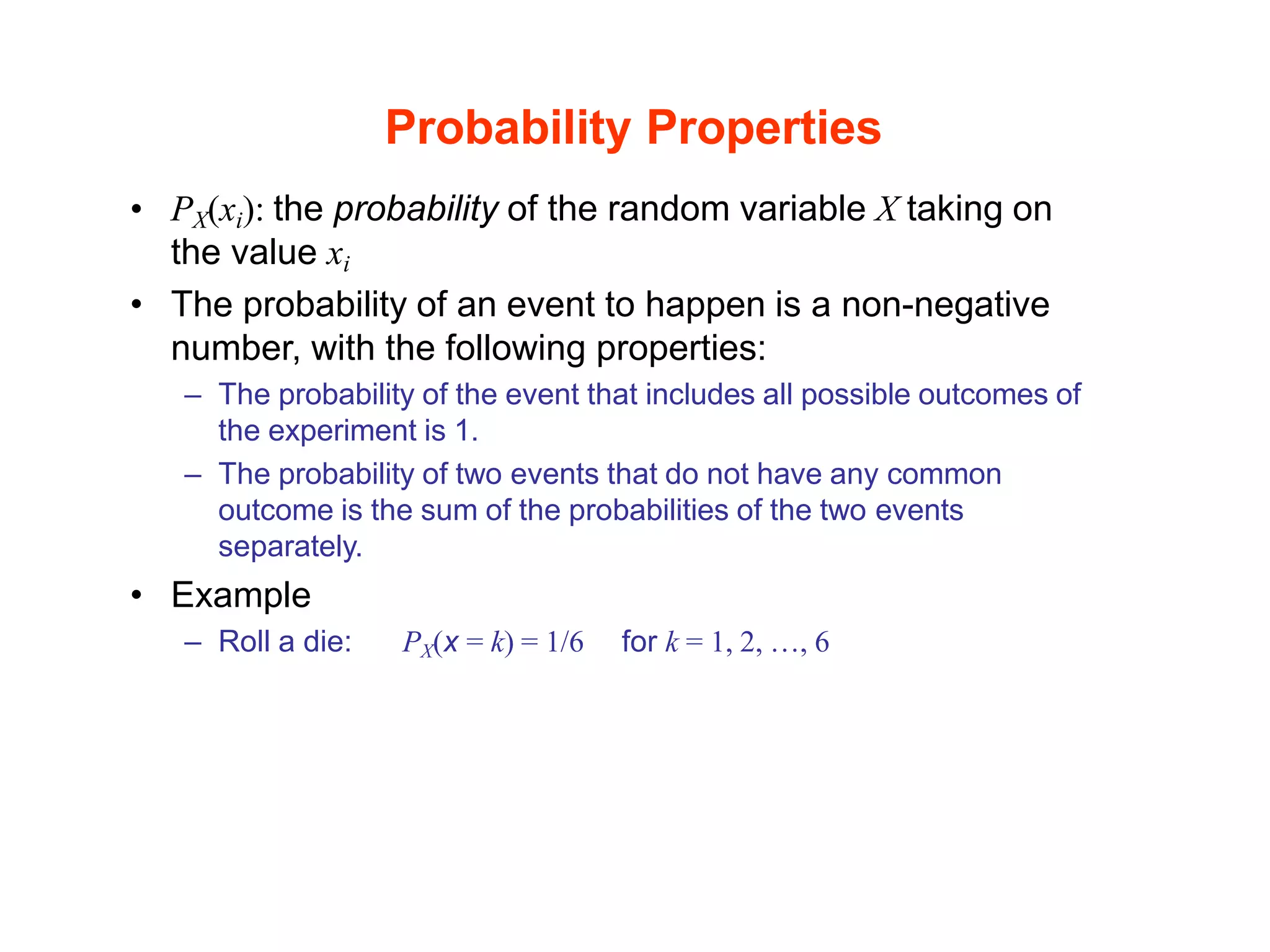

![Power Spectral Density](https://image.slidesharecdn.com/probabilityrvlecslides-221130150730-cb972974/75/Probability-RV-Lec-Slides-pdf-42-2048.jpg)

• Power spectral density (PSD) is a function that measures the distribution of power of a random process with frequency.

• PSD is only defined for stationary processes.

• Wiener-Khinchine relation: The PSD is equal to the Fourier transform of its autocorrelation function:

$$S_X(f) = \int_{-\infty}^{\infty} R_X(\tau)\, e^{-j 2 \pi f \tau}\, d\tau$$

– A similar relation exists for deterministic signals

• Then the average power can be found as

$$P = E[X^2(t)] = R_X(0) = \int_{-\infty}^{\infty} S_X(f)\, df$$

• The frequency content of a process depends on how rapidly the amplitude changes as a function of time.

– This can be measured by the autocorrelation function.
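The Wiener-Khinchine relation and the average-power formula above can be checked numerically for a simple case. The sketch below assumes the autocorrelation R_X(τ) = exp(-a|τ|) with a = 1, an illustrative choice that is not taken from the slides. Its Fourier transform is known in closed form as 2a / (a² + (2πf)²). All function names are hypothetical.

```python
import math

A = 1.0  # decay rate of the assumed autocorrelation (illustrative)

def autocorr(tau, a=A):
    """Assumed autocorrelation R_X(tau) = exp(-a * |tau|)."""
    return math.exp(-a * abs(tau))

def psd_closed_form(f, a=A):
    """Known Fourier transform of exp(-a|tau|): 2a / (a^2 + (2 pi f)^2)."""
    return 2.0 * a / (a * a + (2.0 * math.pi * f) ** 2)

def psd_numeric(f, a=A, tau_max=40.0, n=200_000):
    """Midpoint-rule Fourier transform of R_X at frequency f.

    R_X is even, so the transform reduces to
    2 * integral from 0 to infinity of R_X(tau) * cos(2 pi f tau) d tau.
    """
    d_tau = tau_max / n
    total = 0.0
    for i in range(n):
        tau = (i + 0.5) * d_tau
        total += autocorr(tau, a) * math.cos(2.0 * math.pi * f * tau) * d_tau
    return 2.0 * total

def average_power(f_max=1000.0, n=400_000):
    """Integrate S_X(f) over [-f_max, f_max]; should approach R_X(0)."""
    df = 2.0 * f_max / n
    total = 0.0
    for i in range(n):
        f = -f_max + (i + 0.5) * df
        total += psd_closed_form(f) * df
    return total

psd_errors = [abs(psd_numeric(f) - psd_closed_form(f)) for f in (0.0, 0.5, 2.0)]
power = average_power()

print(psd_errors)
print(power, autocorr(0.0))
```

Because this PSD decays only as 1/f², the power integral is truncated at a large but finite frequency, so the result falls slightly short of R_X(0) = 1.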