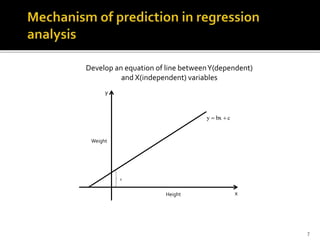

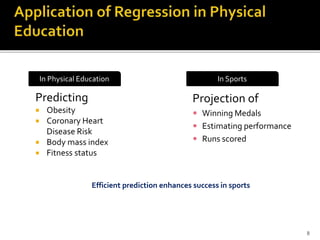

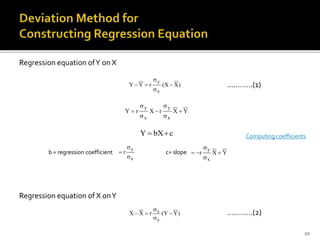

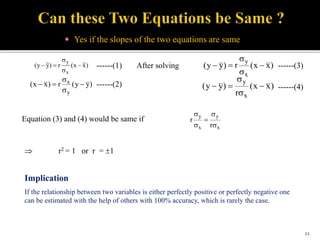

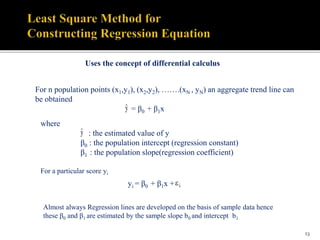

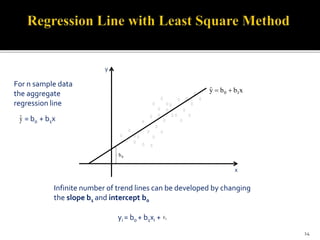

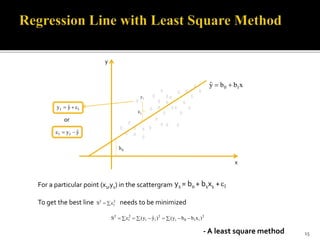

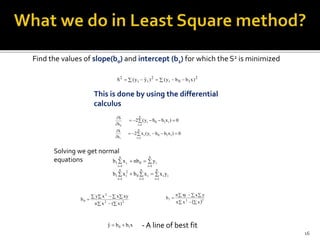

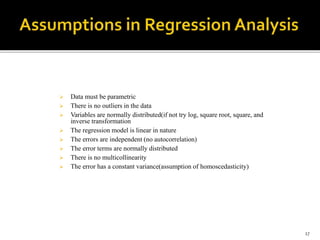

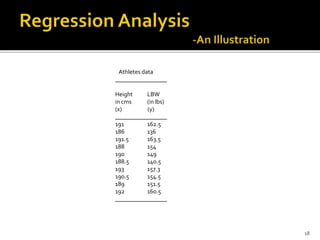

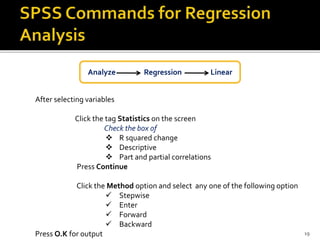

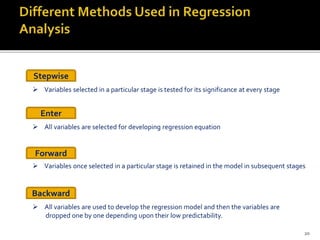

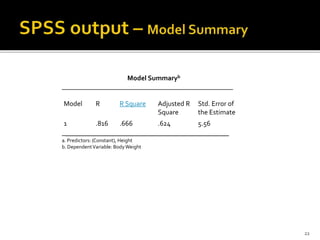

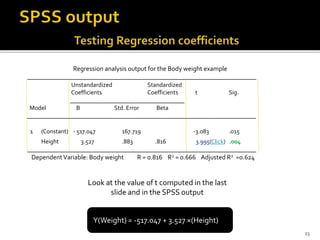

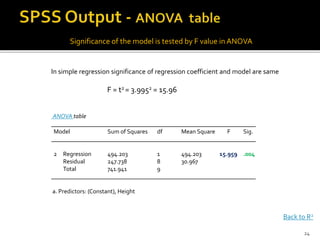

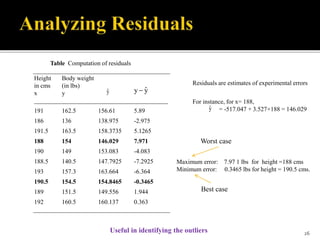

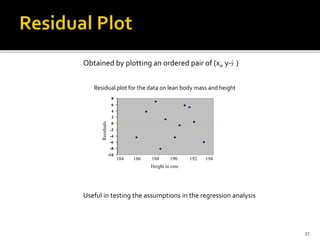

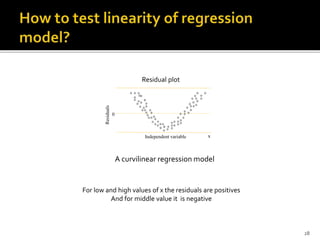

The document is a presentation by Dr. J.P. Verma on regression analysis, based on Chapter 9 of the book 'Sports Research with Analytical Solutions Using SPSS.' It covers how to develop a regression equation and the methods for computing its coefficients. It also explains what the association between the independent and dependent variables implies for prediction in sports analytics. Worked examples use regression models to predict body weight from height.
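The presentation works in SPSS. The Python sketch below only illustrates the standard ordinary least-squares formulas for a simple regression of weight (y) on height (x).

The slope is b = Σ(x - x̄)(y - ȳ) / Σ(x - x̄)², and the intercept is a = ȳ - b·x̄. The correlation r measures the strength of the association.

The function name `fit_simple_regression` and the height/weight values are hypothetical, invented for illustration. They are not taken from the slides.

```python
import math


def fit_simple_regression(x, y):
    """Hypothetical helper: least-squares fit of y = a + b*x, returning (a, b, r)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2 points)")
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    # Sums of squared deviations and of cross-products of deviations
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    syy = sum((yi - y_bar) ** 2 for yi in y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b = sxy / sxx                    # regression coefficient (slope)
    a = y_bar - b * x_bar            # intercept
    r = sxy / math.sqrt(sxx * syy)   # Pearson correlation between x and y
    return a, b, r


# Hypothetical data, made up for illustration only (not from the presentation)
heights_cm = [160, 165, 170, 175, 180]
weights_kg = [55, 58, 62, 66, 69]

a, b, r = fit_simple_regression(heights_cm, weights_kg)
print(f"weight = {a:.2f} + {b:.2f} * height  (r = {r:.4f})")
print(f"predicted weight at 172 cm: {a + b * 172:.2f} kg")
```

With these invented numbers, the deviations give Σ(x - x̄)(y - ȳ) = 180 and Σ(x - x̄)² = 250. The slope is therefore b = 0.72 kg per cm, the intercept is a = 62 - 0.72 × 170 = -60.4, and the predicted weight for a 172 cm athlete is 63.44 kg. Any real analysis should use measured data and check the model's assumptions, as SPSS output does.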