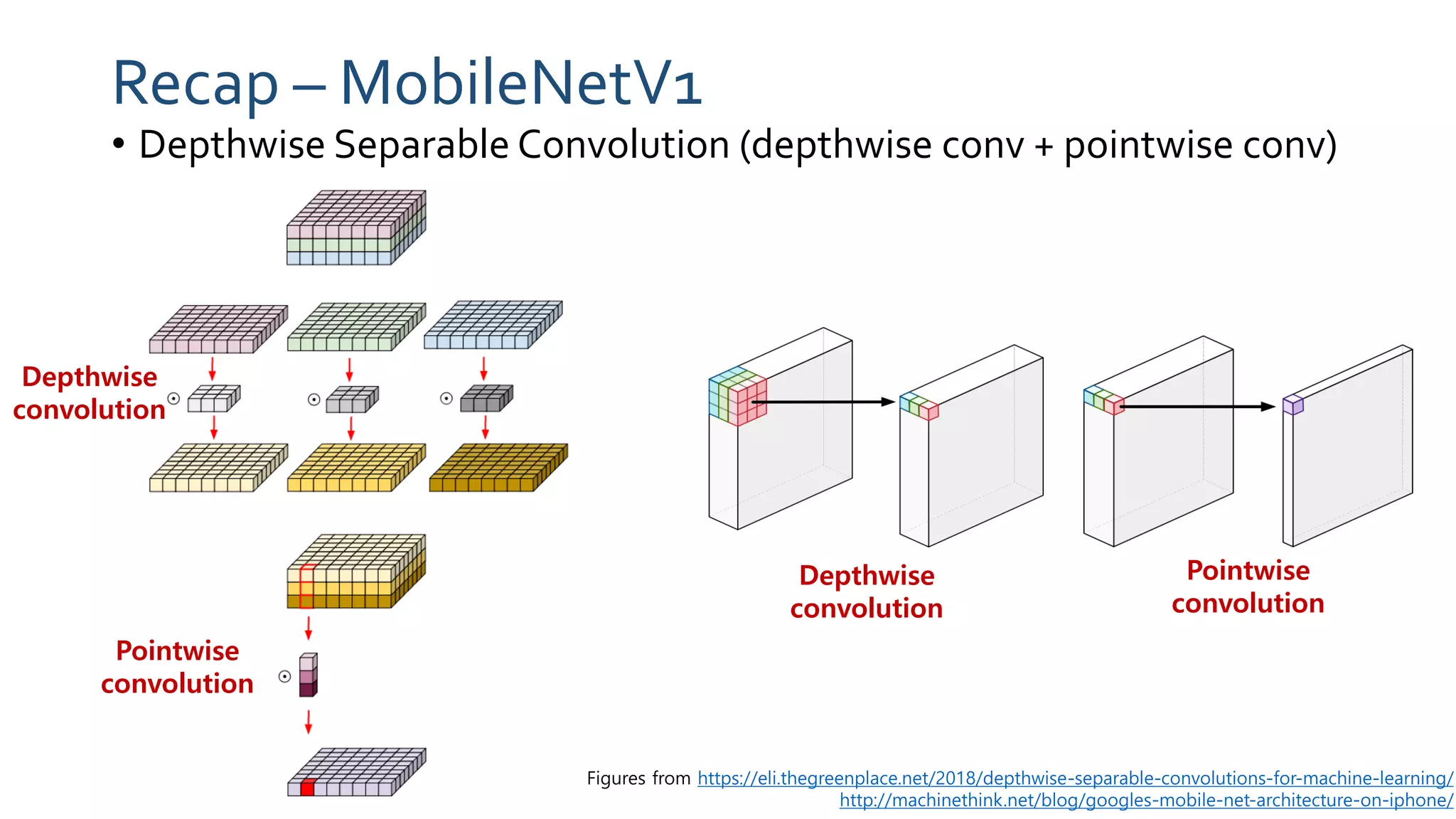

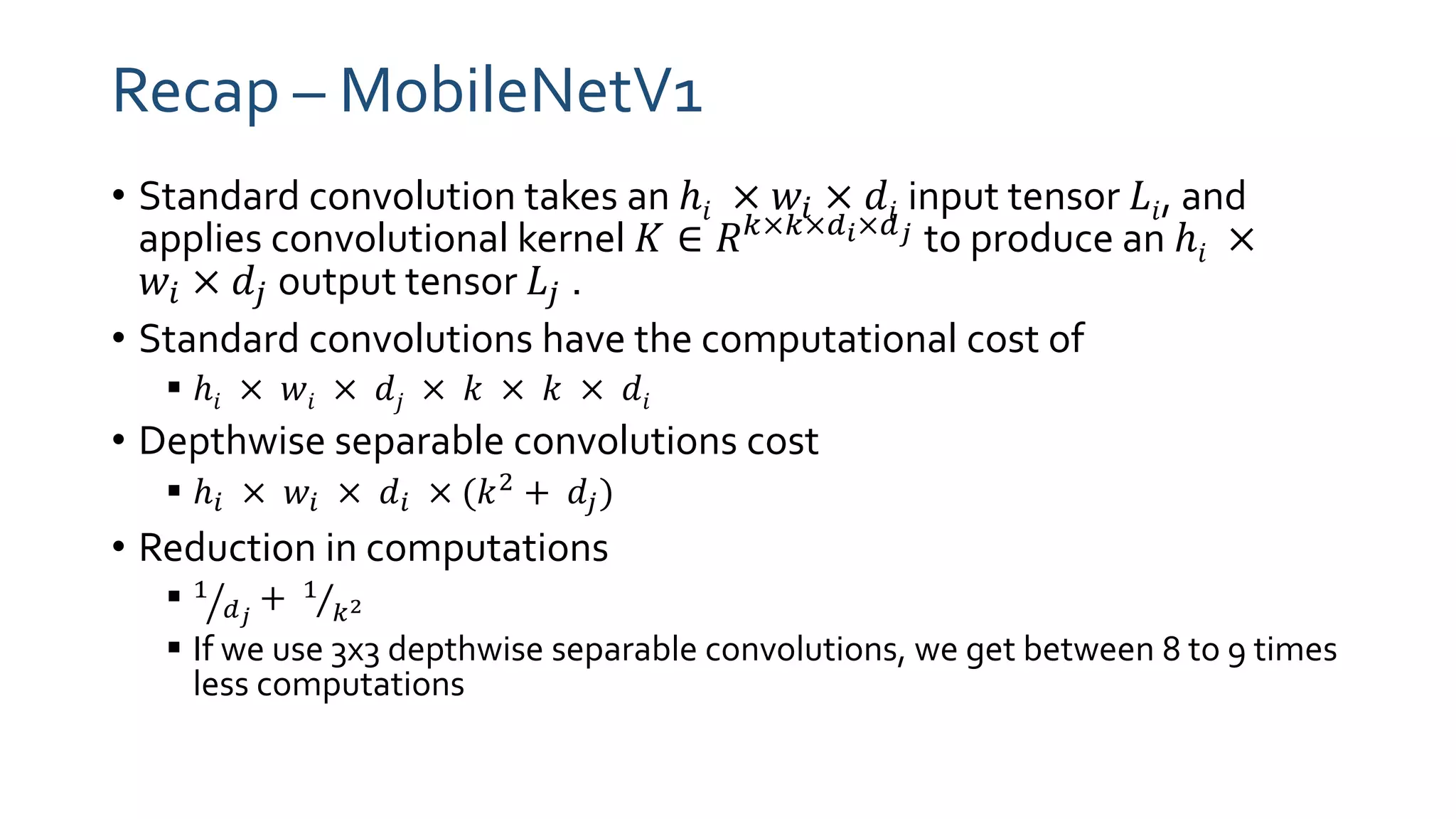

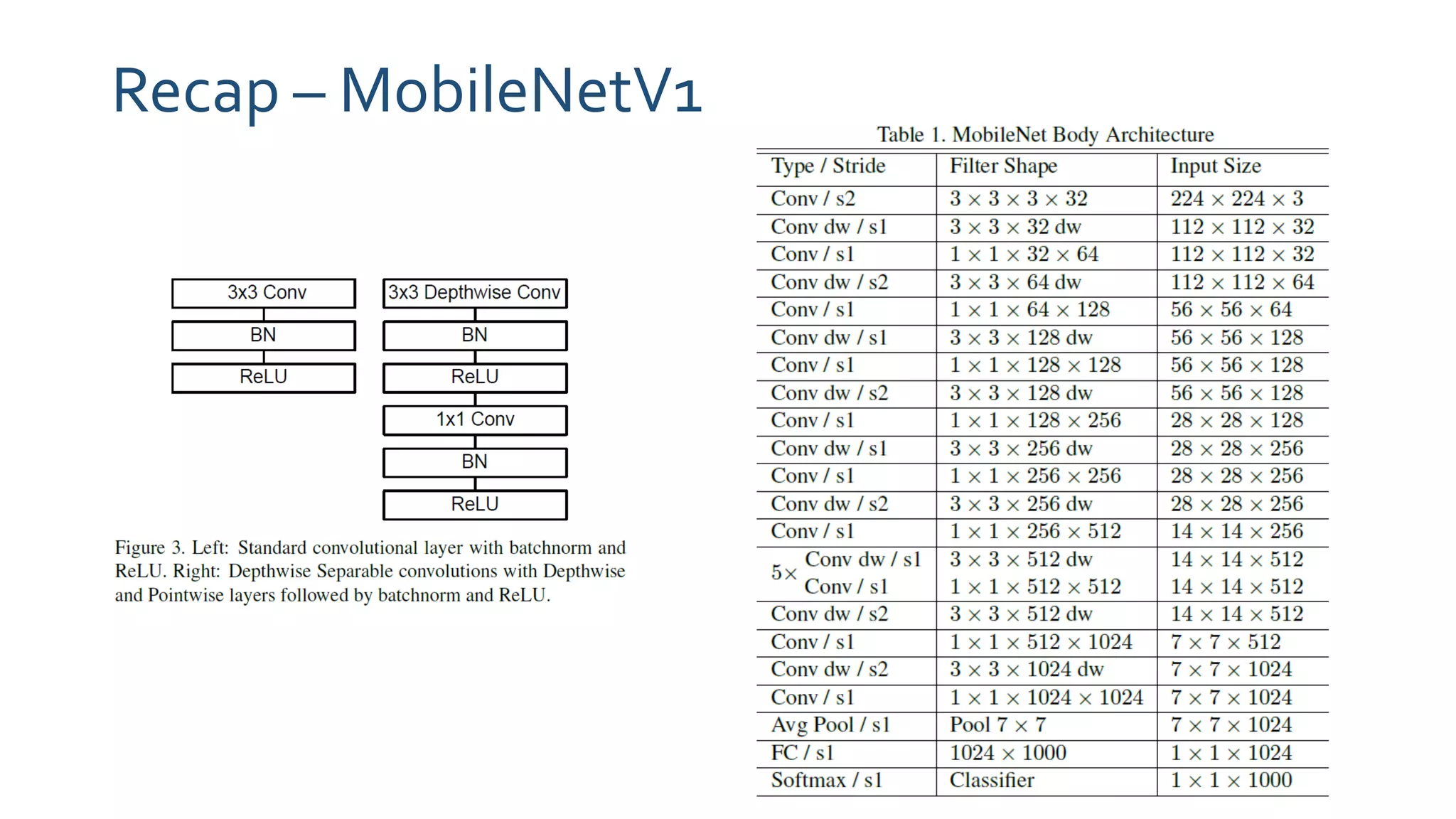

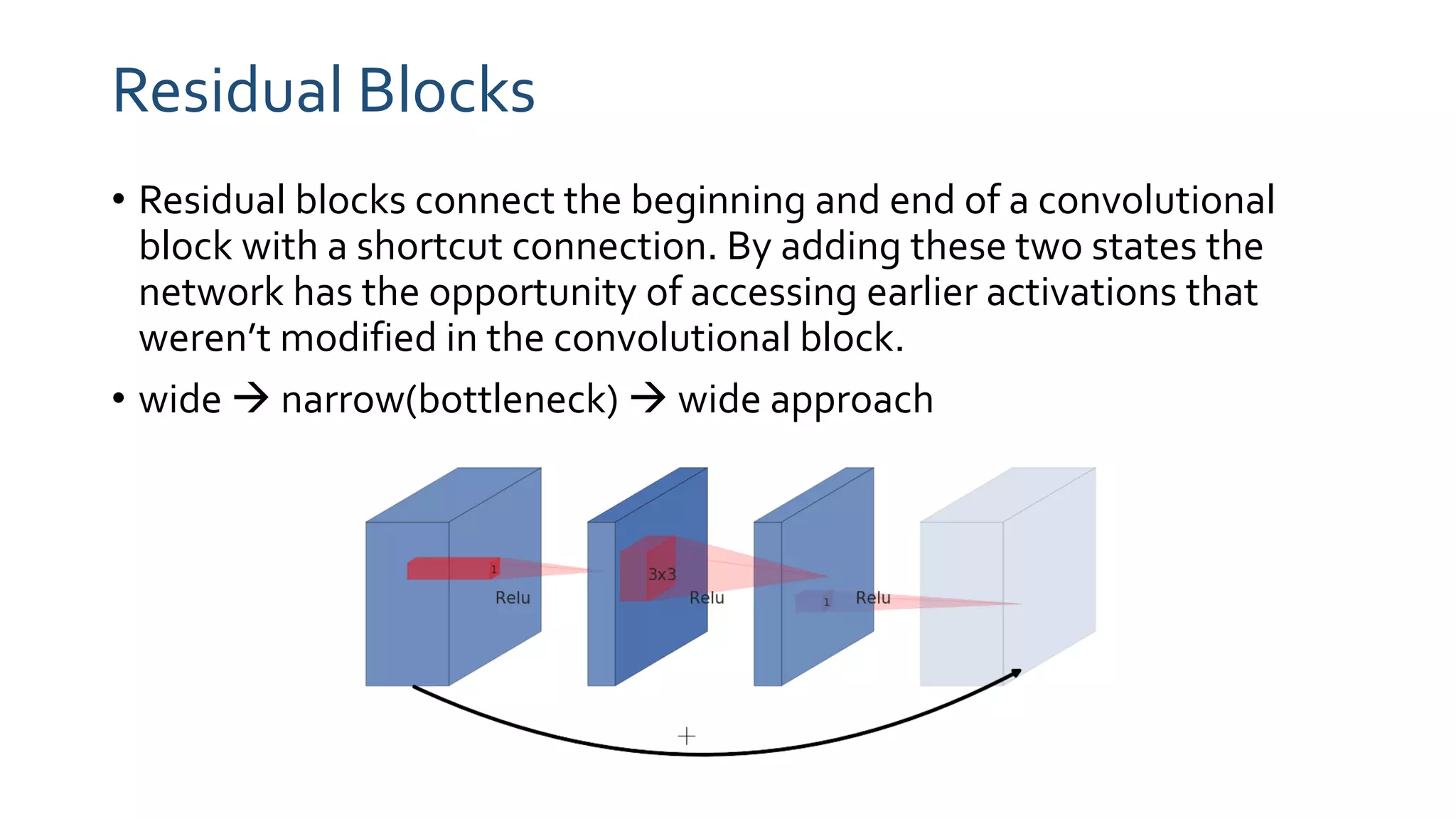

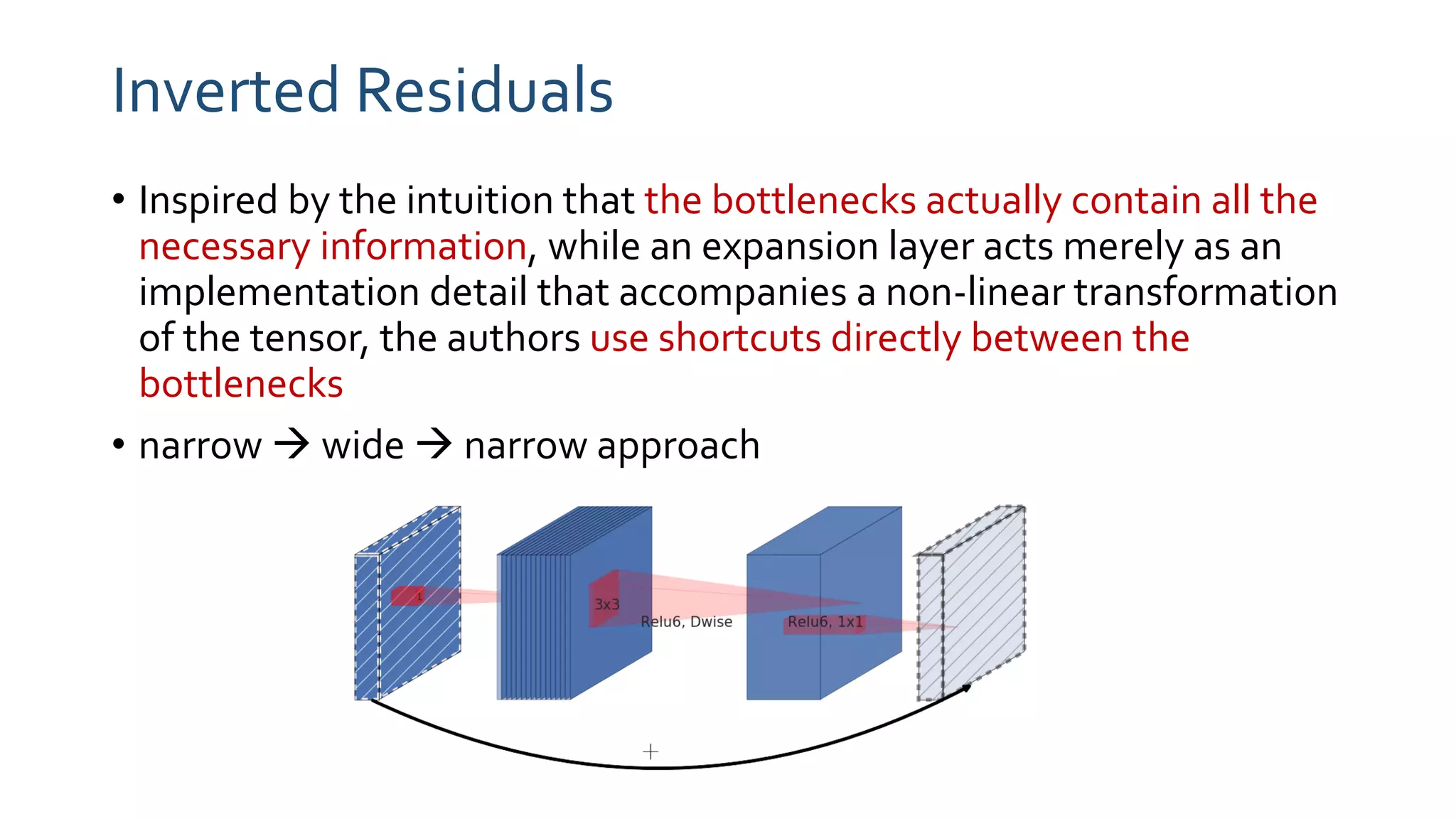

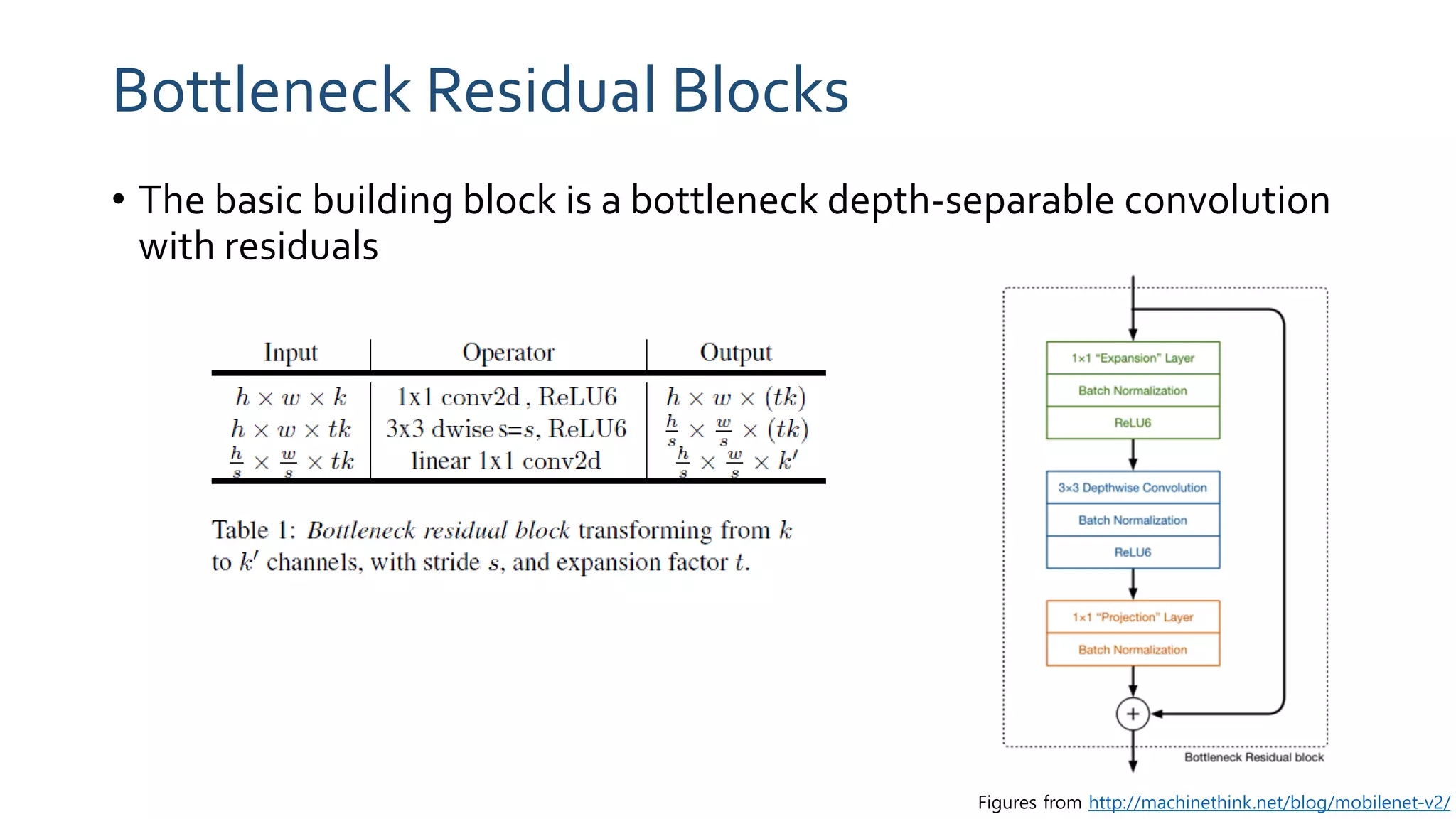

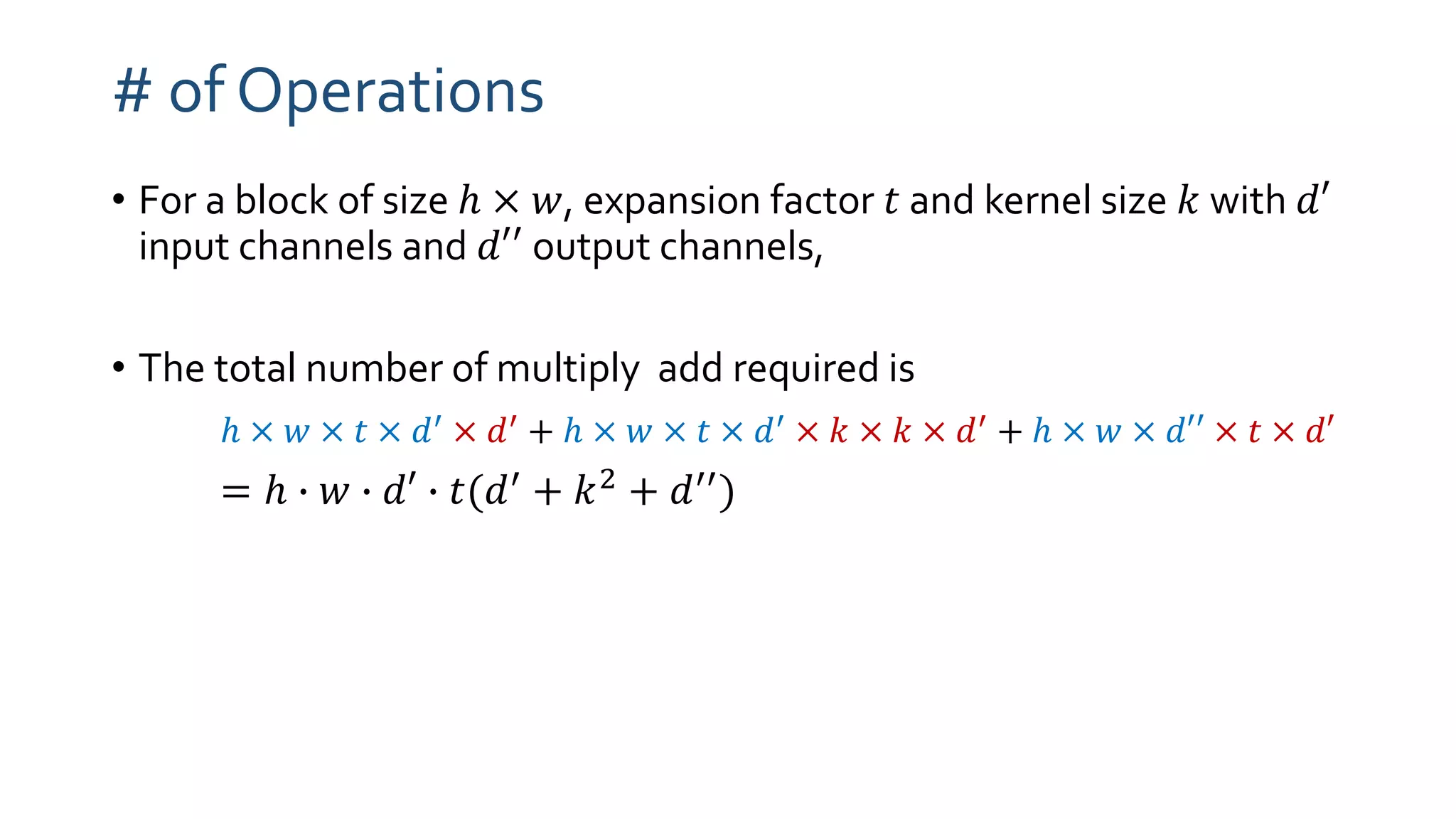

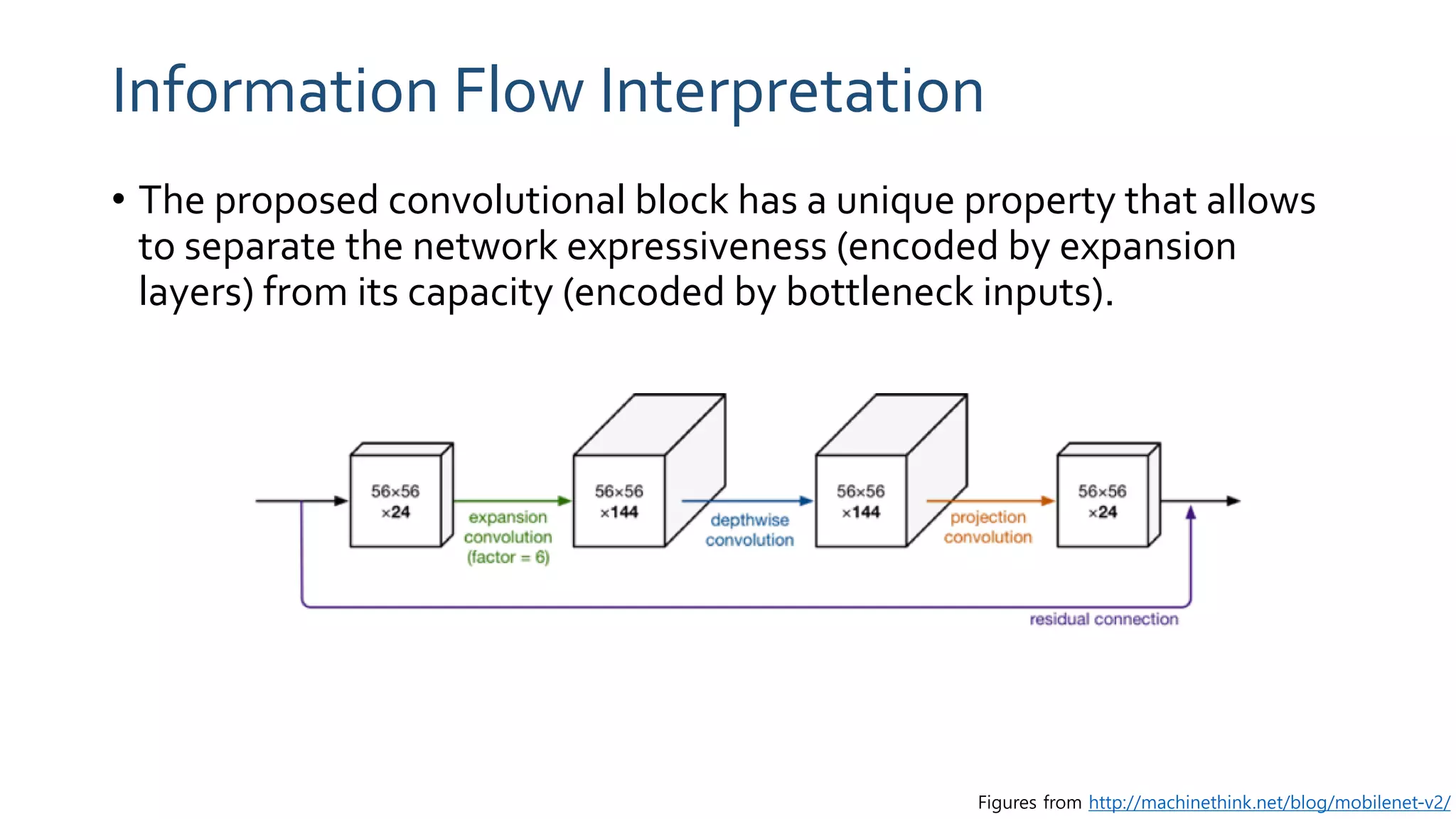

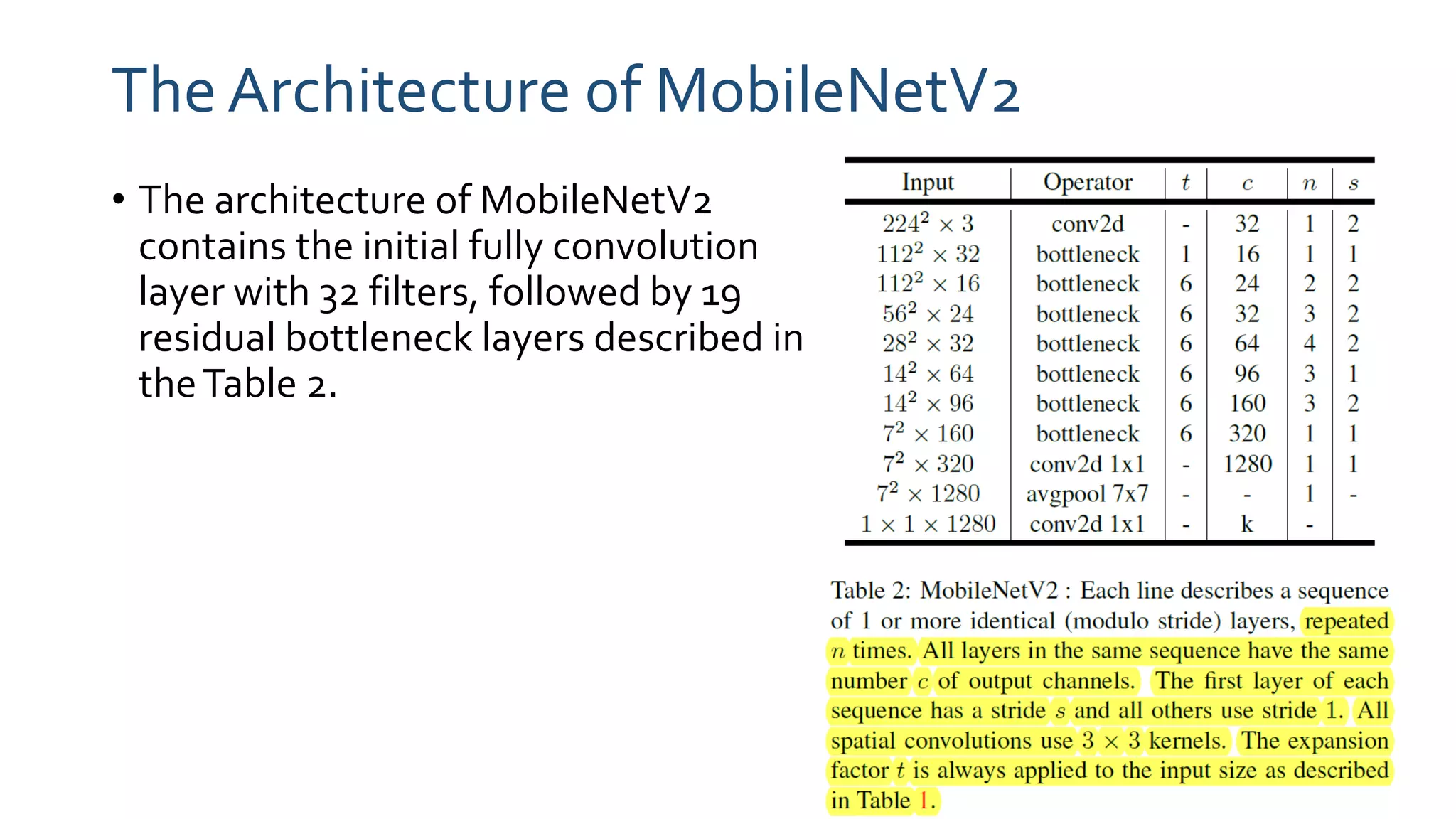

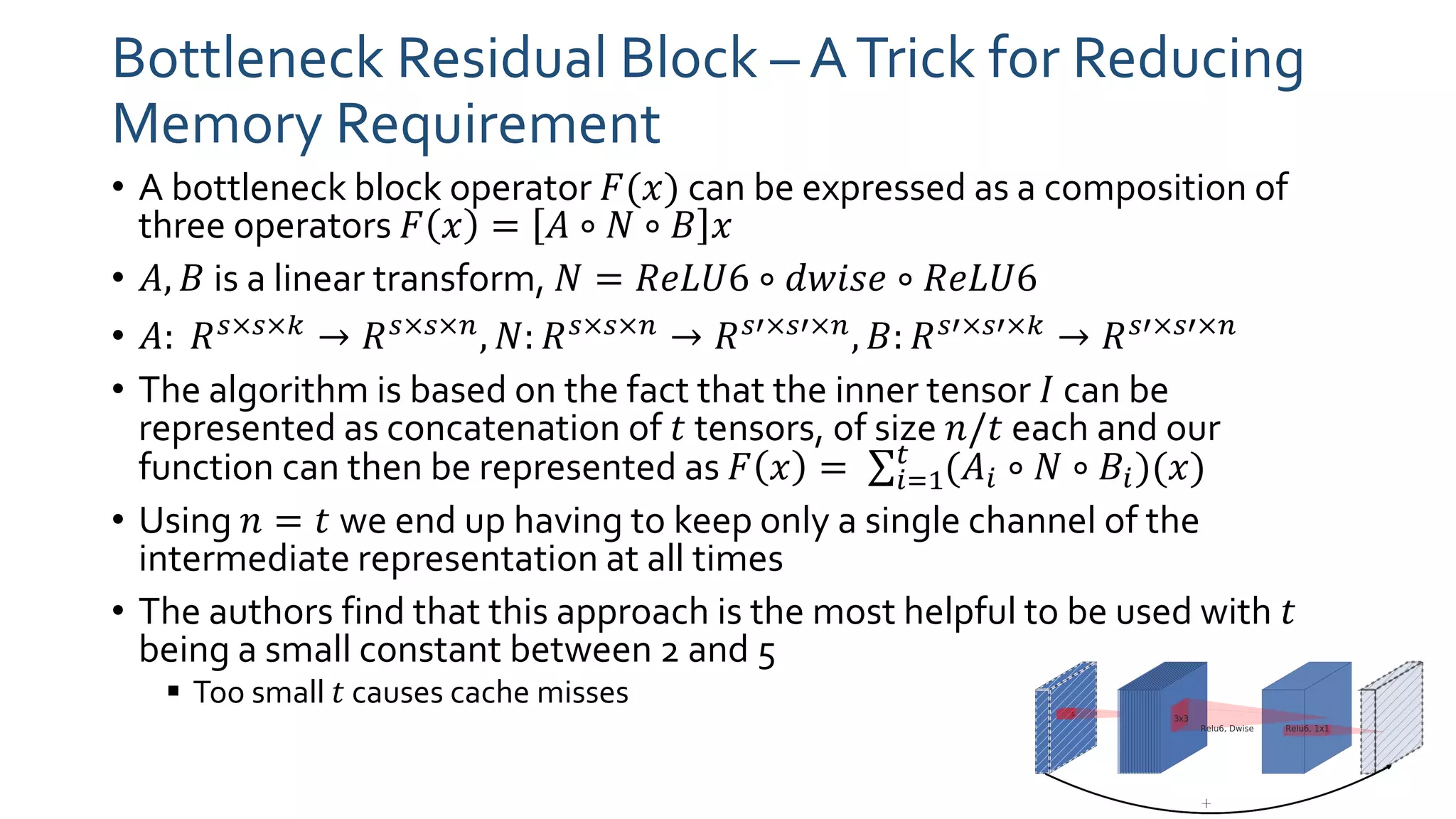

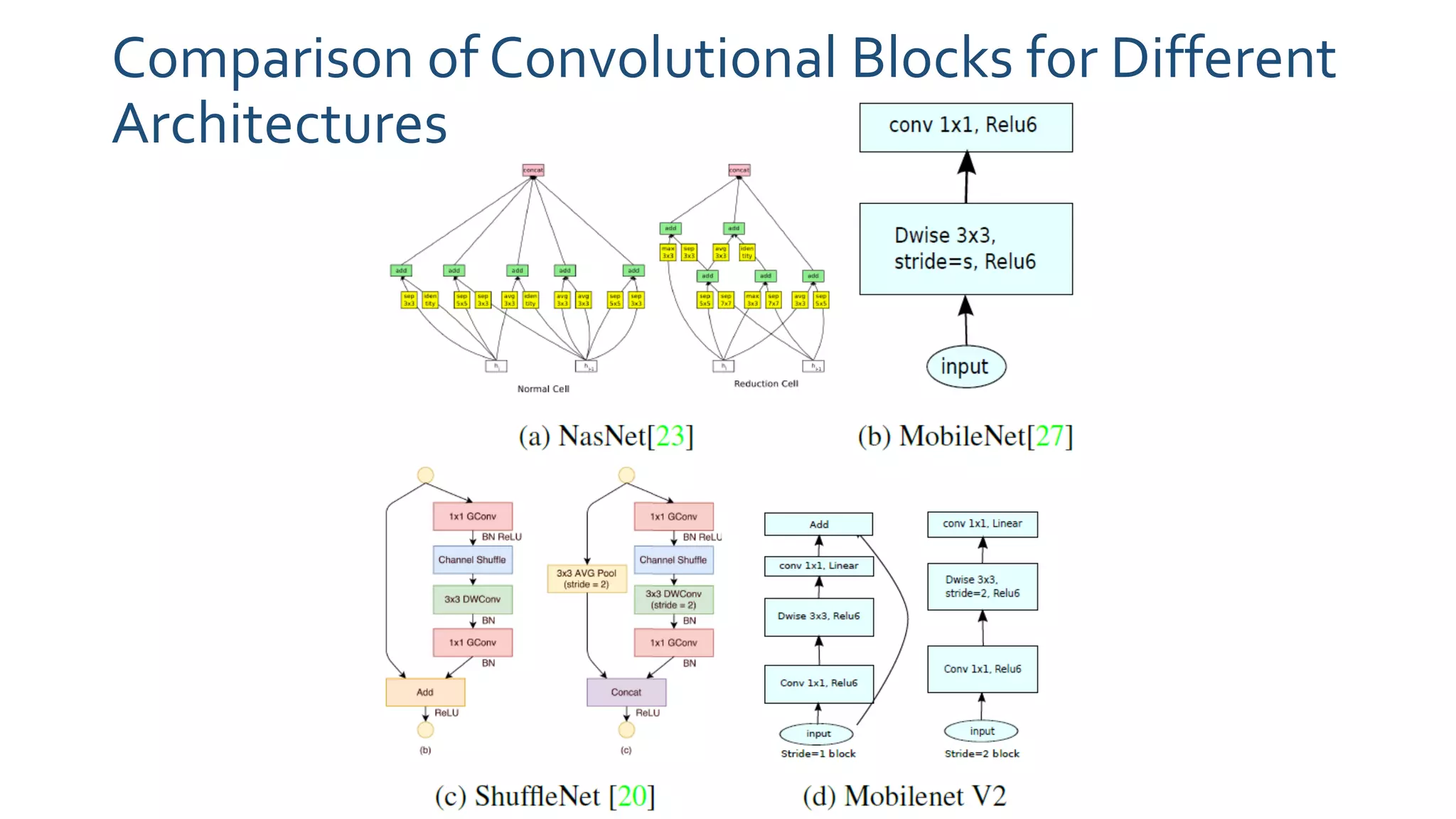

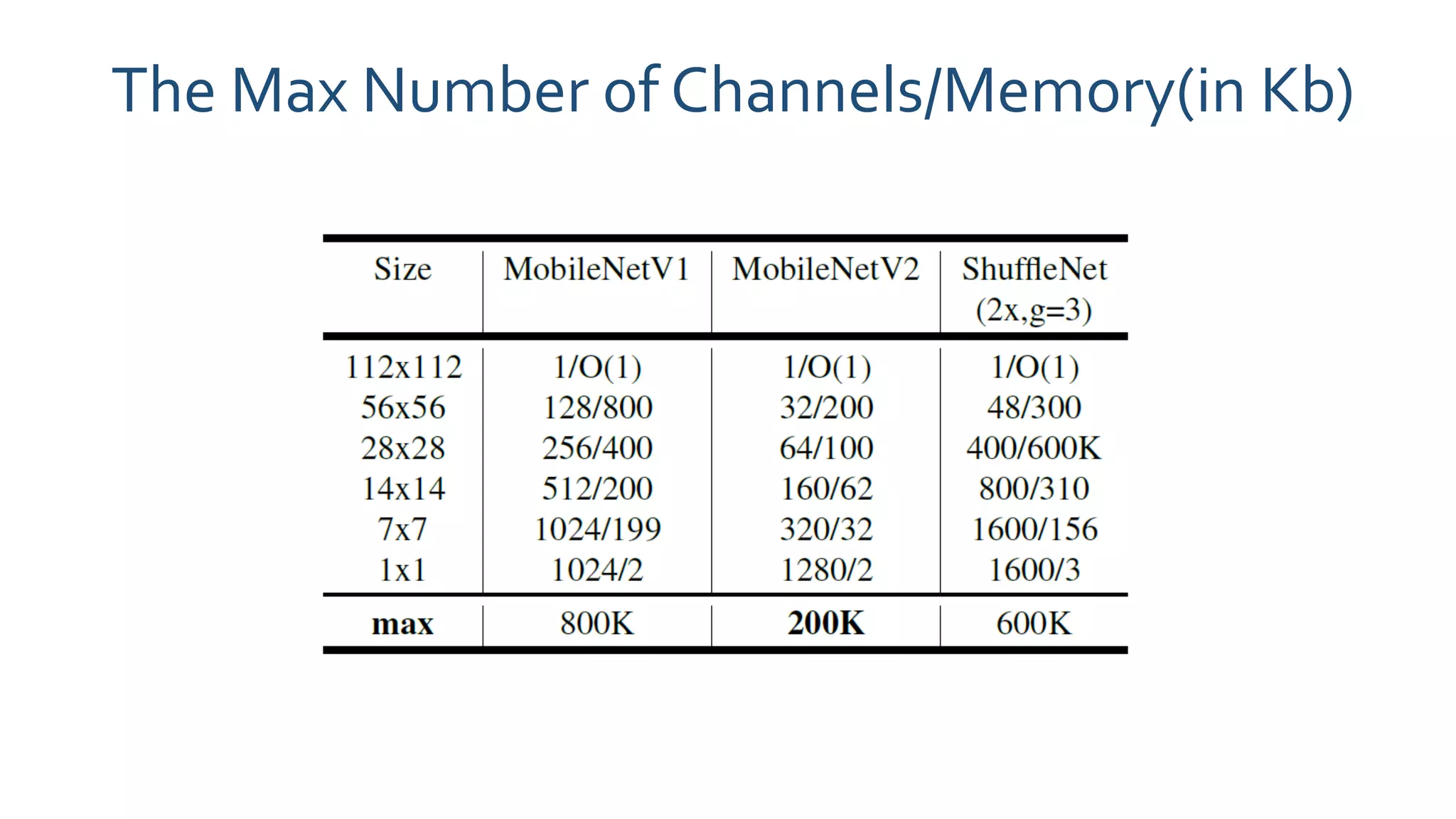

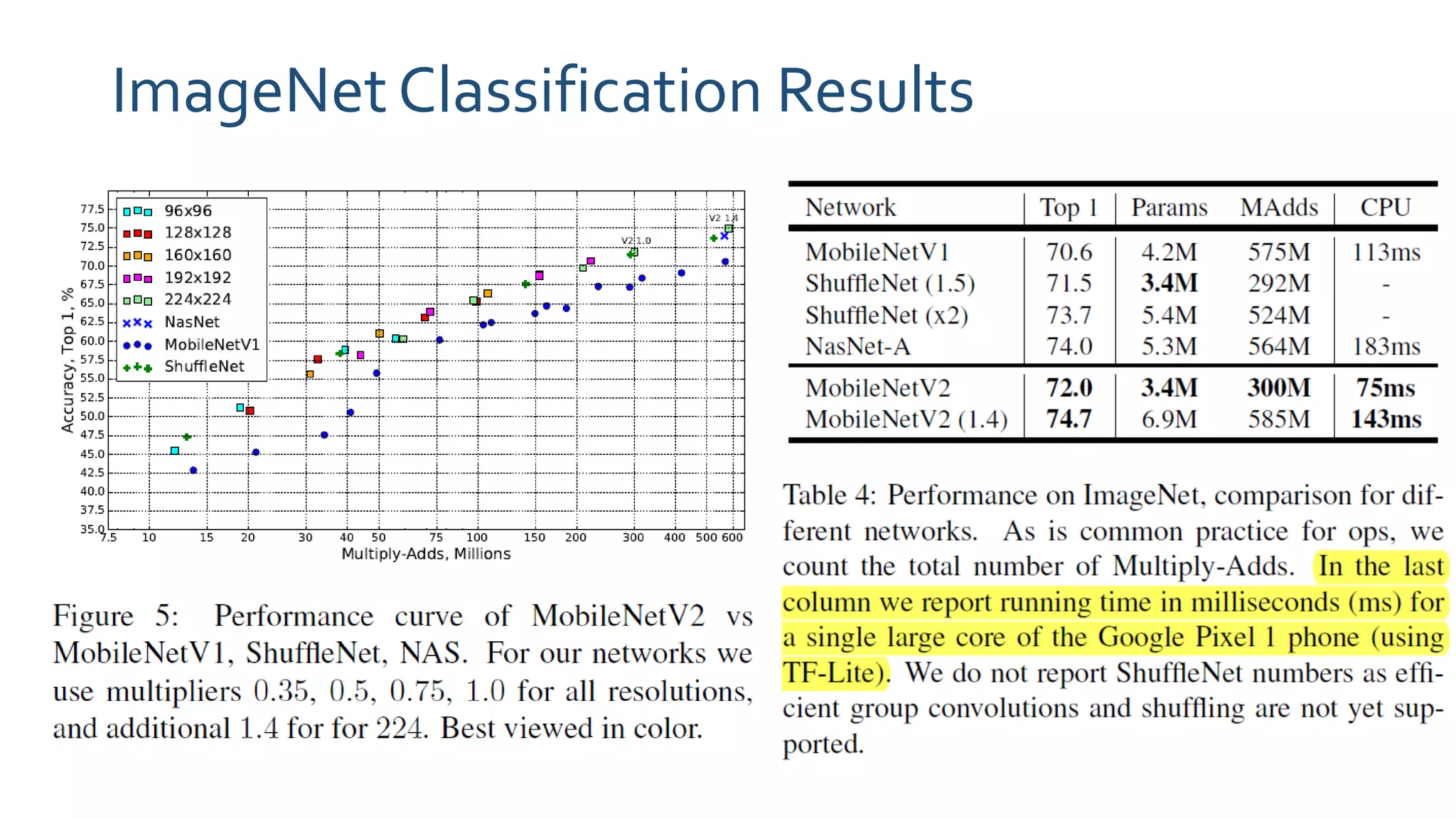

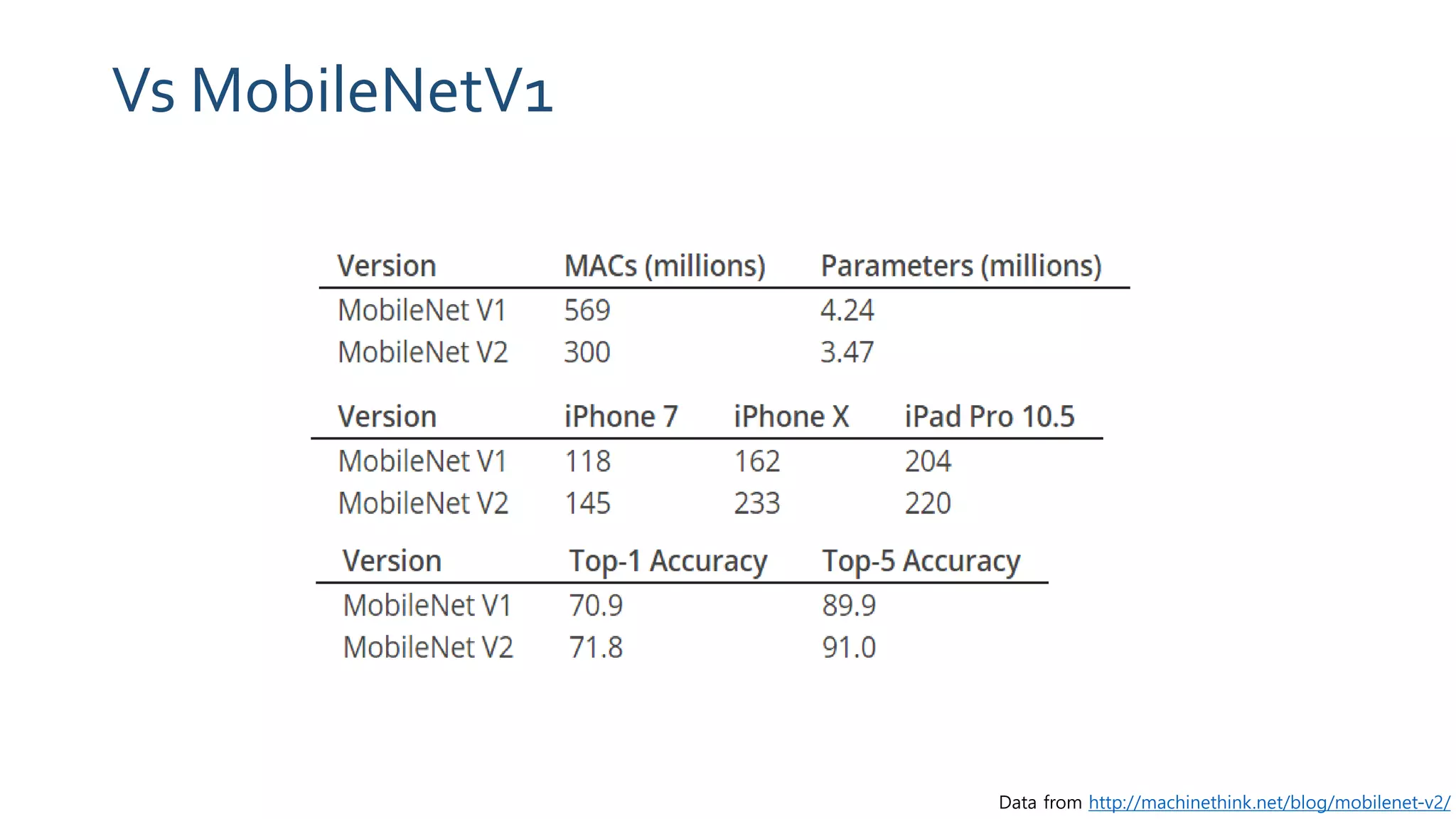

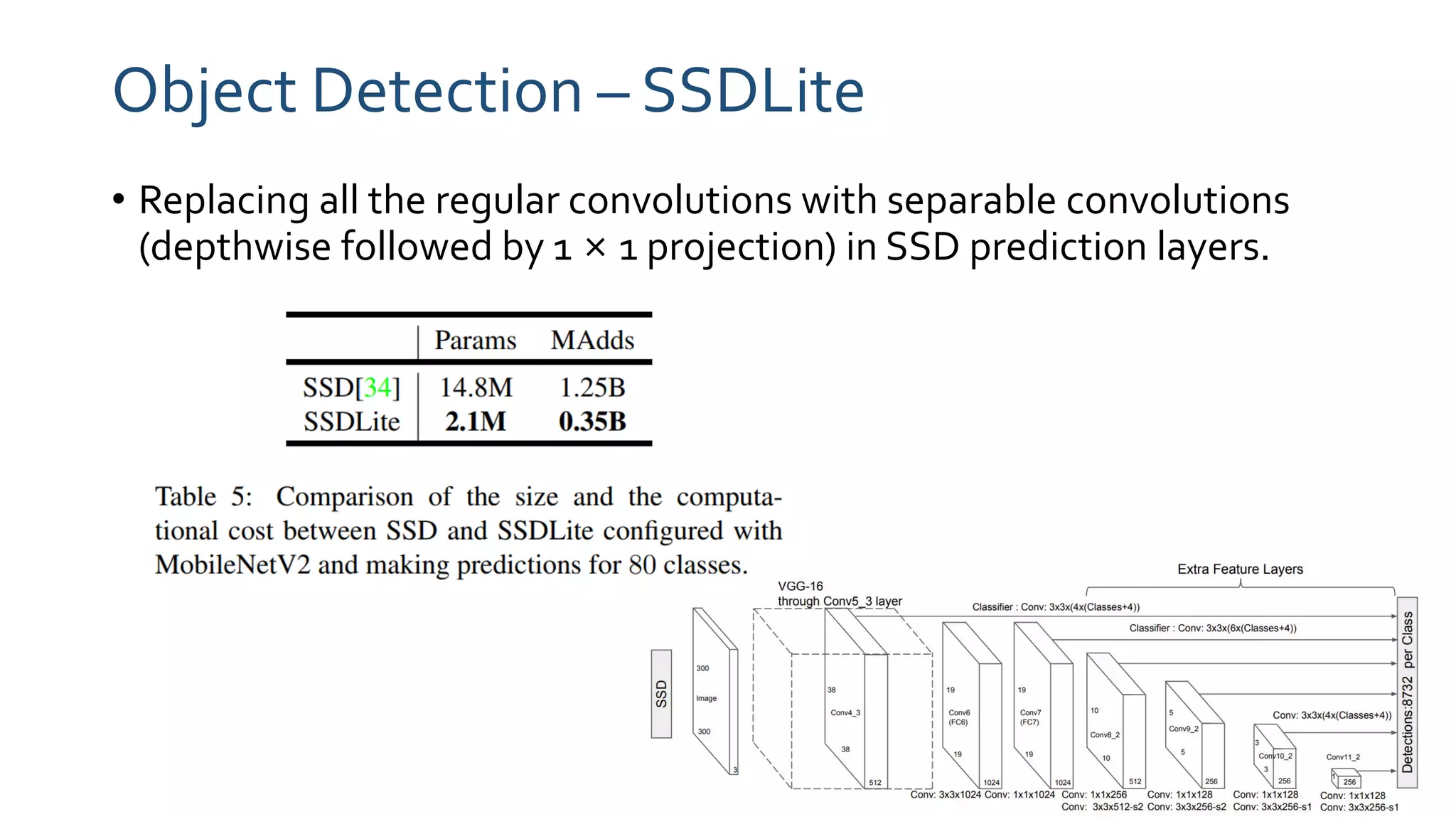

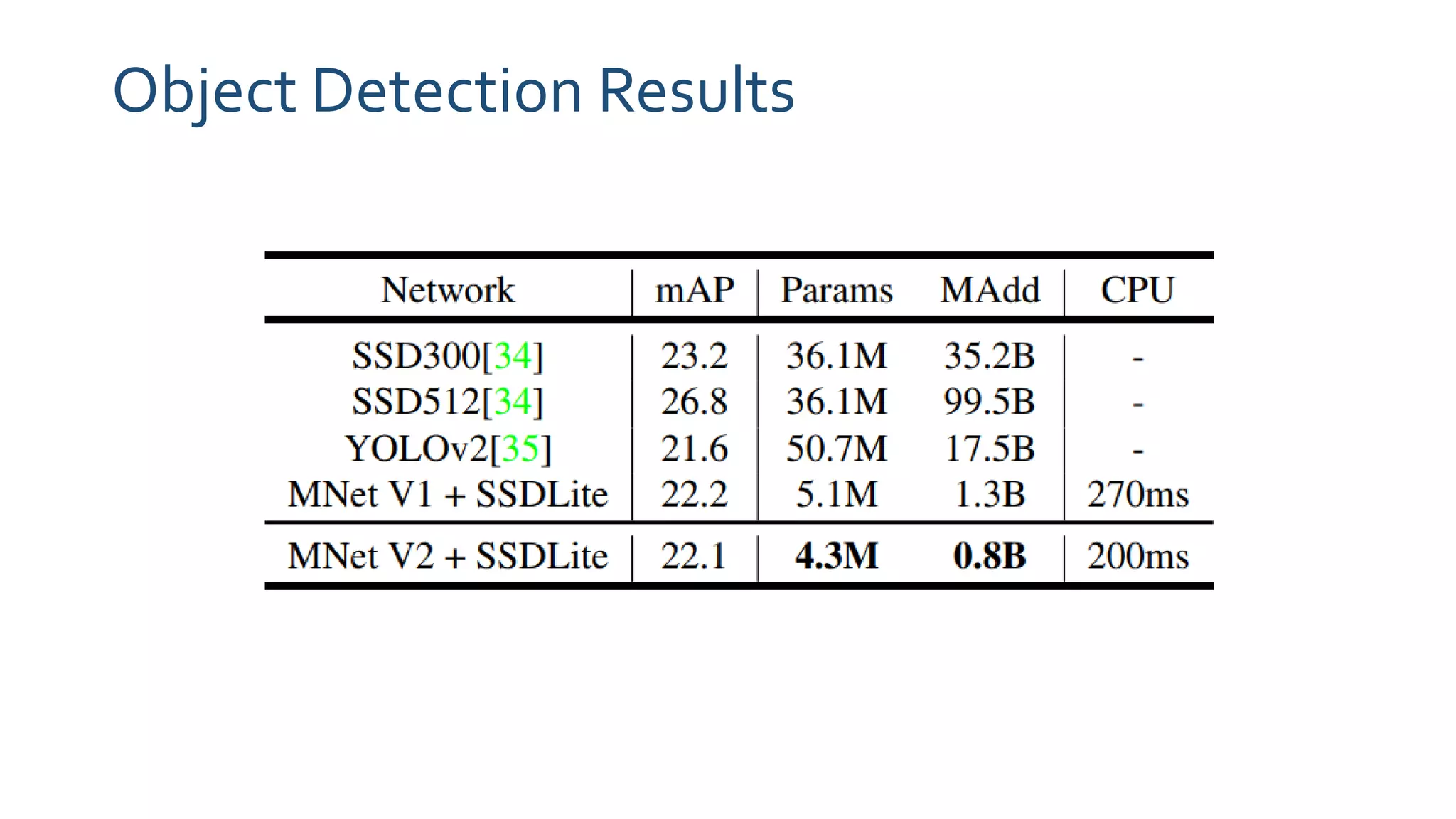

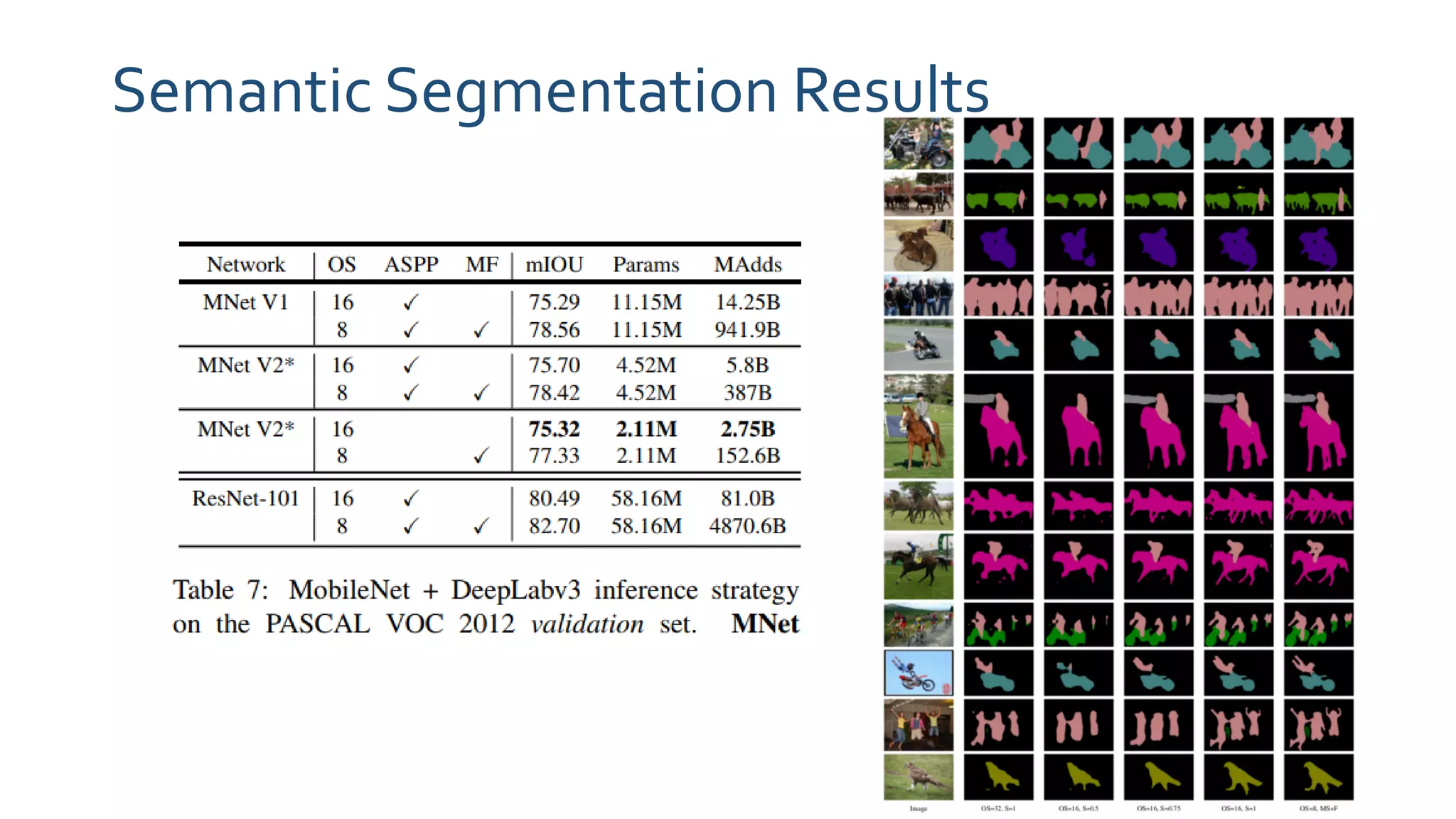

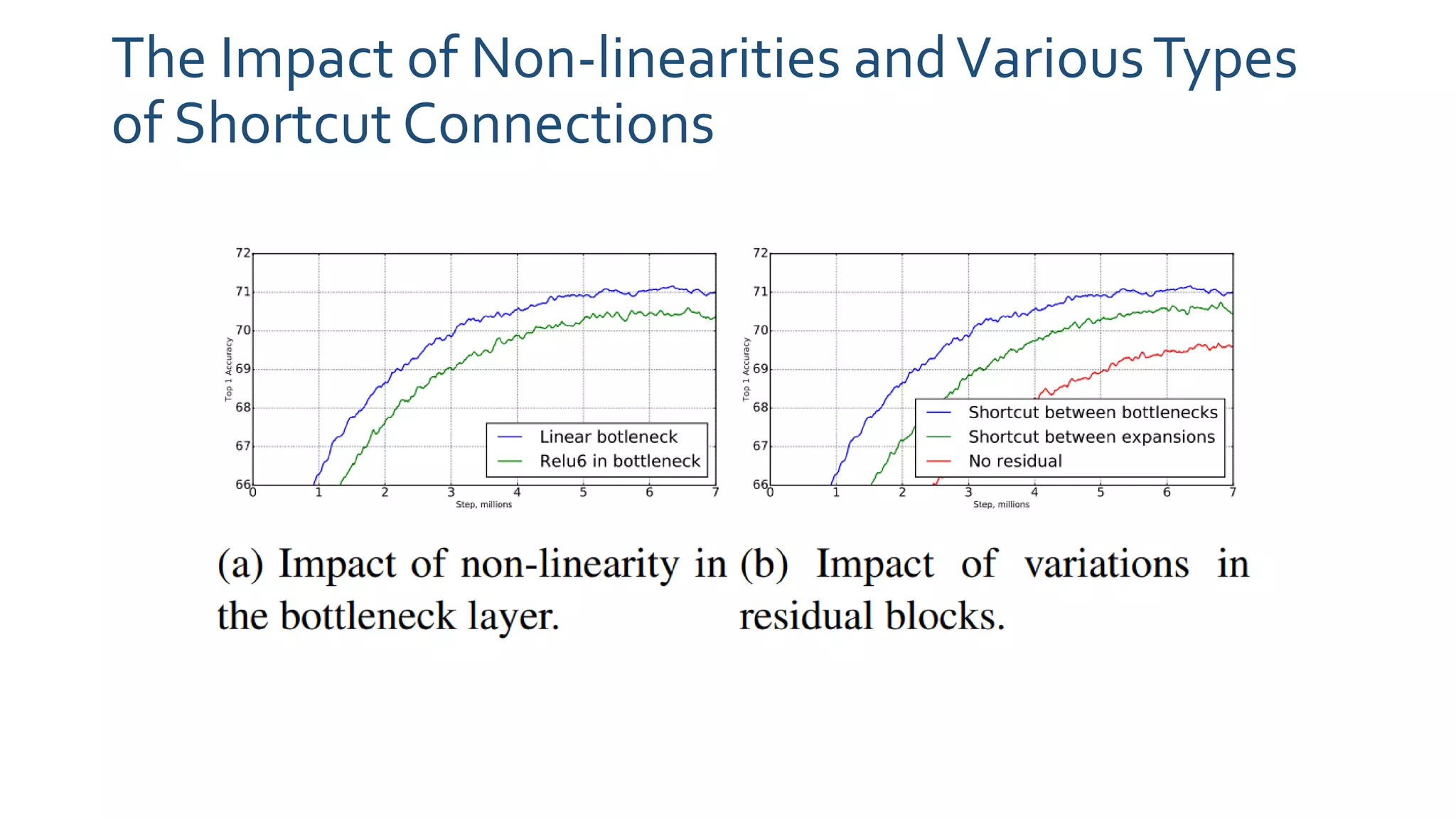

The paper 'MobileNetV2: Inverted Residuals and Linear Bottlenecks' presents a neural network architecture designed for mobile and embedded devices. It builds on depthwise separable convolutions and adds two ideas: linear bottlenecks, which omit the non-linearity on the narrow output of each block, and inverted residuals, which place the shortcut connections between the narrow bottleneck layers rather than the expanded ones. Together these reduce computational cost and memory use during inference while maintaining accuracy. The network consists of an initial fully convolutional layer followed by 19 residual bottleneck layers, and the paper also evaluates it as a feature extractor for object detection and semantic segmentation.
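To make the efficiency argument concrete, the following is a minimal sketch that counts multiply-adds for a standard convolution, a depthwise separable convolution, and an inverted residual bottleneck block. The function names are hypothetical, and the sketch assumes stride 1, an unchanged spatial size, and no bias terms. The 56x56x64 layer is an arbitrary illustrative size, not a specific layer taken from the paper.

```python
def standard_conv_madds(h, w, c_in, c_out, k=3):
    """Multiply-adds for a k x k standard convolution on an h x w feature map."""
    return h * w * c_in * c_out * k * k


def depthwise_separable_madds(h, w, c_in, c_out, k=3):
    """Multiply-adds for a k x k depthwise conv followed by a 1x1 pointwise conv."""
    depthwise = h * w * c_in * k * k   # one k x k filter per input channel
    pointwise = h * w * c_in * c_out   # 1x1 conv mixes channels
    return depthwise + pointwise


def inverted_residual_madds(h, w, c_in, c_out, t=6, k=3):
    """Multiply-adds for expand (1x1) -> depthwise (k x k) -> linear project (1x1).

    t is the expansion factor; stride 1 is assumed, so h and w do not change.
    """
    hidden = t * c_in                      # width of the expanded representation
    expand = h * w * c_in * hidden         # 1x1 expansion
    depthwise = h * w * hidden * k * k     # depthwise conv on the expanded tensor
    project = h * w * hidden * c_out       # 1x1 linear projection (no non-linearity)
    return expand + depthwise + project


if __name__ == "__main__":
    # Illustrative layer size (an assumption, chosen only for the comparison).
    h, w, c_in, c_out = 56, 56, 64, 64
    std = standard_conv_madds(h, w, c_in, c_out)
    sep = depthwise_separable_madds(h, w, c_in, c_out)
    inv = inverted_residual_madds(h, w, c_in, c_out)
    print(f"standard conv:        {std:,}")
    print(f"depthwise separable:  {sep:,}")
    print(f"inverted residual:    {inv:,}")
    print(f"standard / separable: {std / sep:.2f}x")
```

For a 3x3 kernel the depthwise separable form costs roughly 8 to 9 times less than the standard convolution, which matches the ratio `k*k*c_out / (k*k + c_out)` that the two formulas imply. The inverted residual block costs more than a single separable convolution at the same input width because it operates on a tensor expanded by the factor `t`. Its input and output stay narrow, which is the source of the memory savings.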