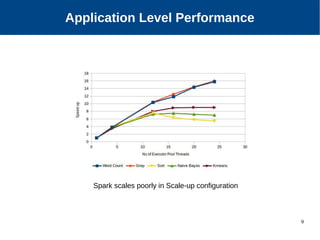

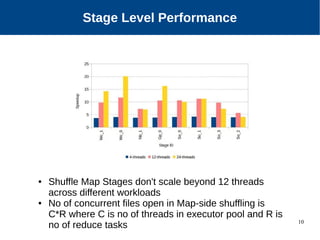

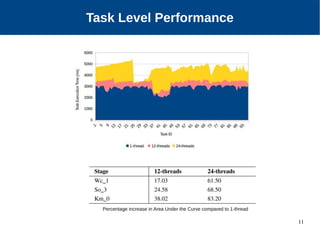

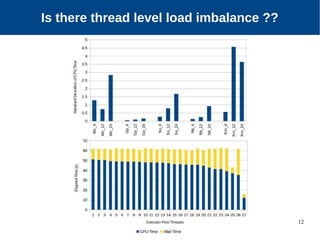

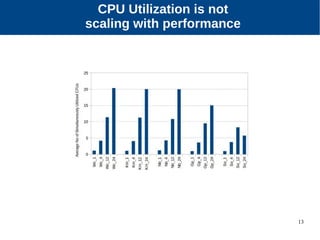

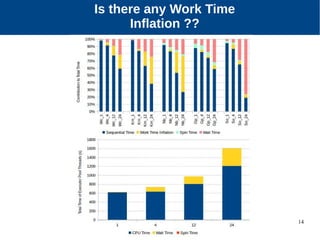

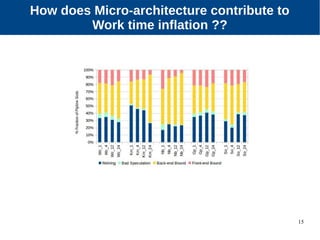

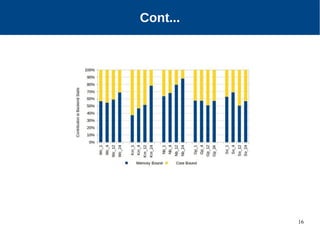

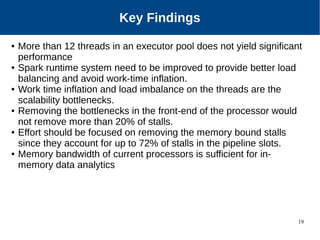

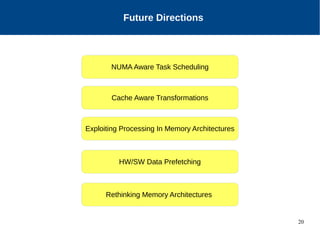

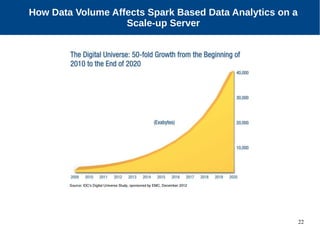

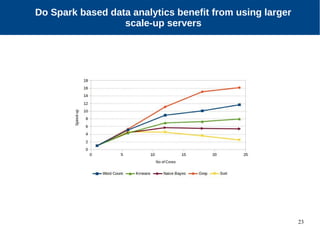

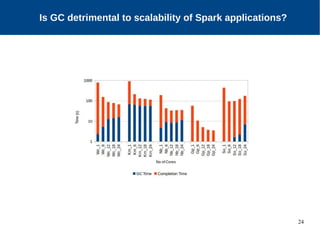

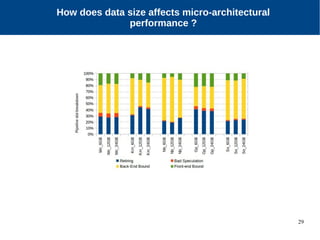

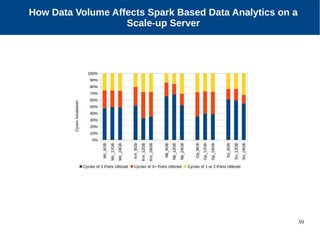

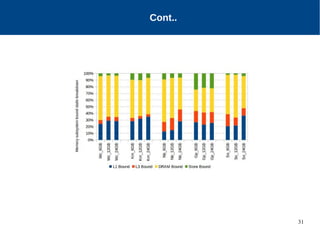

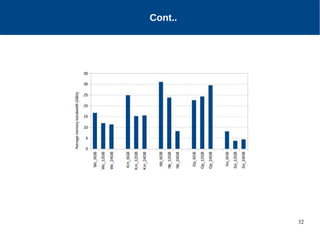

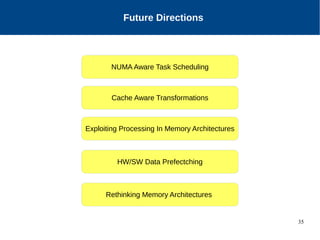

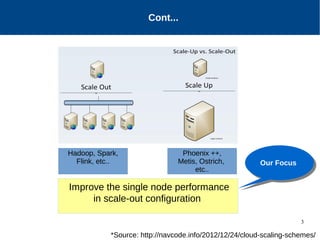

This presentation characterizes the performance of in-memory data analytics on a modern cloud server, with the goal of improving single-node performance in scale-out configurations. The key findings are that Spark's scalability is limited by load imbalance and work time inflation, and that growing the executor pool beyond 12 threads yields no significant performance gain. Proposed future directions include improving task scheduling and optimizing the memory architecture to address the identified bottlenecks.

![Slide 4 (Progress Meeting 12-12-14): Which Scale-out Framework? Picture courtesy: Amir H. Payberah](https://image.slidesharecdn.com/bdcloudpresentation-160205170024/85/Performance-Characterization-of-In-Memory-Data-Analytics-on-a-Modern-Cloud-Server-4-320.jpg)