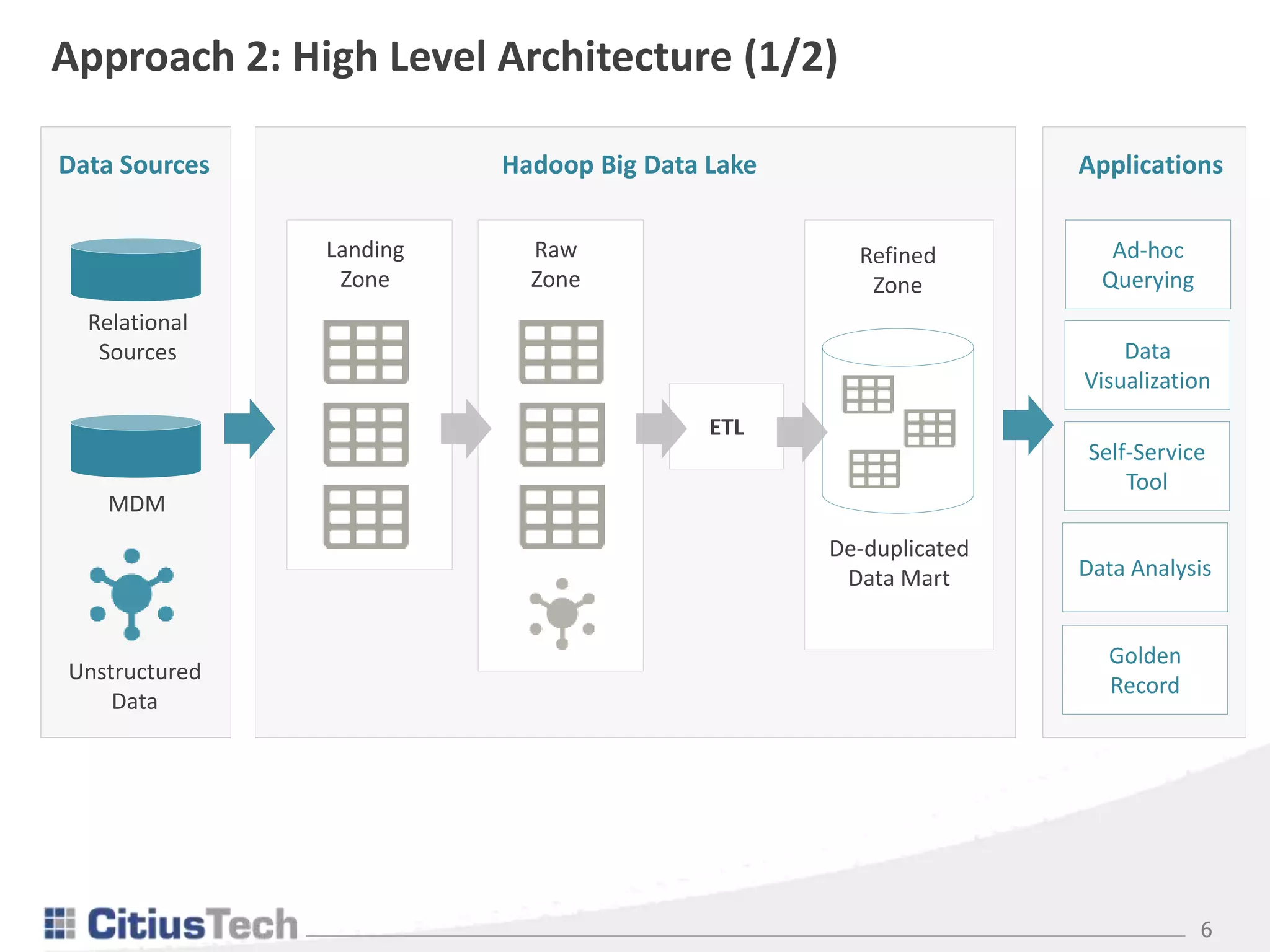

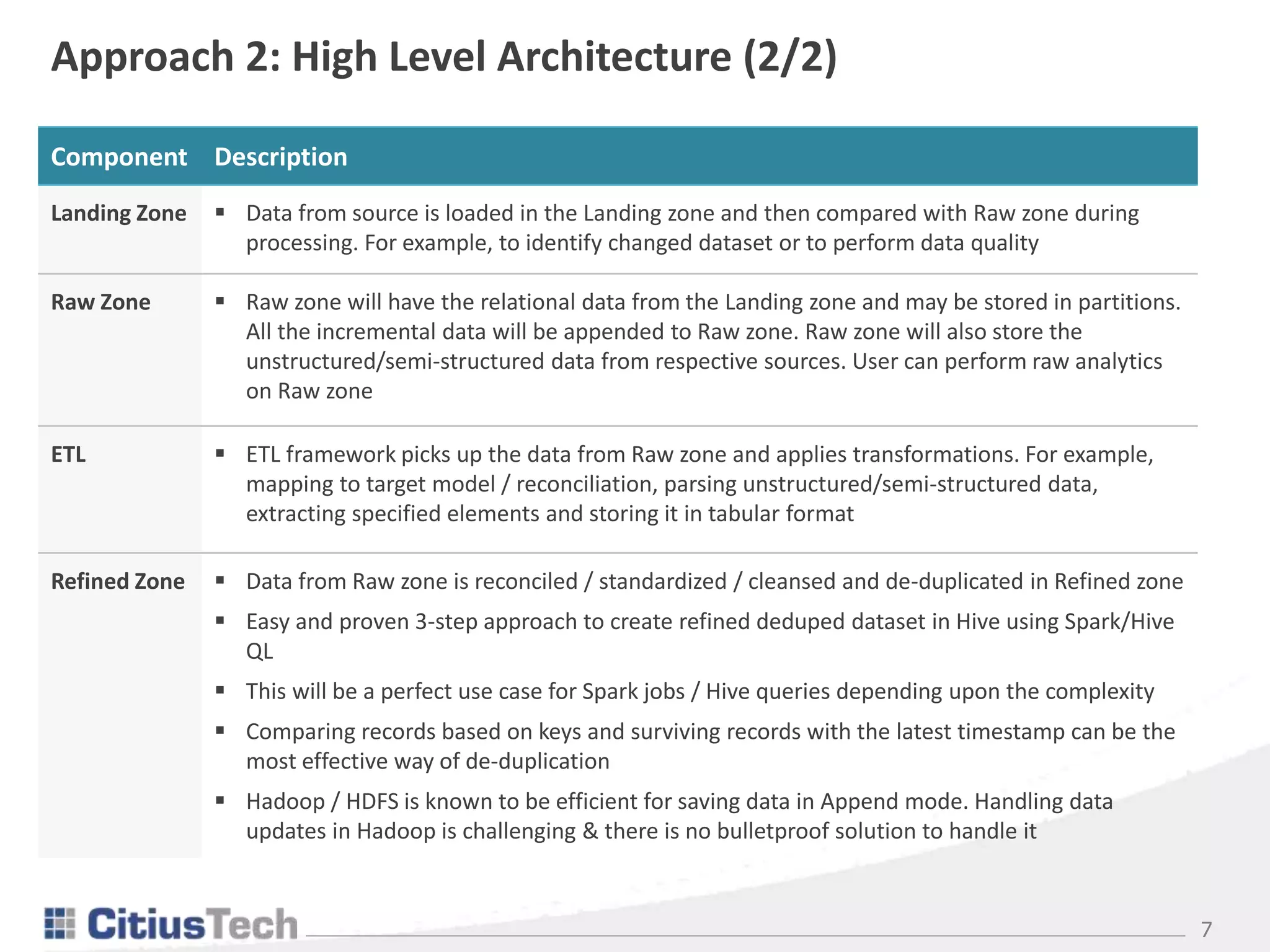

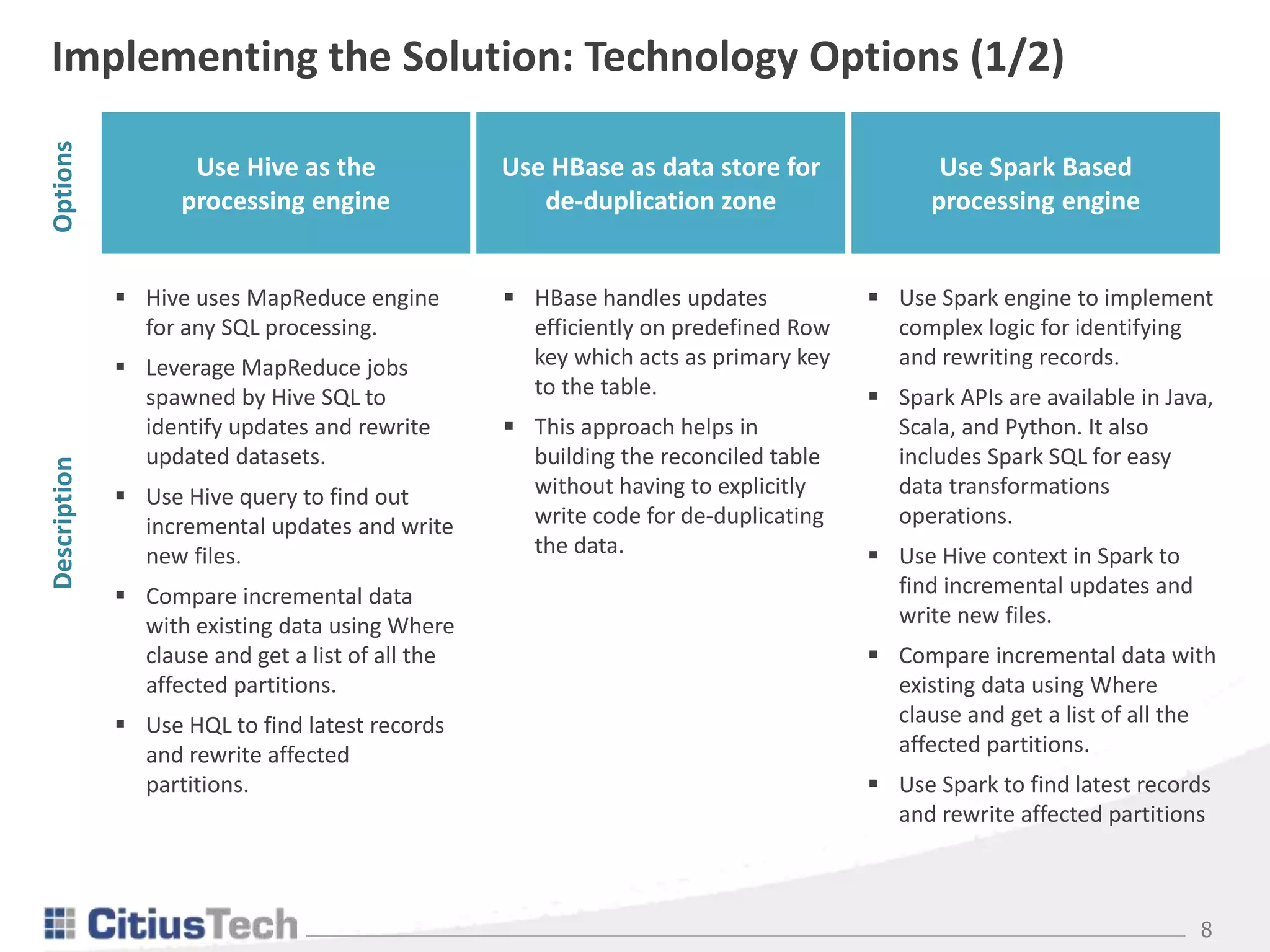

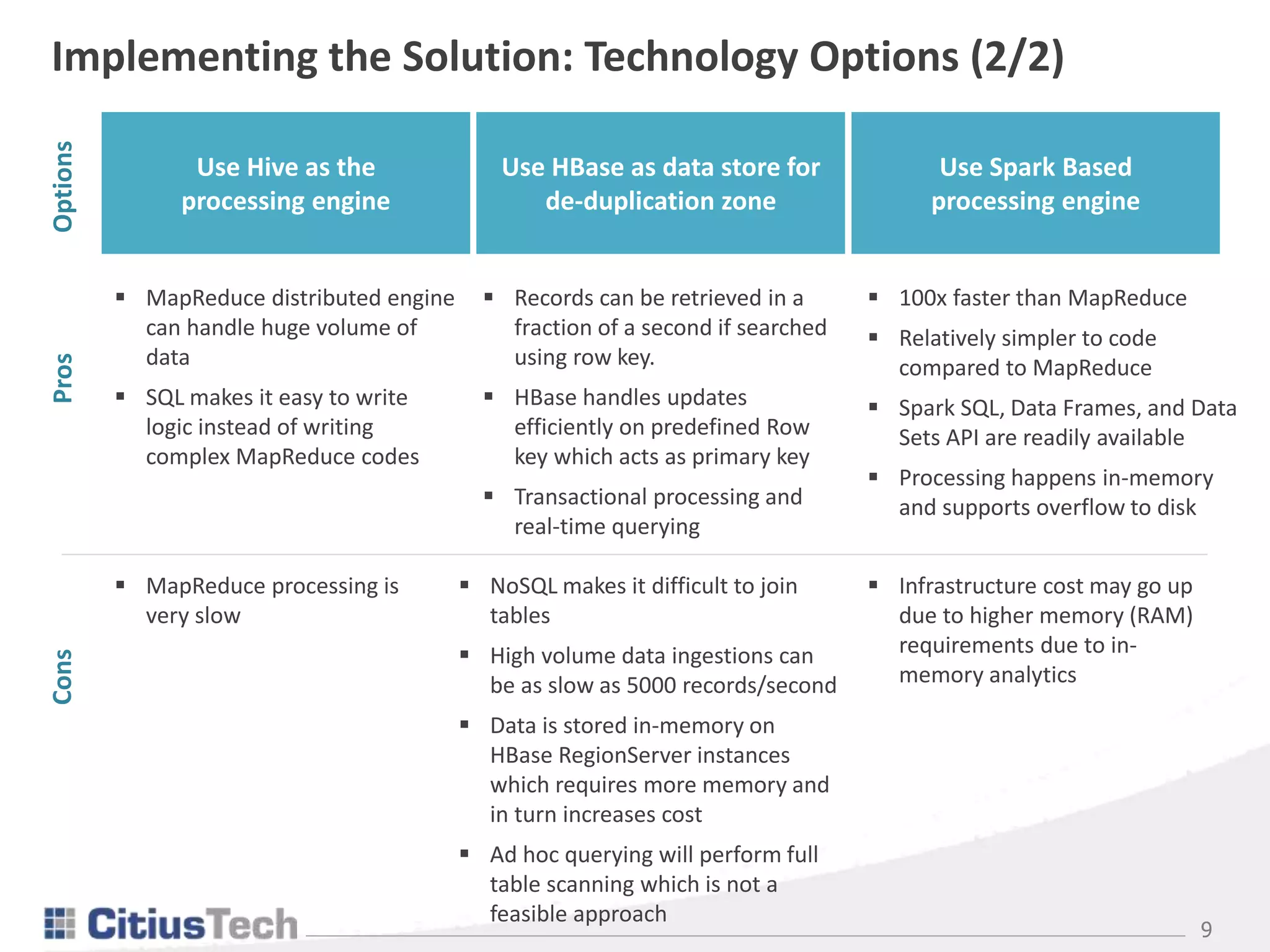

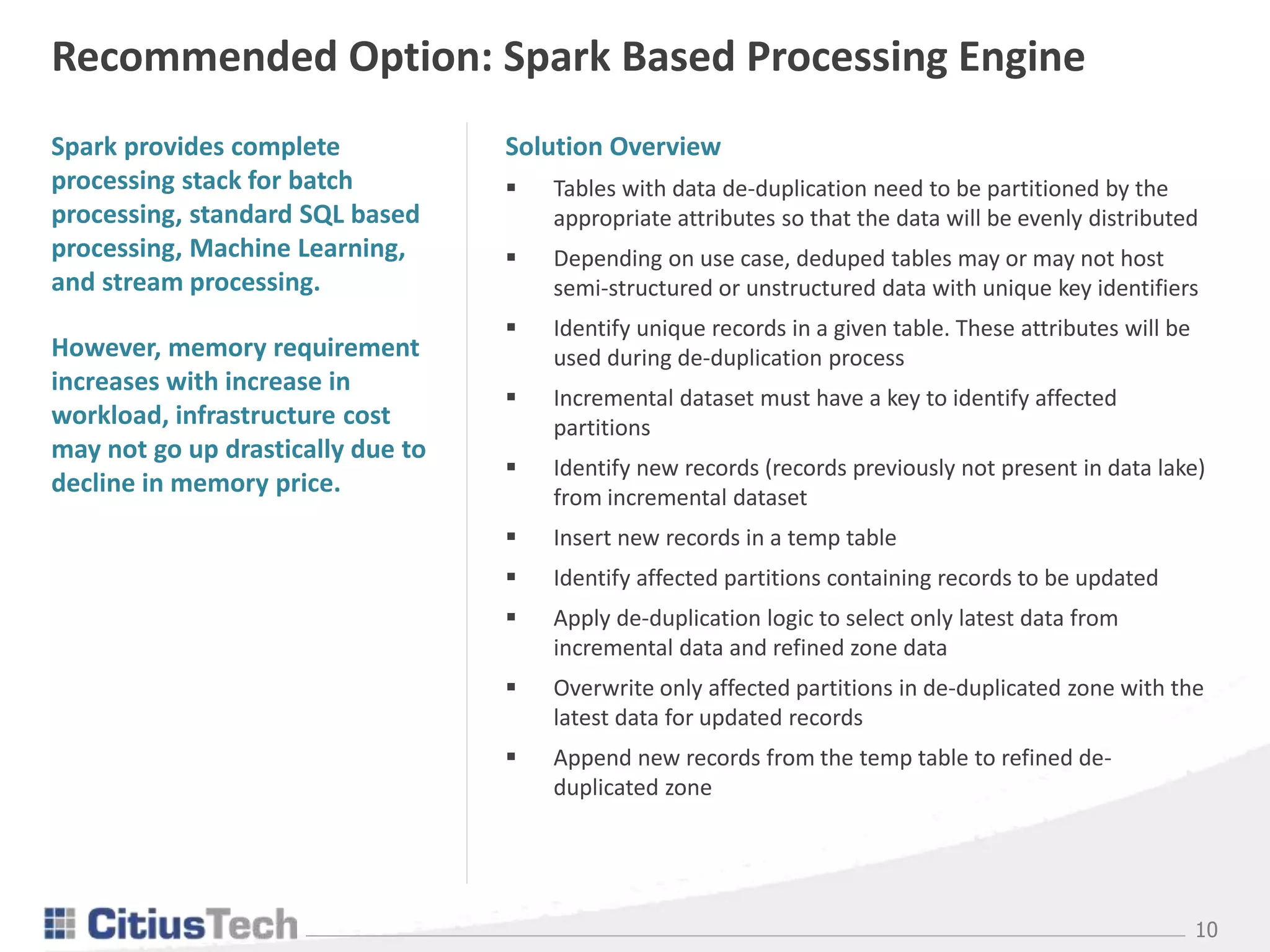

This document discusses de-duplicating data in a healthcare data lake using big data processing frameworks. It describes two options: keep duplicate records and resolve to the latest one at query time, or rewrite records to maintain a single golden copy. The preferred approach uses Spark to partition the data, identify new and updated records in each incremental load, de-duplicate by selecting the latest version of each record across the incremental and refined data, and overwrite only the affected partitions. The result is an unambiguous, de-duplicated dataset for analysis, produced in a scalable and cost-effective way.
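The preferred approach can be illustrated with a minimal sketch in plain Python rather than Spark, so the logic is visible without a cluster. Everything named here is an assumption made for illustration: the record fields `id`, `partition`, and `updated_at`, the function `dedupe_affected_partitions`, and the sample data are hypothetical and do not come from the document. The sketch also assumes a record's partition key never changes between versions; if it could, stale copies in other partitions would need separate handling.

```python
def dedupe_affected_partitions(refined, incremental):
    """Merge an incremental batch into refined data, one partition at a time.

    refined:     dict mapping partition key -> list of record dicts
    incremental: list of record dicts (new or updated records)
    Each record is assumed to carry 'id', 'partition', and 'updated_at'.
    Returns (new_refined, affected_partitions).
    """
    # Step 1: only partitions that receive incoming records need rewriting.
    affected = {rec["partition"] for rec in incremental}

    rewritten = {}
    for part in affected:
        # Step 2: union the existing partition contents with the incoming records.
        incoming = [rec for rec in incremental if rec["partition"] == part]
        candidates = list(refined.get(part, [])) + incoming

        # Step 3: keep the latest version of each record id.
        latest = {}
        for rec in candidates:
            current = latest.get(rec["id"])
            if current is None or rec["updated_at"] >= current["updated_at"]:
                latest[rec["id"]] = rec
        rewritten[part] = sorted(latest.values(), key=lambda r: r["id"])

    # Step 4: overwrite only the affected partitions; the rest are reused as-is.
    new_refined = dict(refined)
    new_refined.update(rewritten)
    return new_refined, affected


# Hypothetical sample data: two monthly partitions of patient records.
refined = {
    "2023-01": [
        {"id": "p1", "partition": "2023-01", "updated_at": 1, "name": "Ann"},
        {"id": "p2", "partition": "2023-01", "updated_at": 1, "name": "Bob"},
    ],
    "2023-02": [
        {"id": "p3", "partition": "2023-02", "updated_at": 2, "name": "Cy"},
    ],
}
incremental = [
    {"id": "p1", "partition": "2023-01", "updated_at": 5, "name": "Ann B."},  # update
    {"id": "p4", "partition": "2023-01", "updated_at": 4, "name": "Dee"},     # new
]

result, affected = dedupe_affected_partitions(refined, incremental)
```

In Spark, the same steps would typically map to a union of the incremental and refined DataFrames, a window ranked by update time within each record key, and a partition-scoped overwrite. The document does not specify which Spark mechanisms it uses, so that mapping is a plausible reading rather than the author's stated method.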