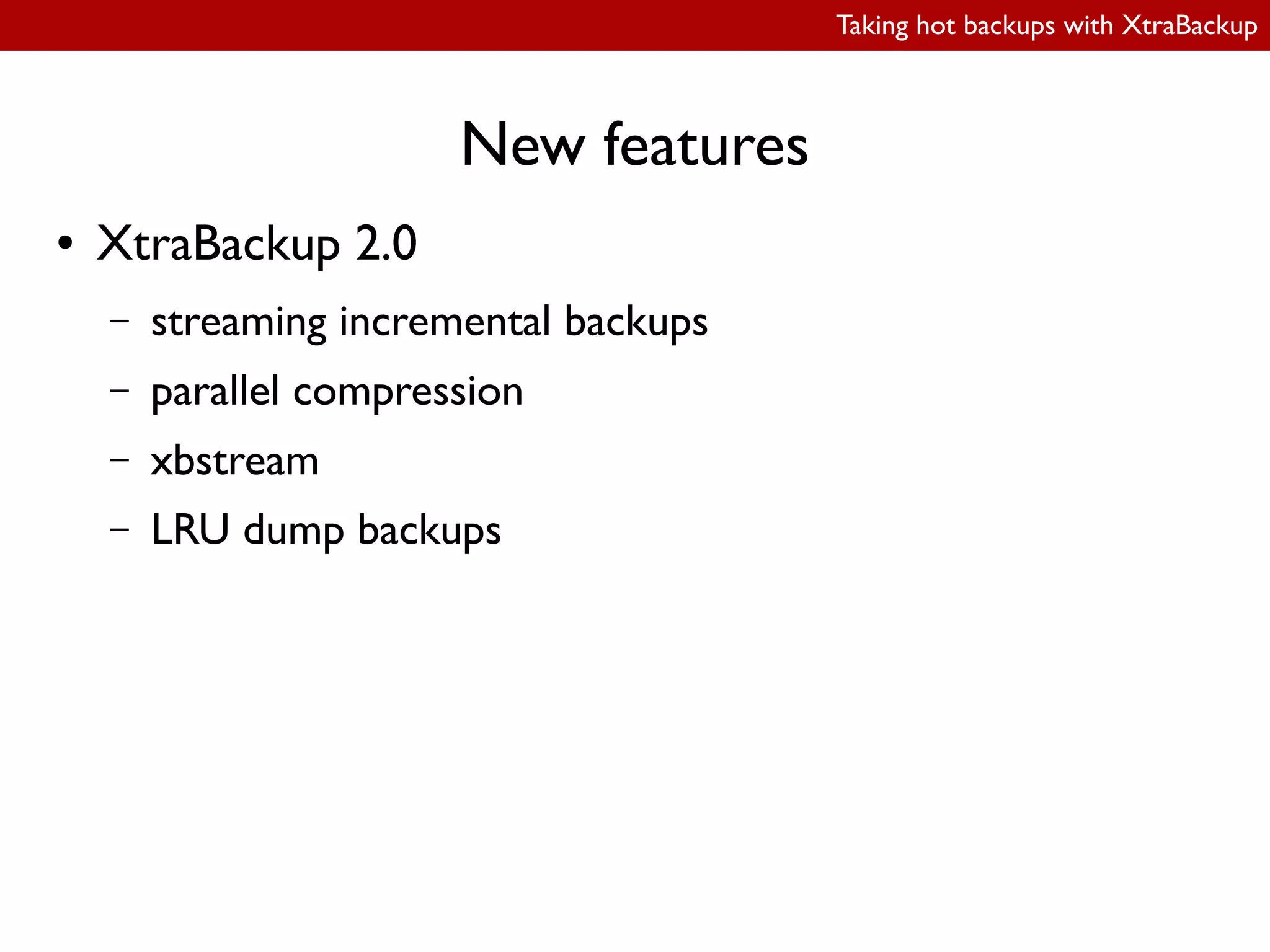

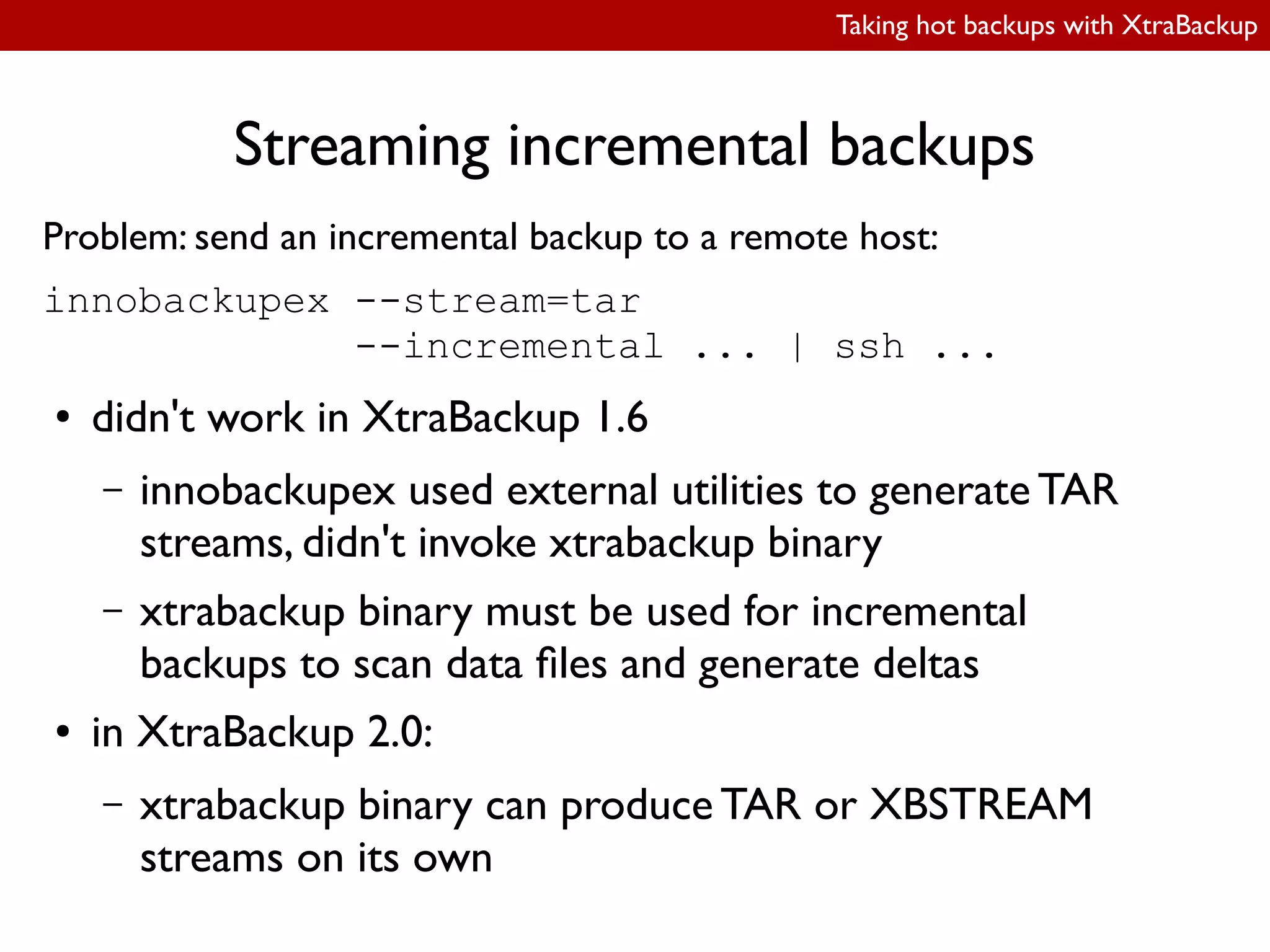

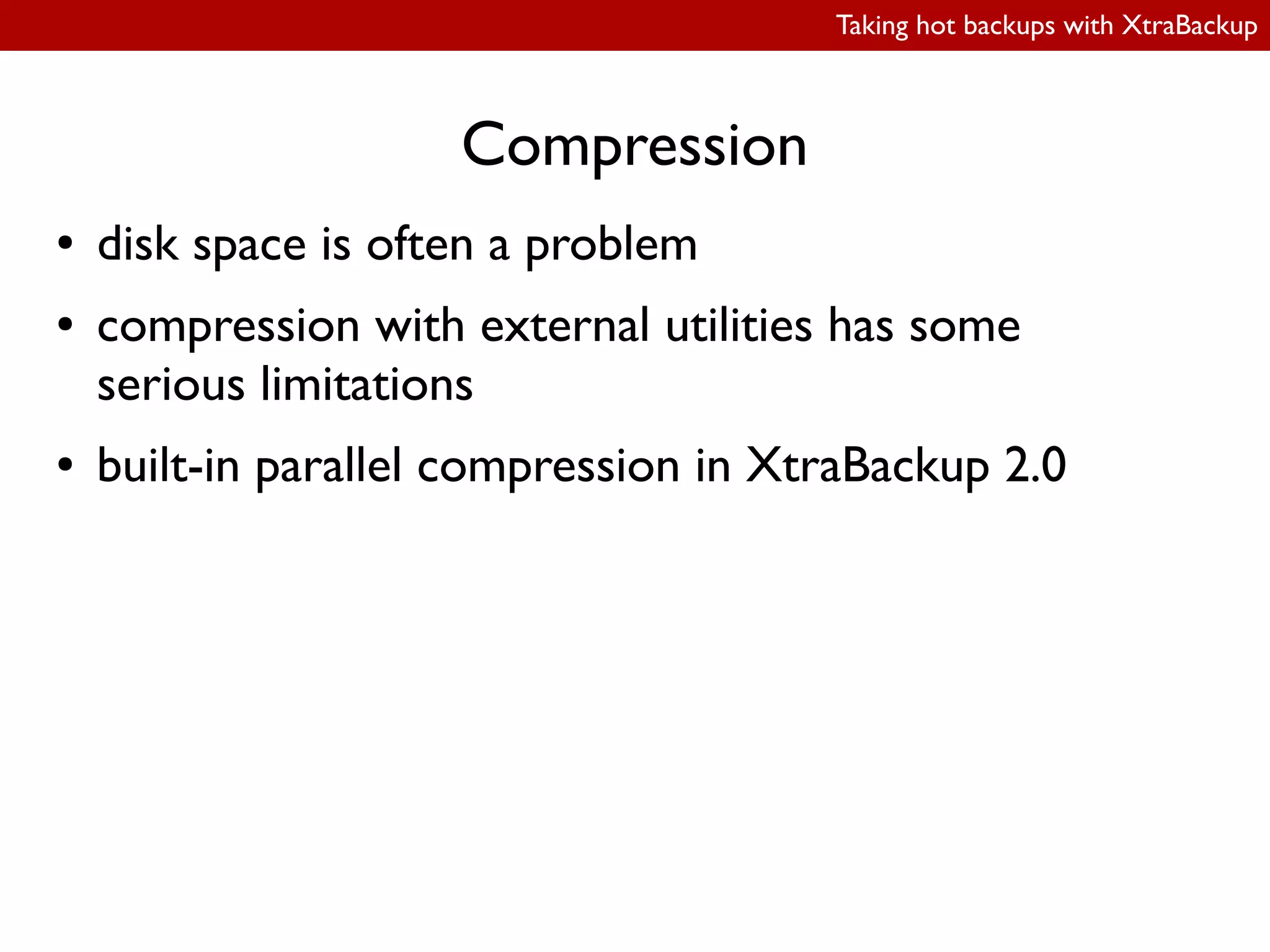

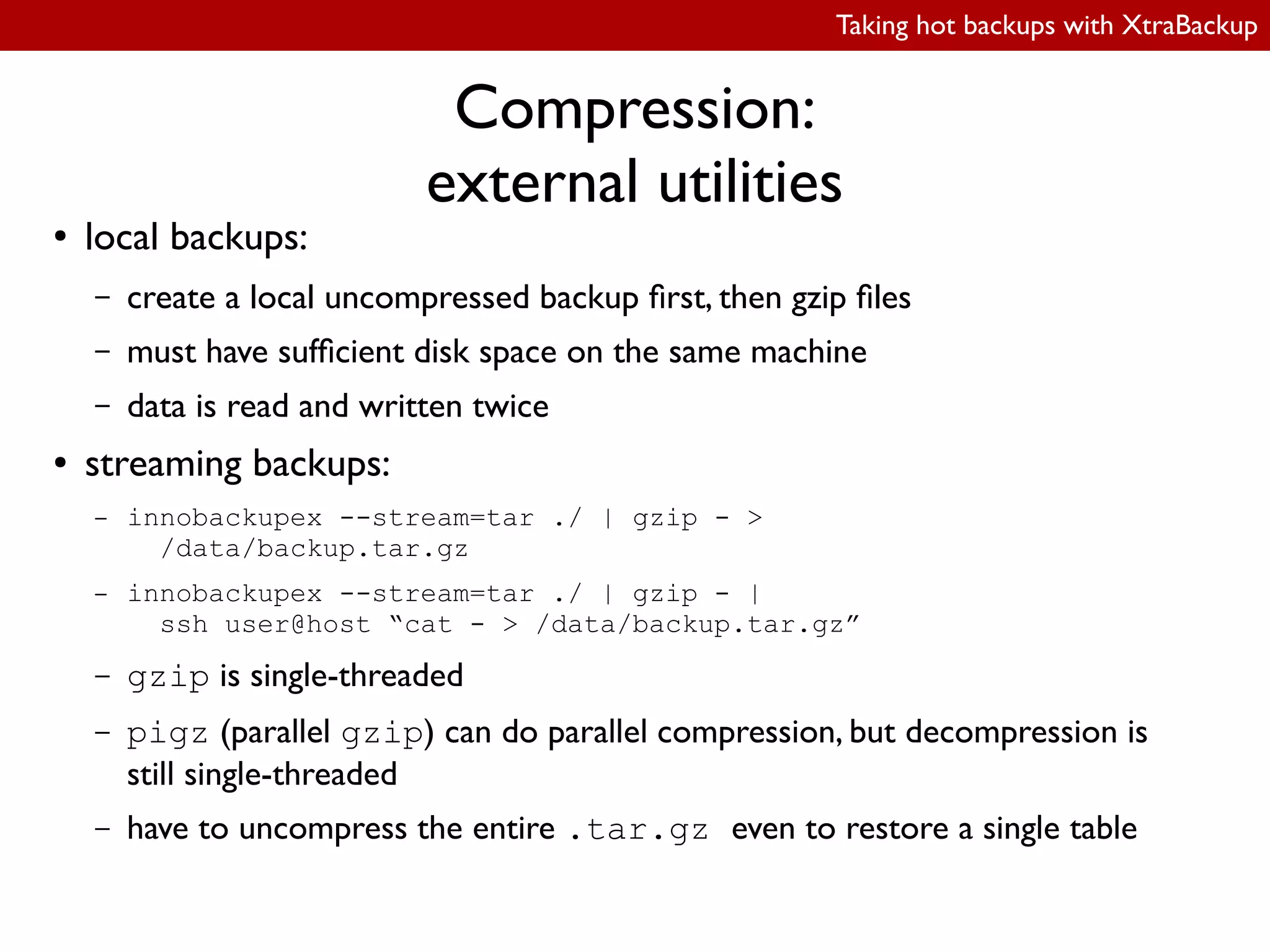

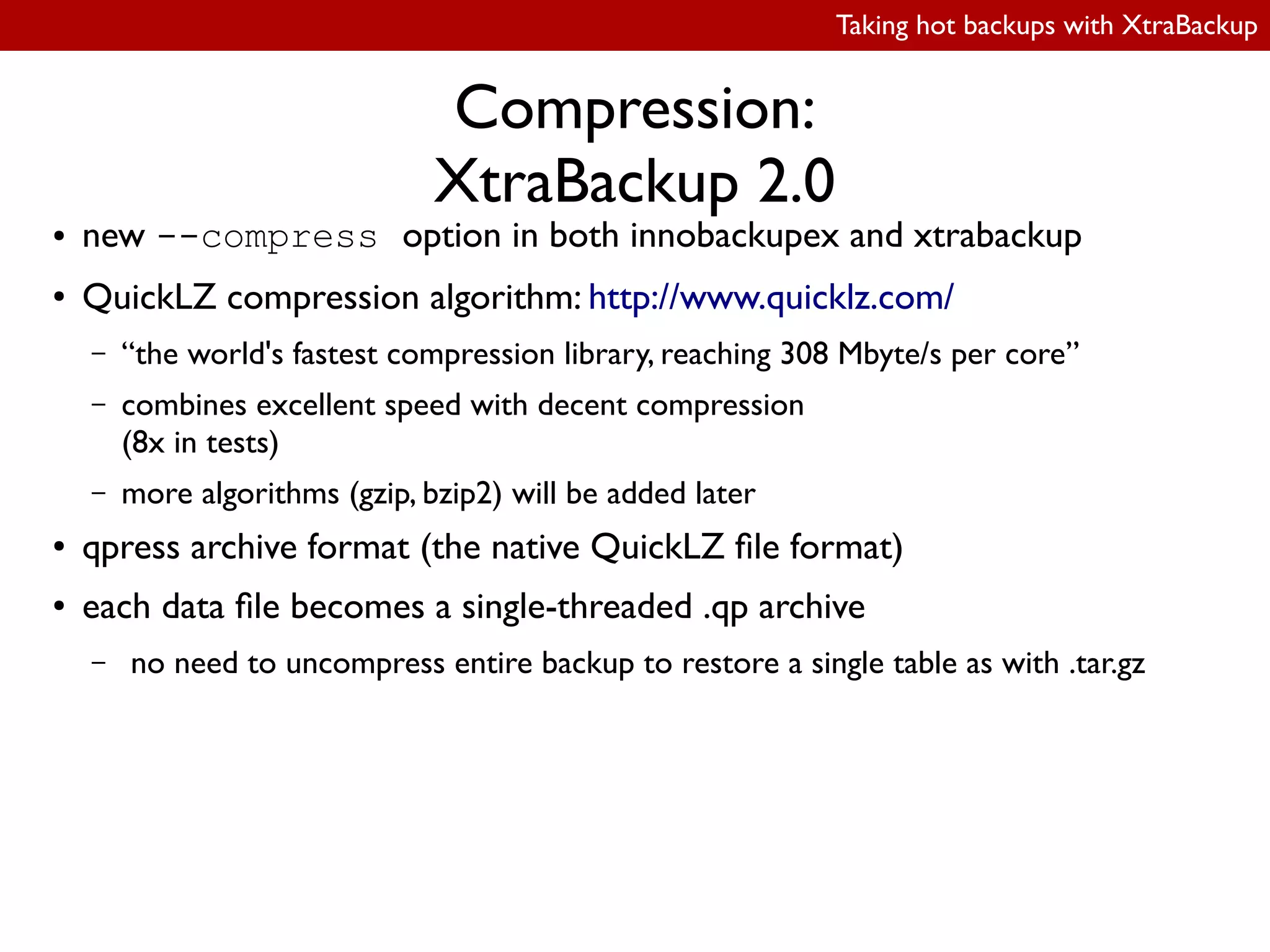

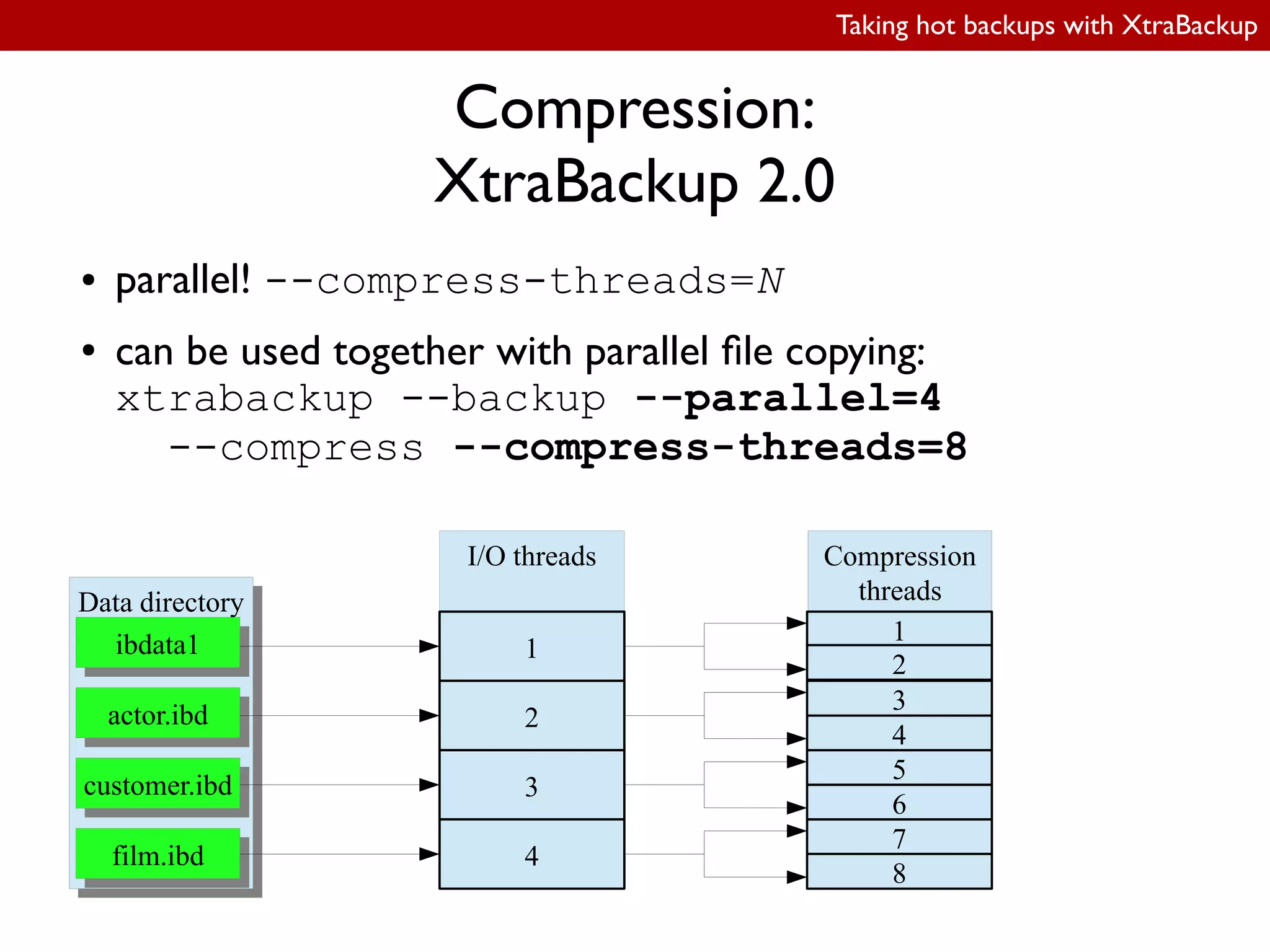

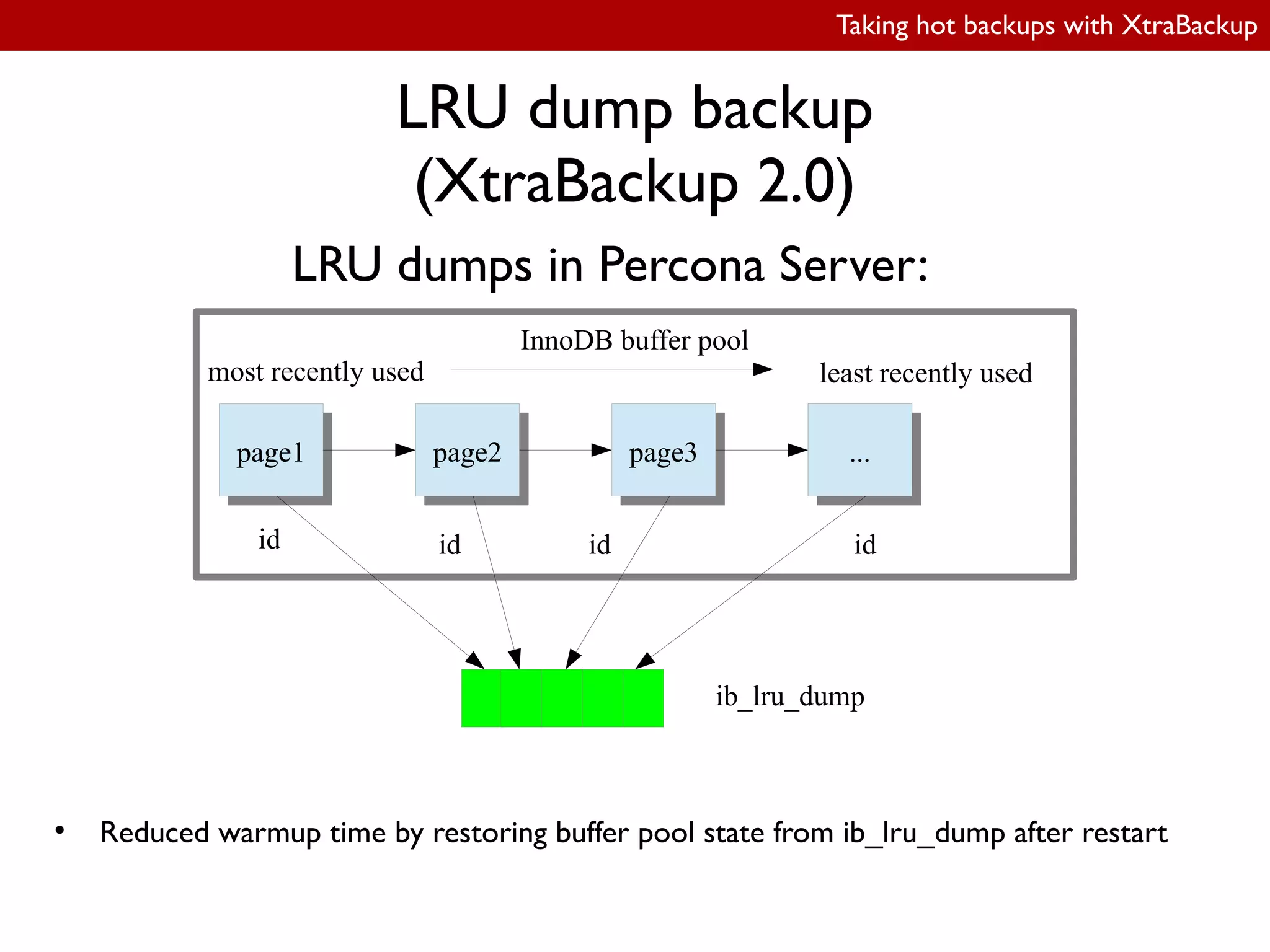

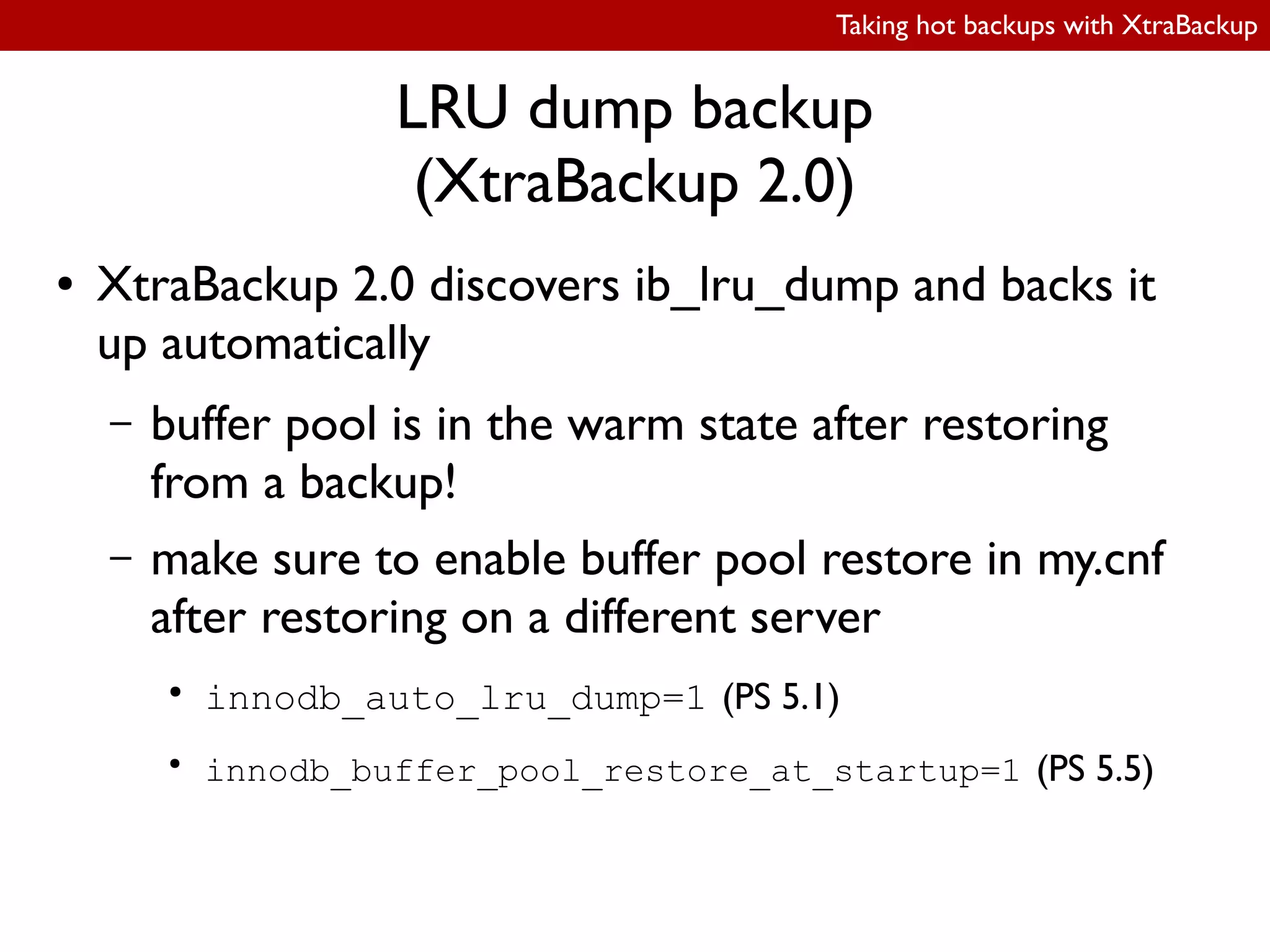

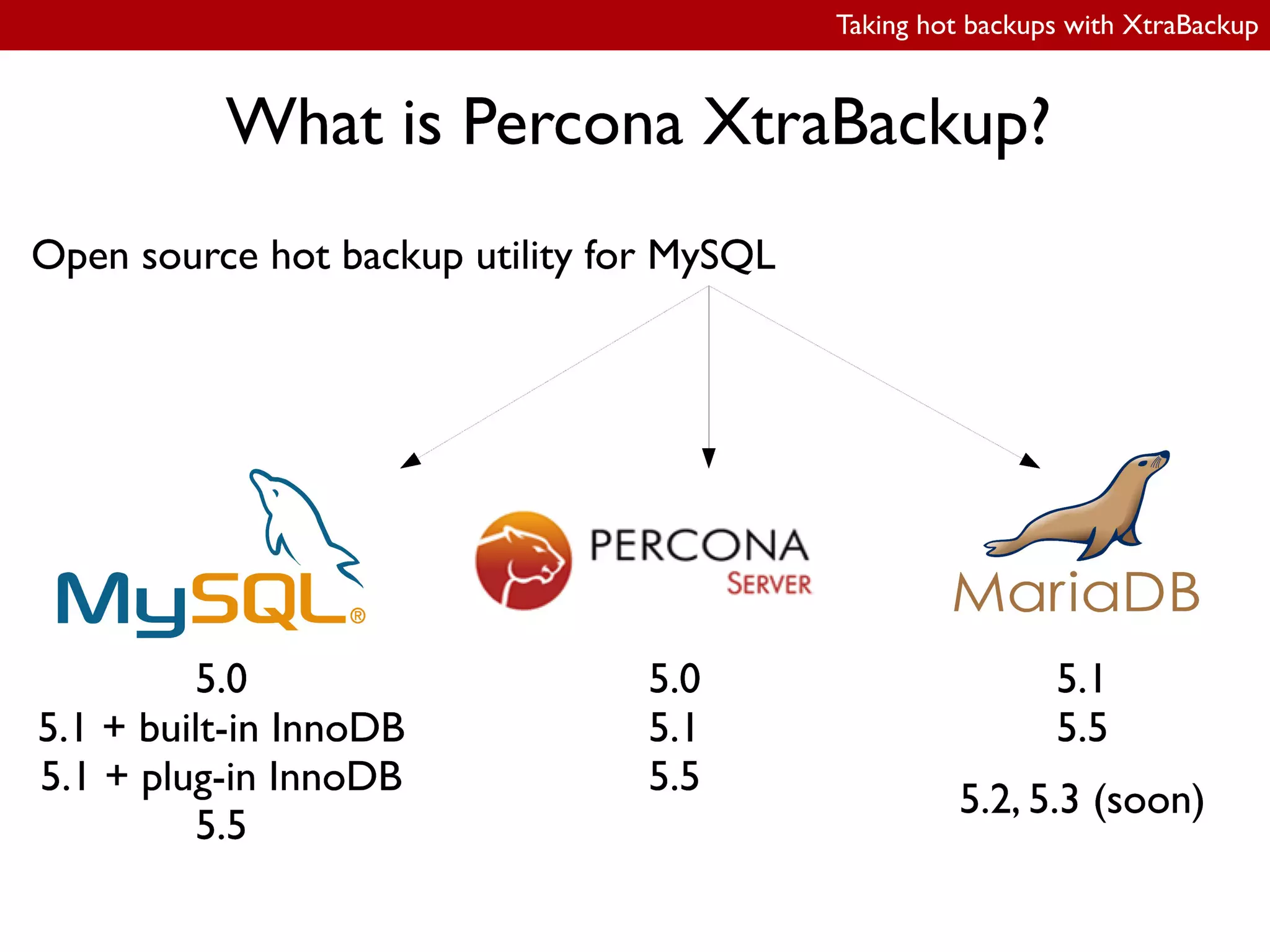

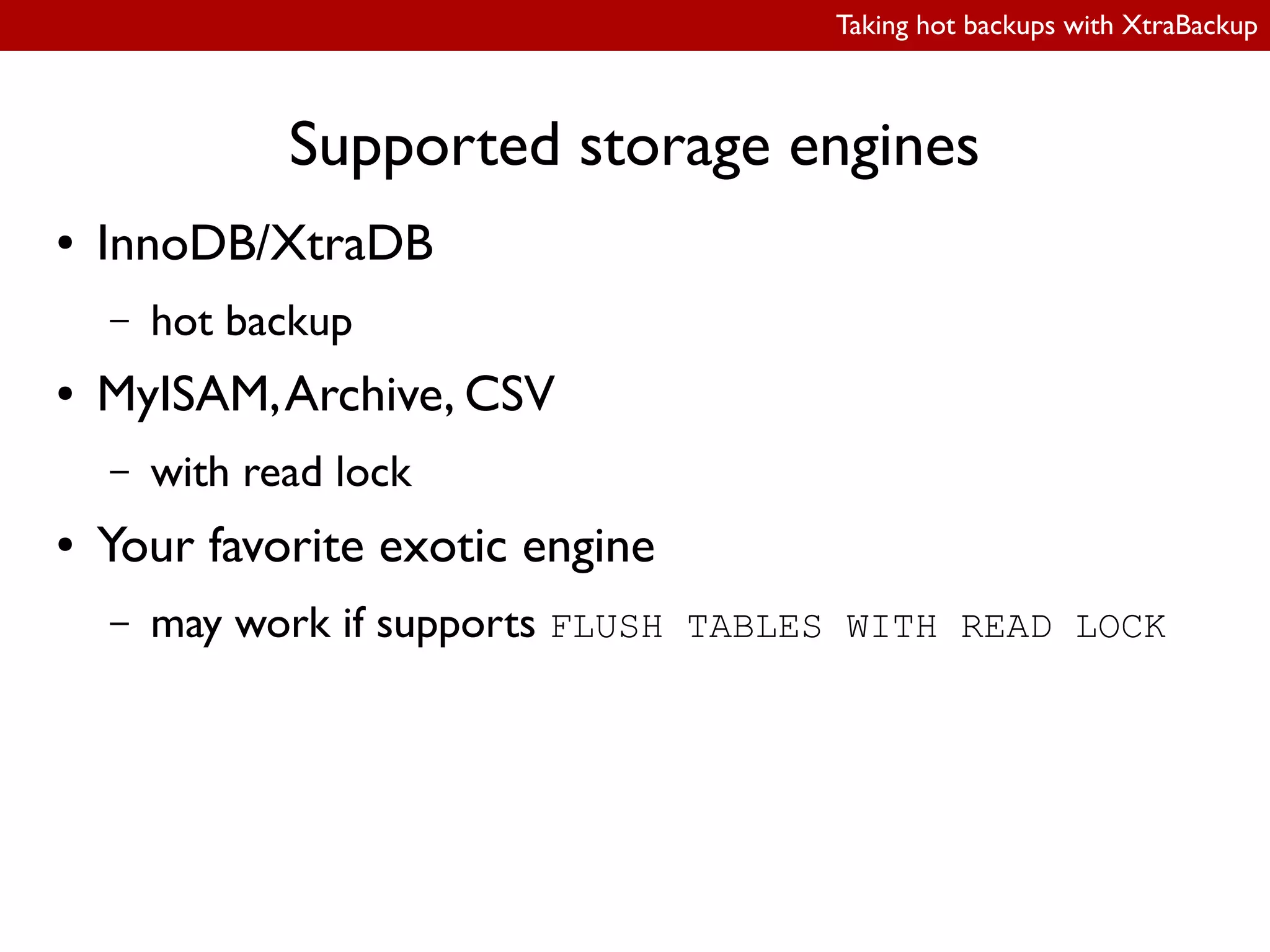

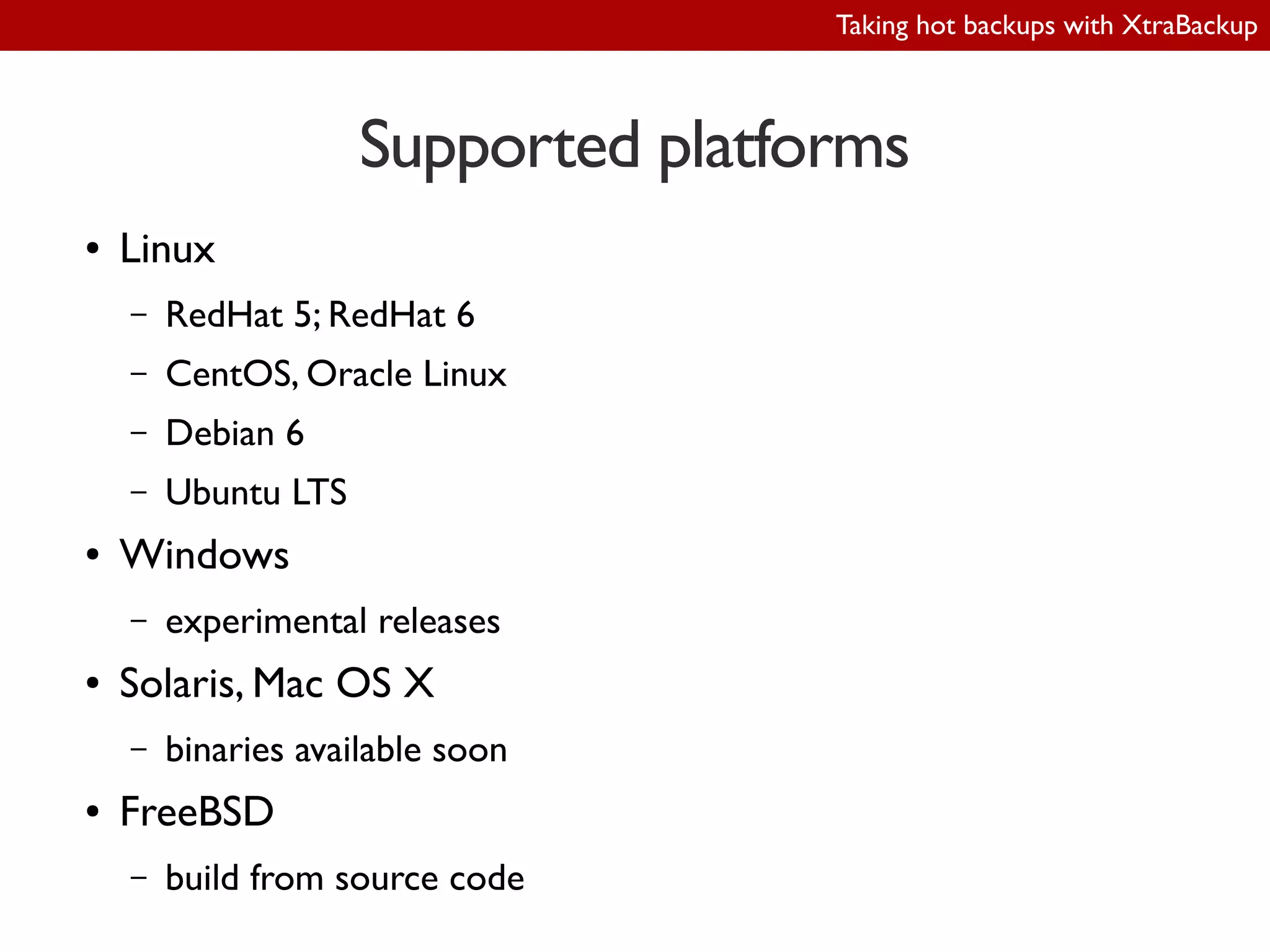

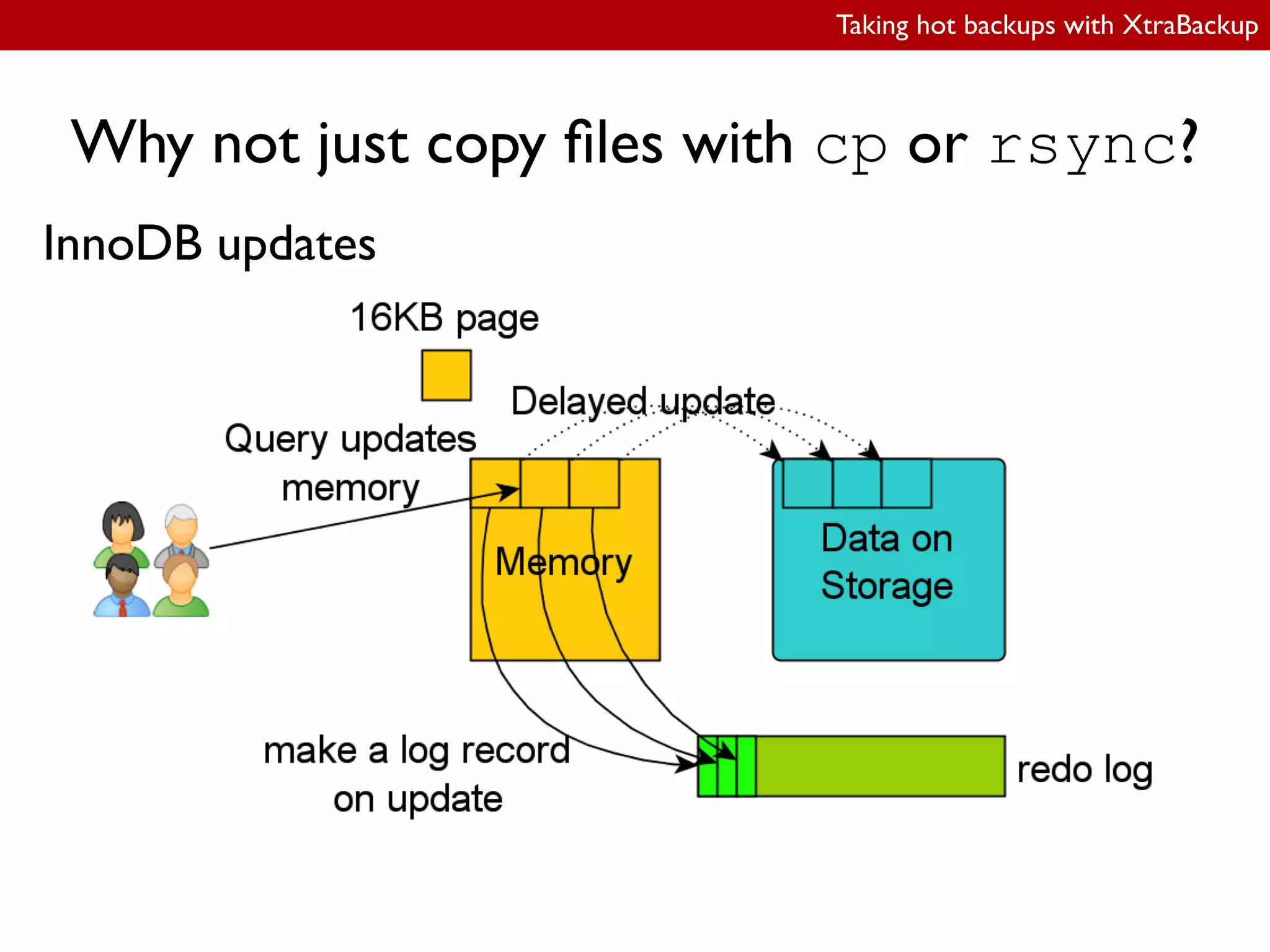

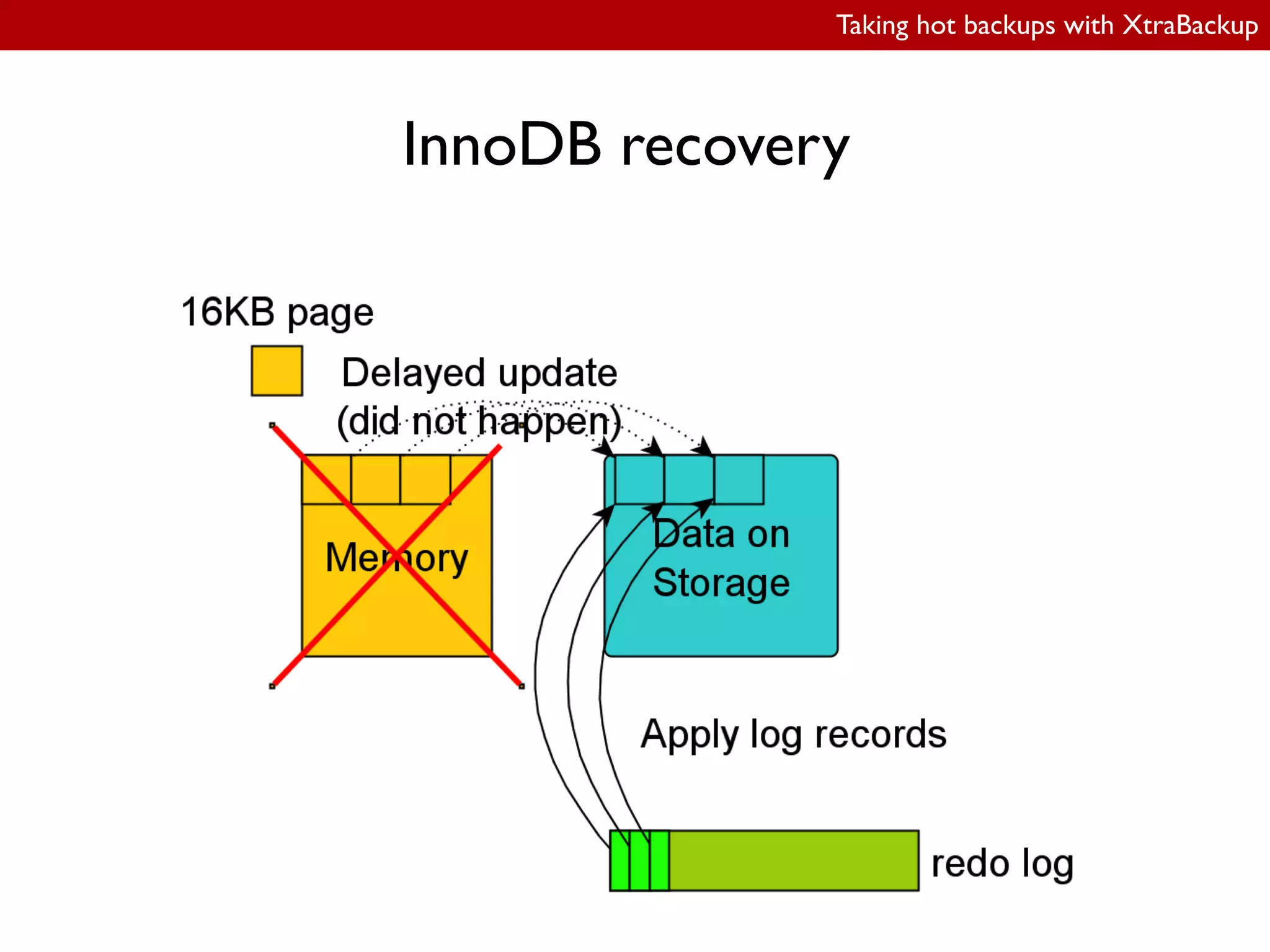

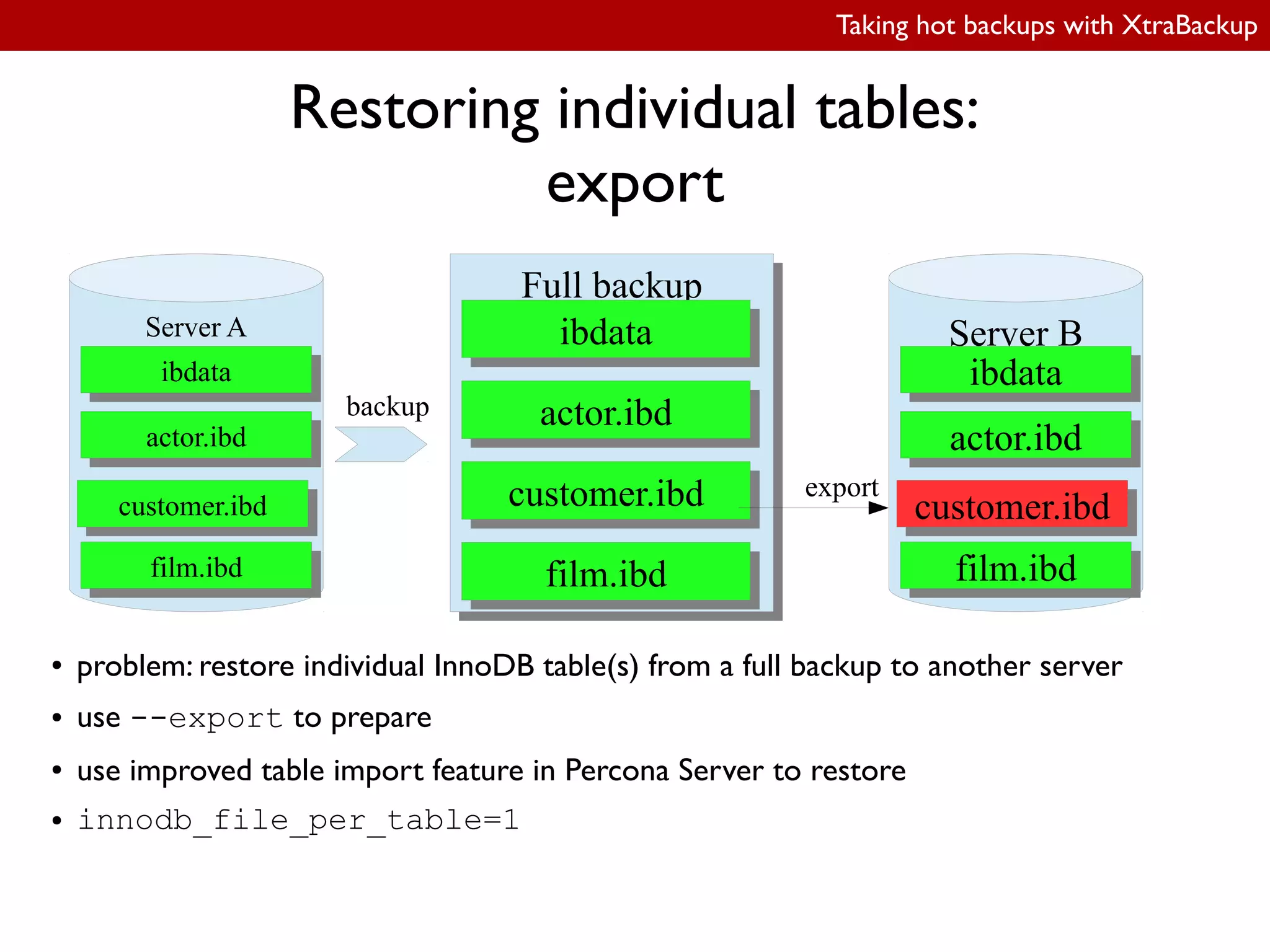

The document is a guide to taking hot backups of MySQL databases with XtraBackup, a tool developed by Percona. It covers supported storage engines and platforms, backup strategies, incremental backups, and restoring individual tables, with usage examples and key configuration options. It also describes features new in XtraBackup 2.0: streaming incremental backups, parallel compression, and LRU dump backups for improved recovery times.

![Taking hot backups with XtraBackup

Basic usage

(taking a backup)

innobackupex [options…] /data/backup

Options:

● --defaults-file=/path/to/my.cnf

– datadir (/var/lib/mysql by default)

● --user

● --password

● --host

● --socket](https://image.slidesharecdn.com/alexeykopytovxtrabackup-190301083524/75/OSDC-2012-Taking-hot-backups-with-XtraBackup-by-Alexey-Kopytov-20-2048.jpg)
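The basic invocation on the slide above can be sketched as a small shell helper. This is a minimal sketch, not the presenter's script: the paths, user name, host, and socket location are placeholder assumptions, and `build_backup_cmd` is a hypothetical helper that only prints the command so it can be reviewed before anything is run. `--password` is left out on purpose so that no secret ends up in the printed output.

```shell
#!/bin/sh
# Sketch: assemble a basic innobackupex command line from the slide's options.
# Every value below is a placeholder (assumption), not taken from the talk.
DEFAULTS_FILE="/etc/mysql/my.cnf"             # my.cnf that defines datadir
BACKUP_USER="backup"                          # hypothetical MySQL account
BACKUP_HOST="localhost"
BACKUP_SOCKET="/var/run/mysqld/mysqld.sock"   # hypothetical socket path
TARGET_DIR="/data/backup"                     # backup destination from the slide

# Hypothetical helper: prints the command instead of executing it.
build_backup_cmd() {
    printf 'innobackupex --defaults-file=%s --user=%s --host=%s --socket=%s %s\n' \
        "$DEFAULTS_FILE" "$BACKUP_USER" "$BACKUP_HOST" "$BACKUP_SOCKET" "$TARGET_DIR"
}

build_backup_cmd
```

Printing rather than executing keeps the sketch safe to try on a machine without XtraBackup installed; once the values are correct for a given server, the printed line can be run as is, with `--password` added if the account needs one.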

![Taking hot backups with XtraBackup

Partial backups:

selecting what to backup

innobackupex:

● streaming backups:

● --databases="database1[.table1] ...",

e.g.: --databases="employees sales.orders"

● local backups:

● --tables-file=filename, file contains database.table, one per line

● --include=regexp,

e.g.: --include='^database(1|2).reports.*'

xtrabackup:

● --tables-file=filename (same syntax as with innobackupex)

● --tables=regexp (equivalent to --include in innobackupex)](https://image.slidesharecdn.com/alexeykopytovxtrabackup-190301083524/75/OSDC-2012-Taking-hot-backups-with-XtraBackup-by-Alexey-Kopytov-39-2048.jpg)
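The selectors on the slide above can be tried out with a short shell sketch. It is an illustration under stated assumptions: `partial_cmd` and `preview_include` are hypothetical helpers, the table names are invented apart from `sales.orders`, and `grep -E` is used only as an approximation of how the `--include` regular expression is matched against `database.table` names. The commands are printed, not executed.

```shell
#!/bin/sh
# Sketch: build partial-backup command lines and preview a --include regexp.
TARGET_DIR="/data/backup"                 # placeholder destination
TABLES_FILE="$(mktemp)"                   # temporary file for --tables-file
trap 'rm -f "$TABLES_FILE"' EXIT          # remove the temporary file on exit

# --tables-file expects database.table entries, one per line.
# sales.orders is from the slide; sales.customers is a hypothetical name.
printf '%s\n' 'sales.orders' 'sales.customers' > "$TABLES_FILE"

# Hypothetical helper: print the innobackupex command for one selector type.
partial_cmd() {
    case "$1" in
        databases)   printf 'innobackupex --databases="%s" %s\n' "$2" "$TARGET_DIR" ;;
        tables-file) printf 'innobackupex --tables-file=%s %s\n' "$2" "$TARGET_DIR" ;;
        include)     printf "innobackupex --include='%s' %s\n" "$2" "$TARGET_DIR" ;;
        *)           echo "unknown selector: $1" >&2; return 1 ;;
    esac
}

# Hypothetical helper: show which sample database.table names a regexp selects.
# grep -E stands in for the tool's own regexp matching (an assumption).
preview_include() {
    printf '%s\n' 'database1.reports_2012' 'database2.reports' \
                  'database3.reports' 'database1.orders' | grep -E "$1"
}

partial_cmd databases 'employees sales.orders'
partial_cmd tables-file "$TABLES_FILE"
partial_cmd include '^database(1|2).reports.*'
preview_include '^database(1|2).reports.*'
```

Previewing the regular expression against a list of known table names before a backup is a cheap way to catch a pattern that selects too much or too little. Note that the unescaped `.` in the slide's pattern matches any character, so `\.` is the stricter choice where the literal separator is meant.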
