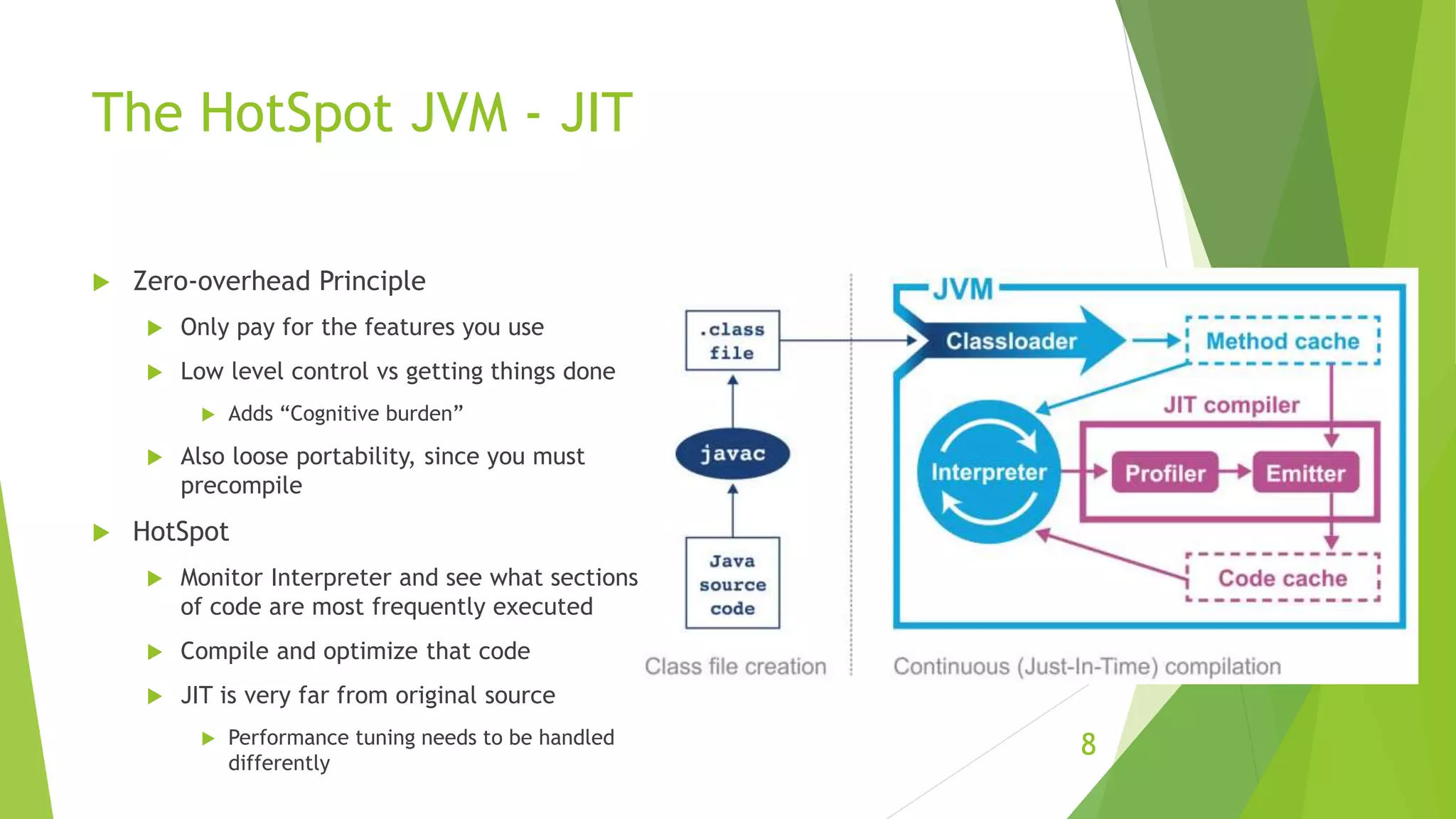

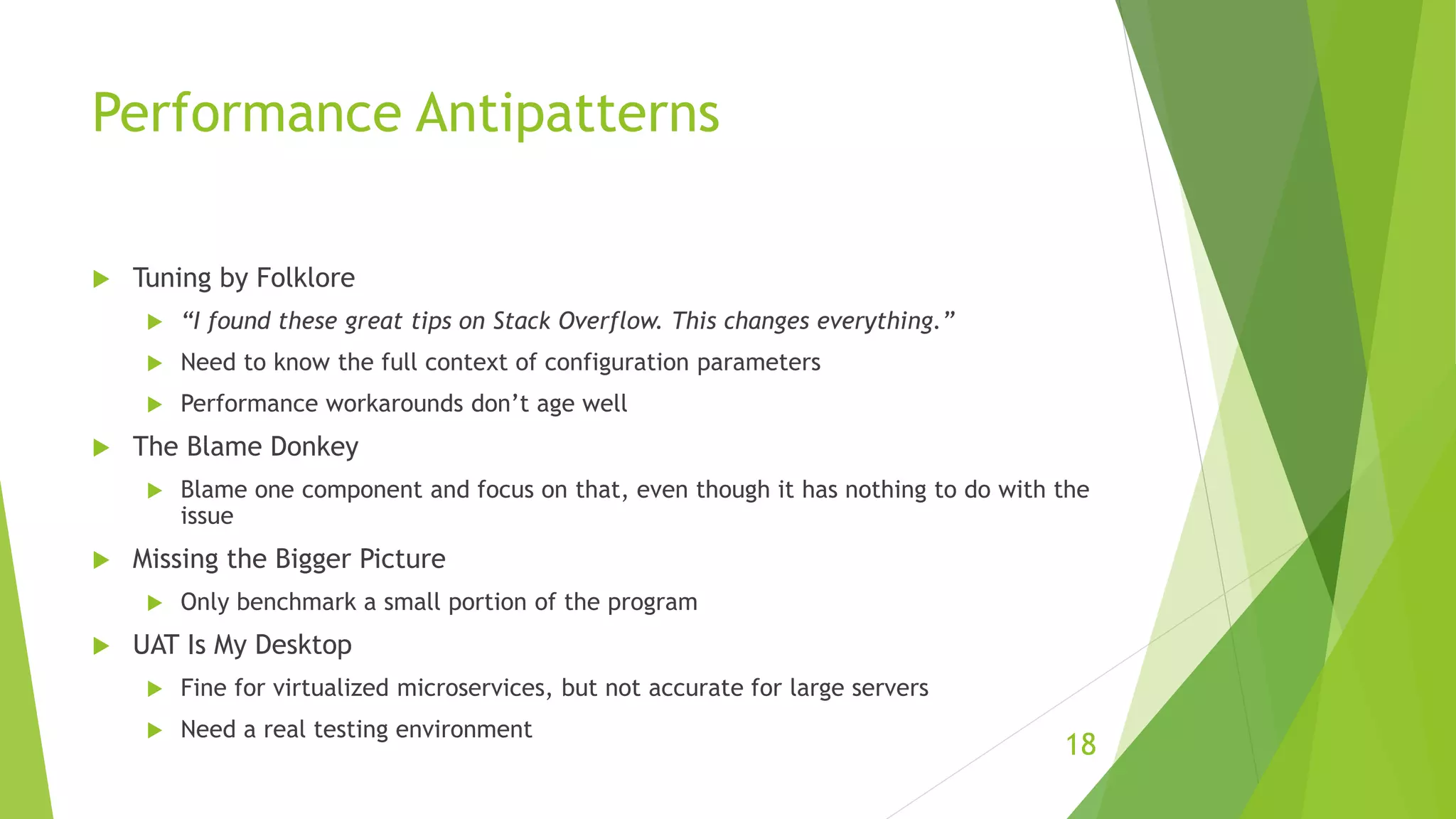

The document discusses how to optimize Java performance. It covers the history of Java performance tuning, the experimental nature of performance testing and optimization, the different aspects of performance worth measuring, how the Java HotSpot just-in-time (JIT) compiler optimizes code at runtime, best practices for performance testing, and common performance antipatterns to avoid.