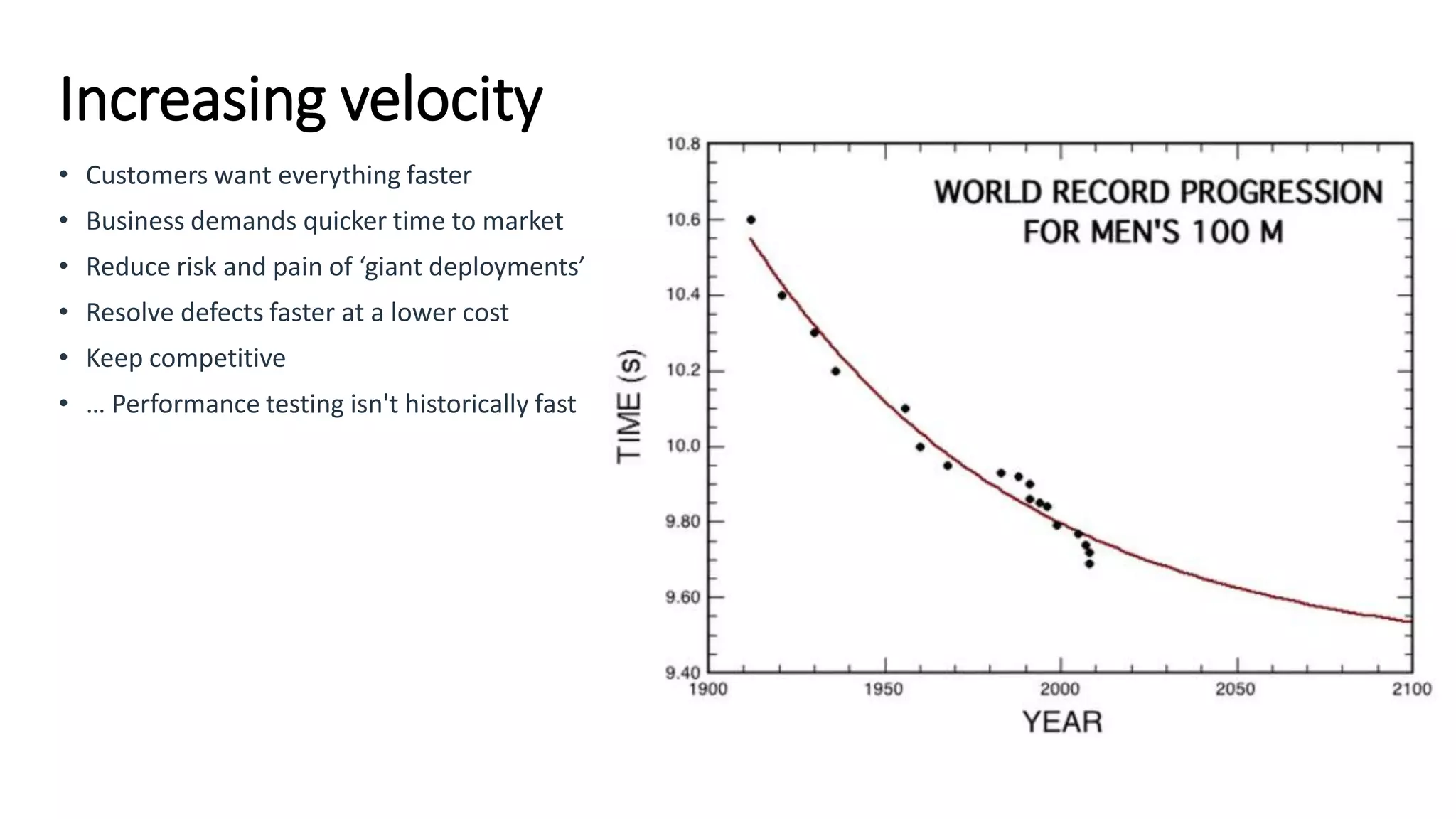

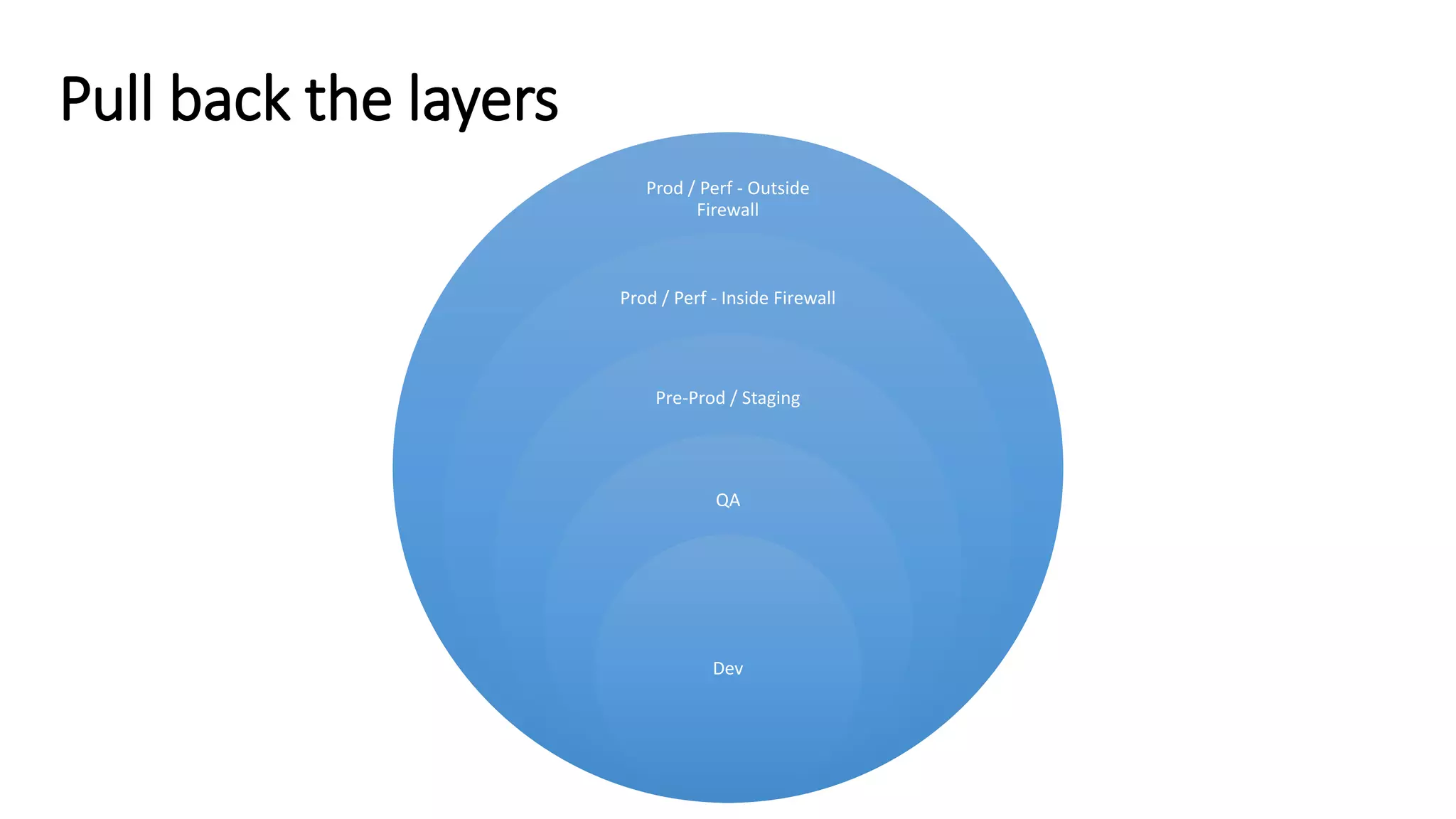

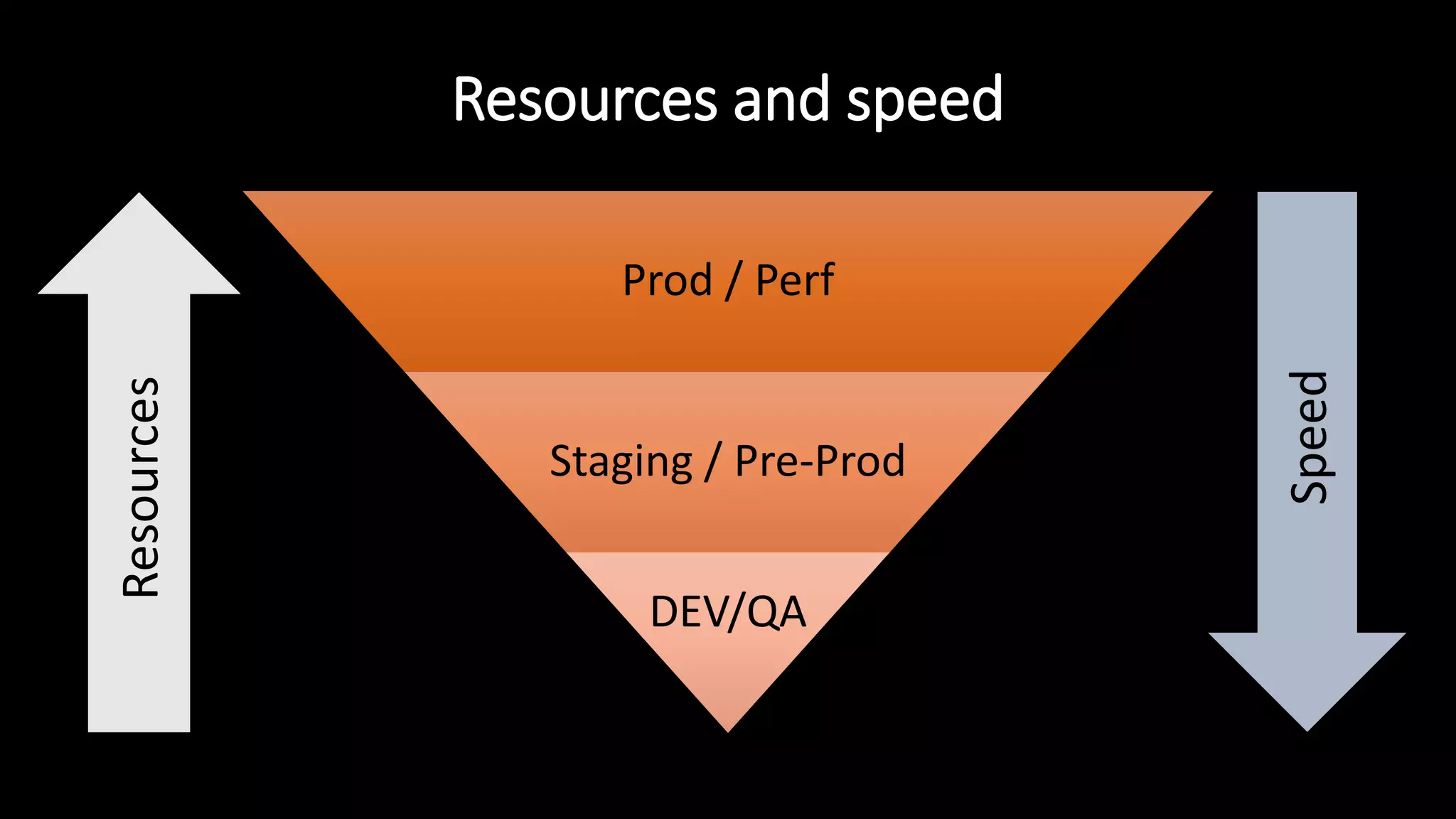

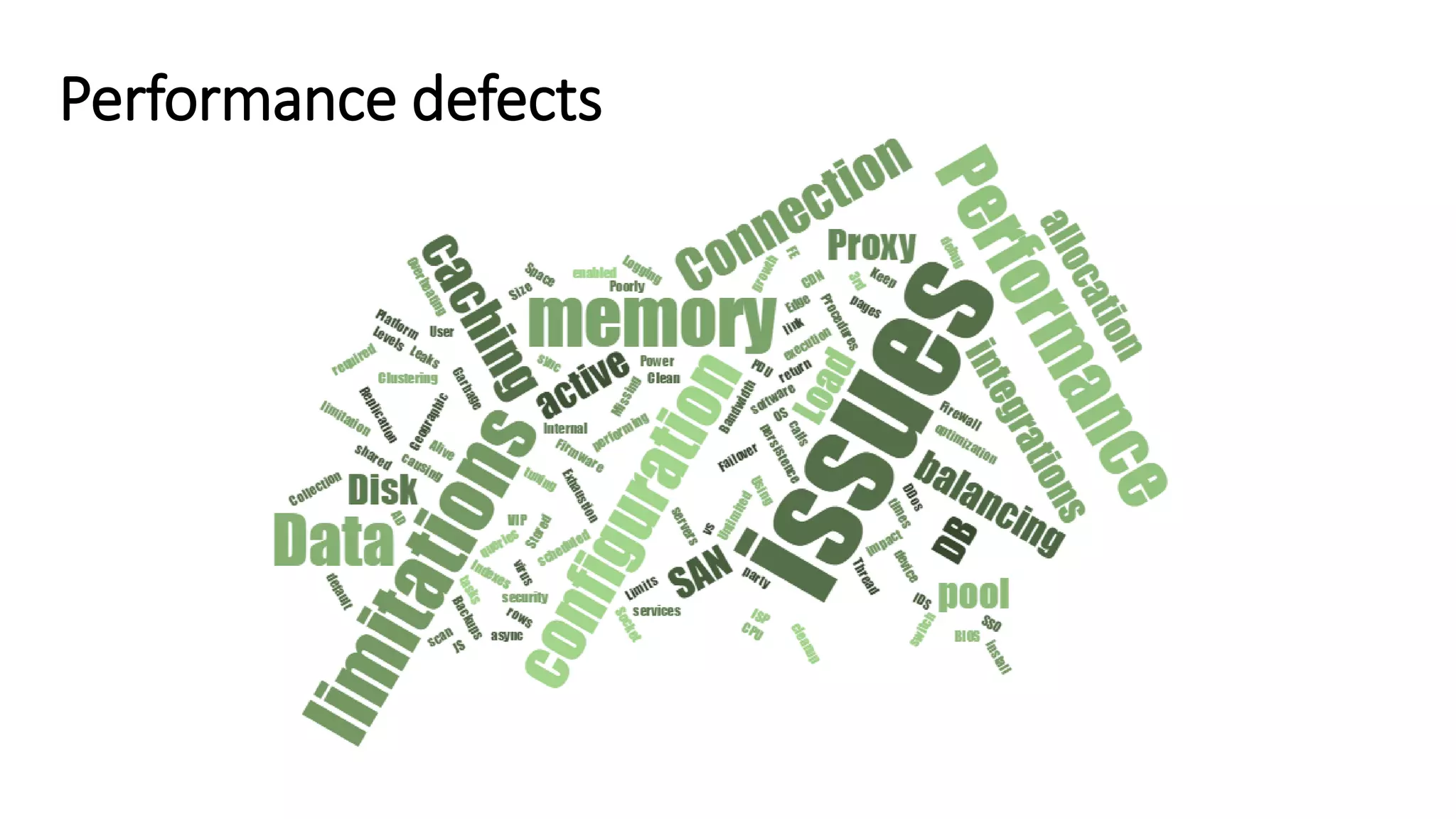

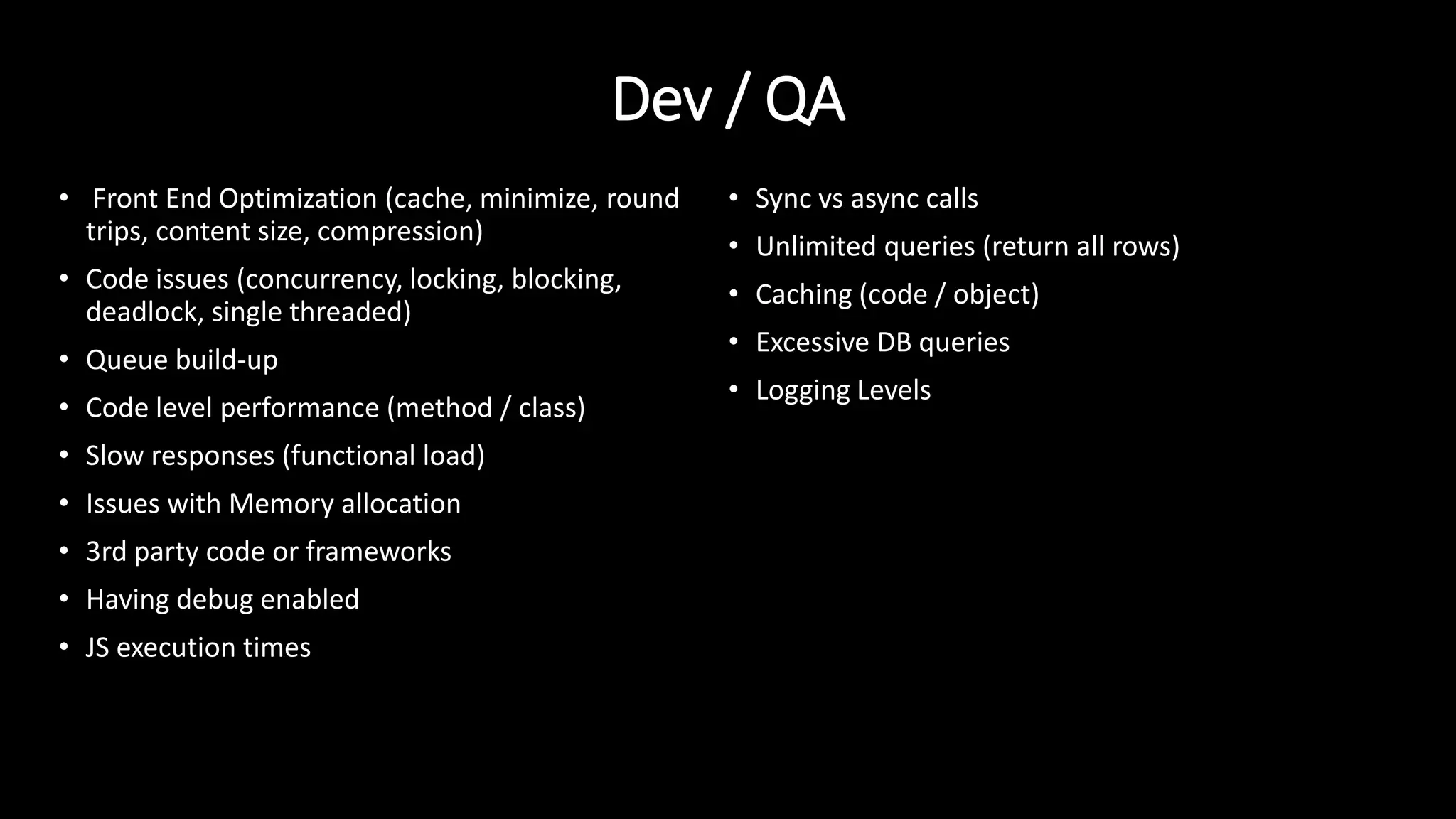

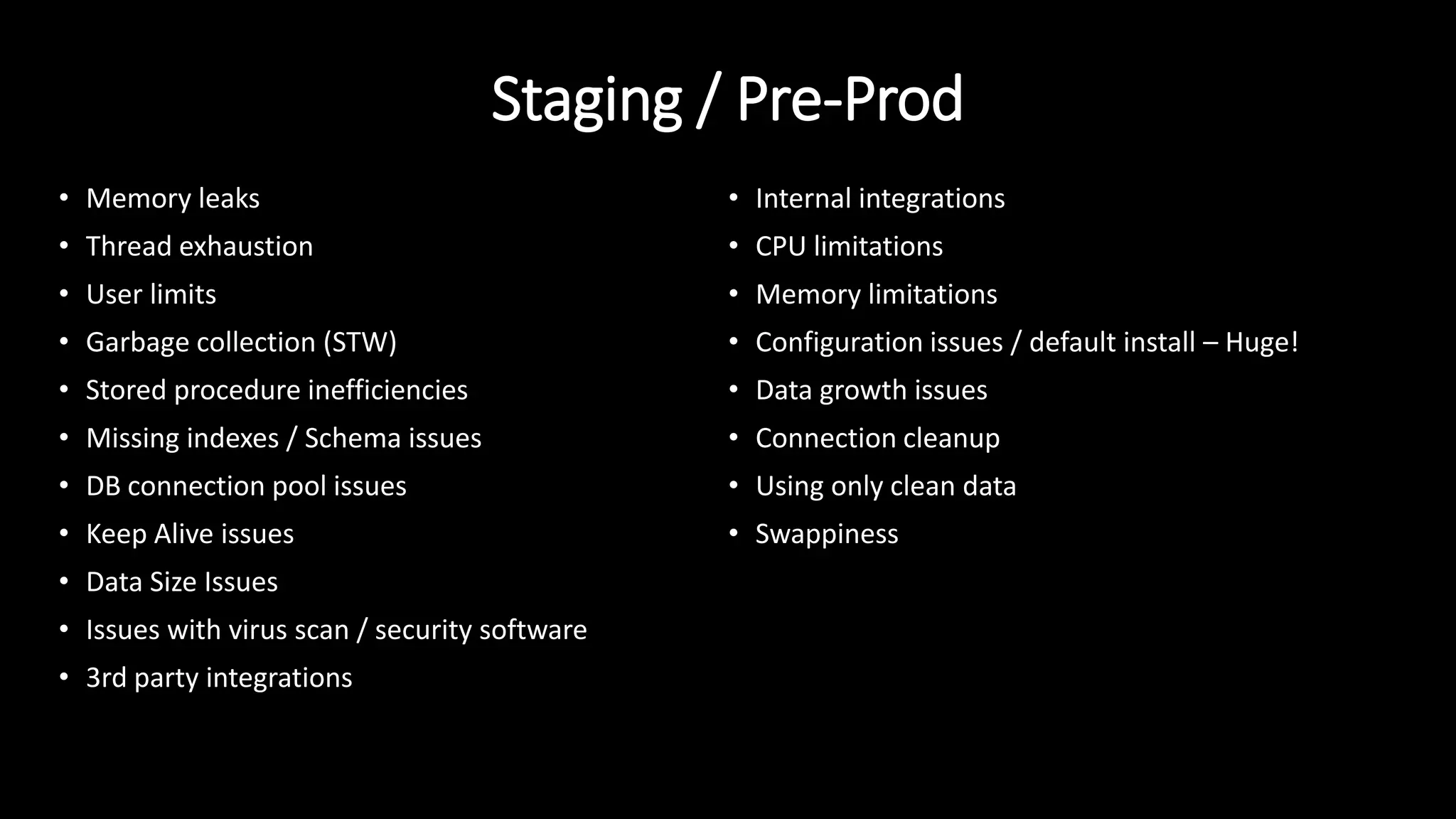

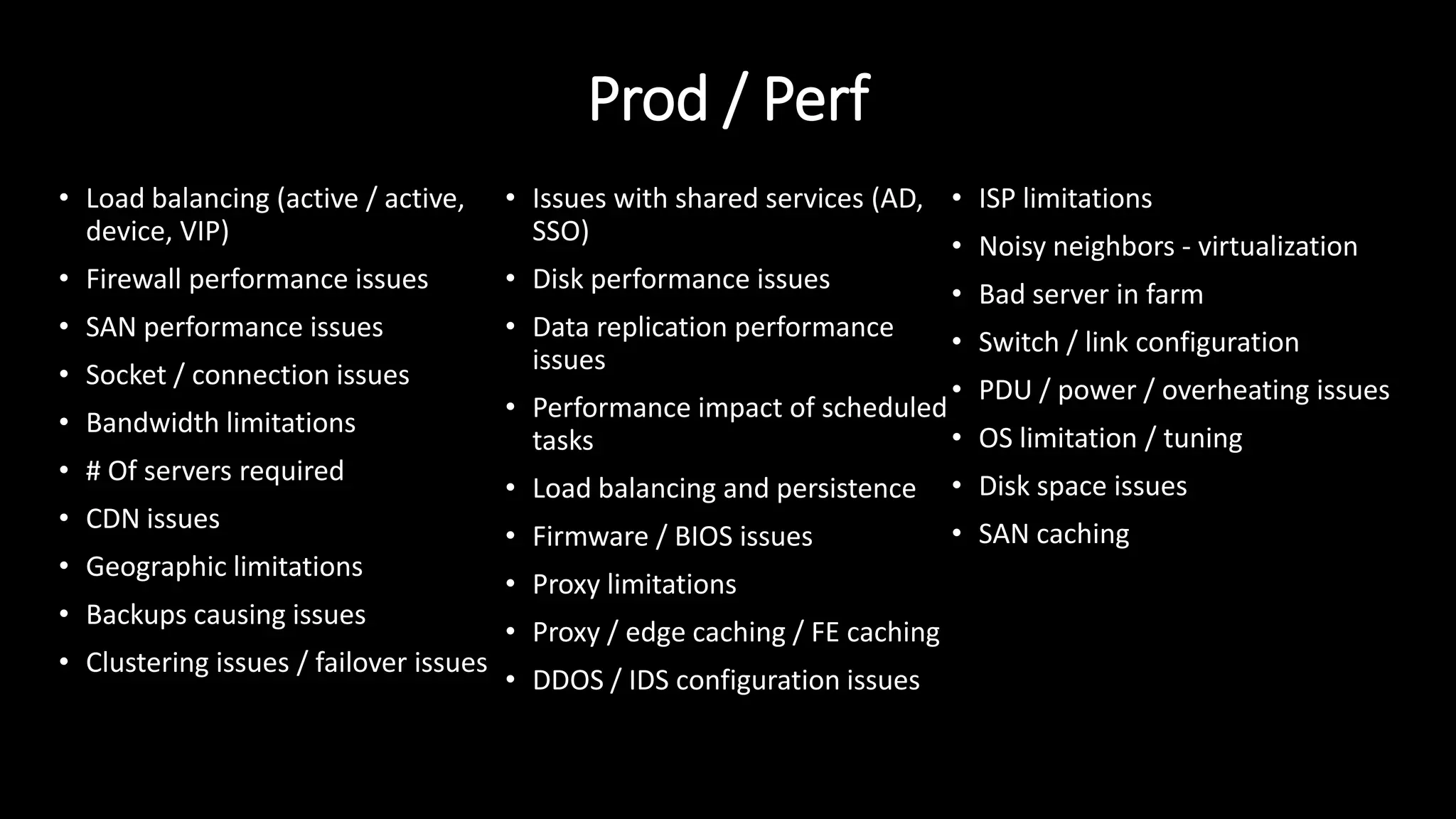

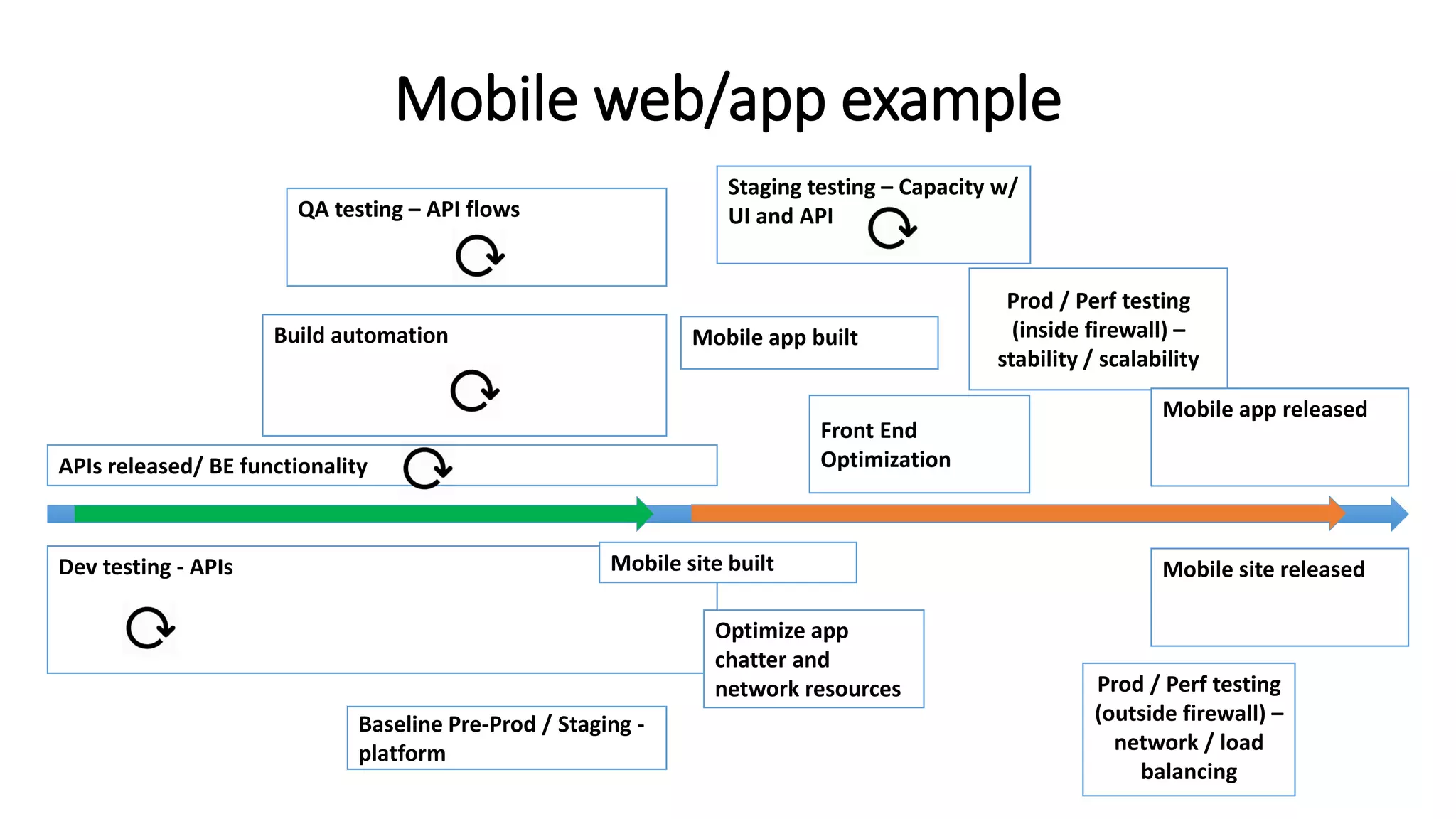

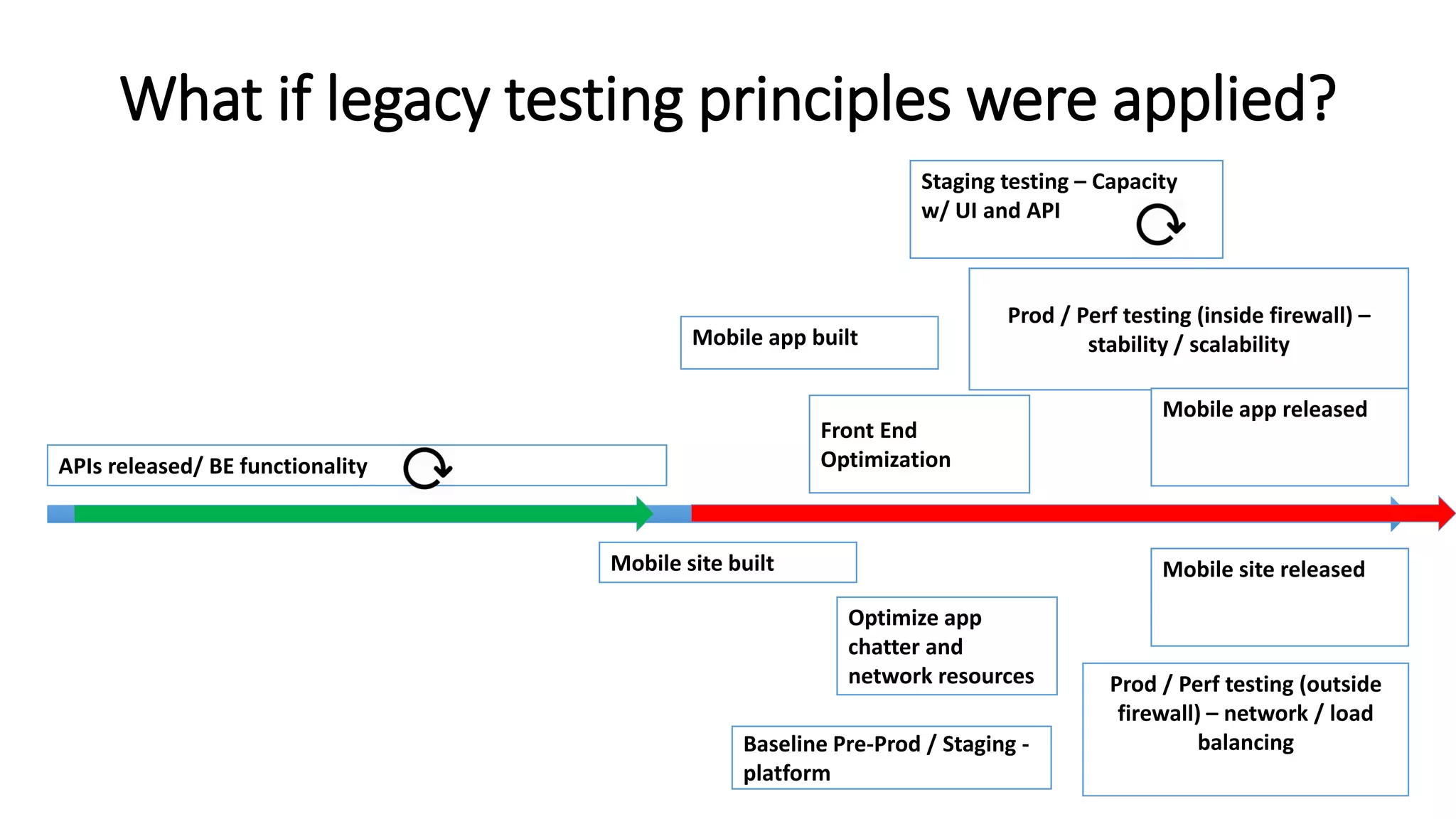

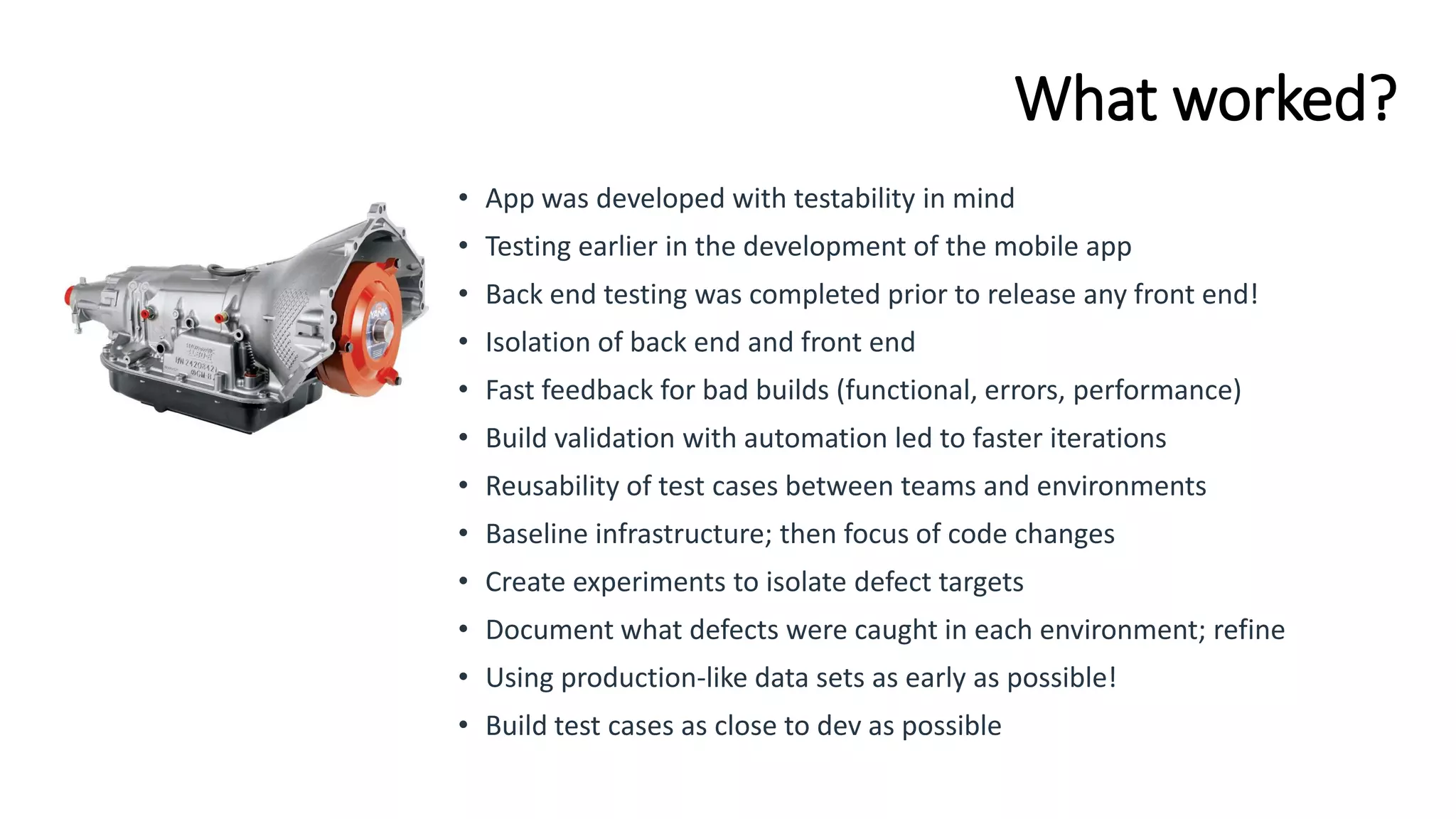

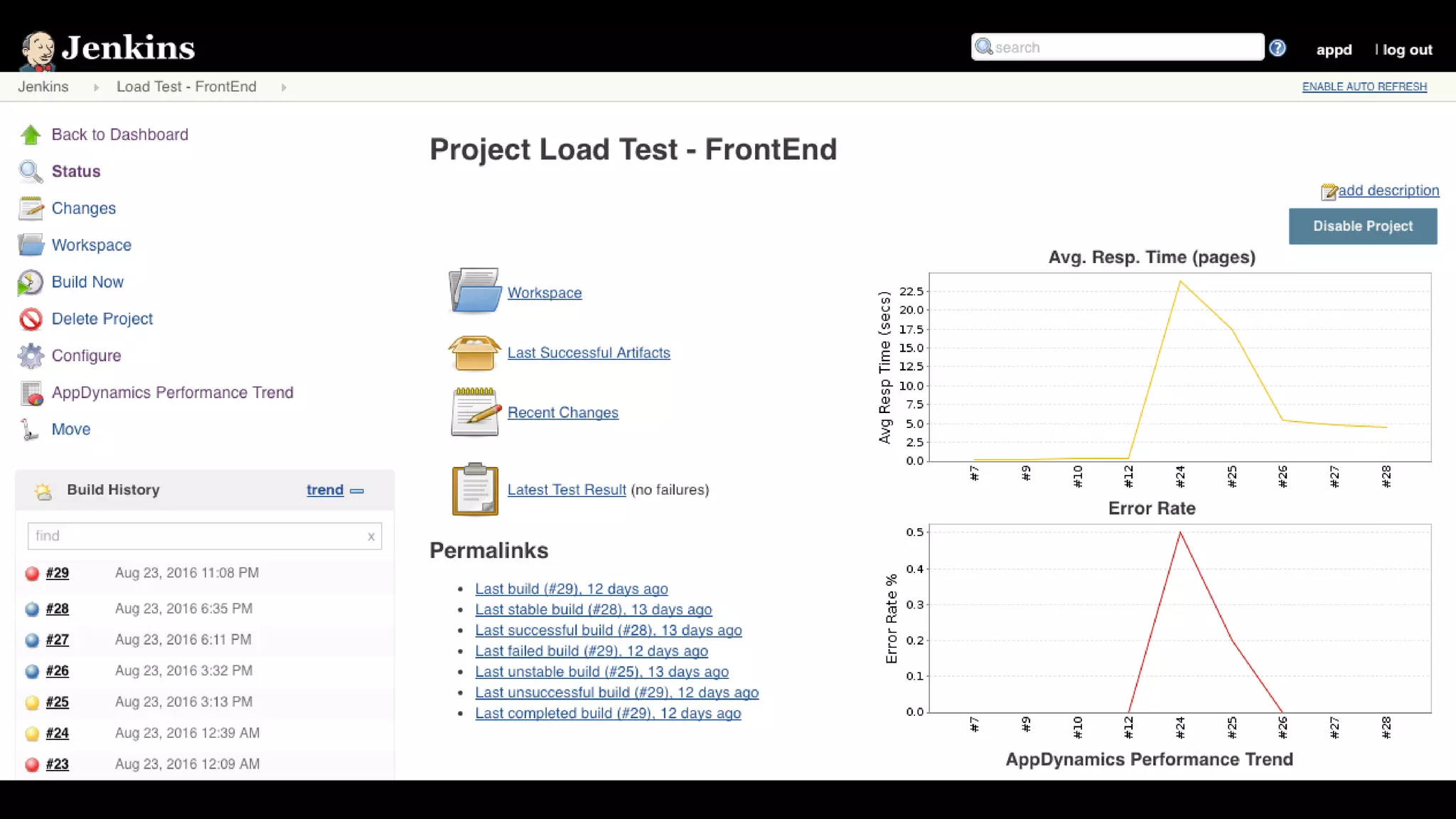

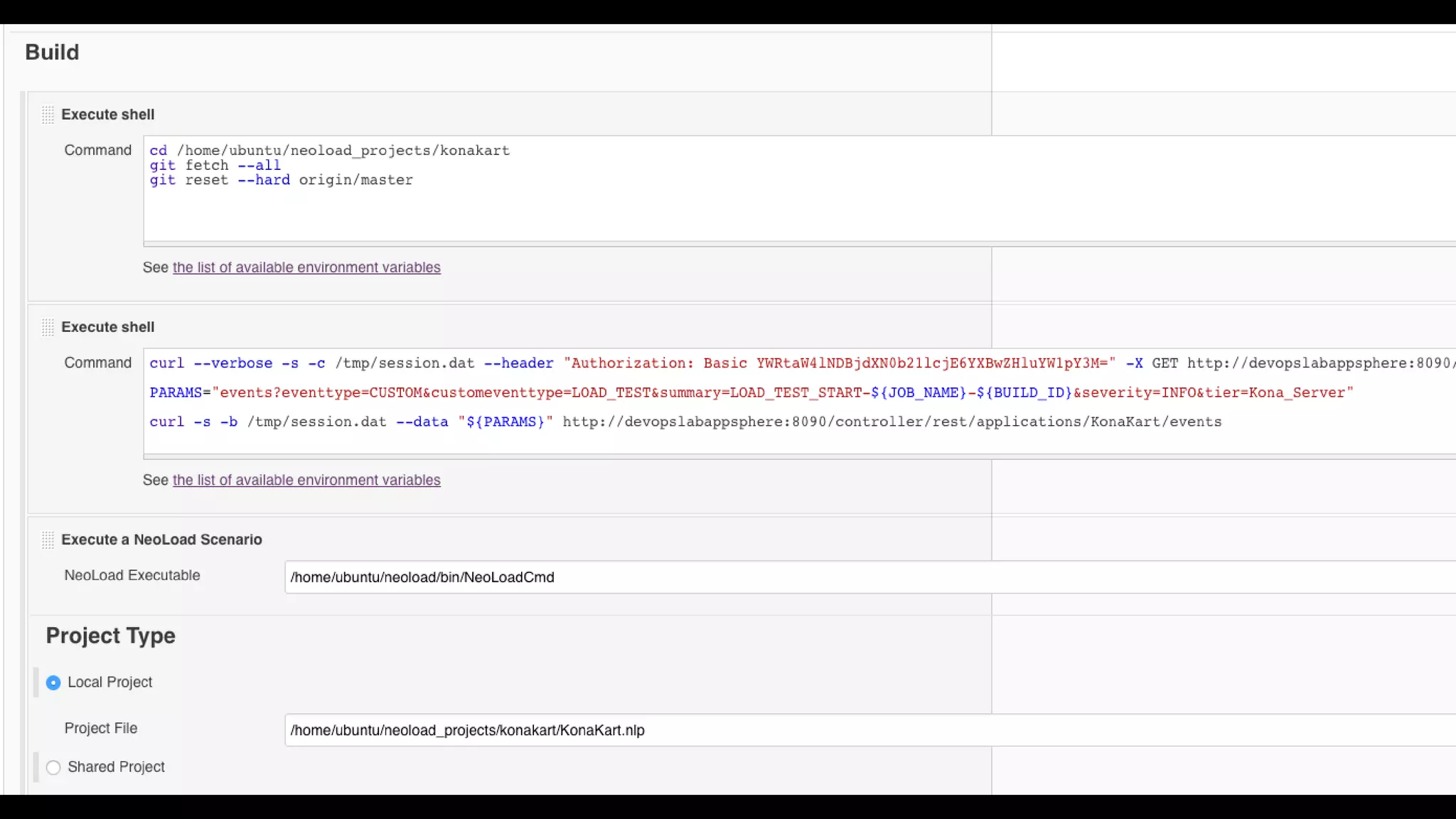

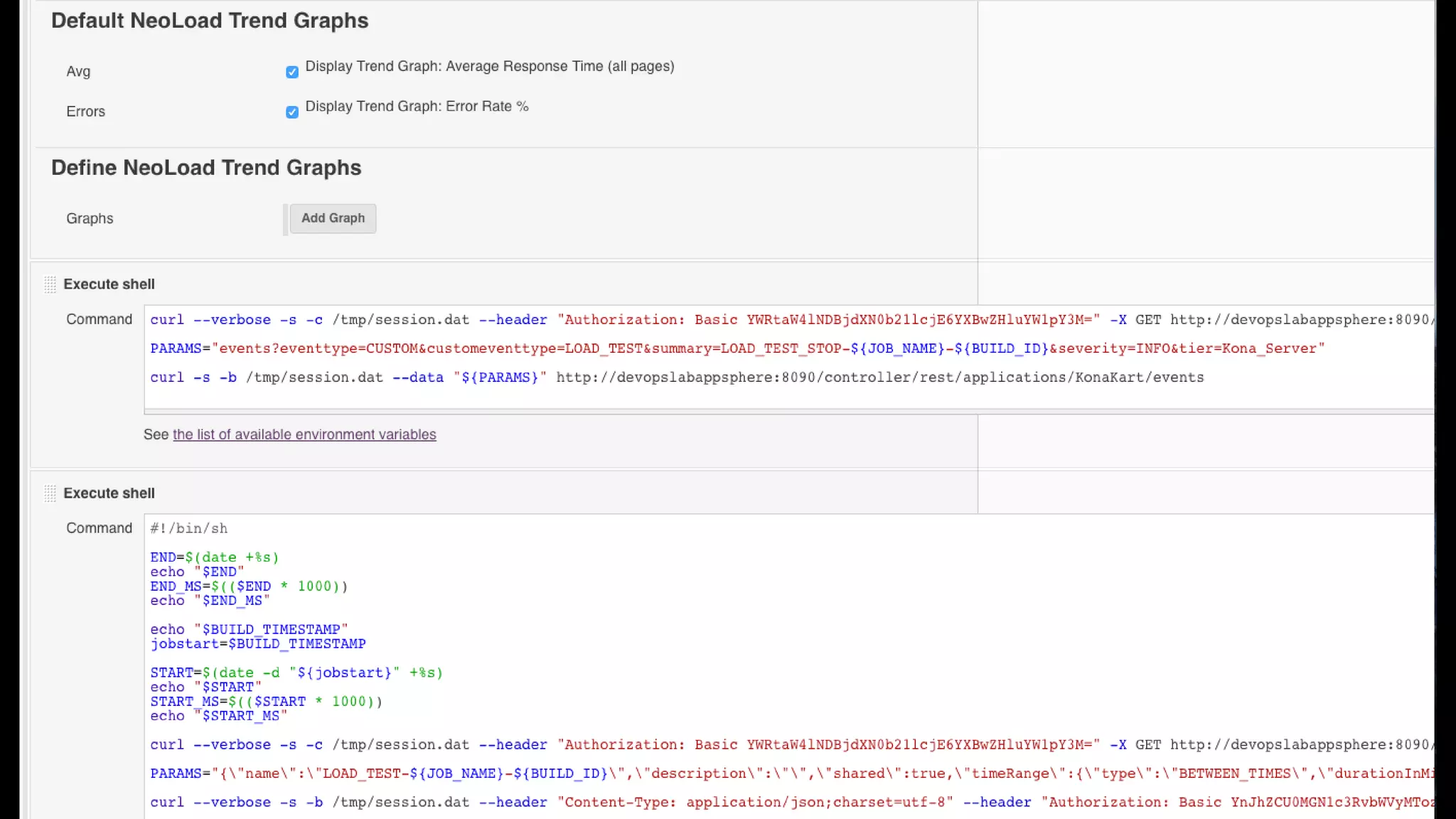

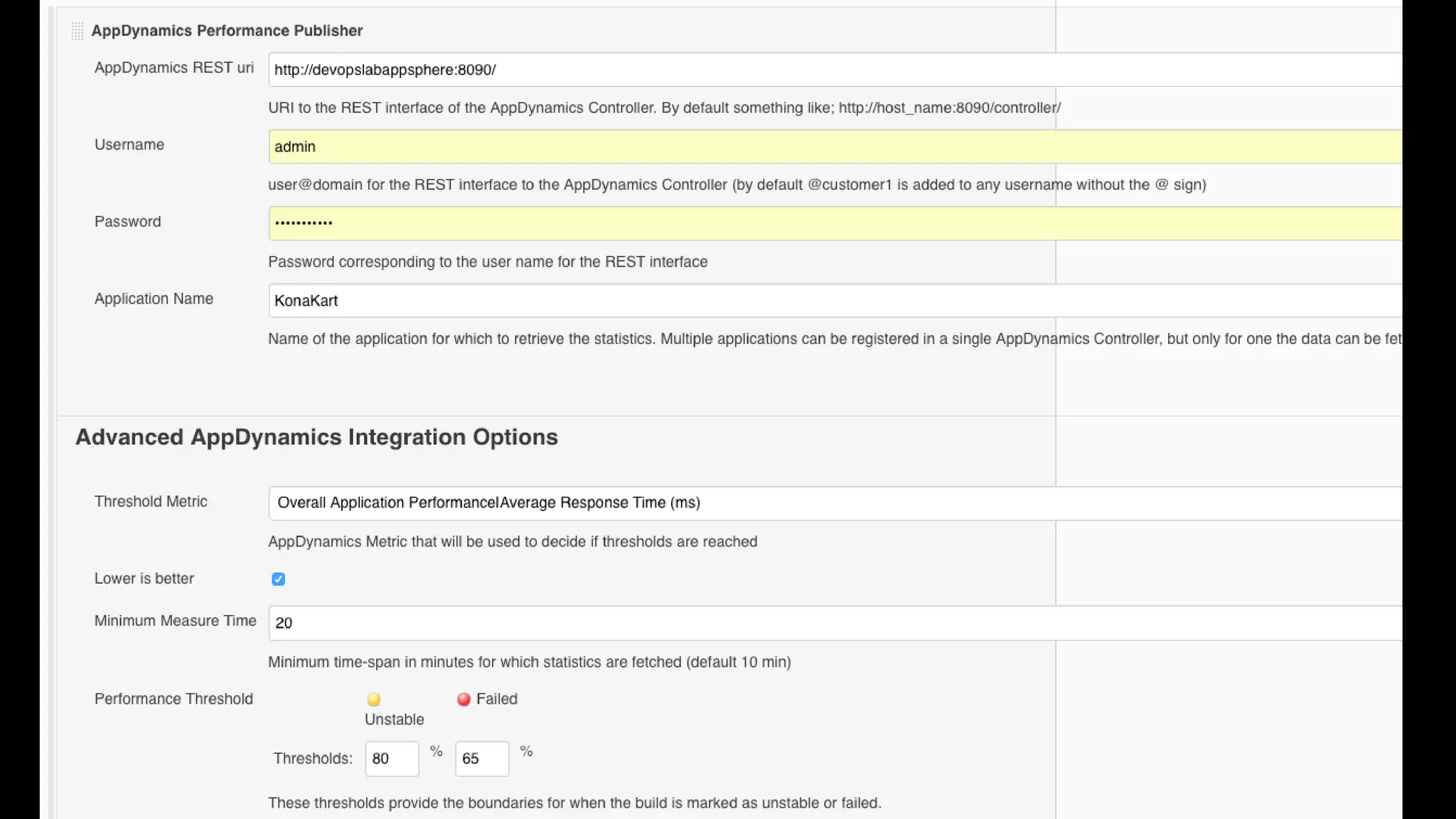

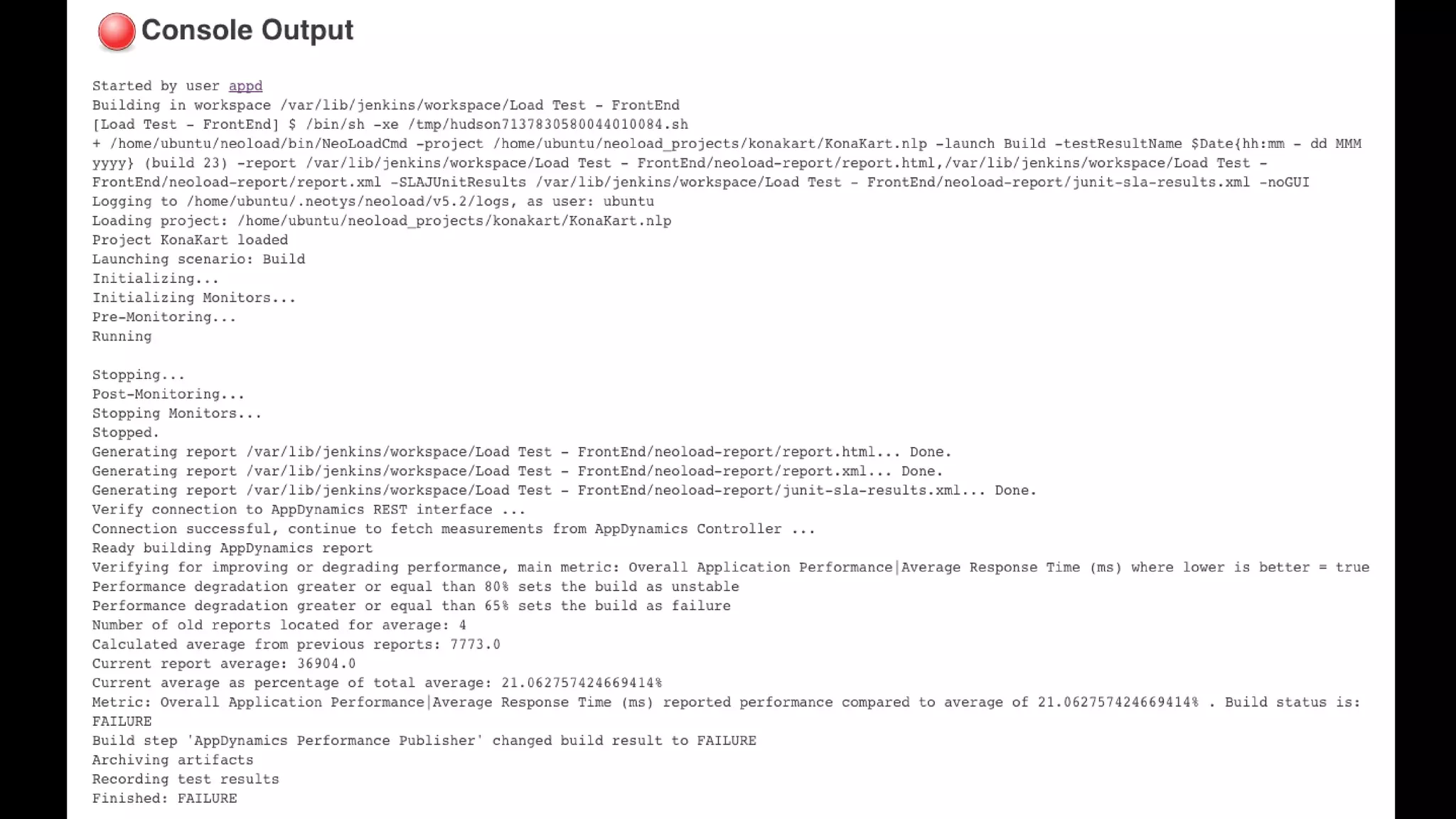

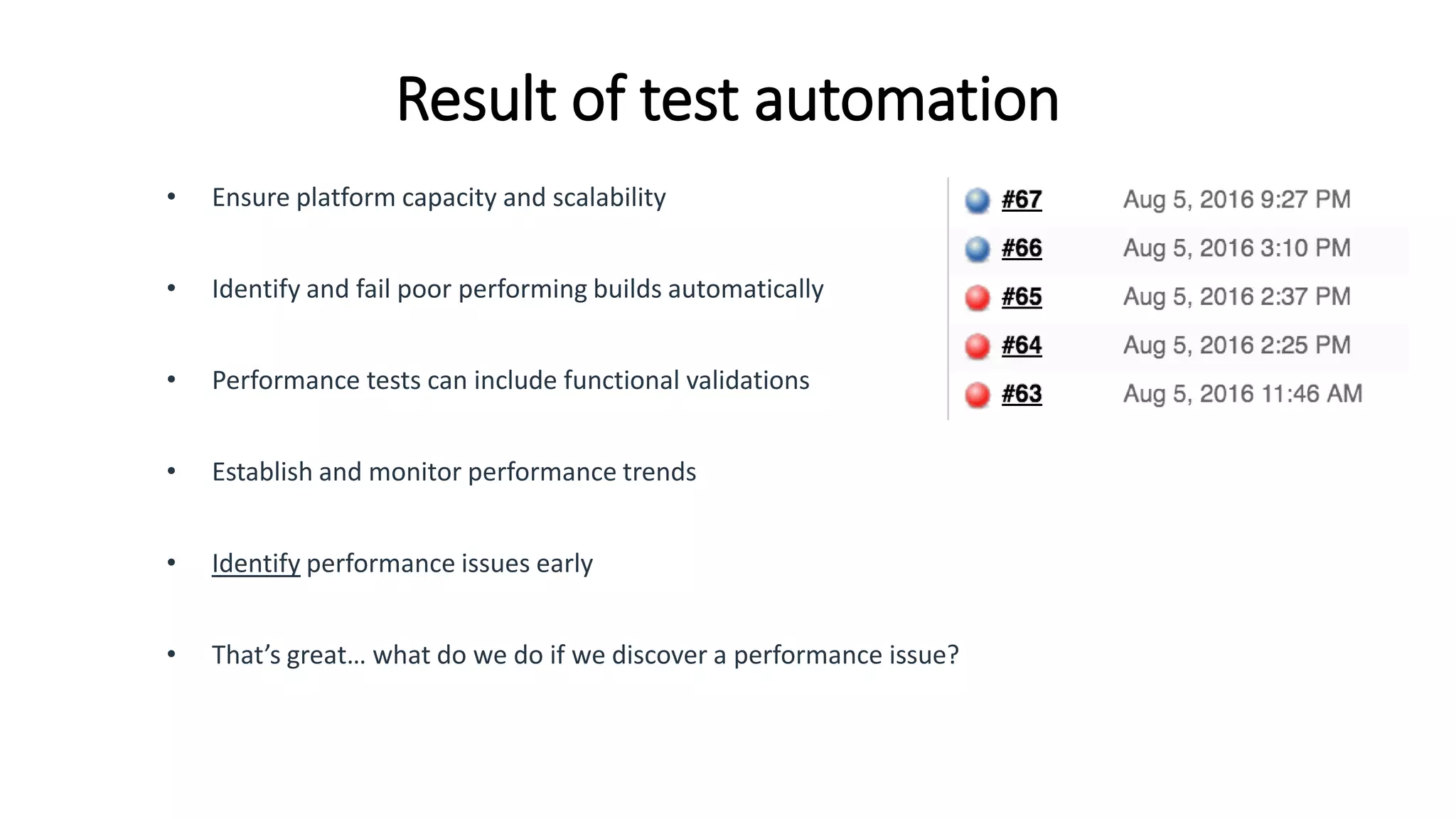

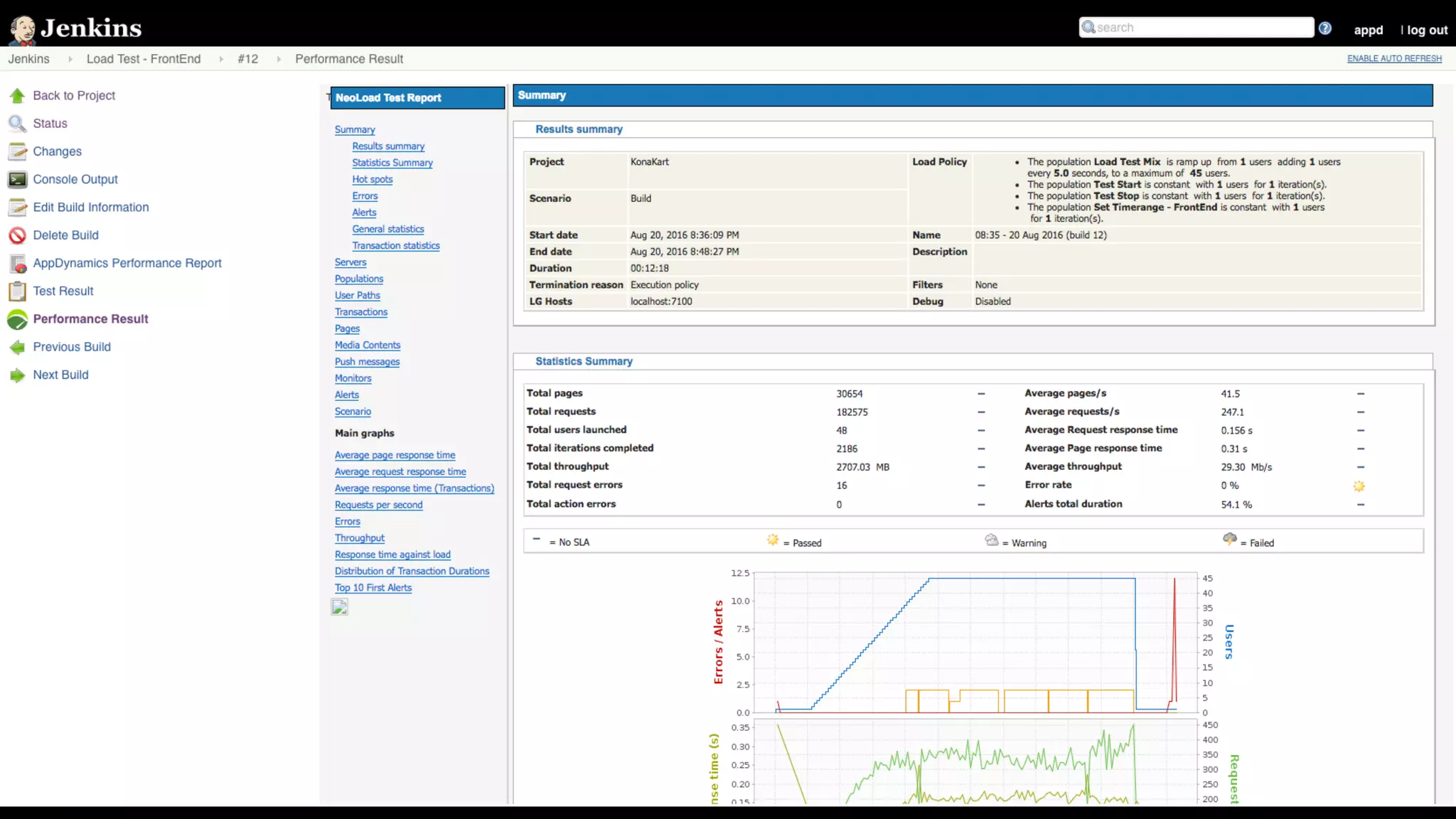

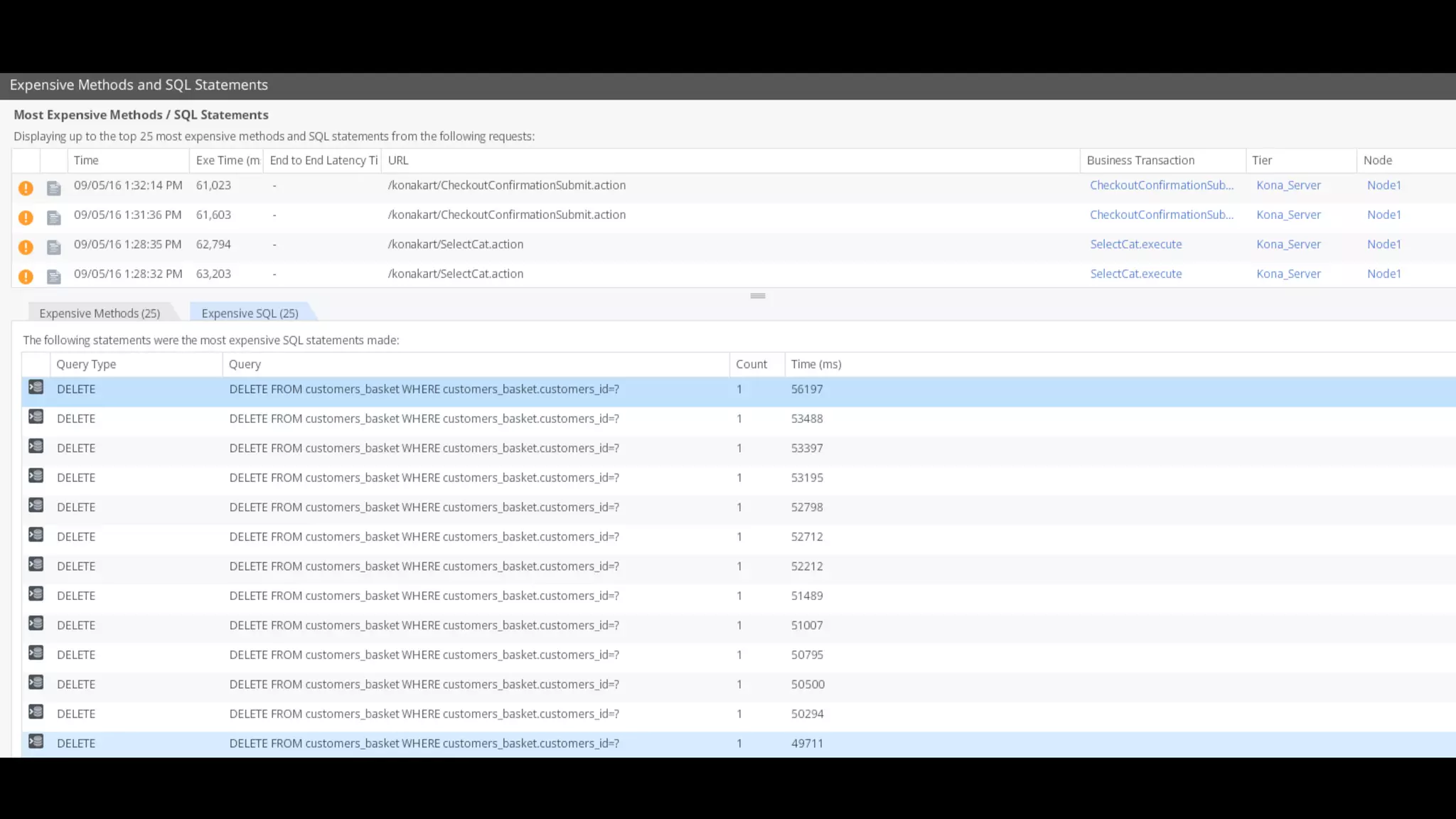

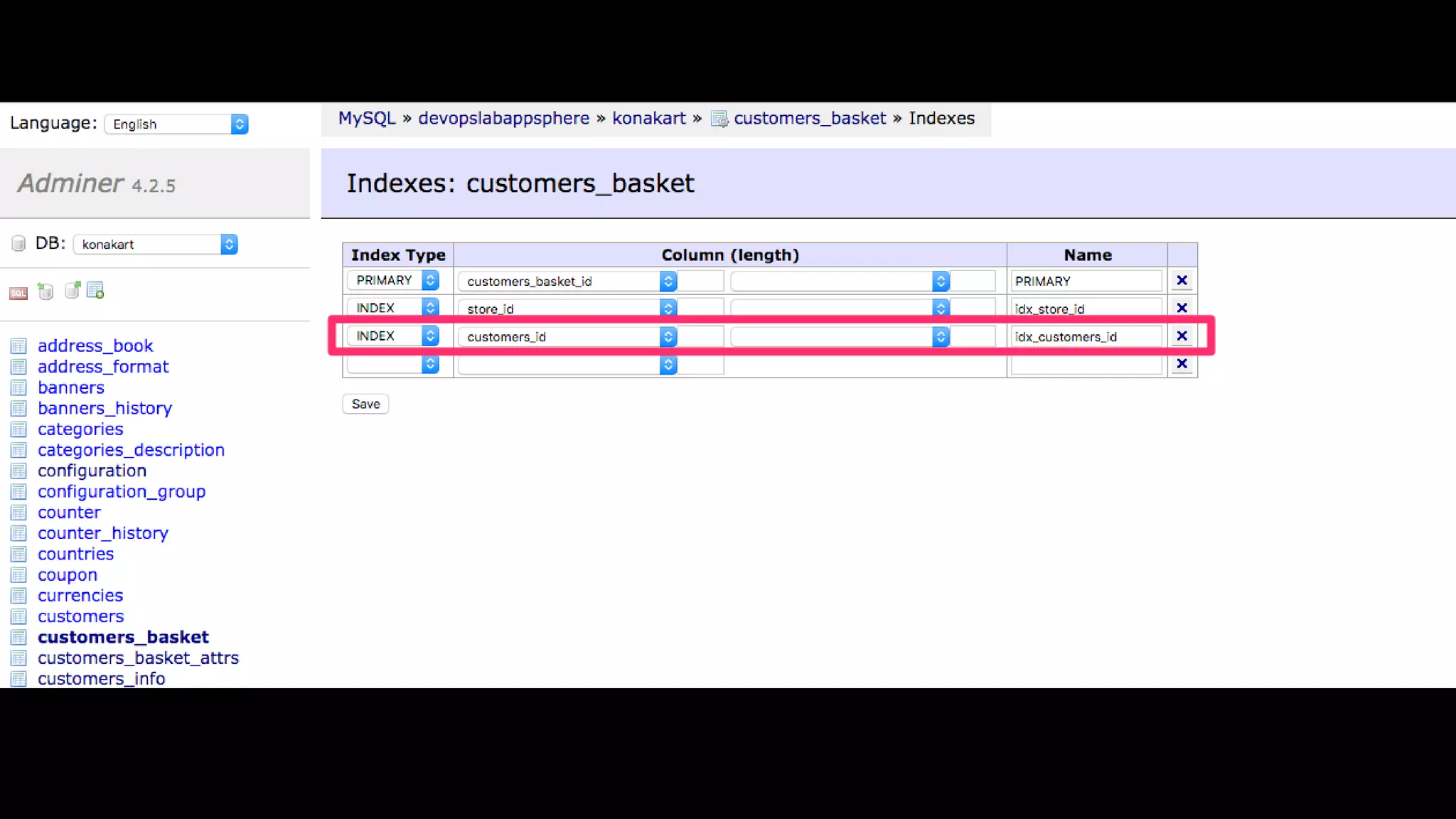

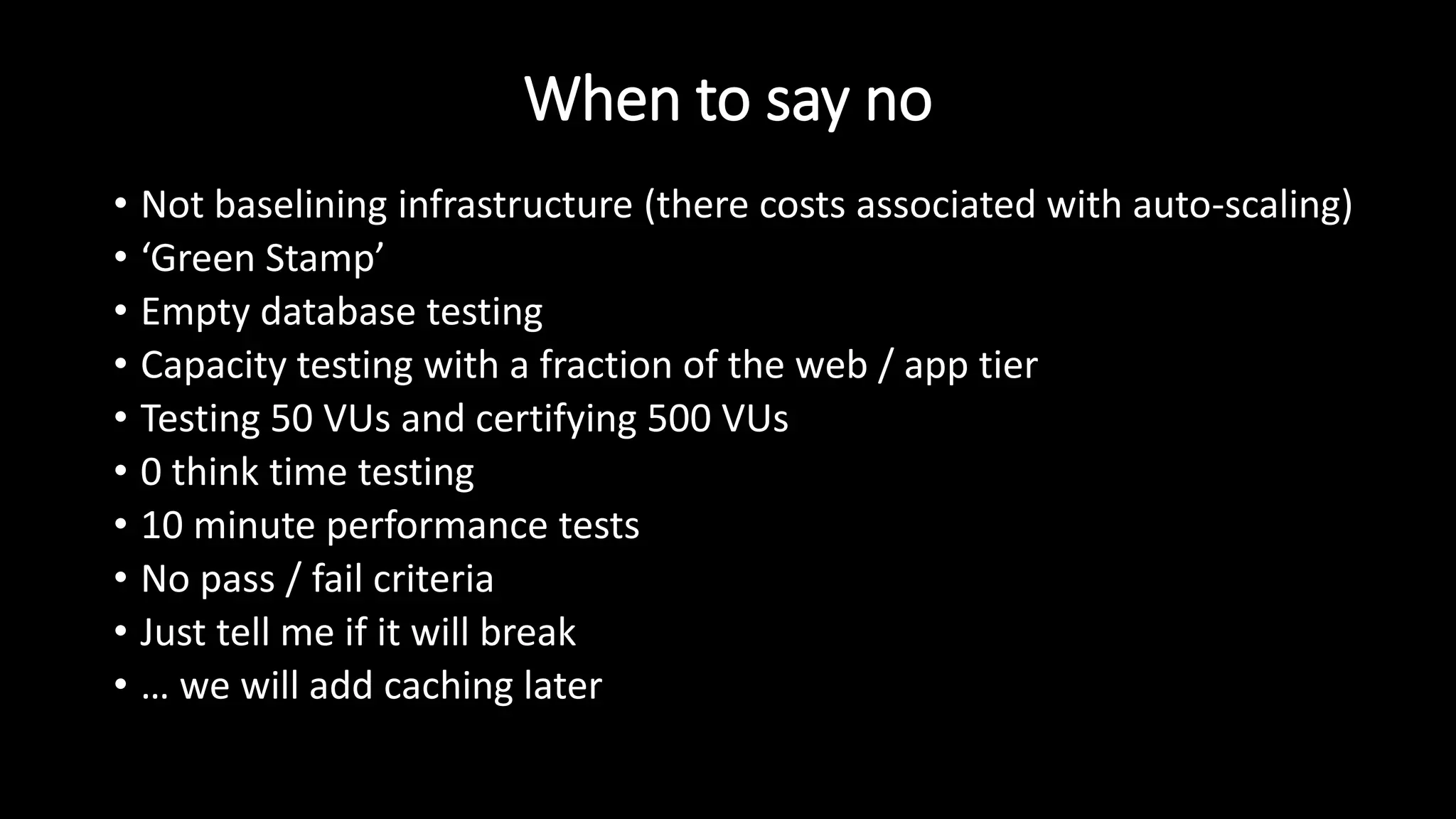

The document presents a performance testing seminar led by Brad Stoner, who draws on his extensive experience in the field to argue that performance testing belongs throughout the software development lifecycle. It covers the traditional challenges of performance testing, the shift toward agile methodologies, and the need for test automation to make testing more efficient and accurate. The seminar stresses testing early, so that performance issues are addressed proactively and applications scale reliably in production.