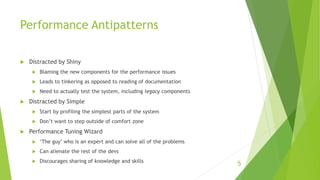

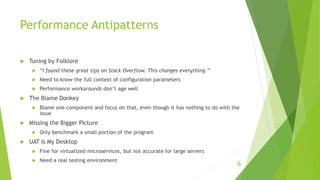

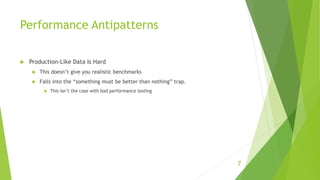

This document discusses best practices and common antipatterns in performance optimization. It notes that benchmarking a system as a whole is easier than optimizing small parts in isolation, and that test environments should mimic production. The causes of antipatterns it identifies include unvalidated assumptions, boredom, resume padding, peer pressure, a lack of understanding of problems or capabilities, and problems that are misunderstood or do not exist. The specific antipatterns it calls out are being distracted by new or simple components without proper testing, relying on a single "expert", following folklore without full context, blaming the wrong components, missing the bigger picture, using unrealistic testing environments, and believing that inadequate data is better than no data for testing.
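As one illustration of the "blaming the wrong components" antipattern, the sketch below times each stage of a small pipeline and reports the slowest one, so that the component to investigate is chosen from measurements rather than assumption. It is a minimal sketch and not taken from the slides: the stage functions (`parse`, `slow_lookup`, `summarize`) and the helpers `time_stages` and `slowest_stage` are hypothetical names invented for this example, and the `time.sleep` call stands in for a slow dependency.

```python
import time
from typing import Callable, Dict, List, Tuple

# A stage is a (name, function) pair; each function takes the previous
# stage's output. All names here are hypothetical, for illustration only.
Stage = Tuple[str, Callable[[object], object]]


def time_stages(stages: List[Stage], payload: object, repeats: int = 5) -> Dict[str, float]:
    """Run the pipeline `repeats` times and return mean seconds per stage."""
    totals = {name: 0.0 for name, _ in stages}
    for _ in range(repeats):
        value = payload
        for name, fn in stages:
            start = time.perf_counter()
            value = fn(value)
            totals[name] += time.perf_counter() - start
    return {name: total / repeats for name, total in totals.items()}


def slowest_stage(timings: Dict[str, float]) -> str:
    """Return the name of the stage with the largest mean time."""
    return max(timings, key=timings.get)


def parse(text: str) -> List[int]:
    return [int(token) for token in text.split()]


def slow_lookup(numbers: List[int]) -> List[int]:
    time.sleep(0.02)  # stand-in for a slow dependency (assumption)
    return numbers


def summarize(numbers: List[int]) -> int:
    return sum(numbers)


if __name__ == "__main__":
    pipeline = [("parse", parse), ("lookup", slow_lookup), ("summarize", summarize)]
    timings = time_stages(pipeline, "1 2 3")
    for name, seconds in timings.items():
        print(f"{name}: {seconds * 1000:.2f} ms")
    print("slowest:", slowest_stage(timings))
```

The numbers this produces only reflect the machine and input it runs on, which is why the summary's other points still apply: such measurements are most useful when taken in a production-like environment with realistic data.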