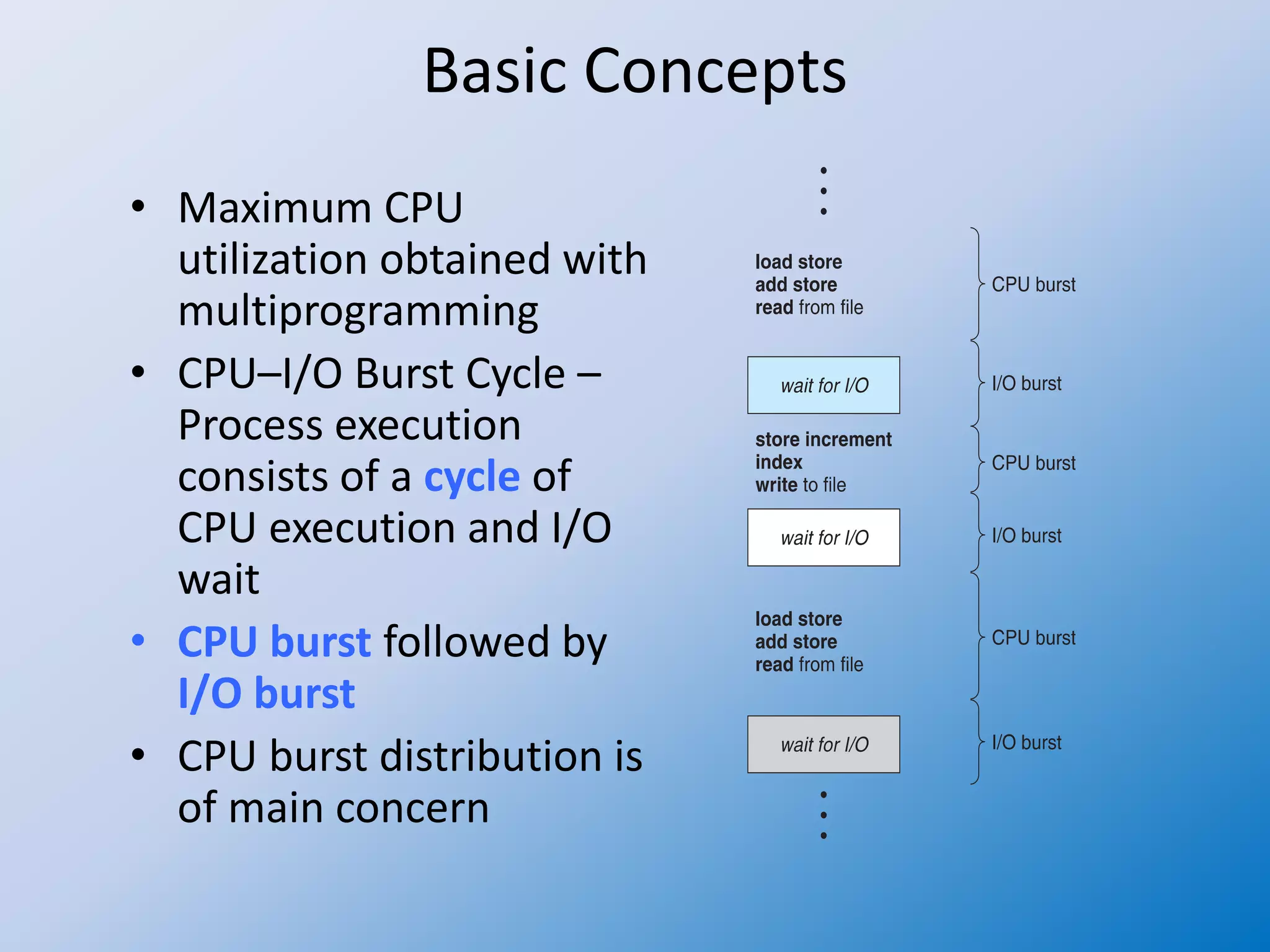

The document outlines the fundamentals of CPU scheduling in operating systems, starting from the objectives of multiprogramming and time-sharing systems. It describes how CPU scheduling works, including the roles of the short-term scheduler and the dispatcher, the scheduling criteria, and the scheduling queues. It also discusses the life cycle of a process as it moves through the scheduling queues and the importance of optimization criteria for efficient CPU utilization.
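To make the idea of scheduling criteria concrete, here is a minimal Python sketch that computes a few commonly used ones (waiting time, turnaround time, throughput, and CPU utilization) for a small set of jobs. It is an illustration under stated assumptions, not the document's own method: the `Job` class, the `summarize_arrival_order` function, and the sample workload are hypothetical, and the jobs are simply run to completion in arrival order because that is the easiest policy to trace by hand.

```python
from dataclasses import dataclass


@dataclass
class Job:
    # Hypothetical job record: times are in arbitrary time units.
    name: str
    arrival: int  # time at which the job enters the ready queue
    burst: int    # CPU time the job needs


def summarize_arrival_order(jobs):
    """Run jobs to completion in arrival order and report scheduling criteria.

    Assumption: a single CPU, no preemption, no context-switch cost.
    """
    if not jobs:
        raise ValueError("at least one job is required")

    clock = 0  # current time
    busy = 0   # total time the CPU spent executing jobs
    per_job = []

    # sorted() is stable, so jobs with equal arrival times keep their input order.
    for job in sorted(jobs, key=lambda j: j.arrival):
        start = max(clock, job.arrival)  # CPU idles if the job has not arrived yet
        finish = start + job.burst
        per_job.append({
            "name": job.name,
            "waiting": start - job.arrival,      # time spent in the ready queue
            "turnaround": finish - job.arrival,  # arrival to completion
        })
        busy += job.burst
        clock = finish

    n = len(per_job)
    return {
        "per_job": per_job,
        "avg_waiting": sum(r["waiting"] for r in per_job) / n,
        "avg_turnaround": sum(r["turnaround"] for r in per_job) / n,
        "throughput": n / clock,         # jobs completed per time unit
        "cpu_utilization": busy / clock, # fraction of elapsed time the CPU was busy
    }


if __name__ == "__main__":
    workload = [Job("P1", 0, 24), Job("P2", 0, 3), Job("P3", 0, 3)]
    print(summarize_arrival_order(workload))
```

With this sample workload the long job runs first, so the two short jobs wait behind it. Reordering the input list so the short jobs come first lowers the average waiting time without changing utilization, which is the kind of trade-off optimization criteria are meant to expose.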