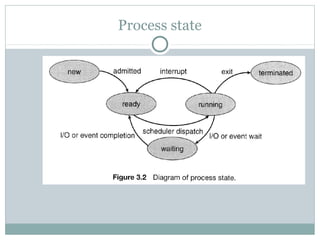

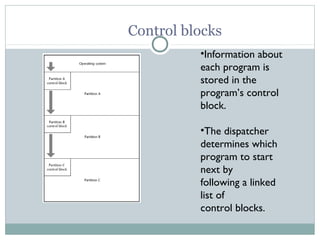

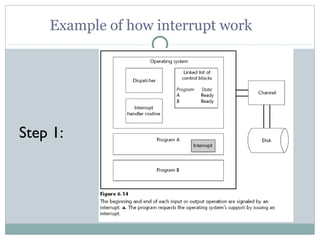

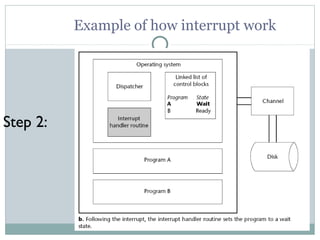

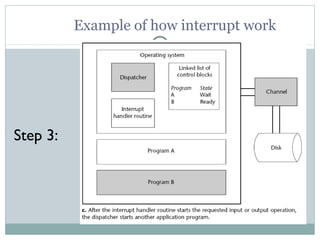

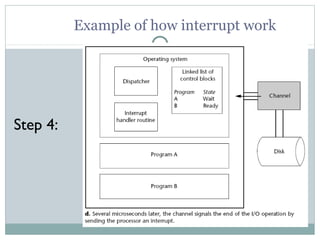

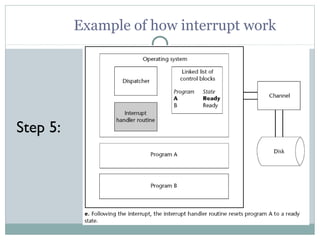

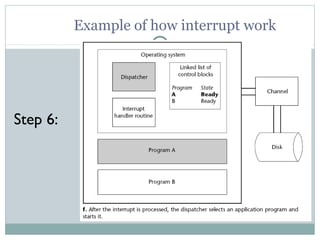

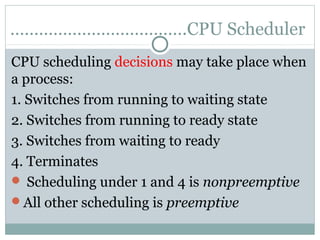

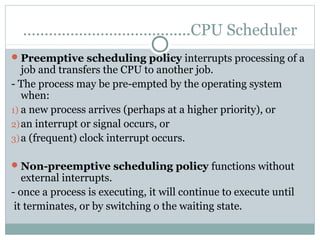

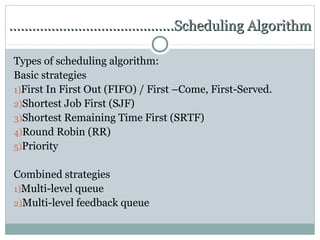

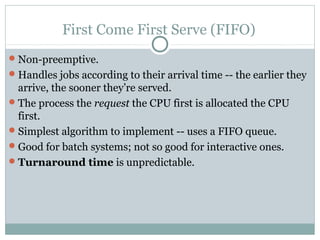

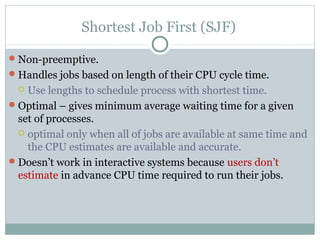

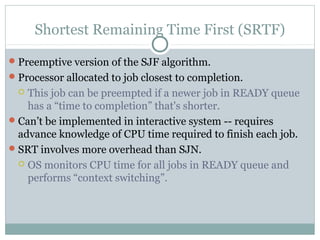

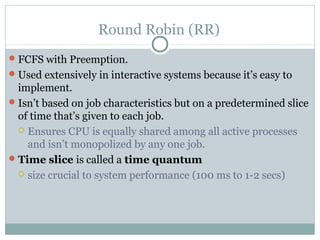

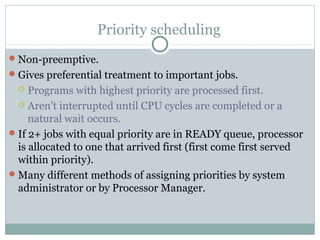

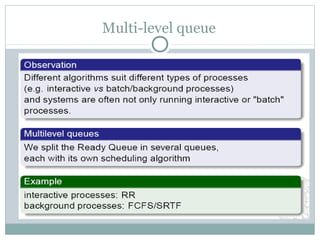

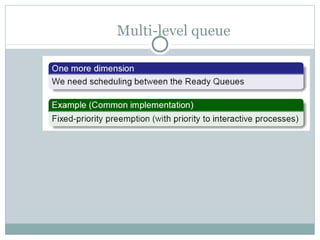

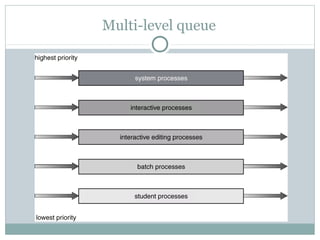

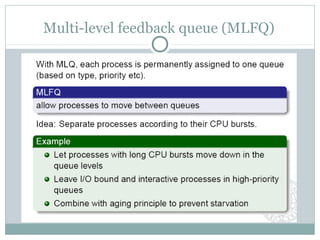

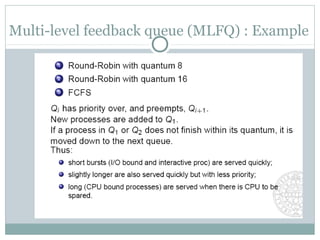

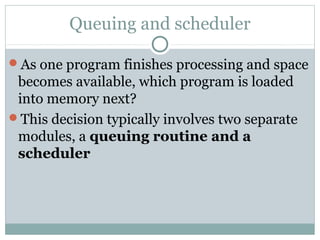

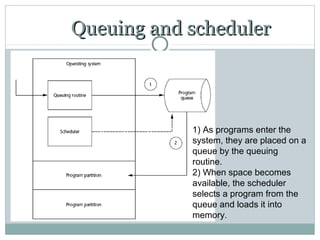

The document discusses process management in operating systems. It covers process control blocks, interrupts, and process states, along with CPU scheduling algorithms: FIFO (first-come, first-served), SJF (shortest job first), SRTF (shortest remaining time first), Round Robin, and priority scheduling. It also discusses queuing, multiprogramming versus time sharing, and scheduling criteria such as CPU utilization, throughput, turnaround time, and waiting time. Scheduling is divided into long-term, medium-term, and short-term levels, and the queue structures covered include priority queues and multilevel feedback queues.
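To make the waiting-time and turnaround-time criteria concrete, here is a minimal Python sketch that compares FIFO, non-preemptive SJF, and Round Robin on one small workload. It is an illustration under stated assumptions, not code from the slides: all processes are assumed to arrive at time 0, context-switch cost is ignored, and the function names, the burst list `[24, 3, 3]`, and the quantum of 4 are hypothetical choices made for this example.

```python
from collections import deque


def fifo_waiting(bursts):
    """FIFO: run processes in list order; each waits for all earlier bursts."""
    waiting, clock = [], 0
    for burst in bursts:
        waiting.append(clock)
        clock += burst
    return waiting


def sjf_waiting(bursts):
    """Non-preemptive SJF: run the shortest burst first (ties keep list order)."""
    waiting, clock = [0] * len(bursts), 0
    for i in sorted(range(len(bursts)), key=lambda i: bursts[i]):
        waiting[i] = clock
        clock += bursts[i]
    return waiting


def round_robin_waiting(bursts, quantum):
    """Round Robin: each process runs at most `quantum` units, then requeues."""
    remaining = list(bursts)
    finish = [0] * len(bursts)
    ready = deque(range(len(bursts)))
    clock = 0
    while ready:
        i = ready.popleft()
        run = min(quantum, remaining[i])
        clock += run
        remaining[i] -= run
        if remaining[i] > 0:
            ready.append(i)      # not finished: back of the ready queue
        else:
            finish[i] = clock    # finished: record completion time
    # With arrival at t=0, waiting time = completion time - burst time.
    return [finish[i] - bursts[i] for i in range(len(bursts))]


def turnaround(waiting, bursts):
    """Turnaround time = waiting time + burst time (arrival at t=0)."""
    return [w + b for w, b in zip(waiting, bursts)]


def average(values):
    return sum(values) / len(values)


if __name__ == "__main__":
    bursts = [24, 3, 3]  # hypothetical CPU bursts for three processes
    for name, waiting in [
        ("FIFO", fifo_waiting(bursts)),
        ("SJF", sjf_waiting(bursts)),
        ("RR (q=4)", round_robin_waiting(bursts, 4)),
    ]:
        print(name, "avg waiting:", round(average(waiting), 2),
              "avg turnaround:", round(average(turnaround(waiting, bursts)), 2))
```

On this workload the long first job makes FIFO the worst case for average waiting time, SJF the best, and Round Robin falls in between. Preemptive variants such as SRTF, and workloads with staggered arrival times, would need an event loop that tracks arrivals and are left out of this sketch.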