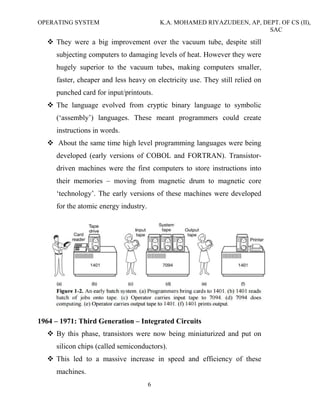

The document discusses the history and functions of operating systems. It begins by defining an operating system and describing its key roles like acting as an interface between the user and computer and managing computer resources. It then discusses the goals of operating systems as making computers easier to use and efficiently managing hardware. The main functions of an operating system are then outlined as process management, memory management, I/O management, file management, and more. Finally, the document summarizes the evolution of operating systems through five generations from the earliest vacuum tube computers to modern systems with artificial intelligence.

![OPERATING SYSTEM K.A. MOHAMED RIYAZUDEEN, AP, DEPT. OF CS (II), SAC

Unit III

CPU Scheduling Introduction:

CPU scheduling is the mechanism that lets one process use the CPU while another process is on hold (in the waiting state) because a resource such as I/O is unavailable, thereby keeping the CPU fully utilized.

The aim of CPU scheduling is to make the system efficient, fast and fair.

Objectives of Scheduling Algorithm

• Max CPU utilization [keep the CPU as busy as possible]

• Fair allocation of the CPU

• Max throughput [number of processes that complete their execution per time unit]

• Min turnaround time [time from a process's submission to the completion of its execution]

• Min waiting time [time a process spends waiting in the ready queue]

• Min response time [time from a process's submission until it produces its first response]

Scheduling Method:

Schedulers

Schedulers are special system software that handle process scheduling in various ways. Their main task is to select the jobs to be submitted into the system and to decide which process to run. Schedulers are of three types:

• Long-Term Scheduler

• Short-Term Scheduler

• Medium-Term Scheduler](https://image.slidesharecdn.com/osfullnotes2018-190907064840/85/Operating-System-48-320.jpg)
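The scheduling objectives listed in the notes (CPU utilization, throughput, turnaround time, waiting time, response time) are easier to compare once they are computed for a concrete workload. The following is a minimal sketch, not a method taken from the notes: it assumes a simple non-preemptive first-come, first-served order, measures time from 0, and treats response time as the delay until a process first gets the CPU. The `Process` class, the `fcfs_metrics` function, and the three sample processes are hypothetical names and values chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Process:
    name: str
    arrival: int  # time the process enters the ready queue
    burst: int    # CPU time the process needs


def fcfs_metrics(processes):
    """Run processes in arrival order (non-preemptive) and report the metrics."""
    clock = 0   # current time; measurement is assumed to start at 0
    busy = 0    # total time the CPU spent executing processes
    rows = {}
    for p in sorted(processes, key=lambda p: p.arrival):
        start = max(clock, p.arrival)  # CPU idles if nothing has arrived yet
        finish = start + p.burst
        rows[p.name] = {
            "turnaround": finish - p.arrival,  # submission to completion
            "waiting": start - p.arrival,      # time spent in the ready queue
            # Assumption: first response coincides with first CPU access,
            # so without preemption it equals the waiting time.
            "response": start - p.arrival,
        }
        busy += p.burst
        clock = finish
    summary = {
        "cpu_utilization": busy / clock if clock else 0.0,
        "throughput": len(processes) / clock if clock else 0.0,
    }
    return rows, summary


# Hypothetical workload, made up for illustration.
demo = [Process("P1", 0, 5), Process("P2", 1, 3), Process("P3", 2, 8)]
rows, summary = fcfs_metrics(demo)
for name, metrics in rows.items():
    print(name, metrics)
print(summary)
```

With this sample workload, P2 arrives at time 1 but cannot start until P1 finishes at time 5, so it waits 4 time units and has a turnaround time of 7. A different scheduling policy would change these per-process numbers for the same workload, which is why the objectives are stated as quantities to minimize or maximize.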