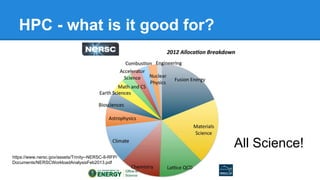

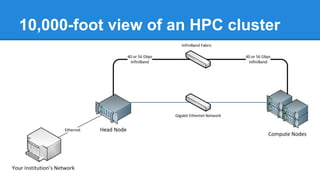

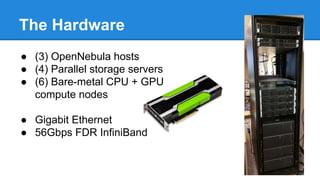

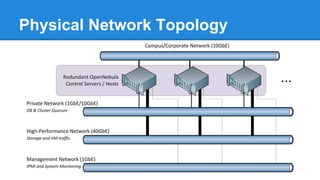

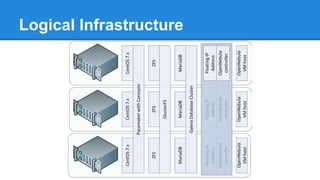

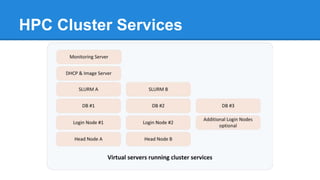

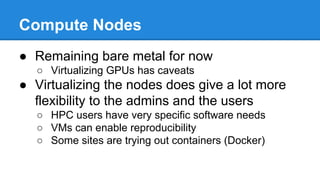

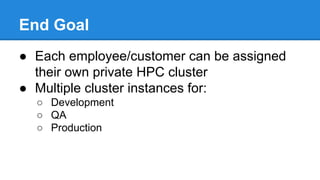

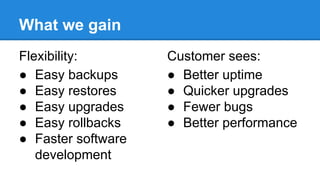

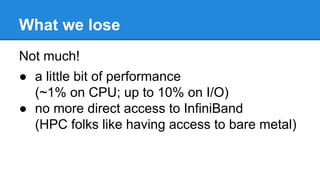

This document discusses high-performance computing (HPC) and its uses. It gives examples of HPC in physics simulation (lattice quantum chromodynamics, supernovae), planetary science (hurricane modeling), the life sciences (molecular dynamics), engineering, machine learning, and big data. It then describes Microway's test-drive HPC cluster, which uses OpenNebula to manage infrastructure across CPU and GPU nodes with InfiniBand networking. Virtualizing the cluster gives administrators and users flexibility while incurring only a minimal performance penalty.