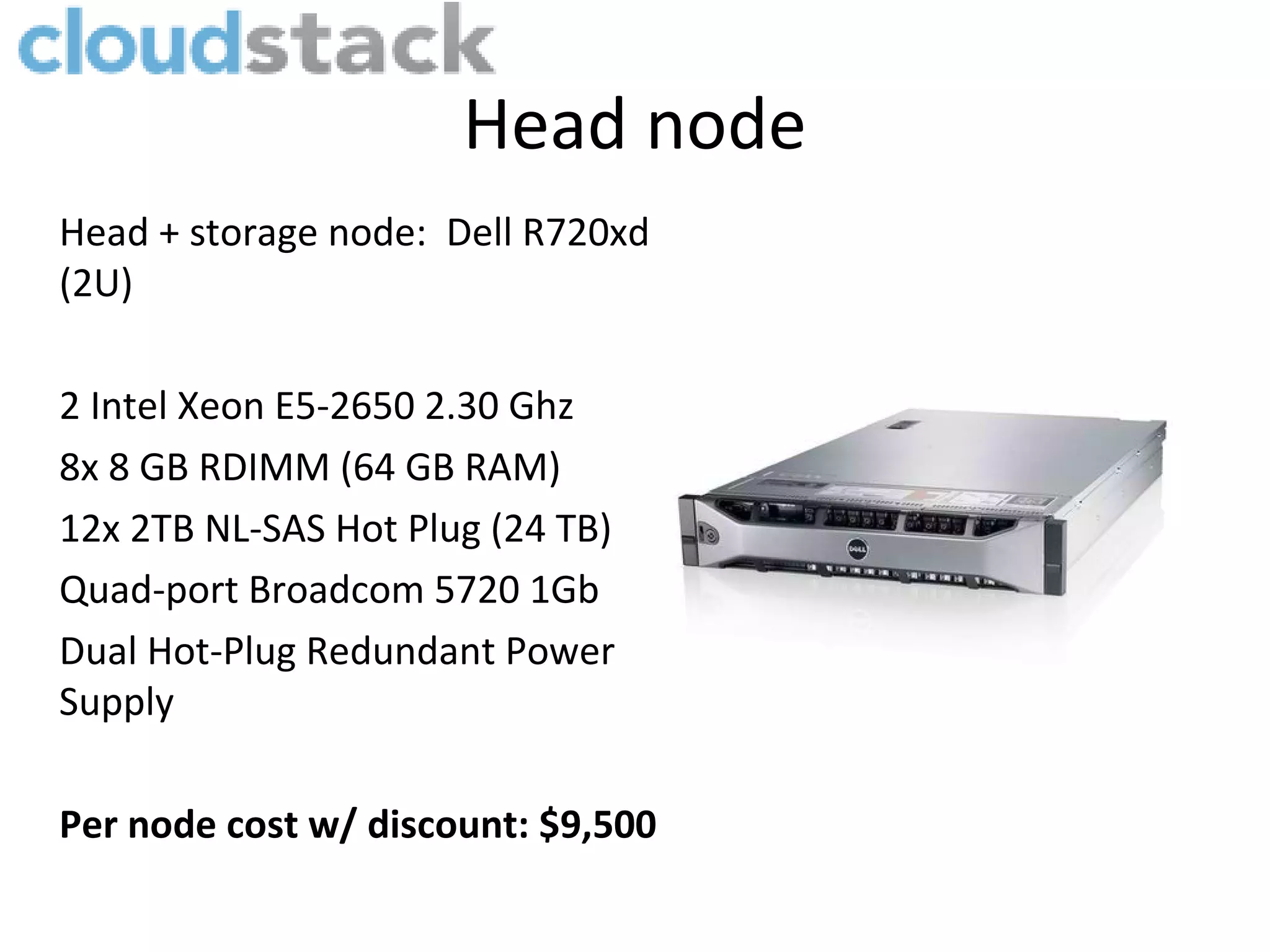

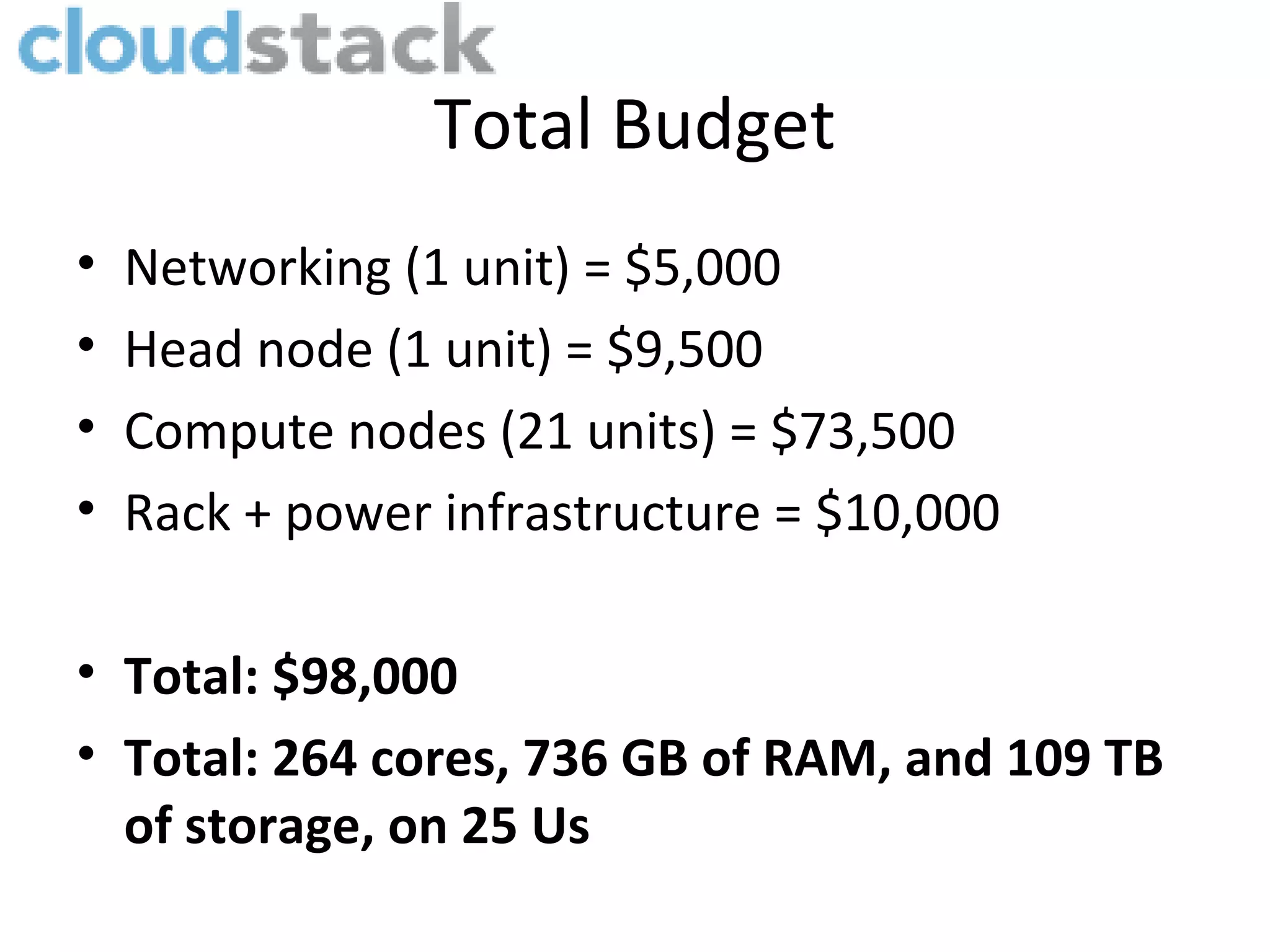

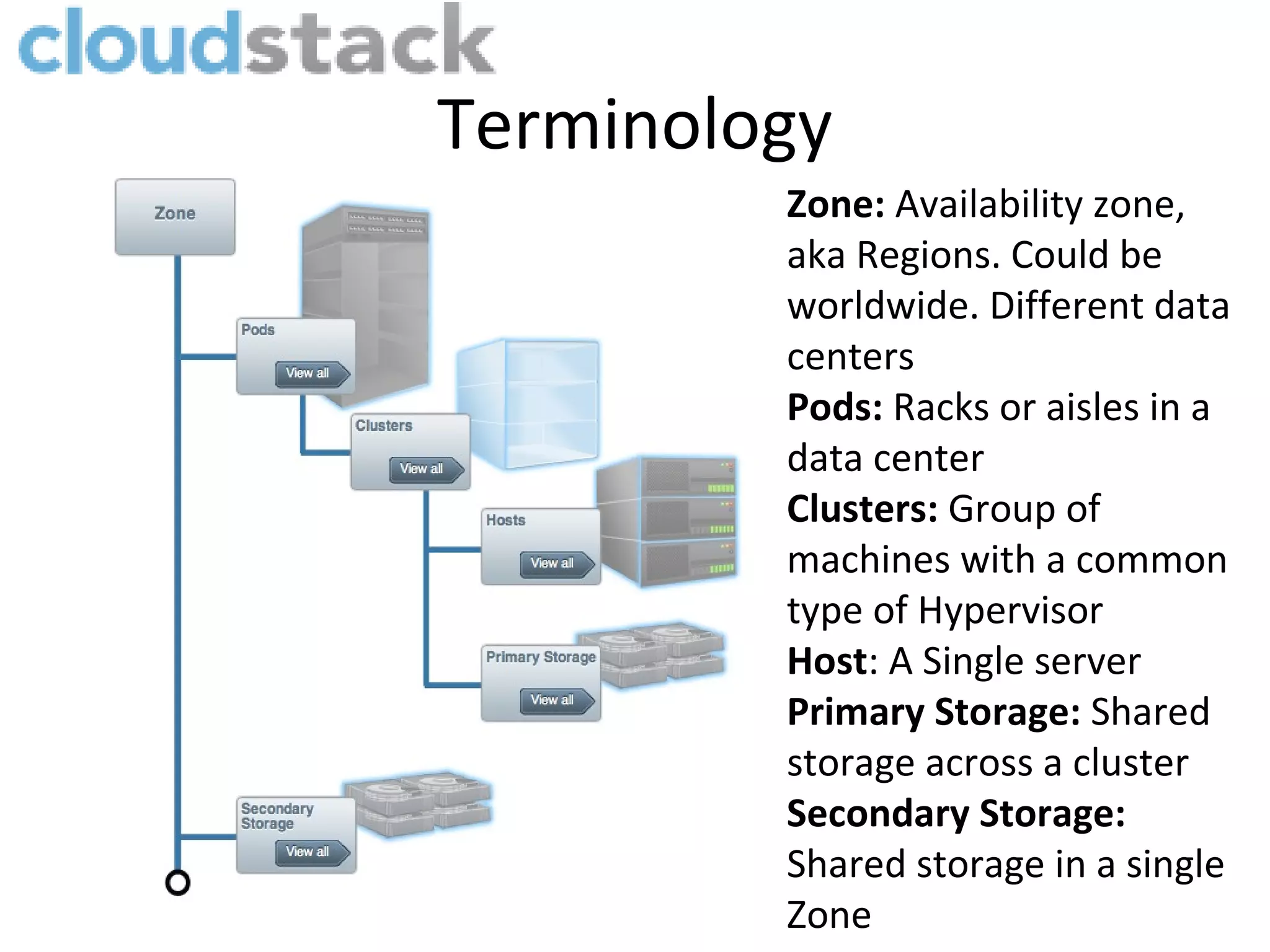

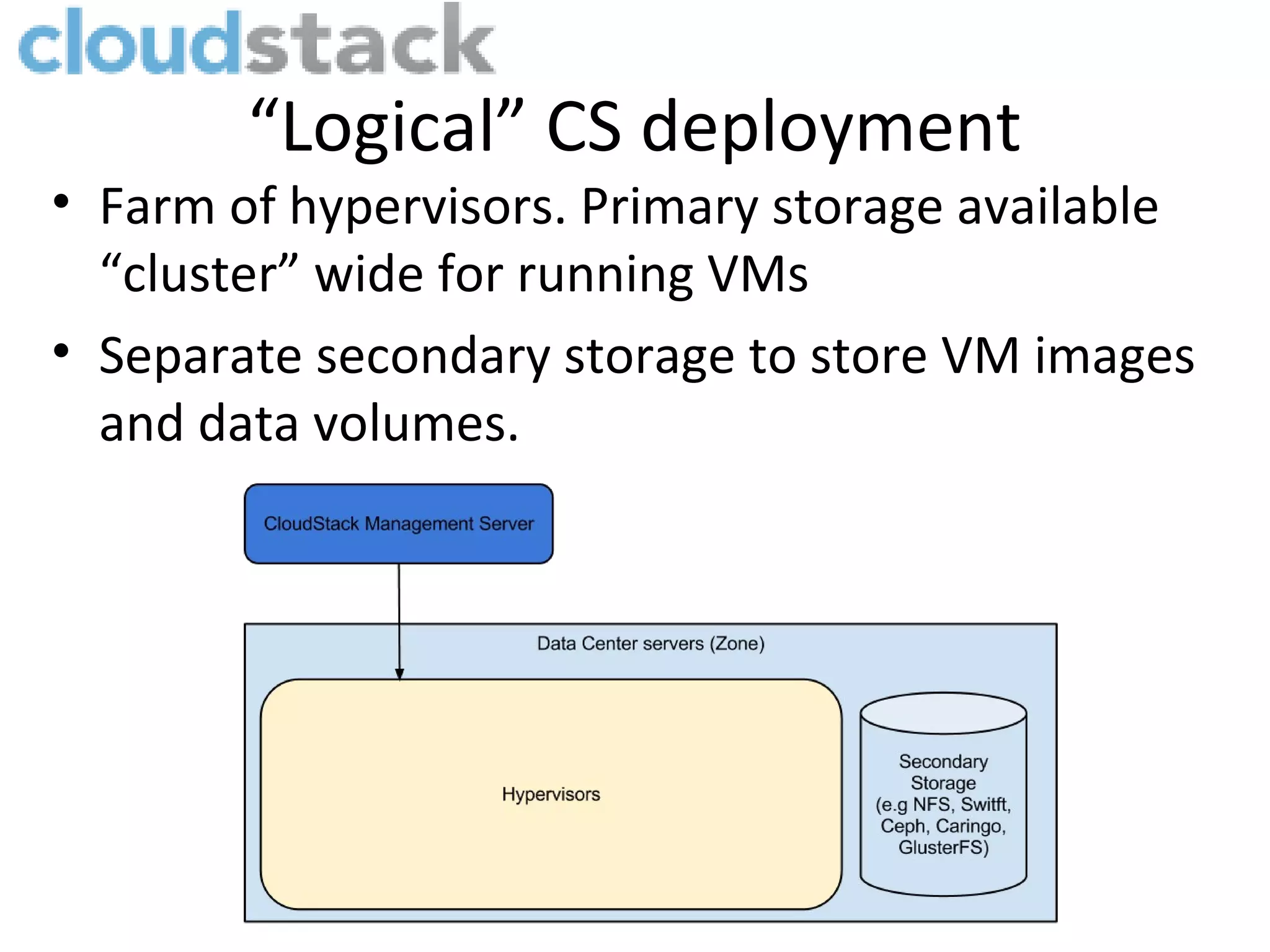

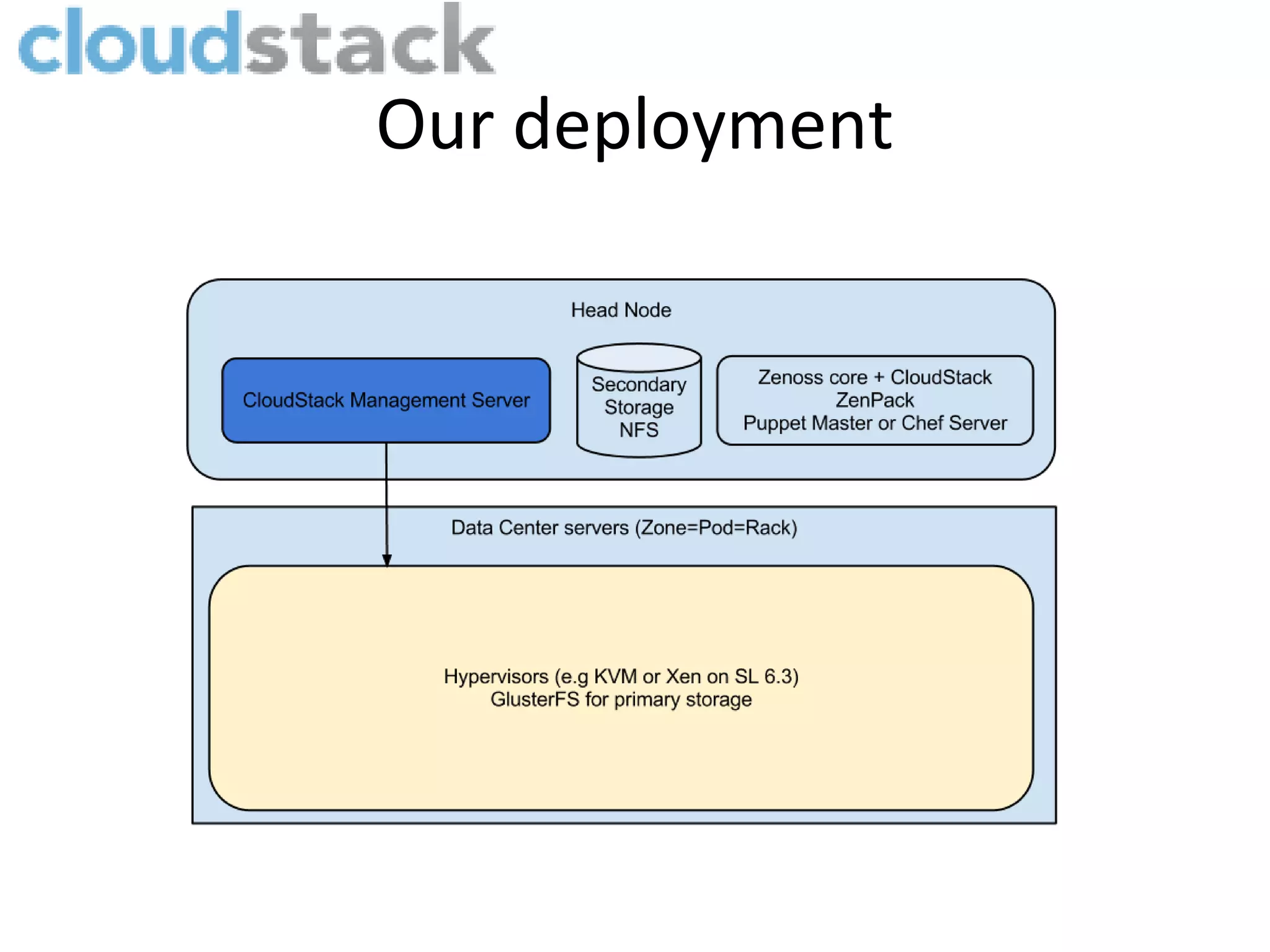

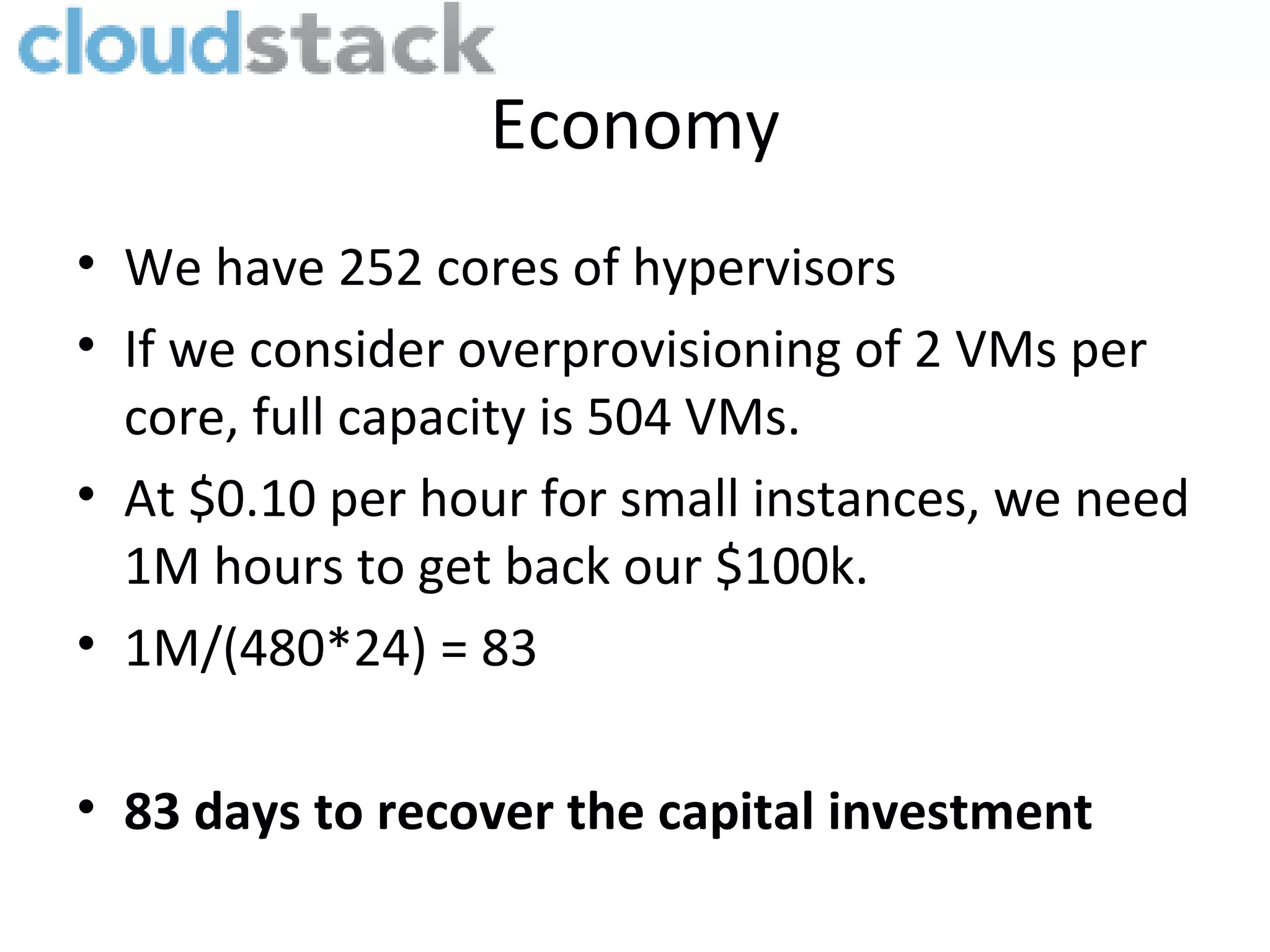

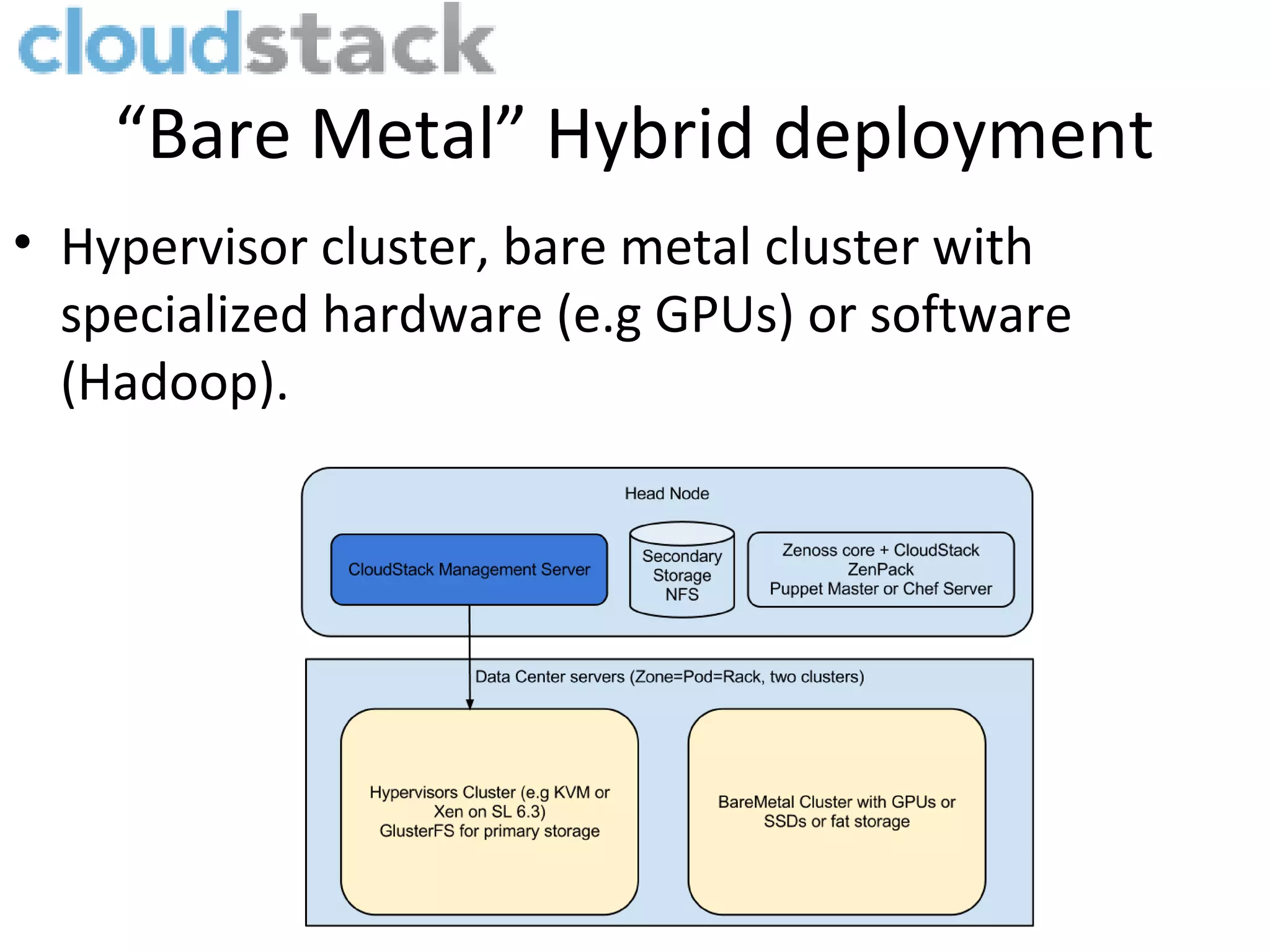

The document outlines a plan to build a private/public cloud infrastructure on a $100k budget, covering an IaaS implementation from hardware to software for small and medium-sized enterprises and academic research. It details the hardware setup, including Dell servers and networking equipment, and the software configuration, which uses Apache CloudStack and other open-source tools. The planned deployment provides more than 250 cores and aims to recover the investment from cloud-service revenue in approximately 83 days.
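As a rough sanity check on the payback claim, the sketch below works out what revenue the stated figures imply. It uses only the numbers from the summary ($100k budget, about 83 days, 250 cores as a lower bound for "over 250"). The function names and the `utilization` parameter are illustrative assumptions, not part of the original plan, and operating costs such as power, bandwidth, and staff are ignored.

```python
# Figures taken from the summary above.
BUDGET_USD = 100_000   # total hardware and software budget
PAYBACK_DAYS = 83      # stated approximate payback period
CORES = 250            # assumption: lower bound of "over 250 cores"


def required_daily_revenue(budget_usd: float, payback_days: float) -> float:
    """Revenue per day needed to recover the budget within the payback period."""
    return budget_usd / payback_days


def required_rate_per_core_hour(
    budget_usd: float,
    payback_days: float,
    cores: int,
    utilization: float = 1.0,  # assumption: fraction of core-hours actually sold
) -> float:
    """Price per core-hour needed to recover the budget, ignoring operating costs."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    billable_core_hours = payback_days * 24 * cores * utilization
    return budget_usd / billable_core_hours


if __name__ == "__main__":
    daily = required_daily_revenue(BUDGET_USD, PAYBACK_DAYS)
    full = required_rate_per_core_hour(BUDGET_USD, PAYBACK_DAYS, CORES)
    half = required_rate_per_core_hour(BUDGET_USD, PAYBACK_DAYS, CORES, utilization=0.5)
    print(f"Required revenue per day: ${daily:,.2f}")
    print(f"Required rate per core-hour at 100% utilization: ${full:.4f}")
    print(f"Required rate per core-hour at 50% utilization: ${half:.4f}")
```

Under these assumptions the plan needs roughly $1,205 of revenue per day, or about $0.20 per core-hour if every core is sold around the clock. Halving the utilization doubles the required rate to about $0.40 per core-hour, so the 83-day figure is sensitive to how much capacity is actually rented.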