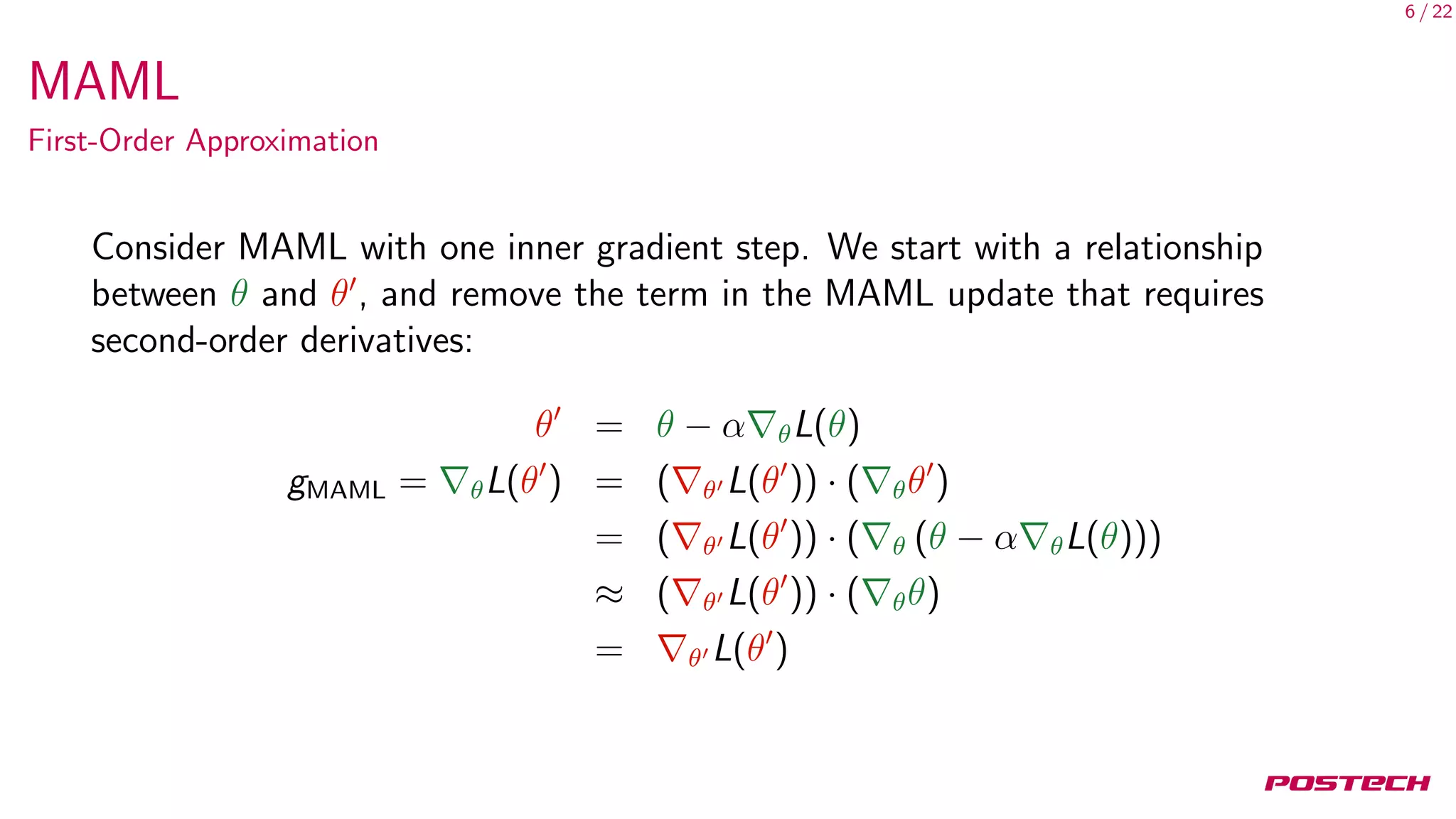

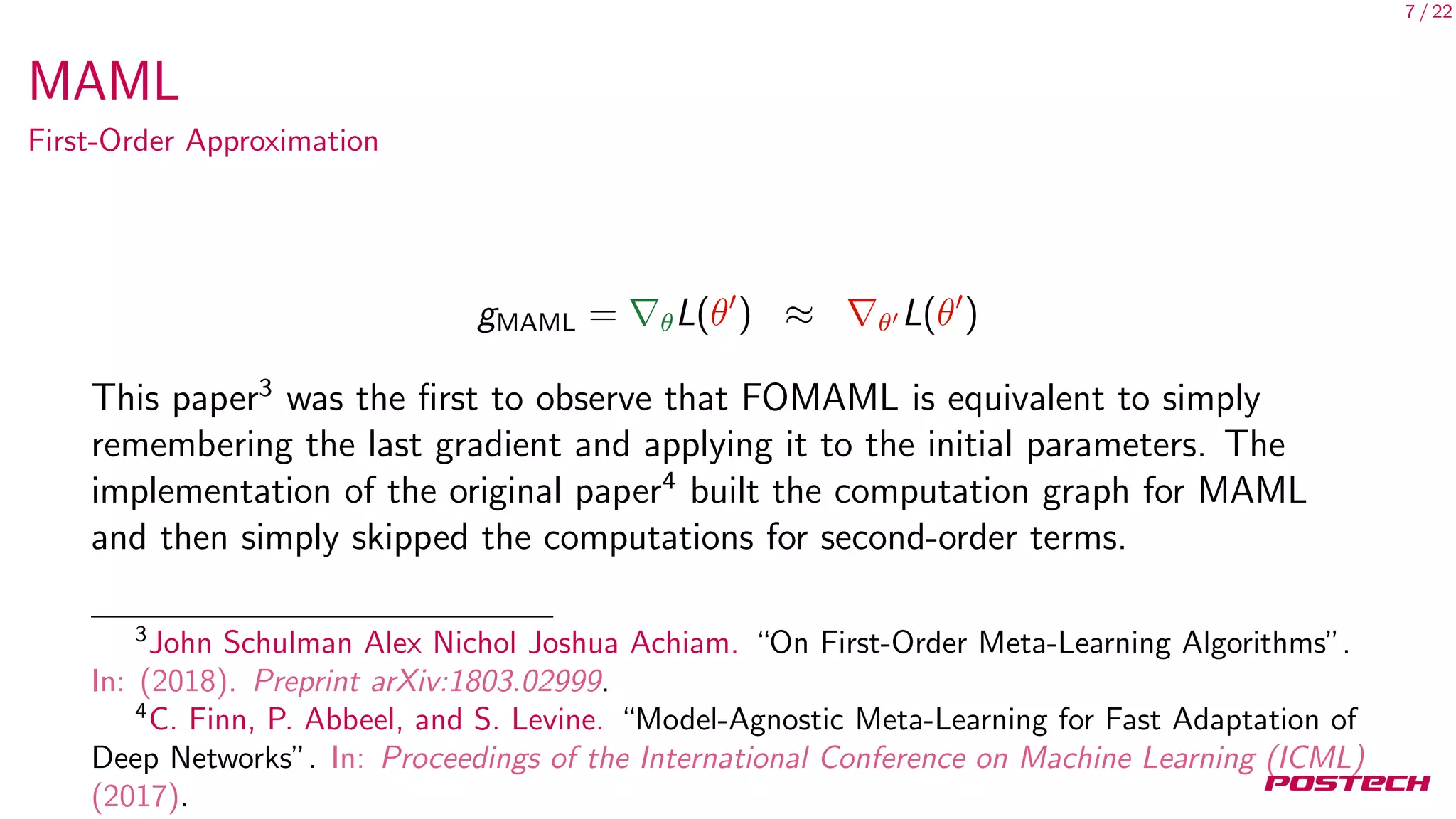

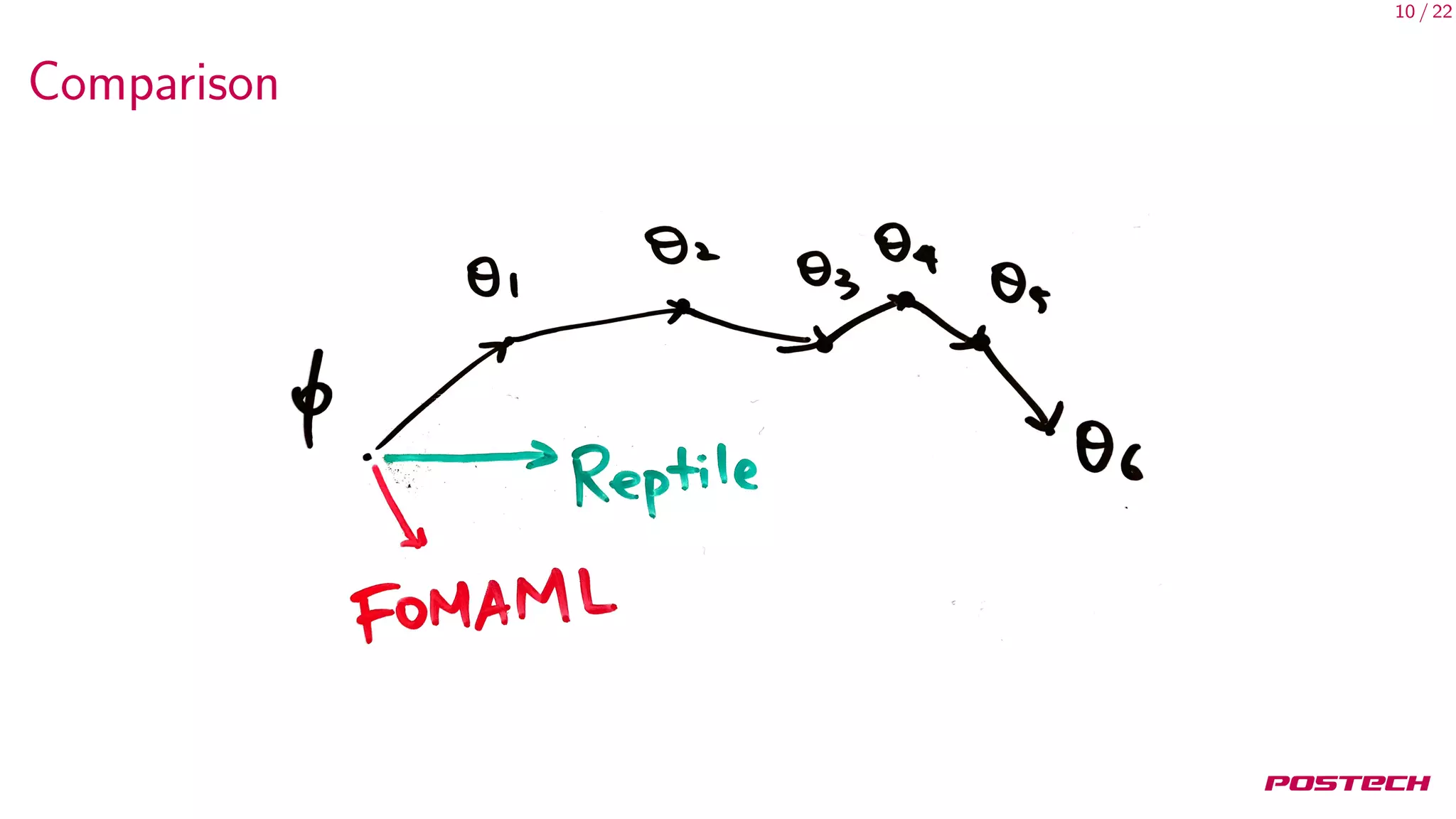

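This document summarizes and analyzes first-order meta-learning algorithms. It discusses first-order MAML (FOMAML), which approximates the MAML meta-gradient using only first-order information by dropping the second-derivative terms. FOMAML is equivalent to taking the gradient of the last inner-loop minibatch loss, evaluated at the adapted parameters, and applying it to the initial parameters. It also analyzes Reptile, which simply uses the parameter update produced by several SGD steps on a task as its meta-gradient (averaging these updates over tasks when tasks are batched). In expectation, the meta-gradients of MAML, FOMAML, and Reptile depend on only two quantities: the average gradient and the gradient of the average inner product between gradients from different minibatches. Experiments show that FOMAML and Reptile perform similarly. The analysis suggests that SGD may generalize well because it approximates MAML.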
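To make the two first-order meta-gradients concrete, here is a minimal NumPy sketch on a hypothetical family of toy tasks: each minibatch loss is a quadratic $\frac12(\phi - c)^\top A(\phi - c)$ with a task-specific center $c$ and a random diagonal curvature $A$ (this setup is an assumption, not taken from the slides). `fomaml_grad` returns the last minibatch gradient at the adapted parameters, and `reptile_grad` returns the scaled displacement $(\phi - \tilde\phi)/\alpha$; both are applied to the initial parameters with an outer step size `eps`. All function names and hyperparameters are illustrative.

```python
import numpy as np

def make_minibatch_grad(A, c):
    """Gradient of the hypothetical quadratic minibatch loss 0.5 * (phi - c)^T A (phi - c)."""
    def grad(phi):
        return A @ (phi - c)
    return grad

def inner_sgd(phi, grad_fns, alpha):
    """Plain SGD with one step per minibatch gradient; returns every iterate, starting with phi."""
    iterates = [phi]
    for grad in grad_fns:
        phi = phi - alpha * grad(phi)
        iterates.append(phi)
    return iterates

def fomaml_grad(phi, grad_fns, alpha):
    # Adapt on the first k-1 minibatches, then take the last minibatch's gradient at the
    # adapted point; the caller applies it directly to the initial parameters phi.
    adapted = inner_sgd(phi, grad_fns[:-1], alpha)[-1]
    return grad_fns[-1](adapted)

def reptile_grad(phi, grad_fns, alpha):
    # Run all k inner steps and treat the scaled displacement (phi - phi_tilde) / alpha
    # as the meta-gradient, i.e. move phi toward the adapted parameters.
    adapted = inner_sgd(phi, grad_fns, alpha)[-1]
    return (phi - adapted) / alpha

def sample_task(rng, dim=2, k=4):
    # Hypothetical task family: random center c, k minibatches with random diagonal curvature.
    c = rng.normal(size=dim)
    return [make_minibatch_grad(np.diag(rng.uniform(0.5, 1.5, size=dim)), c) for _ in range(k)]

def meta_train(meta_grad, steps=2000, alpha=0.1, eps=0.01, seed=0):
    """Outer loop: phi <- phi - eps * meta_grad, with one freshly sampled task per step."""
    rng = np.random.default_rng(seed)
    phi = np.zeros(2)
    for _ in range(steps):
        phi = phi - eps * meta_grad(phi, sample_task(rng), alpha)
    return phi

print("FOMAML initialization: ", meta_train(fomaml_grad))
print("Reptile initialization:", meta_train(reptile_grad))
```

With a single inner step ($k = 1$) both functions reduce to the ordinary minibatch gradient, and for any $k$ the Reptile meta-gradient equals the sum of the inner-loop gradients, which is where its factor $k$ on the average-gradient term comes from.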

![16 / 22

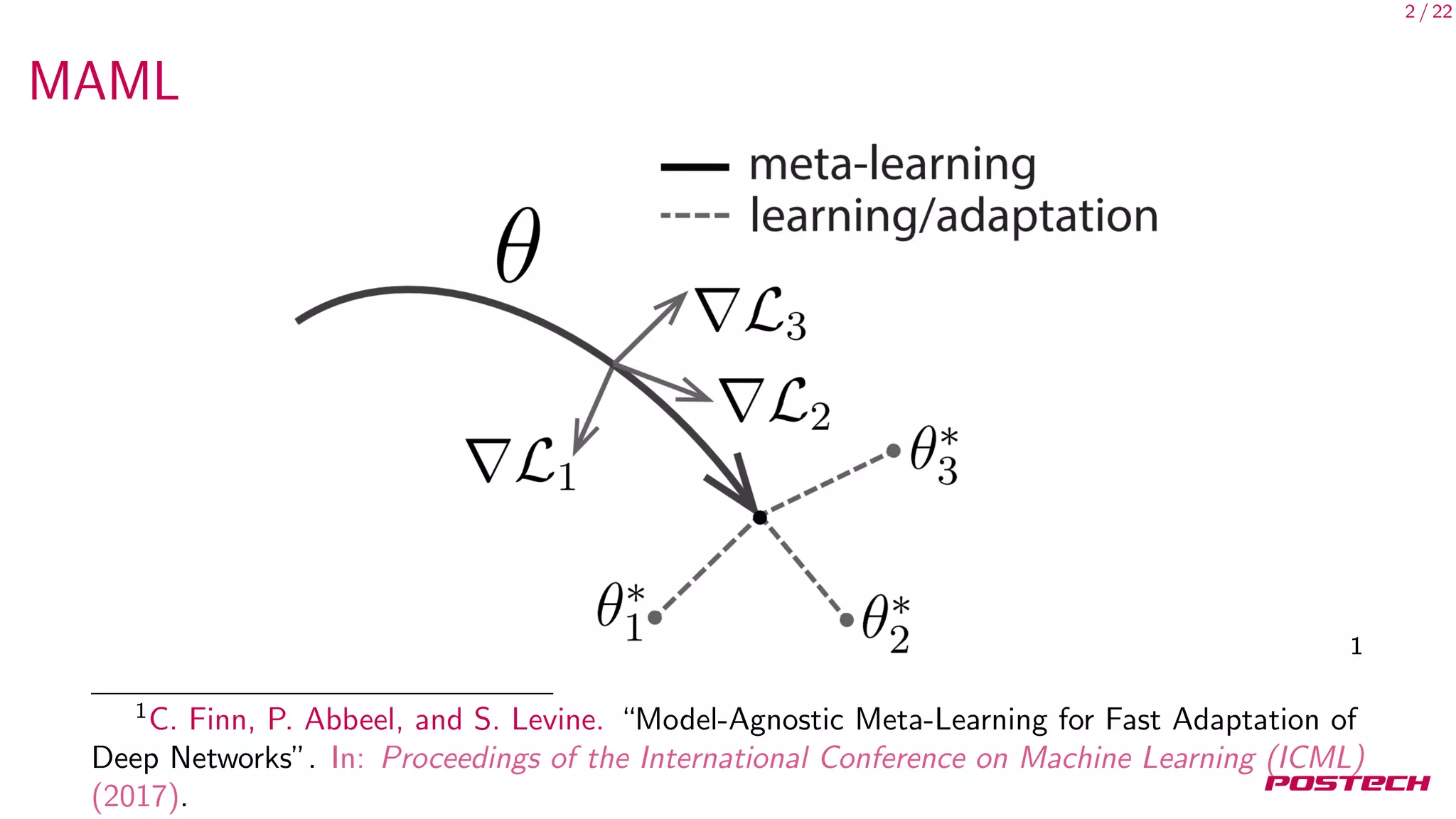

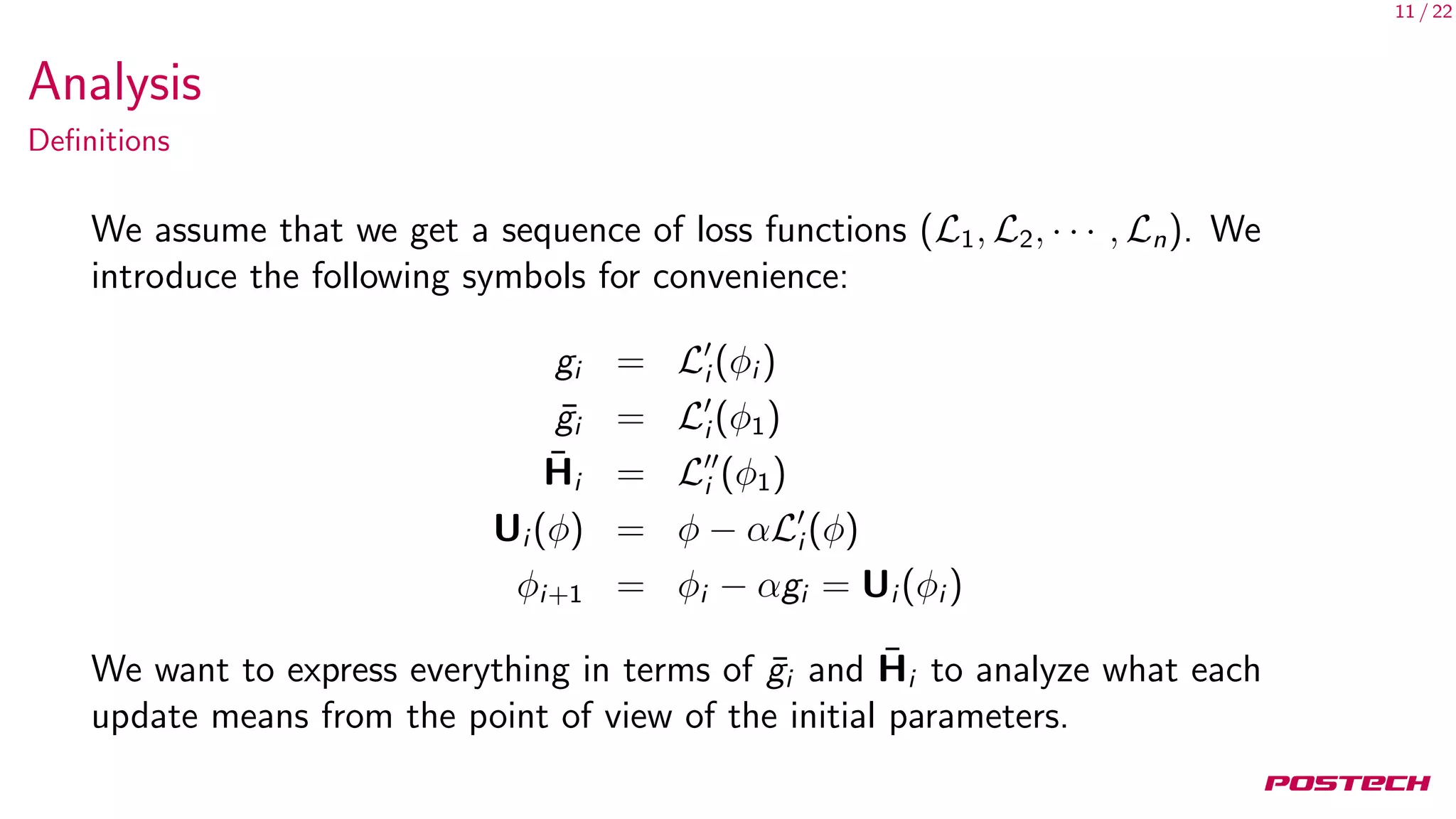

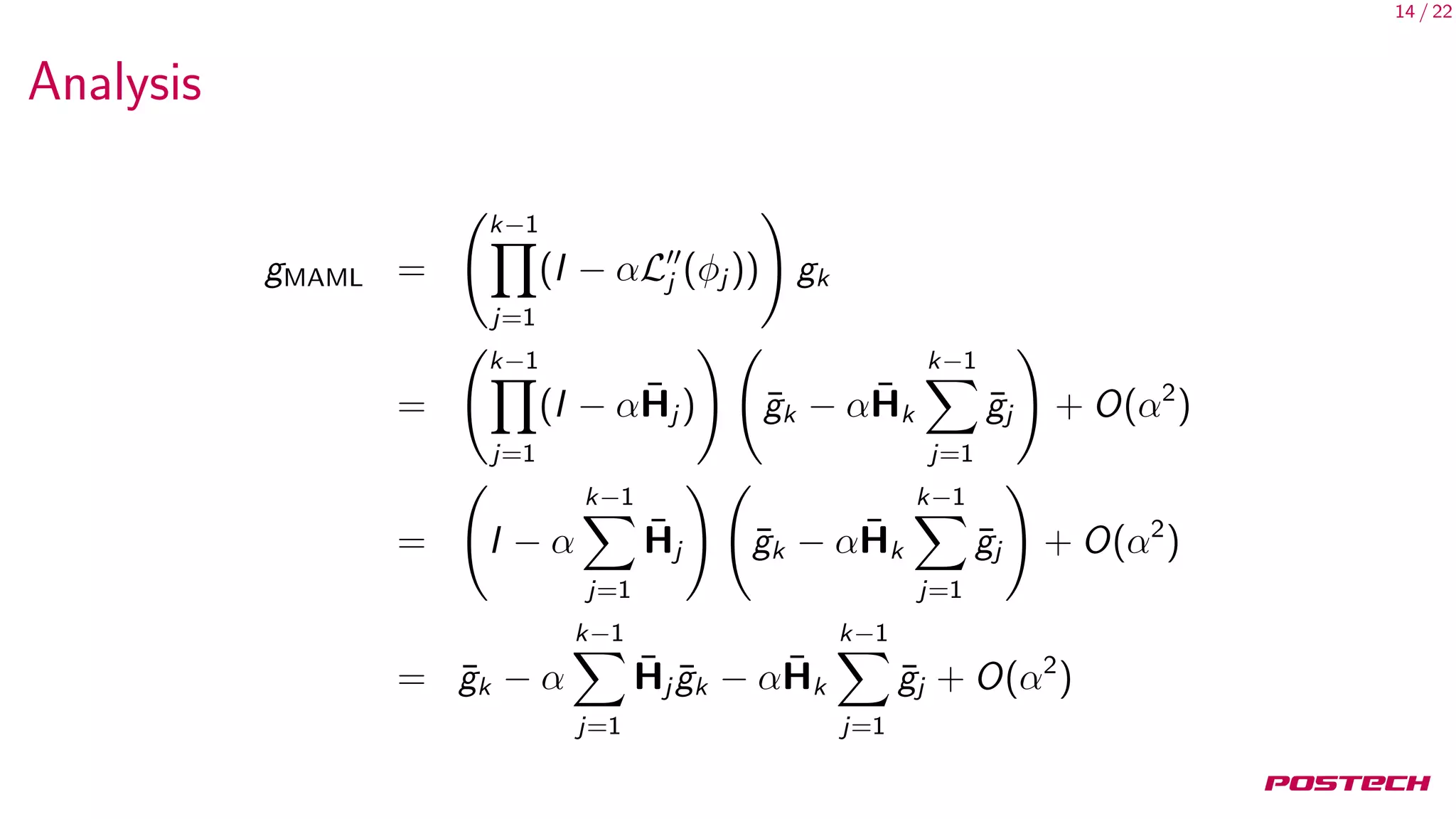

Analysis

Since loss functions are exchangeable (losses are typically computed over

minibatches randomly taken from a larger set),

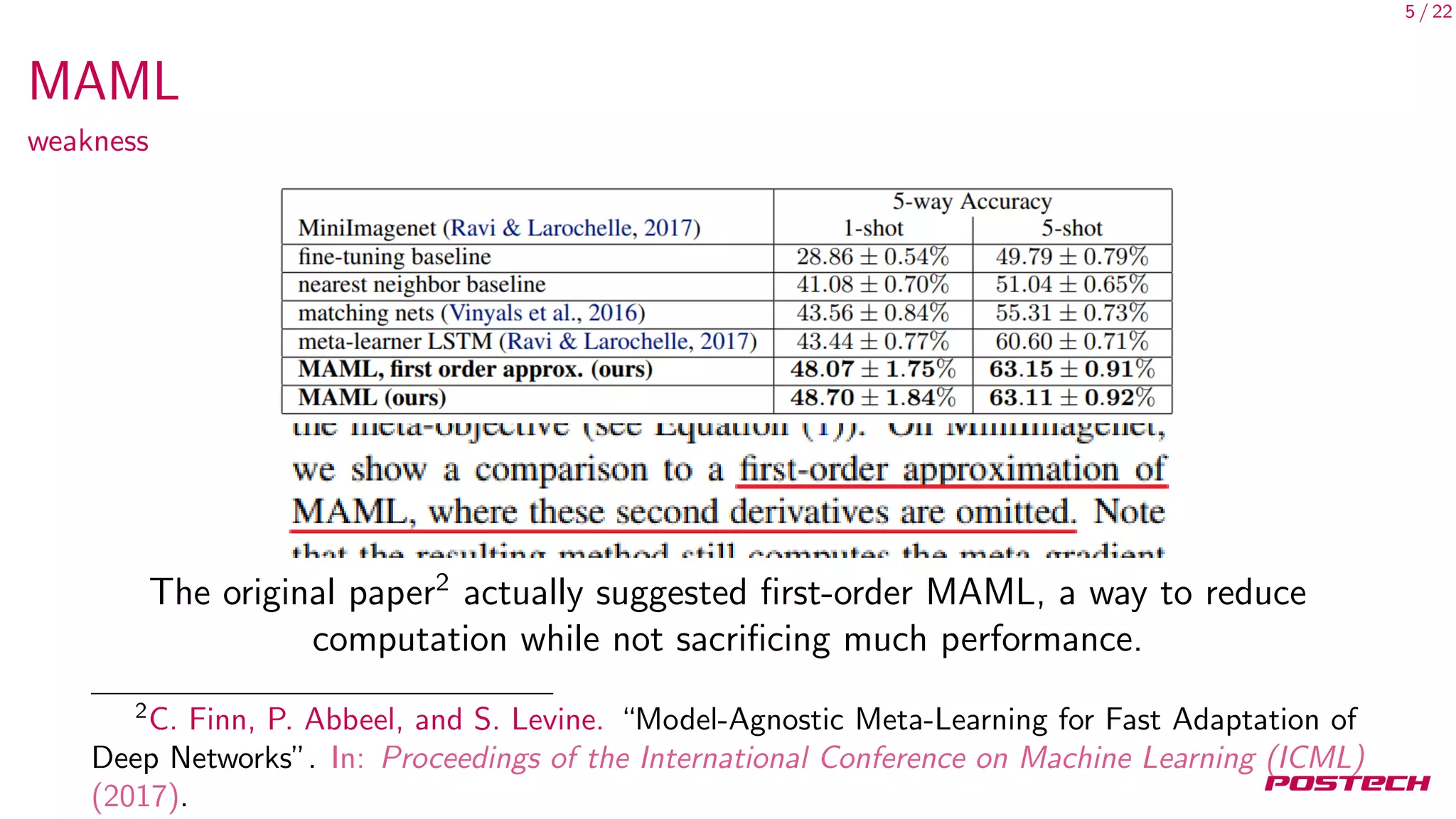

E[¯g1] = E[¯g2] = · · ·

Similarly,

E[ ¯Hi ¯gj ] =

1

2

E[ ¯Hi ¯gj + ¯Hj ¯gi ]

=

1

2

E[

∂

∂φ1

(¯gi · ¯gj )]](https://image.slidesharecdn.com/main-180517050811/75/On-First-Order-Meta-Learning-Algorithms-16-2048.jpg)
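The last equality can be checked numerically. The sketch below assumes two hypothetical non-quadratic minibatch losses, $L(\phi) = \frac12\phi^\top A\phi + b^\top\phi + \frac14\sum_d w_d\phi_d^4$, with analytic gradients and symmetric Hessians, and compares $\bar H_1\bar g_2 + \bar H_2\bar g_1$ against a central finite-difference gradient of $\phi \mapsto \bar g_1(\phi)\cdot\bar g_2(\phi)$. The loss family, the step `h`, and the helper names are illustrative choices. The first equality (exchangeability) is a property of the sampling distribution and is not exercised here.

```python
import numpy as np

def make_loss(rng, dim):
    """Hypothetical loss 0.5 phi^T A phi + b^T phi + 0.25 * sum(w * phi**4); returns grad, Hessian."""
    M = rng.normal(size=(dim, dim))
    A = M @ M.T  # symmetric, so the Hessian below is symmetric too
    b = rng.normal(size=dim)
    w = rng.uniform(0.1, 1.0, size=dim)

    def grad(phi):
        return A @ phi + b + w * phi**3

    def hess(phi):
        return A + np.diag(3.0 * w * phi**2)

    return grad, hess

def inner_product_grad_fd(grad_i, grad_j, phi, h=1e-5):
    """Central finite-difference gradient of phi -> grad_i(phi) . grad_j(phi)."""
    out = np.zeros_like(phi)
    for d in range(phi.size):
        e = np.zeros_like(phi)
        e[d] = h
        f_plus = grad_i(phi + e) @ grad_j(phi + e)
        f_minus = grad_i(phi - e) @ grad_j(phi - e)
        out[d] = (f_plus - f_minus) / (2 * h)
    return out

rng = np.random.default_rng(0)
grad1, hess1 = make_loss(rng, 3)
grad2, hess2 = make_loss(rng, 3)
phi1 = rng.normal(size=3)

analytic = hess1(phi1) @ grad2(phi1) + hess2(phi1) @ grad1(phi1)  # Hbar_1 gbar_2 + Hbar_2 gbar_1
numeric = inner_product_grad_fd(grad1, grad2, phi1)
print("max abs difference:", np.max(np.abs(analytic - numeric)))
```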

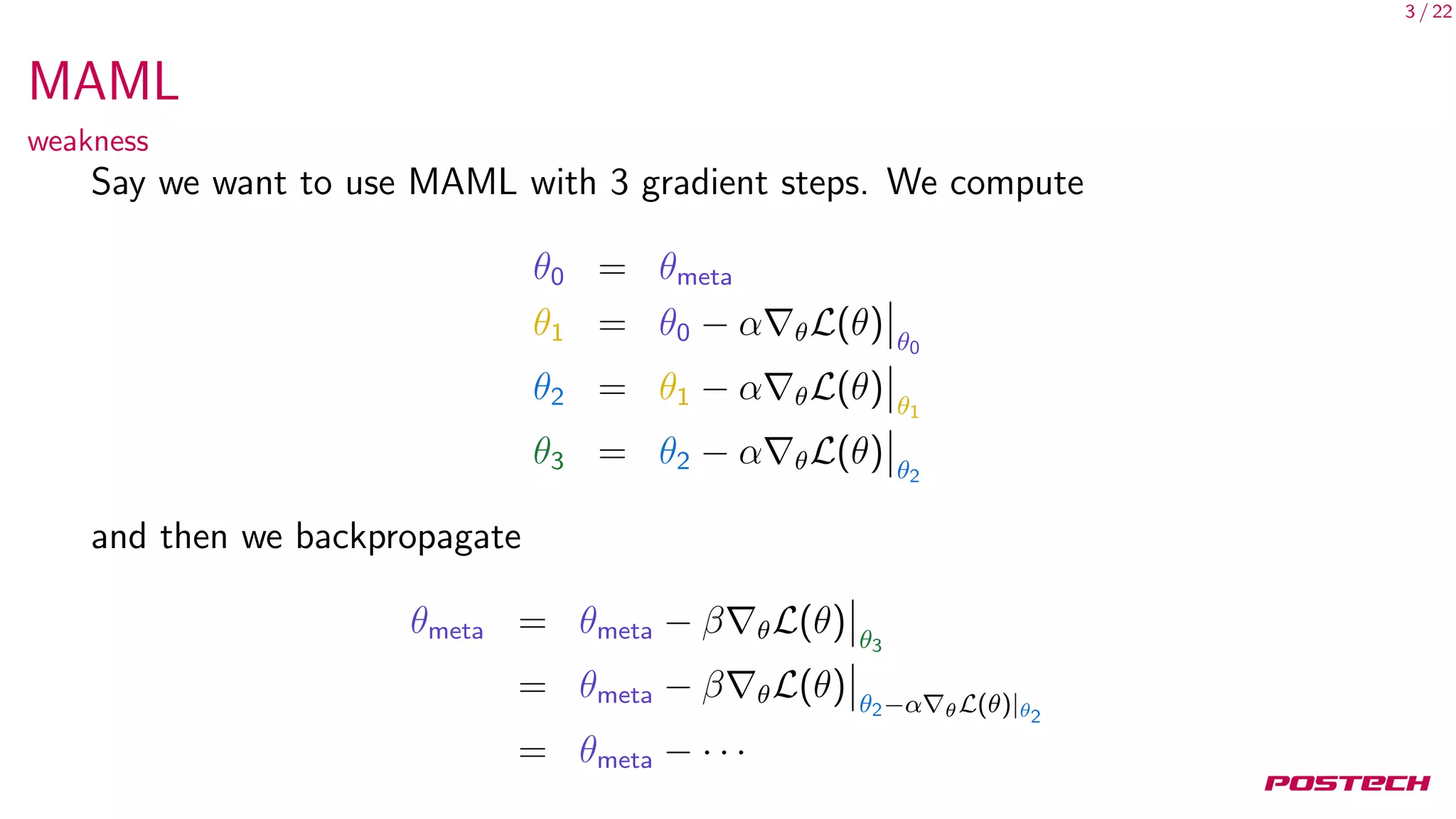

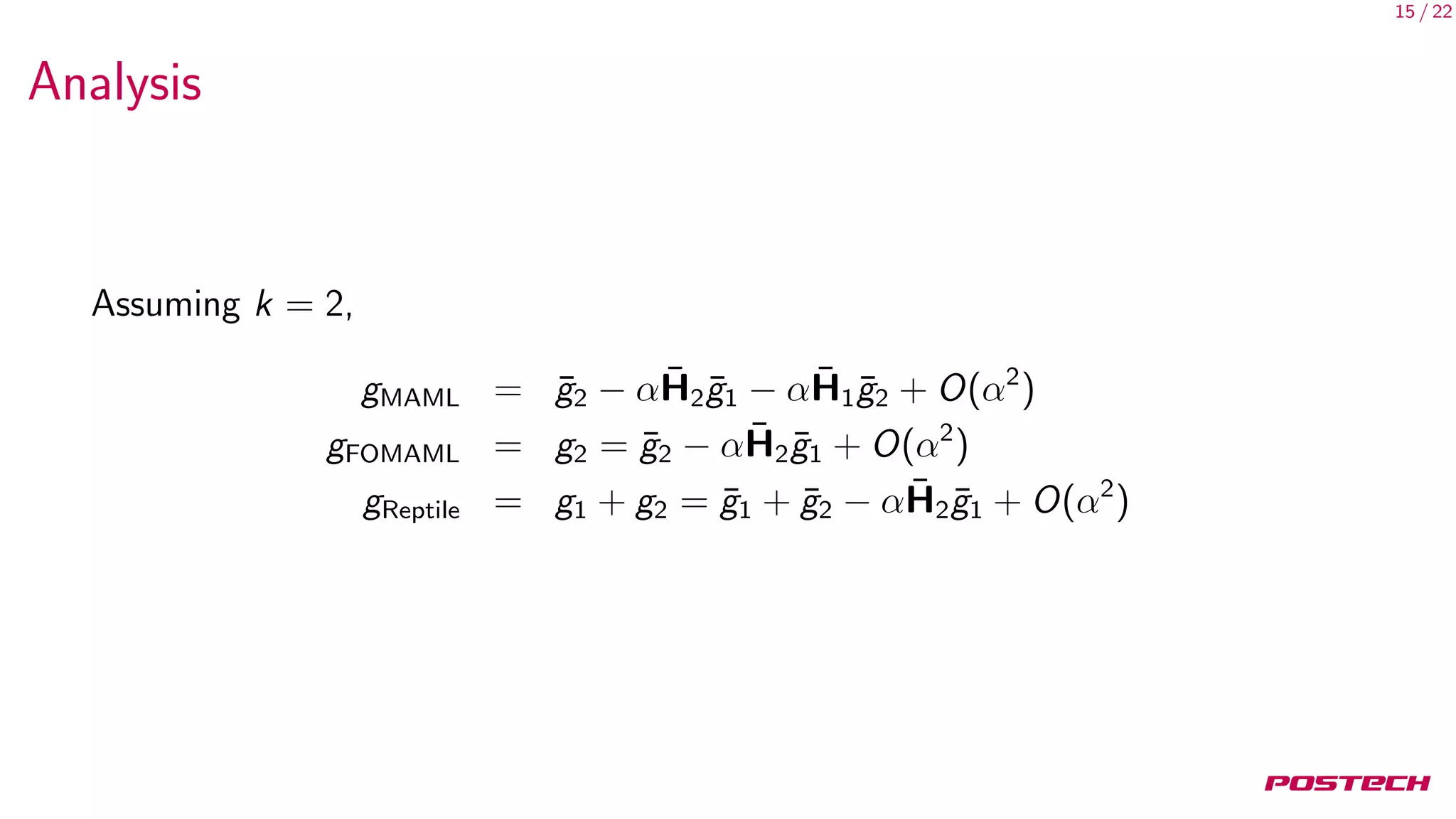

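![Slide 17 of 22](https://image.slidesharecdn.com/main-180517050811/75/On-First-Order-Meta-Learning-Algorithms-17-2048.jpg)

Analysis

Therefore, in expectation, there are only two kinds of terms:

$$\text{AvgGrad} = \mathbb{E}[\bar g_i]$$

$$\text{AvgGradInner} = \mathbb{E}[\bar H_i \bar g_j] = \frac{1}{2}\,\mathbb{E}\!\left[\frac{\partial}{\partial \phi_1}(\bar g_i \cdot \bar g_j)\right], \quad i \neq j$$

We now return to gradient-based meta-learning with $k$ inner-loop steps. To leading order in the inner step size $\alpha$:

$$
\begin{aligned}
\mathbb{E}[g_{\text{MAML}}] &= (1)\,\text{AvgGrad} - (2k-2)\,\alpha\,\text{AvgGradInner} + O(\alpha^2) \\
\mathbb{E}[g_{\text{FOMAML}}] &= (1)\,\text{AvgGrad} - (k-1)\,\alpha\,\text{AvgGradInner} + O(\alpha^2) \\
\mathbb{E}[g_{\text{Reptile}}] &= (k)\,\text{AvgGrad} - \tfrac{1}{2}k(k-1)\,\alpha\,\text{AvgGradInner} + O(\alpha^2)
\end{aligned}
$$

Since $-\text{AvgGradInner}$ points in the direction that increases the inner product between gradients of different minibatches, all three methods combine minimizing the expected loss with improving within-task generalization; they differ only in how the two terms are weighted.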
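These expectations can be sanity-checked on a toy problem. The sketch assumes $k$ hypothetical quadratic minibatch losses with gradients $A_i\phi + b_i$ (so $\bar H_i = A_i$), and assumes the per-sample expansions from the derivation in [1] that these slides summarize: $g_{\text{FOMAML}} \approx \bar g_k - \alpha\bar H_k\sum_{j<k}\bar g_j$, $g_{\text{MAML}} \approx g_{\text{FOMAML}} - \alpha\sum_{j<k}\bar H_j\bar g_k$, and $g_{\text{Reptile}} \approx \sum_i \bar g_i - \alpha\sum_i\sum_{j<i}\bar H_i\bar g_j$. It compares these with the exact meta-gradients (MAML via the chain rule through the $k-1$ adaptation steps) and checks that the error shrinks like $\alpha^2$. `expected_coefficients` then counts the cross terms $\bar H_i\bar g_j$ with $i \neq j$, each of which has expectation AvgGradInner. All names are illustrative.

```python
import numpy as np

def make_task(rng, k, dim):
    """Hypothetical task: k quadratic minibatch losses with gradient A_i phi + b_i, Hessian A_i."""
    As, bs = [], []
    for _ in range(k):
        M = rng.normal(size=(dim, dim))
        As.append(M @ M.T / dim)  # symmetric positive semidefinite Hessian
        bs.append(rng.normal(size=dim))
    return As, bs

def exact_meta_grads(phi1, As, bs, alpha):
    """Exact MAML, FOMAML and Reptile meta-gradients for k inner SGD steps."""
    phis, grads = [phi1], []
    for A, b in zip(As, bs):  # phi_{i+1} = phi_i - alpha * L_i'(phi_i)
        grads.append(A @ phis[-1] + b)
        phis.append(phis[-1] - alpha * grads[-1])
    g_fomaml = grads[-1]      # L_k'(phi_k)
    g_reptile = sum(grads)    # equals (phi_1 - phi_{k+1}) / alpha
    g_maml = g_fomaml
    for A in reversed(As[:-1]):  # U_1' ... U_{k-1}' L_k'(phi_k), with U_i' = I - alpha A_i
        g_maml = (np.eye(phi1.size) - alpha * A) @ g_maml
    return g_maml, g_fomaml, g_reptile

def leading_order(phi1, As, bs, alpha):
    """Per-sample expansions to first order in alpha, using gbar_i and Hbar_i at phi_1."""
    gbar = [A @ phi1 + b for A, b in zip(As, bs)]
    k = len(As)
    g_fomaml = gbar[-1] - alpha * sum(As[-1] @ gbar[j] for j in range(k - 1))
    g_maml = g_fomaml - alpha * sum(As[j] @ gbar[-1] for j in range(k - 1))
    g_reptile = sum(gbar) - alpha * sum(As[i] @ gbar[j] for i in range(k) for j in range(i))
    return g_maml, g_fomaml, g_reptile

def expected_coefficients(k):
    """(AvgGrad coefficient, number of Hbar_i gbar_j cross terms with i != j) per method.
    Each cross term contributes -alpha * AvgGradInner in expectation."""
    fomaml_pairs = [(k, j) for j in range(1, k)]
    maml_pairs = fomaml_pairs + [(j, k) for j in range(1, k)]
    reptile_pairs = [(i, j) for i in range(1, k + 1) for j in range(1, i)]
    return {"MAML": (1, len(maml_pairs)), "FOMAML": (1, len(fomaml_pairs)),
            "Reptile": (k, len(reptile_pairs))}

rng = np.random.default_rng(0)
As, bs = make_task(rng, k=4, dim=3)
phi1 = rng.normal(size=3)

def expansion_errors(alpha):
    exact = exact_meta_grads(phi1, As, bs, alpha)
    approx = leading_order(phi1, As, bs, alpha)
    return [float(np.linalg.norm(e - a)) for e, a in zip(exact, approx)]

for alpha in (1e-2, 5e-3, 2.5e-3):
    # Errors for (MAML, FOMAML, Reptile); each should drop roughly 4x per halving of alpha.
    print(alpha, expansion_errors(alpha))
print(expected_coefficients(4))
```

For $k = 4$ the counts give $6\alpha$, $3\alpha$, and $6\alpha$ on AvgGradInner against AvgGrad coefficients of 1, 1, and 4, matching $(2k-2)$, $(k-1)$, and $\frac12 k(k-1)$.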

![Slide 22 of 22](https://image.slidesharecdn.com/main-180517050811/75/On-First-Order-Meta-Learning-Algorithms-22-2048.jpg)

References

[1] A. Nichol, J. Achiam, and J. Schulman. “On First-Order Meta-Learning Algorithms”. Preprint arXiv:1803.02999, 2018.

[2] C. Finn, P. Abbeel, and S. Levine. “Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks”. In: Proceedings of the International Conference on Machine Learning (ICML), 2017.