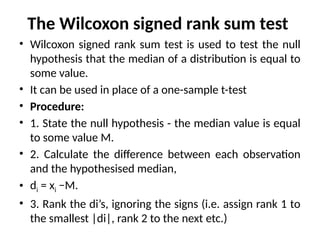

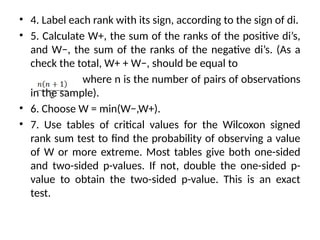

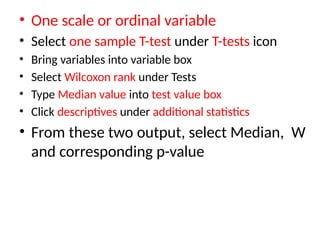

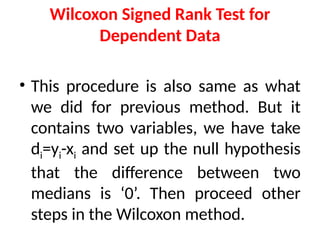

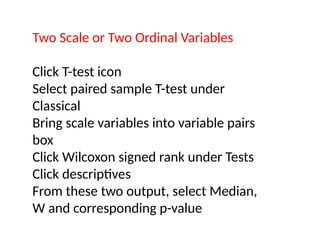

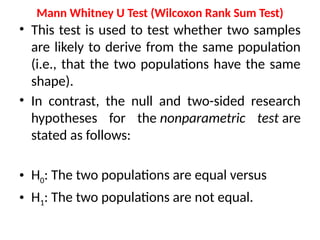

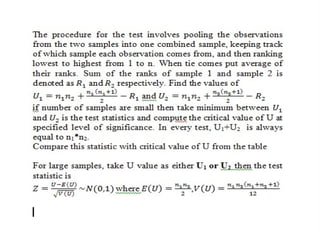

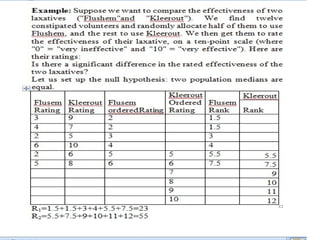

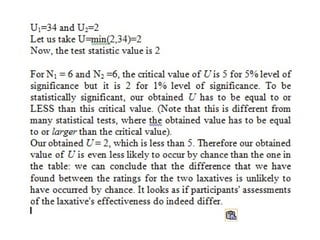

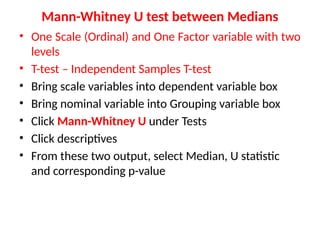

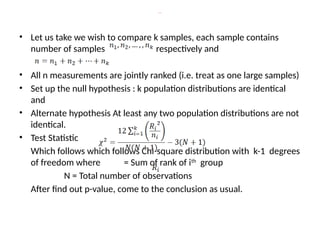

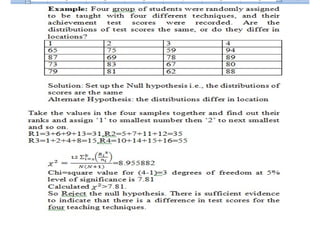

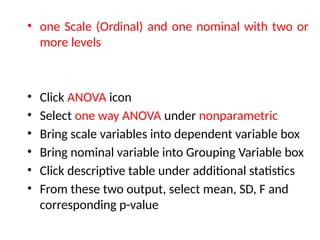

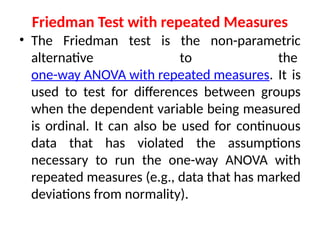

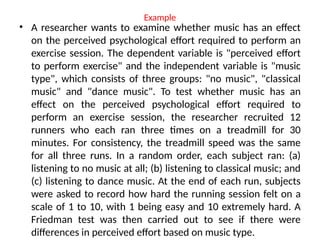

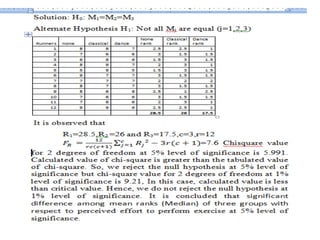

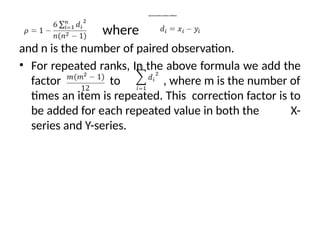

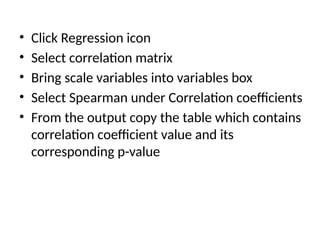

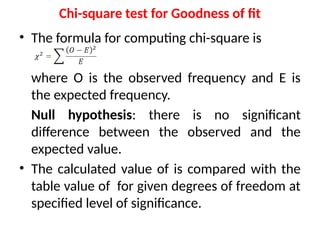

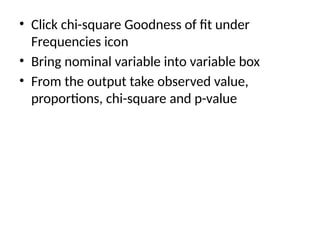

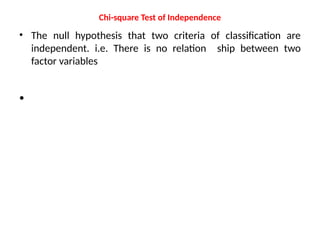

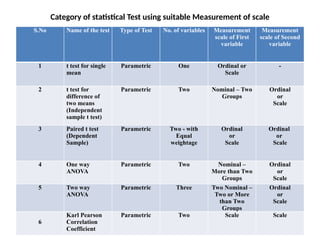

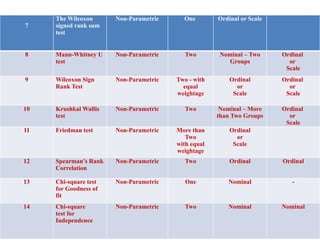

This document discusses nonparametric statistical tests, including the Wilcoxon signed rank sum test, the Mann-Whitney U test, the Kruskal-Wallis test, and the Friedman test. For each test it explains the procedure, the hypotheses, and the applications to ordinal and nominal data, with step-by-step instructions for carrying the test out. These tests are used when the assumptions of parametric tests cannot be met. The document also includes a table that categorizes each test by the number of variables and the measurement scale.
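Several of the tests named above work on ranks of the pooled observations rather than on the raw values. As a rough illustration only, the sketch below computes the Mann-Whitney U statistic and the Kruskal-Wallis H statistic using the Python standard library. The function names (`average_ranks`, `mann_whitney_u`, `kruskal_wallis_h`) are hypothetical and not taken from the slides; the sketch assumes tied values receive the mean of their ranks, omits the tie correction for H, and does not compute p-values or consult critical-value tables.

```python
from itertools import chain


def average_ranks(values):
    """Rank values from 1..n; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        # Extend j to cover the run of values tied with position i.
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Return the smaller of U1 and U2 for two independent samples."""
    ranks = average_ranks(list(x) + list(y))
    rank_sum_x = sum(ranks[:len(x)])
    u1 = rank_sum_x - len(x) * (len(x) + 1) / 2
    u2 = len(x) * len(y) - u1
    return min(u1, u2)


def kruskal_wallis_h(*groups):
    """Return H for k independent samples (no tie correction applied)."""
    pooled = list(chain.from_iterable(groups))
    ranks = average_ranks(pooled)
    n = len(pooled)
    total, start = 0.0, 0
    for group in groups:
        rank_sum = sum(ranks[start:start + len(group)])
        total += rank_sum ** 2 / len(group)
        start += len(group)
    return 12 / (n * (n + 1)) * total - 3 * (n + 1)


if __name__ == "__main__":
    print(mann_whitney_u([1, 2, 3], [4, 5, 6]))
    print(kruskal_wallis_h([1, 2, 3], [4, 5, 6], [7, 8, 9]))
```

In practice the resulting statistic would be compared against a critical value or converted to a p-value, which this sketch leaves out.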