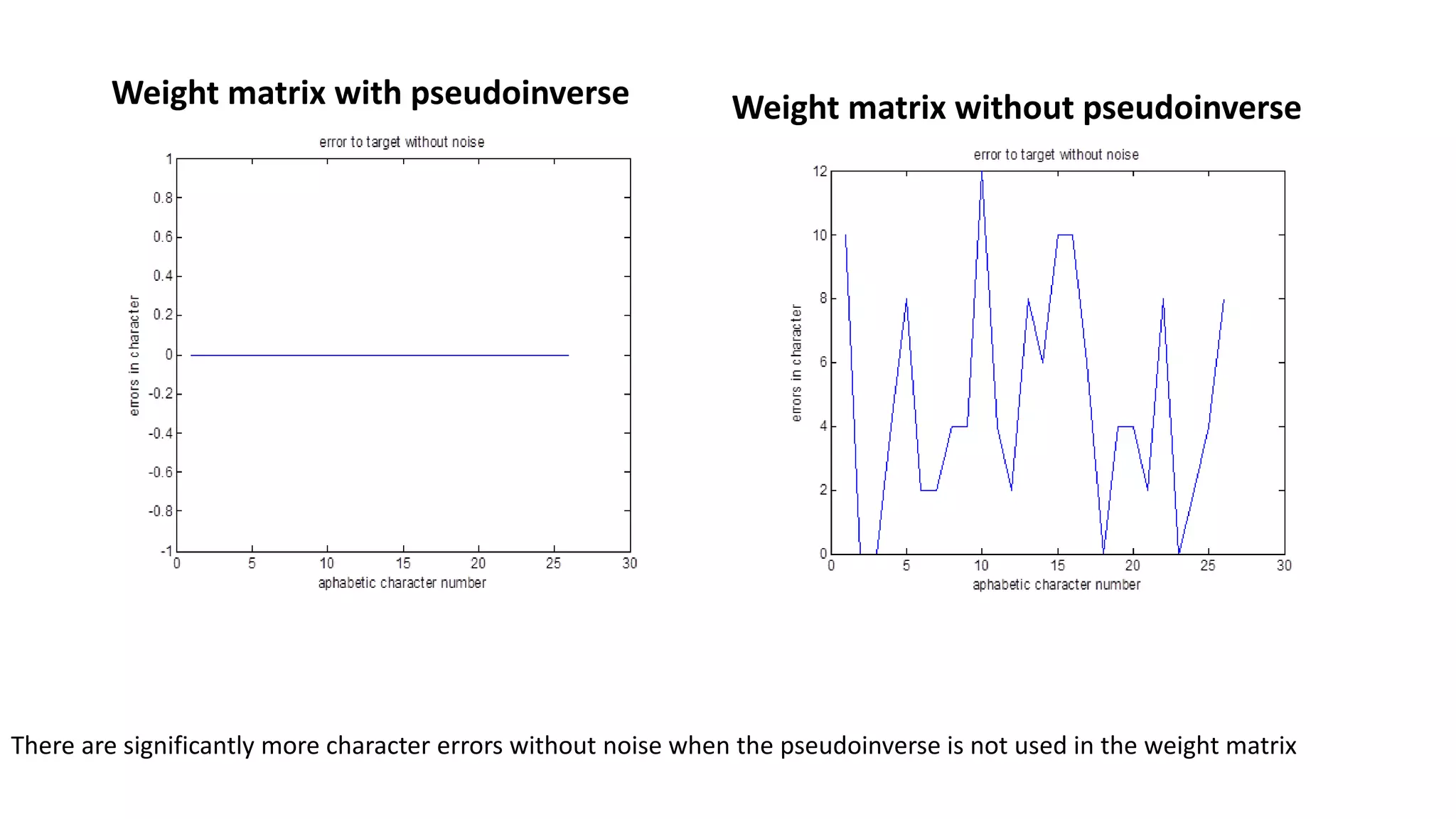

The document compares the performance of an autoassociative memory whose weight matrix is computed with and without the pseudoinverse. It finds that the pseudoinverse limits the range of values in the weight matrix and improves performance both with and without noise. Specifically, without the pseudoinverse there are significantly more character errors even on noise-free input, and the memory handles noisy input much better when the pseudoinverse is used to calculate the weight matrix.
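The comparison summarized above can be tried out with a minimal NumPy sketch. Everything in it is an assumption made for illustration, not the deck's actual experiment: the random bipolar patterns (standing in for 5x7 characters), the seed, the number of flipped bits, and the helper names `hardlims`, `hebbian_weights`, `pseudoinverse_weights`, `recall`, and `flip_bits`. The Hebbian outer-product rule is assumed here as the "without pseudoinverse" baseline.

```python
import numpy as np

def hardlims(a):
    """Symmetric hard limit: map each entry to +1 or -1."""
    return np.where(a >= 0, 1, -1)

def hebbian_weights(P):
    """Outer-product (Hebbian) rule: W = P P^T (assumed baseline)."""
    return P @ P.T

def pseudoinverse_weights(P):
    """Pseudoinverse rule: W = P P^+, the projector onto the stored patterns."""
    return P @ np.linalg.pinv(P)

def recall(W, X):
    """One-step recall for every column of X."""
    return hardlims(W @ X)

def flip_bits(P, n_flips, rng):
    """Copy P and negate n_flips randomly chosen entries in each column."""
    noisy = P.copy()
    for k in range(P.shape[1]):
        idx = rng.choice(P.shape[0], size=n_flips, replace=False)
        noisy[idx, k] *= -1
    return noisy

rng = np.random.default_rng(0)          # fixed seed, arbitrary choice
P = rng.choice([-1, 1], size=(35, 6))   # 6 hypothetical 5x7 patterns as columns

W_hebb = hebbian_weights(P)
W_pinv = pseudoinverse_weights(P)
noisy = flip_bits(P, n_flips=4, rng=rng)

for name, W in [("Hebbian", W_hebb), ("pseudoinverse", W_pinv)]:
    clean_errors = int(np.sum(recall(W, P) != P))
    noisy_errors = int(np.sum(recall(W, noisy) != P))
    print(f"{name}: weight range [{float(W.min()):.2f}, {float(W.max()):.2f}], "
          f"errors without noise = {clean_errors}, with noise = {noisy_errors}")
```

Because `W_pinv` is an orthogonal projector, its entries stay within [-1, 1] and noise-free stored patterns are reproduced exactly, while the Hebbian entries grow with the number of stored patterns. The error counts on noisy input depend on the patterns and the noise level chosen, so they should be read as an illustration only.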

![An autoassociative memory retrieves the previously stored pattern that most closely resembles the current input pattern. Given m stored patterns y(1), y(2), …, y(m), a stored pattern vector y(k) can be recovered from a noisy version of y(k).
Heteroassociative memory: the retrieved pattern is, in general, different from the input pattern, not only in content but possibly also in type and format. Given m stored pairs {c(1), y(1)}, {c(2), y(2)}, …, {c(m), y(m)}, the memory outputs the pattern vector y(k) when a noisy or incomplete version of c(k) is input.](https://image.slidesharecdn.com/nn-171228055542/75/Neural-network-3-2048.jpg)
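The heteroassociative case on this slide can be sketched in a few lines of NumPy. The sketch assumes a linear associator trained with the pseudoinverse rule, W = Y C^+, which is one common choice and not necessarily the one the deck uses. The toy keys `C`, targets `Y`, and the helper `hardlims` are hypothetical values and names chosen for illustration.

```python
import numpy as np

def hardlims(a):
    """Symmetric hard limit: map each entry to +1 or -1."""
    return np.where(a >= 0, 1, -1)

# Keys c(k) are the columns of C, targets y(k) the columns of Y (toy values).
C = np.array([[ 1,  1],
              [-1,  1],
              [ 1, -1],
              [-1, -1]])
Y = np.array([[ 1, -1],
              [-1,  1]])

# Pseudoinverse rule (assumed here): W maps each key c(k) to its target y(k).
W = Y @ np.linalg.pinv(C)

noisy_c1 = np.array([1, -1, 1, 1])   # c(1) with its last element flipped
retrieved = hardlims(W @ noisy_c1)   # recovers y(1) for this input
print(retrieved)
```

Note that the keys have four elements and the targets two, which is the sense in which the retrieved pattern can differ from the input in format as well as content.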
