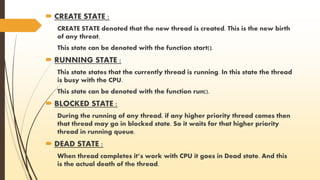

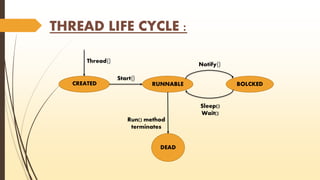

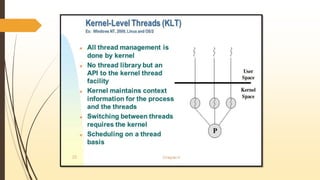

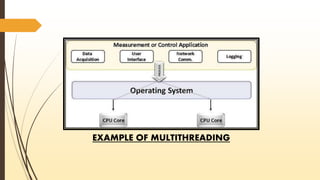

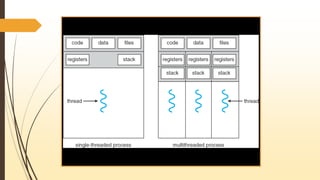

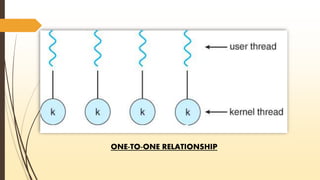

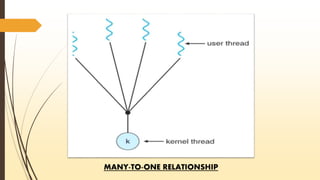

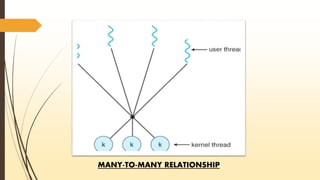

The document provides an overview of threads in computing, including their definition, states, types (kernel-level and user-level), and advantages and disadvantages. It explains multithreading, threading models (one-to-one, many-to-one, many-to-many), and threading issues such as signal handling and thread scheduling. Additionally, it discusses POSIX threads (pthreads) and references relevant literature.
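As a rough illustration of the POSIX threads (pthreads) interface mentioned above, the C sketch below creates a few threads and waits for each one to finish. It is not taken from the slides: the names `worker`, `worker_arg`, `sum_of_squares`, and `MAX_WORKERS` are hypothetical and chosen only for this example. Only `pthread_create` and `pthread_join` belong to the pthreads API.

```c
#include <assert.h>
#include <pthread.h>

#define MAX_WORKERS 16 /* hypothetical upper bound for this sketch */

/* Per-thread argument. Each thread gets its own slot, so no locking is needed. */
struct worker_arg {
    int id;     /* input: index of the worker */
    int result; /* output: value computed by the worker */
};

/* Thread entry point: stores the square of the worker's id. */
static void *worker(void *p) {
    struct worker_arg *arg = p;
    arg->result = arg->id * arg->id;
    return NULL;
}

/* Starts n threads, joins them all, and returns the sum of their results.
 * Returns -1 if any thread could not be created or joined. */
int sum_of_squares(int n) {
    assert(n >= 0 && n <= MAX_WORKERS);

    pthread_t tids[MAX_WORKERS];
    struct worker_arg args[MAX_WORKERS];
    int started = 0;
    int failed = 0;

    for (int i = 0; i < n; i++) {
        args[i].id = i;
        args[i].result = 0;
        if (pthread_create(&tids[i], NULL, worker, &args[i]) != 0) {
            failed = 1;
            break;
        }
        started++;
    }

    /* Join every thread that was started, even after a failure. */
    int sum = 0;
    for (int i = 0; i < started; i++) {
        if (pthread_join(tids[i], NULL) != 0) {
            failed = 1;
        }
        sum += args[i].result;
    }
    return failed ? -1 : sum;
}
```

Calling `sum_of_squares(4)` from a program built with `cc -pthread` runs four threads that compute 0, 1, 4, and 9. Joining before reading each `result` is what makes the read safe without a mutex.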