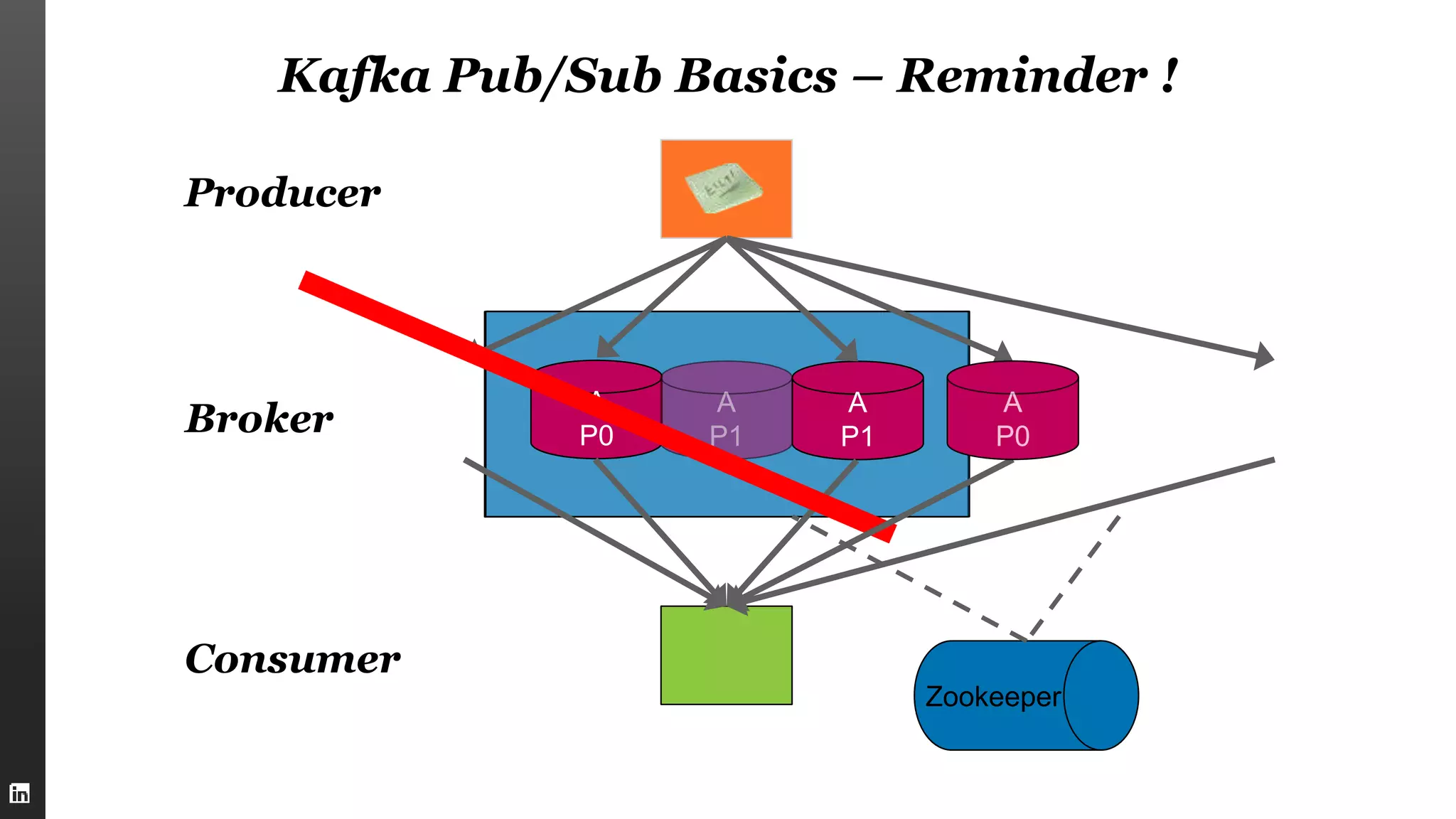

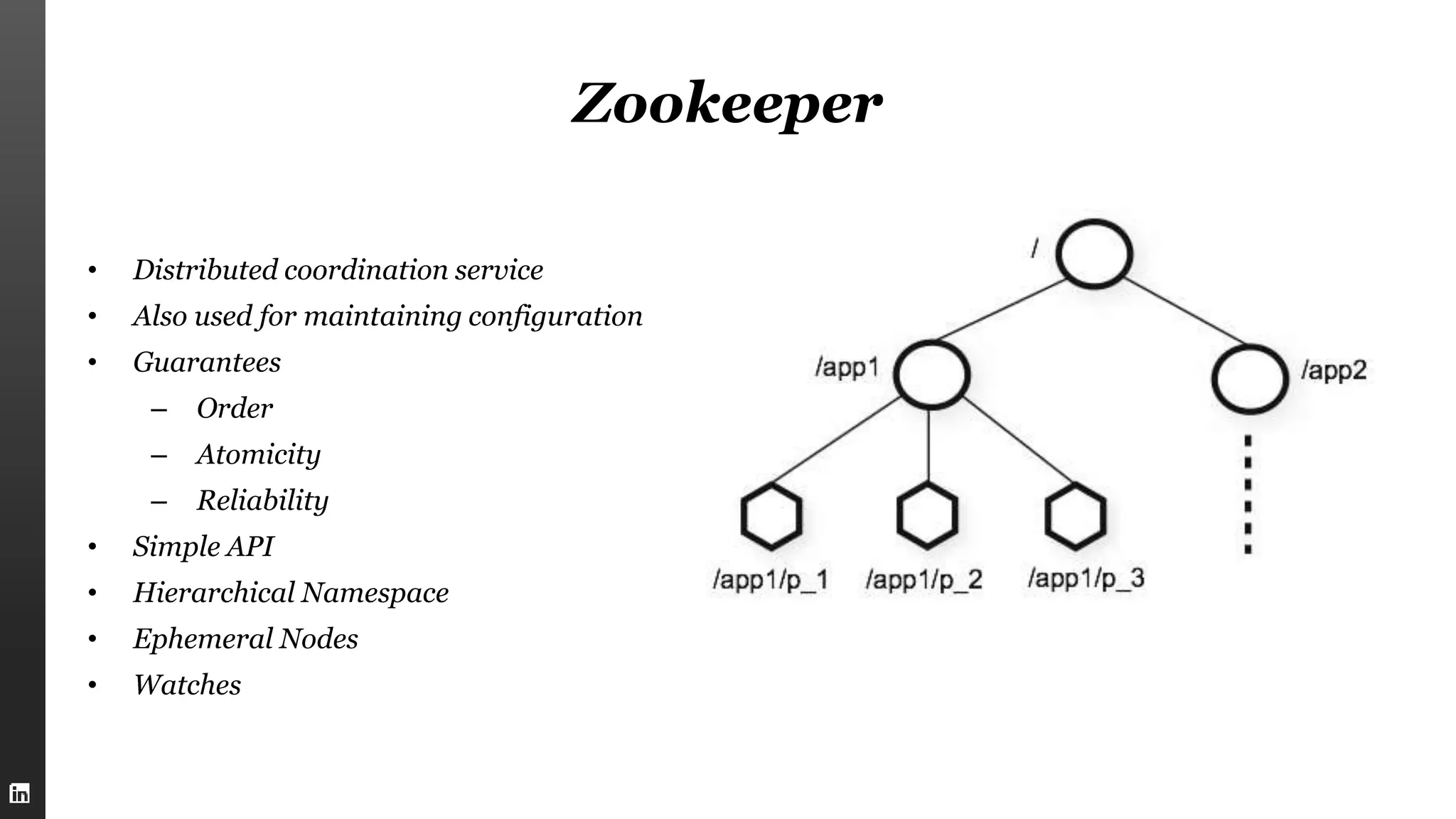

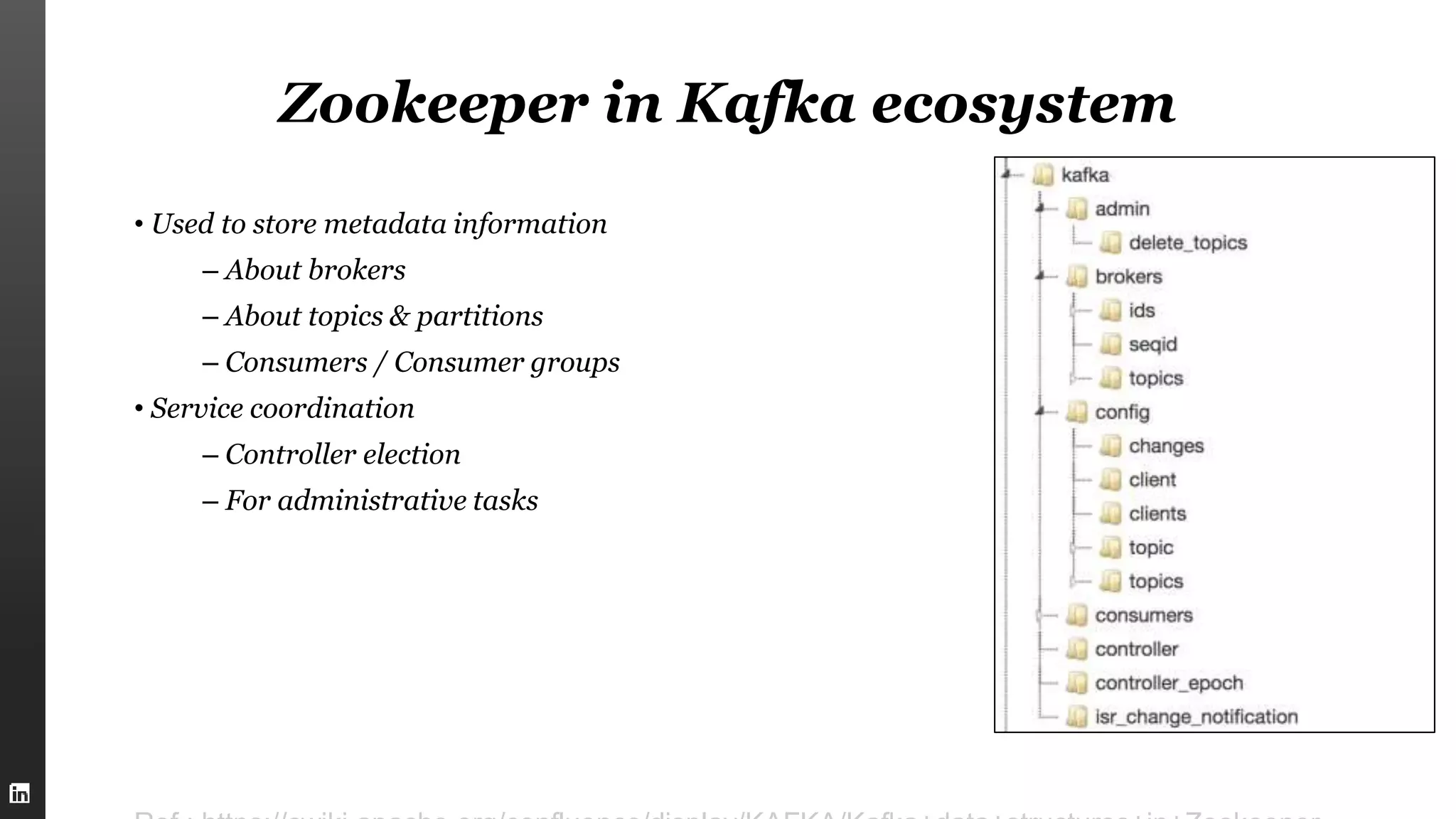

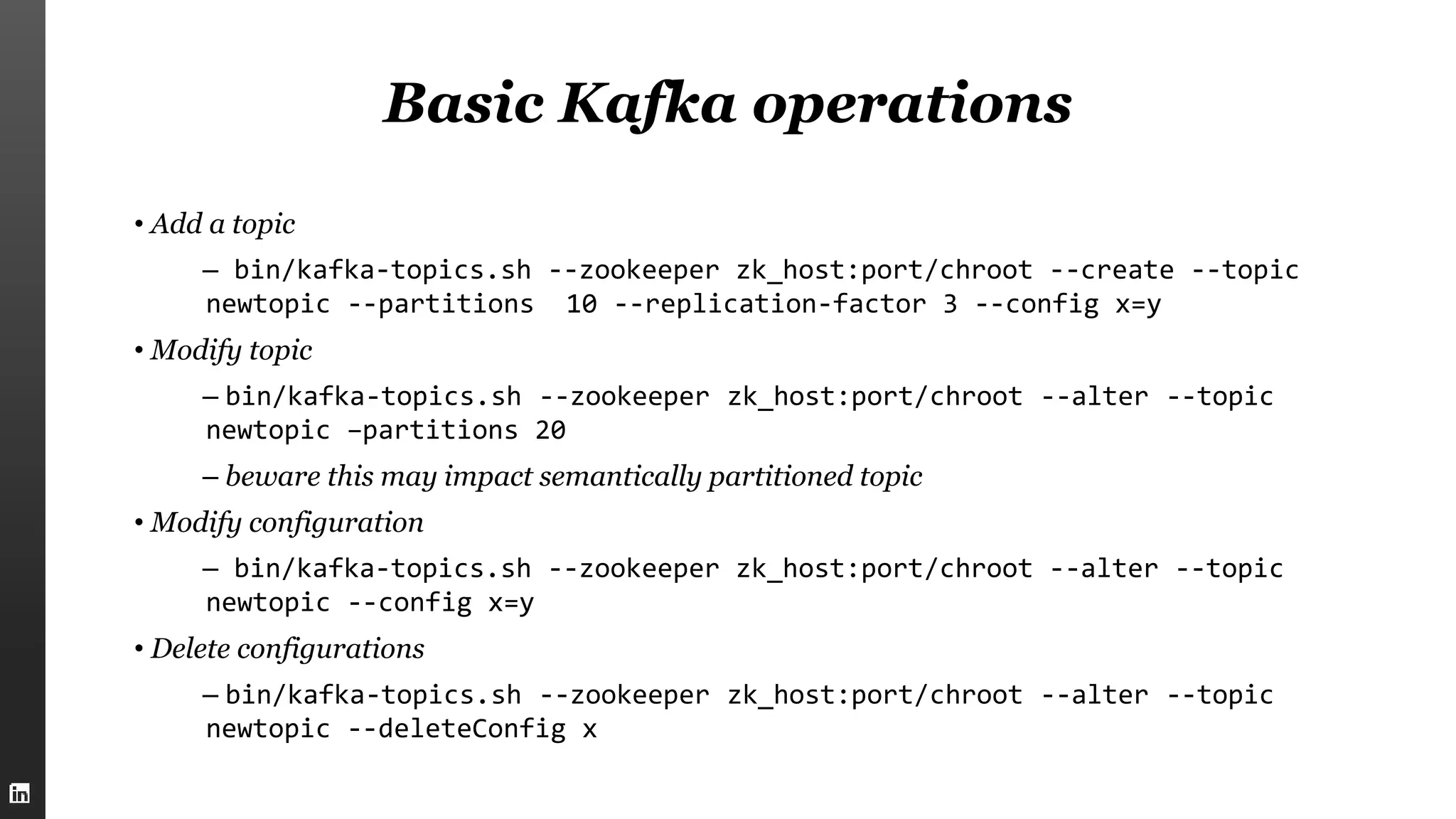

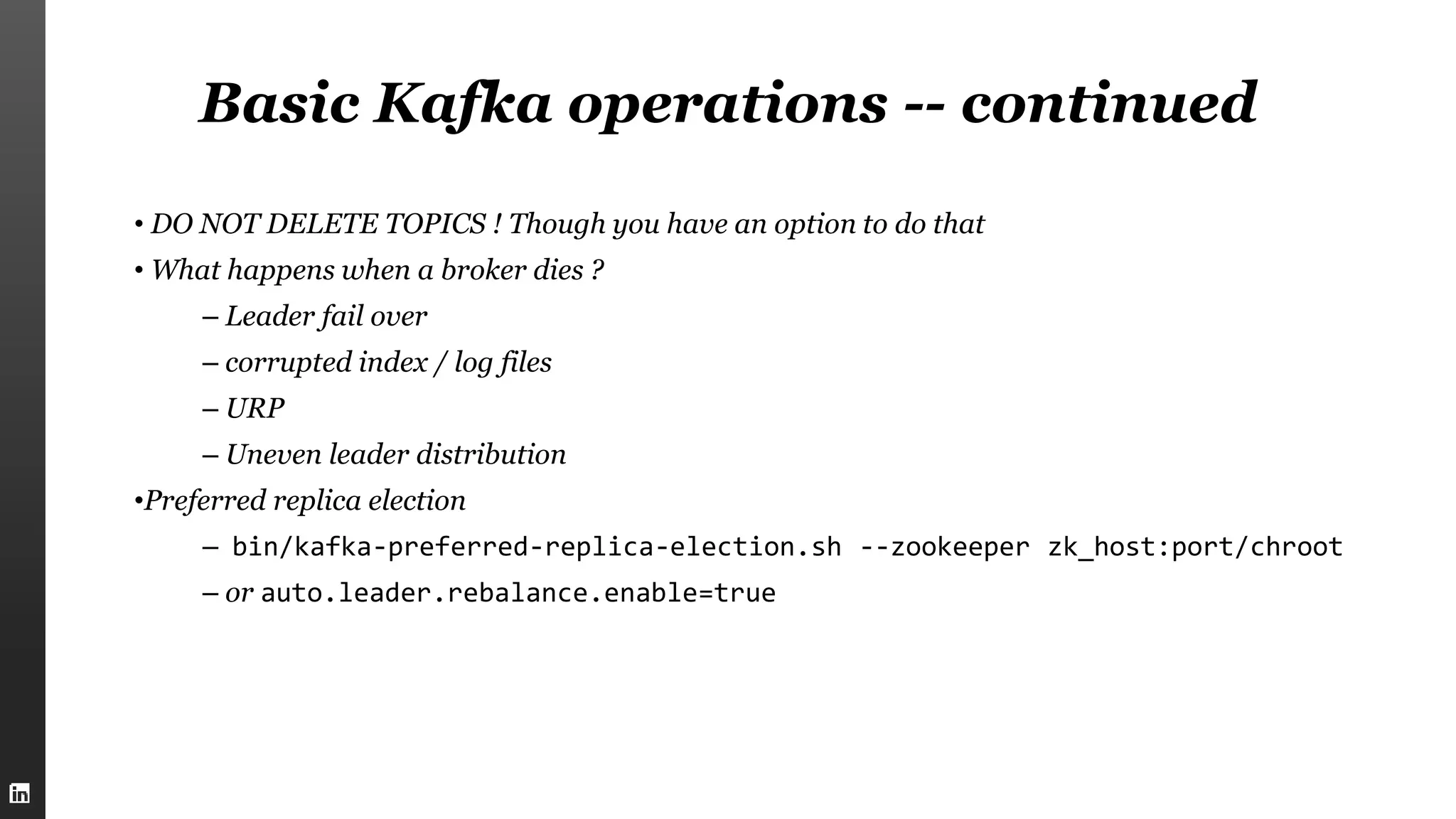

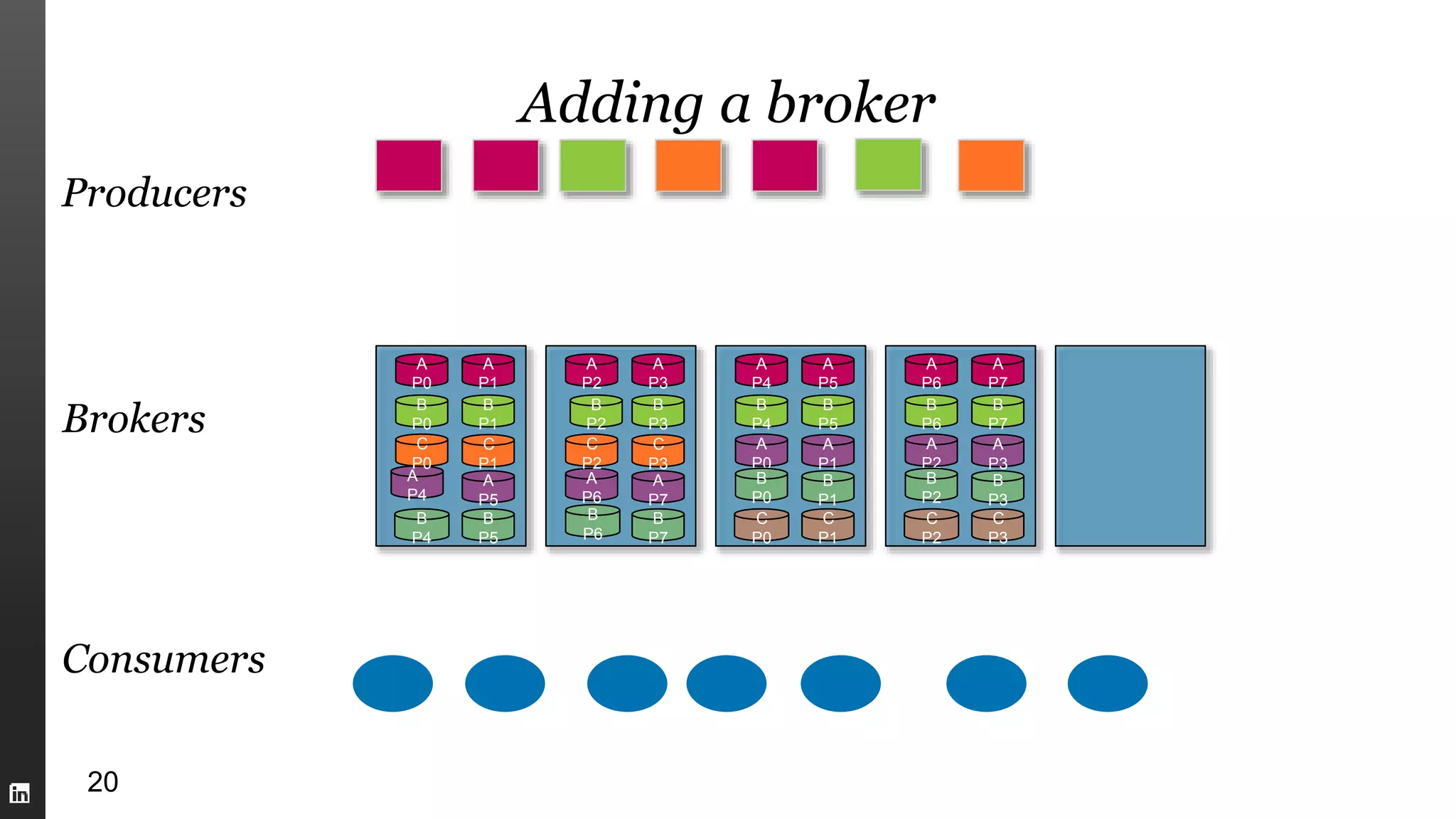

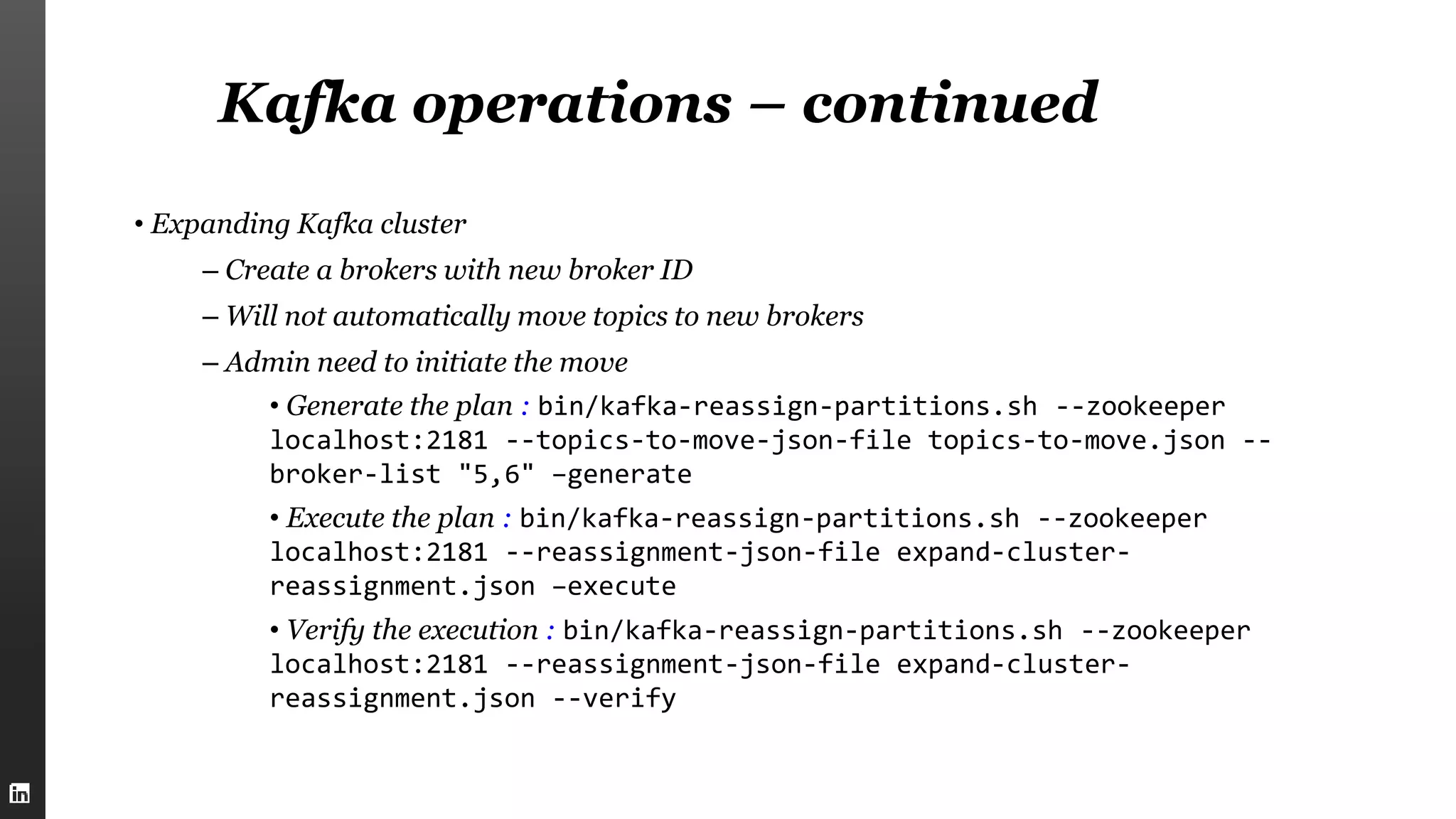

This document summarizes Kafka internals: how ZooKeeper is used for coordination, how brokers store partitions and messages, how producers and consumers interact with brokers, and how to ensure data integrity. It also covers features new in Kafka 0.9, such as the security enhancements and the new consumer API, and gives an overview of operating Kafka clusters, including adding and removing brokers through partition reassignment.