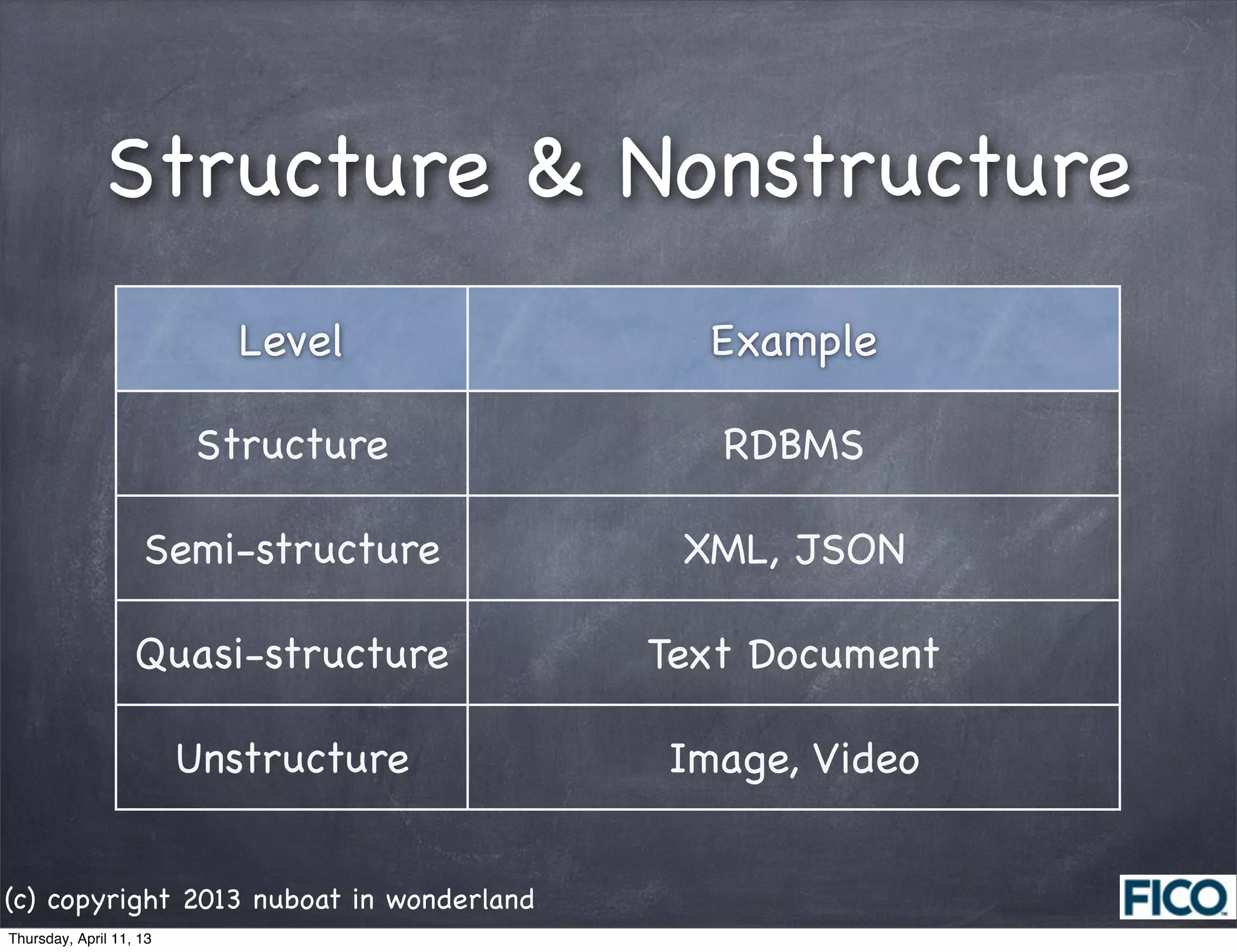

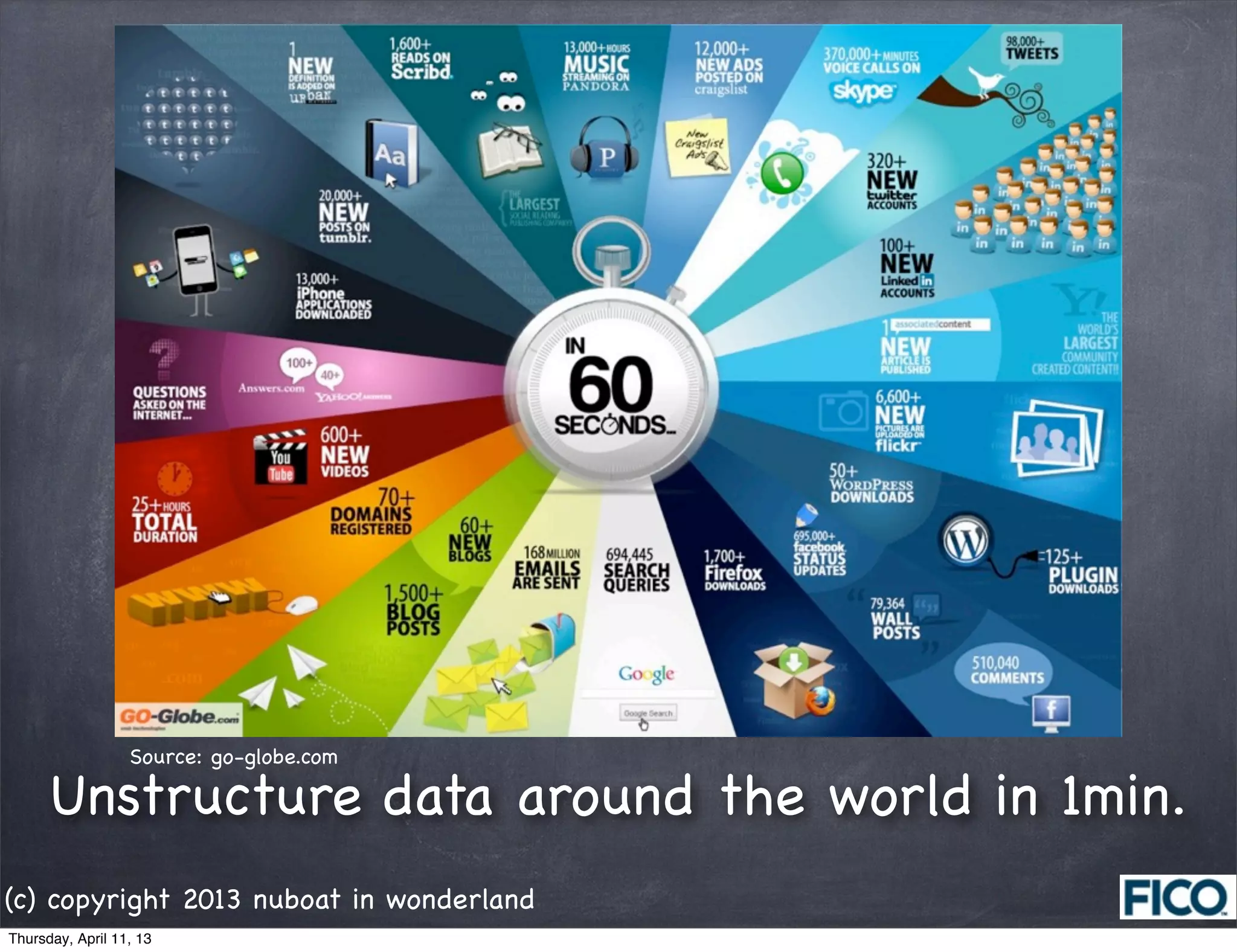

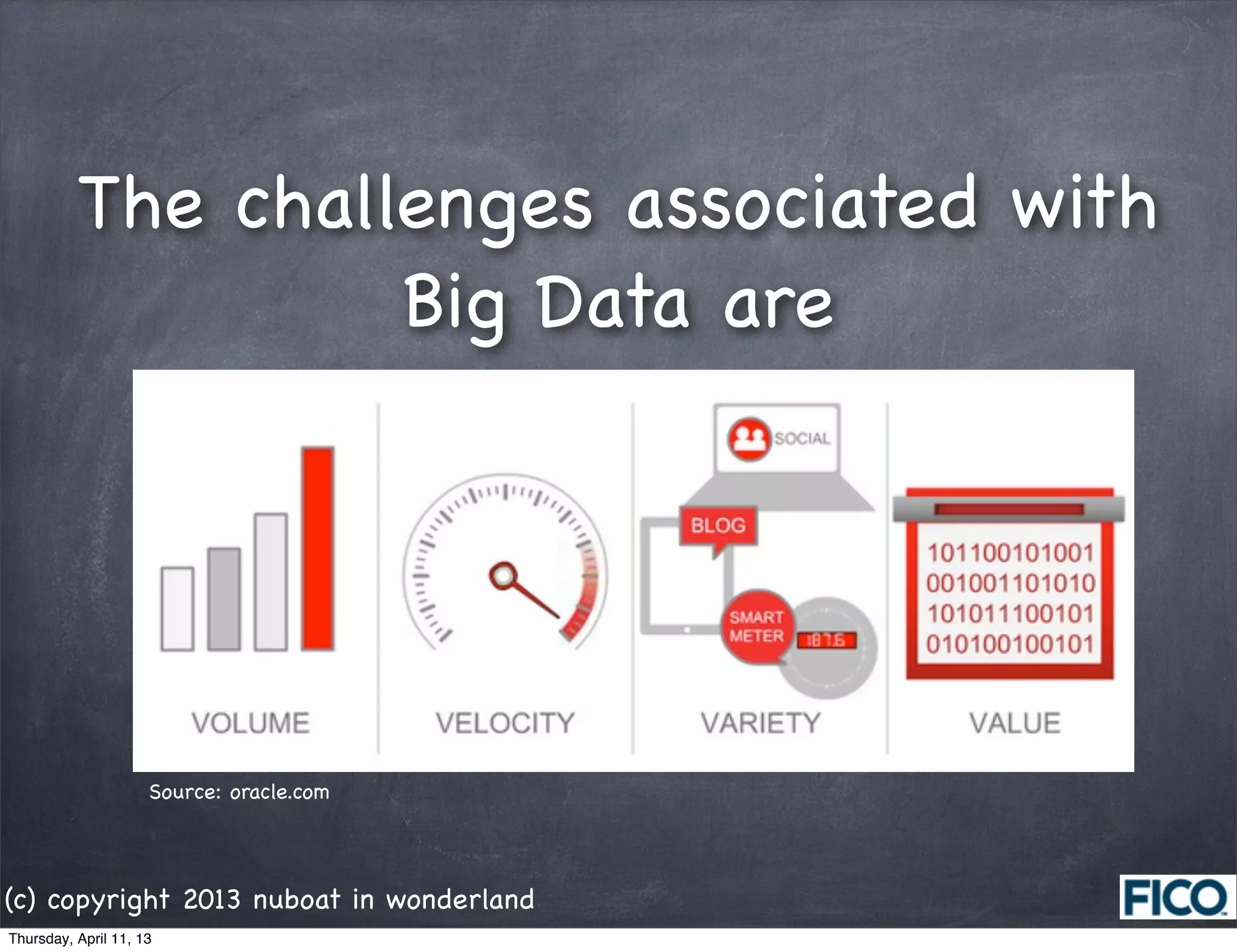

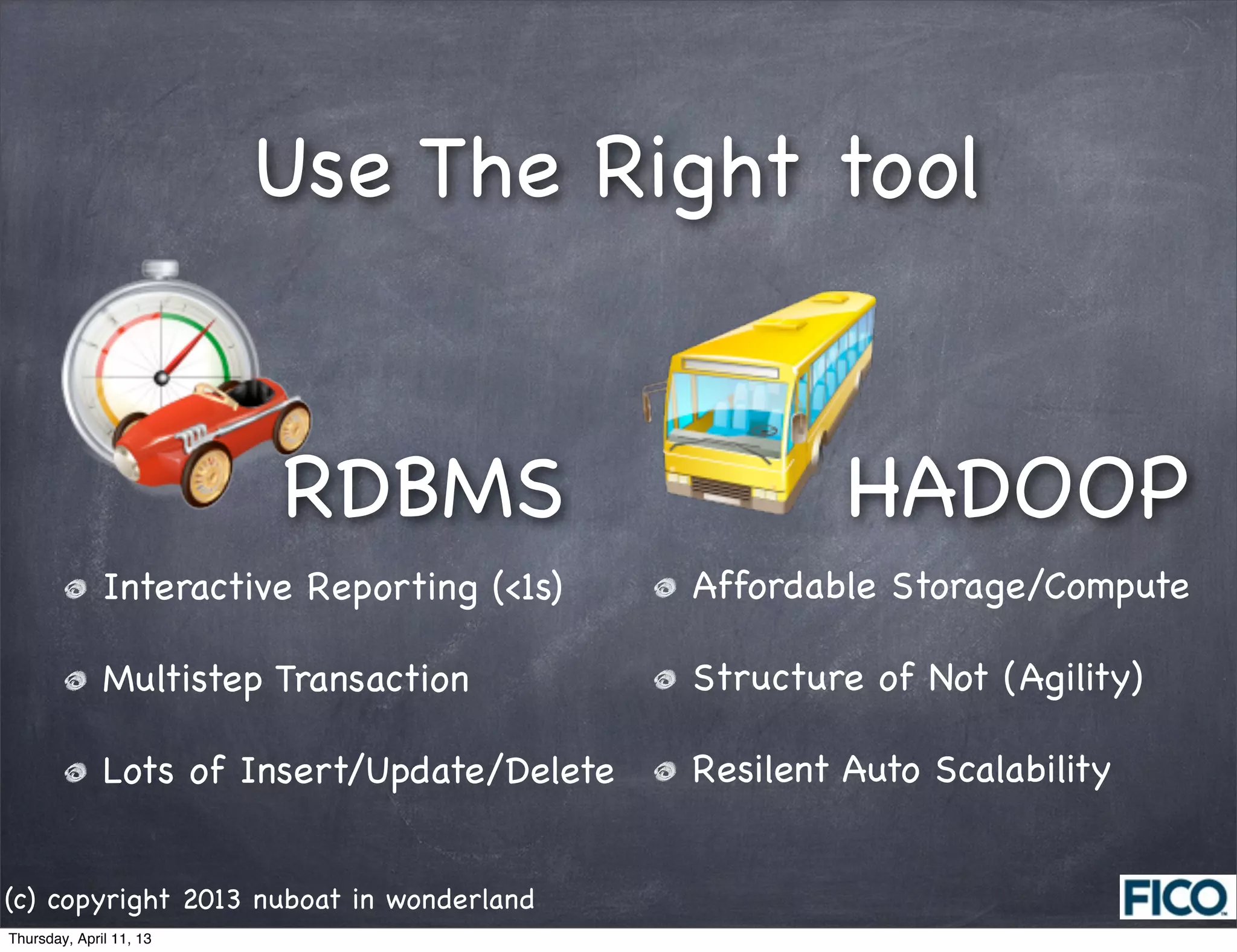

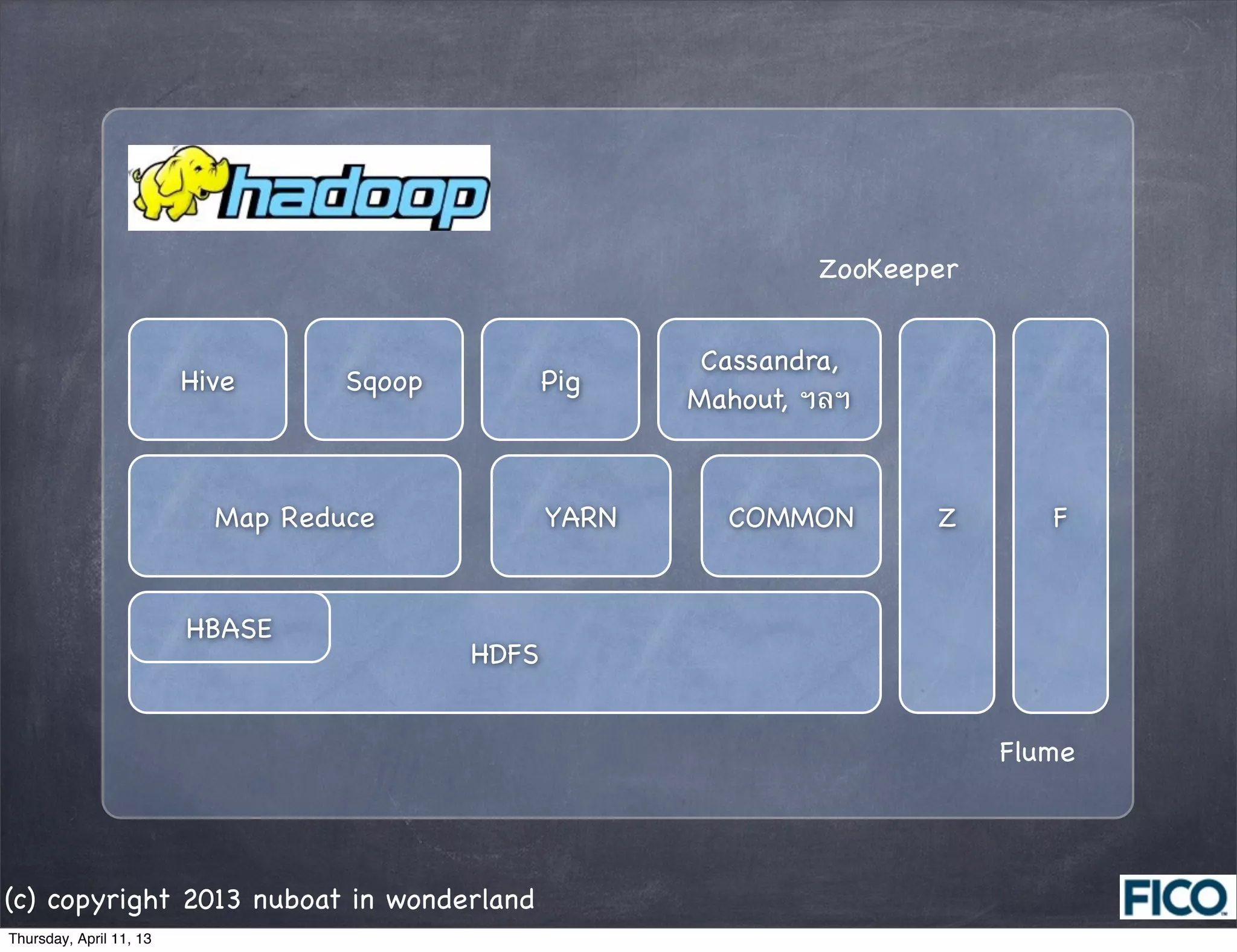

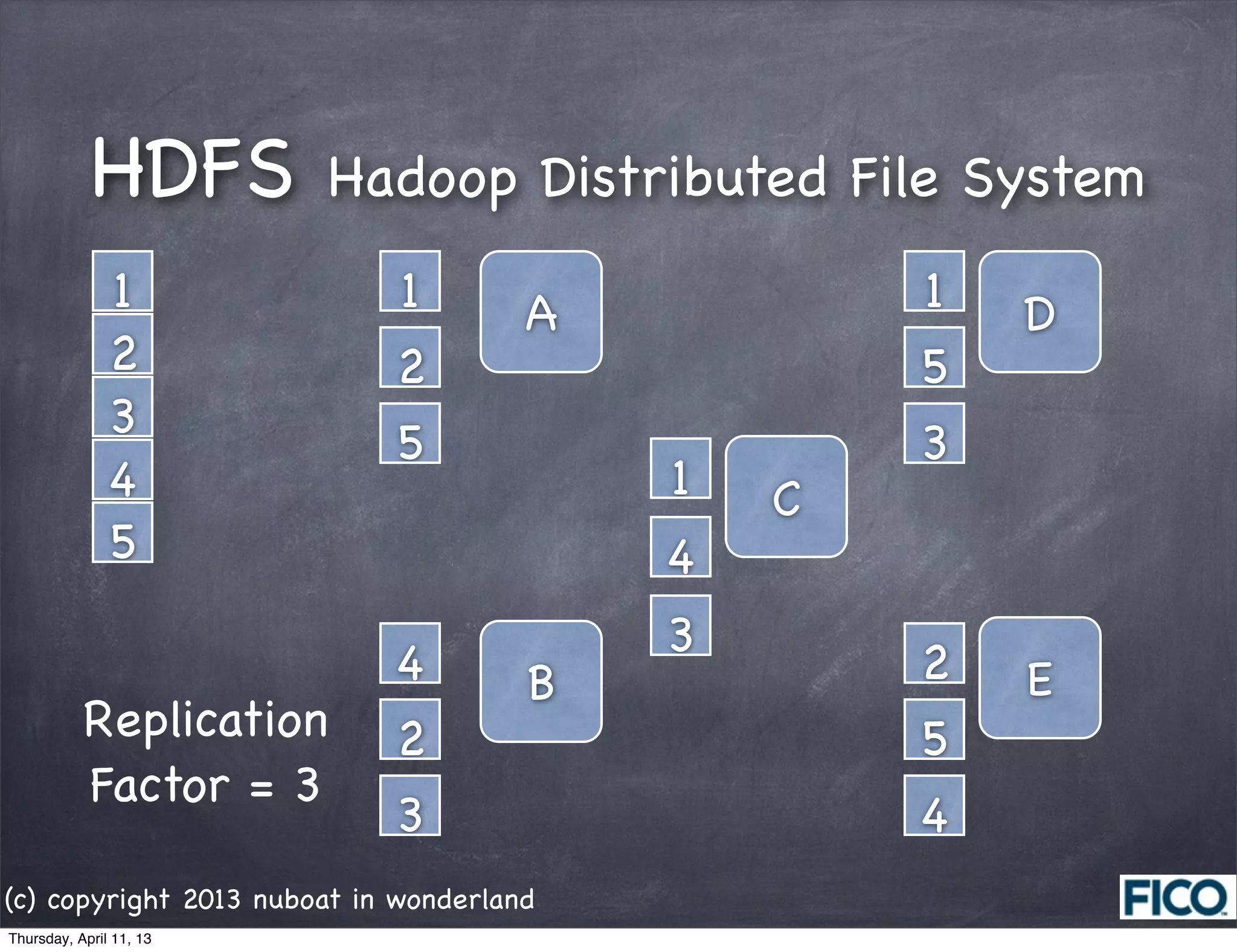

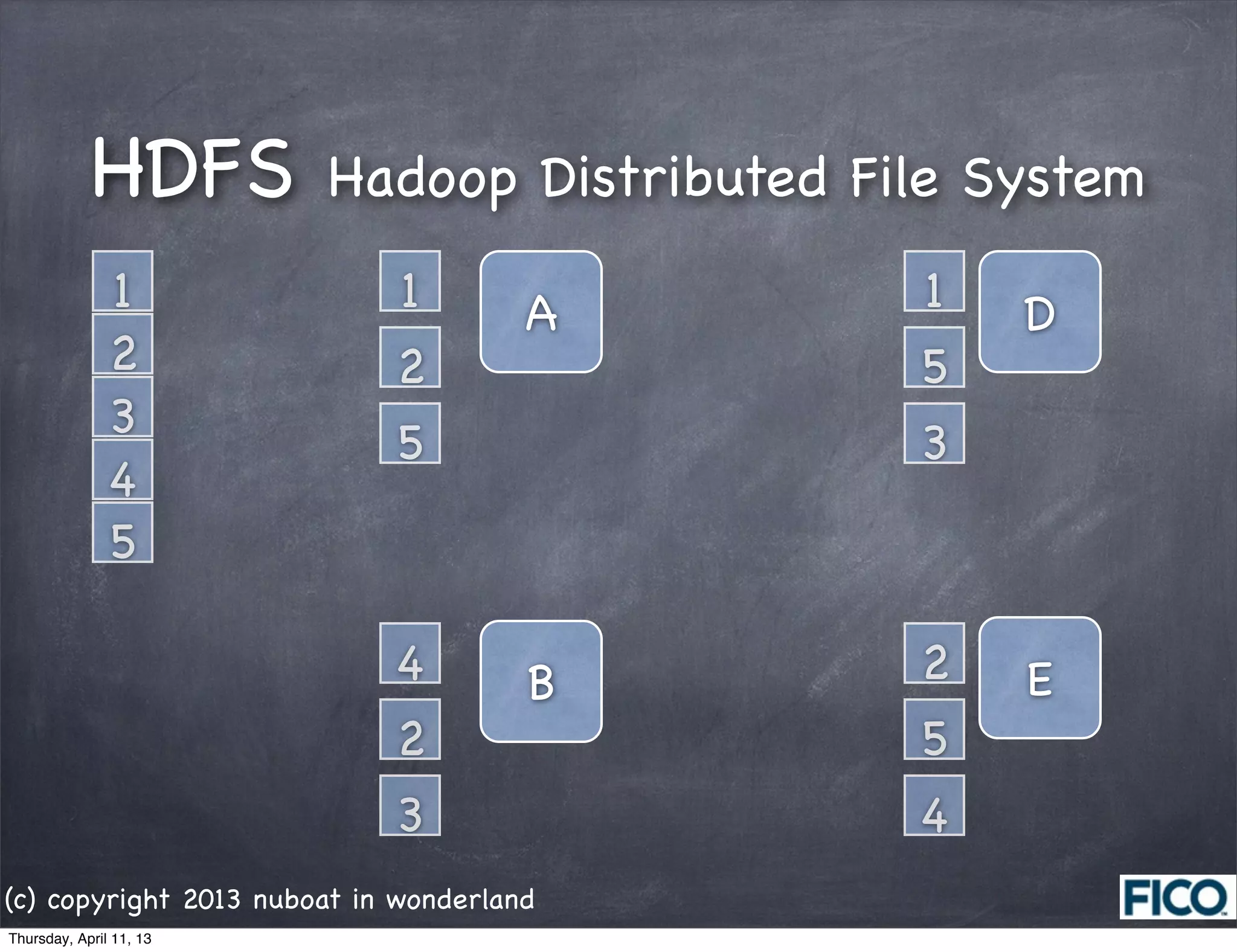

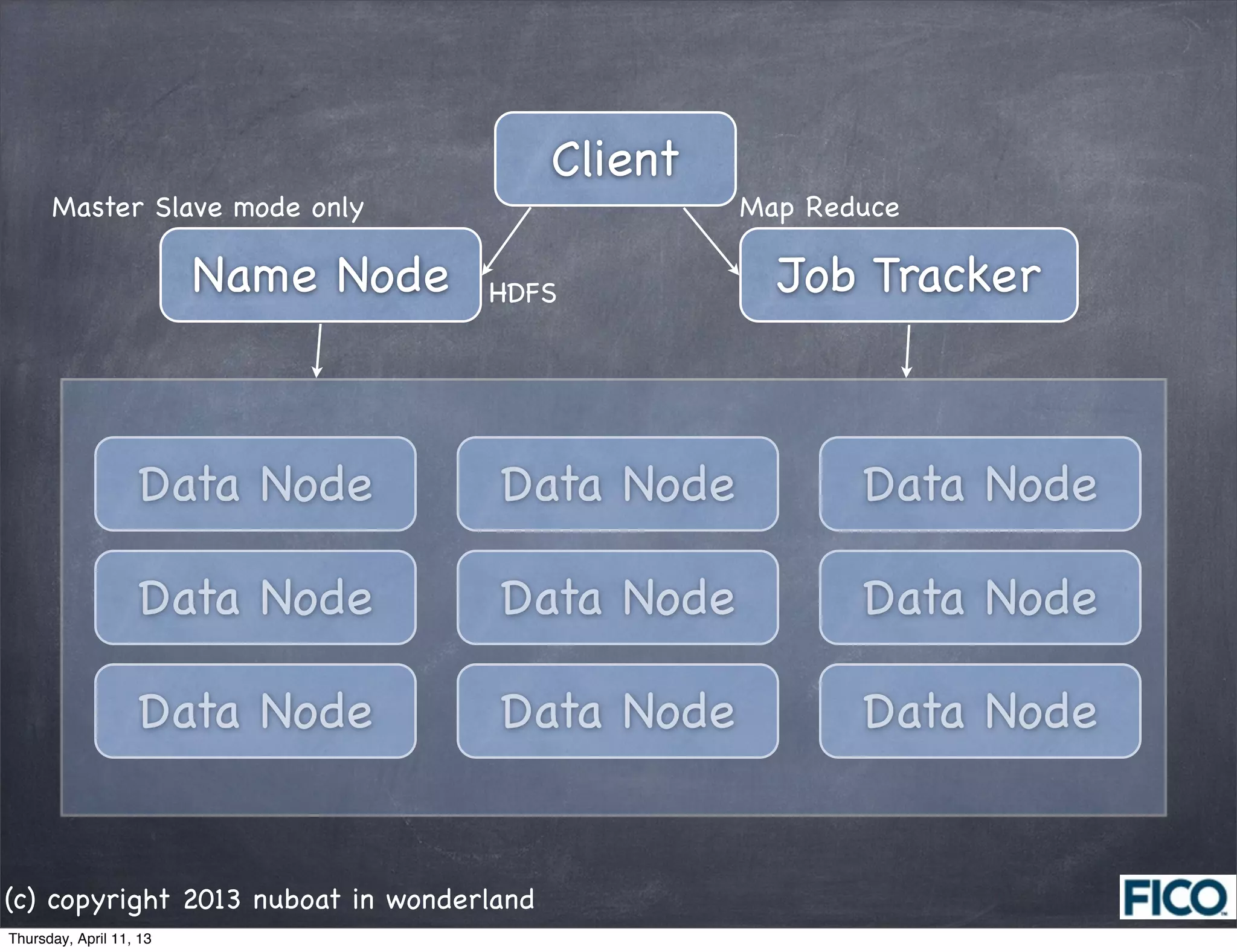

This document provides an overview and introduction to big data concepts. It discusses what big data is, different data structures and sources, challenges of big data, and appropriate tools to use for big data analytics like Hadoop. It also provides step-by-step instructions for installing and configuring Hadoop, including installing Java, adding users, configuring SSH, installing Hadoop files and setting configuration parameters.

![1. Install Java

[root@localhost ~]# ./jdk-6u39-linux-i586.bin

[root@localhost ~]# chown -R root:root ./jdk1.6.0_39/

[root@localhost ~]# mv jdk1.6.0_39/ /usr/lib/jvm/

[root@localhost ~]# update-alternatives --install "/usr/bin/java" "java" "/usr/lib/jvm/jdk1.6.0_39/bin/java"

1

[root@localhost ~]# update-alternatives --install "/usr/bin/javac" "javac" "/usr/lib/jvm/jdk1.6.0_39/bin/

javac" 1

[root@localhost ~]# update-alternatives --install "/usr/bin/javaws" "javaws" "/usr/lib/jvm/jdk1.6.0_39/bin/

javaws" 1

[root@localhost ~]# update-alternatives --config java

There are 3 programs which provide 'java'.

Selection Command

-----------------------------------------------

* 1 /usr/lib/jvm/jre-1.6.0-openjdk/bin/java

2 /usr/lib/jvm/jre-1.5.0-gcj/bin/java

+ 3 /usr/lib/jvm/jdk1.6.0_39/bin/java

Enter to keep the current selection[+], or type selection number: 3

[root@localhost ~]# java -version

java version "1.6.0_39"

Java(TM) SE Runtime Environment (build 1.6.0_39-b04)

Java HotSpot(TM) Server VM (build 20.14-b01, mixed mode)

(c) copyright 2013 nuboat in wonderland

Thursday, April 11, 13](https://image.slidesharecdn.com/hadoop-130410131757-phpapp01/75/Hadoop-13-2048.jpg)

![2. Create hadoop user

[root@localhost ~]# groupadd hadoop

[root@localhost ~]# useradd -g hadoop -d /home/hdadmin hdadmin

[root@localhost ~]# passwd hdadmin

3. Config SSH

[root@localhost ~]# service sshd start

...

[root@localhost ~]# chkconfig sshd on

[root@localhost ~]# su - hdadmin

[root@localhost ~]# ssh-keygen -t rsa -P ""

$ ~/.ssh/id_rsa.pub >> ~/.ssh/authroized_keys

$ ssh localhost

4. Disable IPv6

[root@localhost ~]# vi /etc/sysctl.conf

# disable ipv6

net.ipv6.conf.all.disable_ipv6 = 1

net.ipv6.conf.default.disable_ipv6 = 1

net.ipv6.conf.lo.disable_ipv6 = 1

(c) copyright 2013 nuboat in wonderland

Thursday, April 11, 13](https://image.slidesharecdn.com/hadoop-130410131757-phpapp01/75/Hadoop-14-2048.jpg)

![5. SET Path

$ vi ~/.bashrc

# .bashrc

# Source global definitions

if [ -f /etc/bashrc ]; then

. /etc/bashrc

fi

# Do not set HADOOP_HOME

# User specific aliases and functions

export HIVE_HOME=/usr/local/hive-0.9.0

export JAVA_HOME=/usr/lib/jvm/jdk1.6.0_39

export PATH=$PATH:/usr/local/hadoop-0.20.205.0/bin:$JAVA_HOME/bin:

$HIVE_HOME/bin

(c) copyright 2013 nuboat in wonderland

Thursday, April 11, 13](https://image.slidesharecdn.com/hadoop-130410131757-phpapp01/75/Hadoop-15-2048.jpg)

![6. Install Hadoop

[root@localhost ~]# tar xzf hadoop-0.20.205.0-bin.tar.gz

[root@localhost ~]# mv hadoop-0.20.205.0 /usr/local/hadoop-0.20.205.0

[root@localhost ~]# cd /usr/local

[root@localhost ~]# chown -R hdadmin:hadoop hadoop-0.20.205.0

[root@localhost ~]# mkdir -p /data/hadoop

[root@localhost ~]# chown hdadmin:hadoop /data/hadoop

[root@localhost ~]# chmod 750 /data/hadoop

(c) copyright 2013 nuboat in wonderland

Thursday, April 11, 13](https://image.slidesharecdn.com/hadoop-130410131757-phpapp01/75/Hadoop-16-2048.jpg)

![8. Format HDFS

$ hadoop namenode -format

13/04/03 13:59:54 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = localhost.localdomain/127.0.0.1

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 0.20.205.0

STARTUP_MSG: build = https://svn.apache.org/repos/asf/hadoop/common/branches/branch-0.20-security-205

-r 1179940; compiled by 'hortonfo' on Fri Oct 7 06:26:14 UTC 2011

************************************************************/

13/04/03 14:00:02 INFO util.GSet: VM type = 32-bit

13/04/03 14:00:02 INFO util.GSet: 2% max memory = 17.77875 MB

13/04/03 14:00:02 INFO util.GSet: capacity = 2^22 = 4194304 entries

13/04/03 14:00:02 INFO util.GSet: recommended=4194304, actual=4194304

13/04/03 14:00:02 INFO namenode.FSNamesystem: fsOwner=hdadmin

13/04/03 14:00:02 INFO namenode.FSNamesystem: supergroup=supergroup

13/04/03 14:00:02 INFO namenode.FSNamesystem: isPermissionEnabled=true

13/04/03 14:00:02 INFO namenode.FSNamesystem: dfs.block.invalidate.limit=100

13/04/03 14:00:02 INFO namenode.FSNamesystem: isAccessTokenEnabled=false accessKeyUpdateInterval=0

min(s), accessTokenLifetime=0 min(s)

13/04/03 14:00:02 INFO namenode.NameNode: Caching file names occuring more than 10 times

13/04/03 14:00:02 INFO common.Storage: Image file of size 113 saved in 0 seconds.

13/04/03 14:00:02 INFO common.Storage: Storage directory /app/hadoop/tmp/dfs/name has been successfully

formatted.

13/04/03 14:00:02 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at localhost.localdomain/127.0.0.1

************************************************************/

(c) copyright 2013 nuboat in wonderland

Thursday, April 11, 13](https://image.slidesharecdn.com/hadoop-130410131757-phpapp01/75/Hadoop-19-2048.jpg)