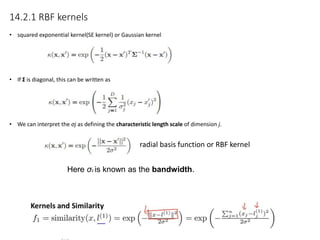

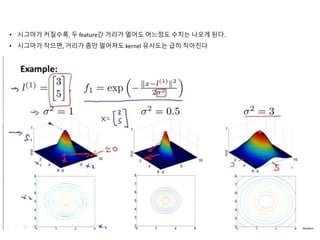

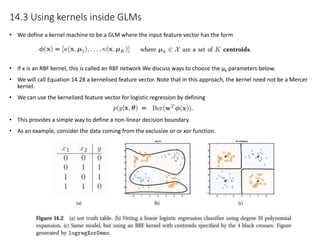

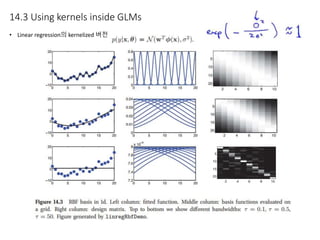

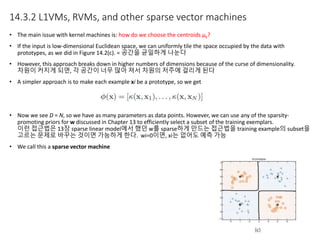

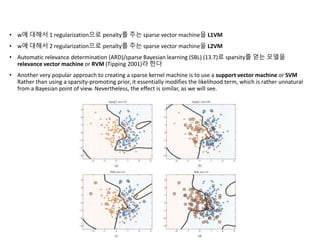

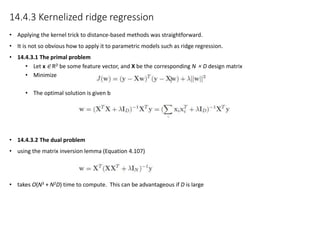

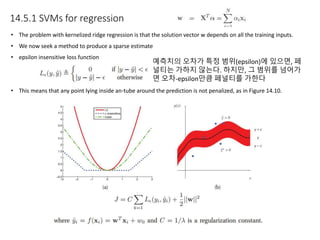

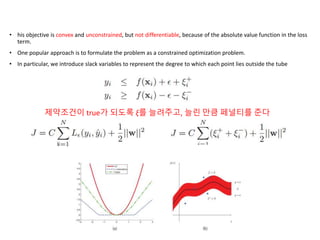

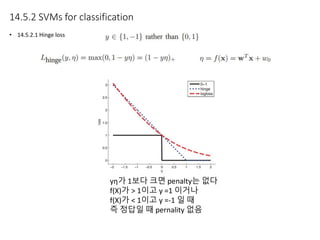

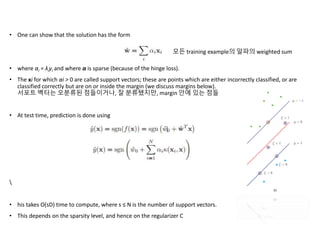

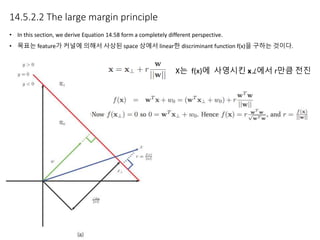

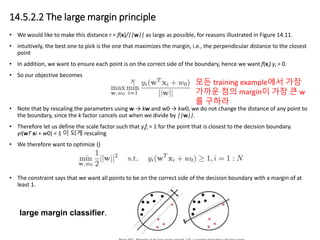

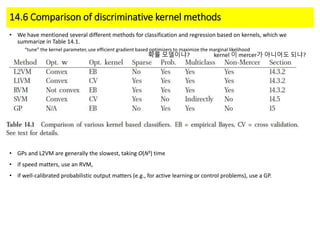

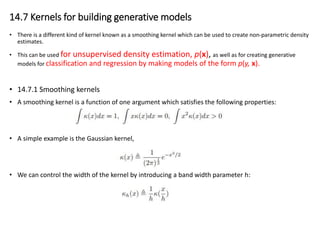

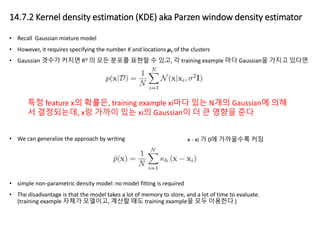

Kernel functions measure the similarity between objects without explicitly representing them as feature vectors. The kernel trick lets algorithms that access the data only through inner products, such as support vector machines (SVMs), operate in the implicit feature space that a kernel defines. SVMs find a sparse set of support vectors that defines the decision boundary, maximizing the margin while penalizing training errors. They handle classification with a hinge loss function and regression with an epsilon-insensitive loss function.
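
The ideas in this summary can be illustrated with a minimal sketch in plain Python. It assumes a homogeneous degree-2 polynomial kernel on 2-D inputs, chosen only because its explicit feature map is small enough to write out; the function names (`poly2_kernel`, `poly2_features`, `hinge_loss`, `eps_insensitive_loss`) and the default `eps=0.1` are illustrative choices, not taken from the slides. The first part checks that the kernel value equals an inner product in the explicit feature space, and the second part writes out the two loss functions mentioned above.

```python
import math


def dot(u, v):
    """Ordinary inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def poly2_kernel(x, z):
    """Homogeneous degree-2 polynomial kernel: k(x, z) = (x . z)^2."""
    return dot(x, z) ** 2


def poly2_features(x):
    """Explicit feature map for 2-D inputs matching poly2_kernel.

    phi(x) = (x1^2, sqrt(2) * x1 * x2, x2^2), so that
    phi(x) . phi(z) = (x . z)^2.
    """
    x1, x2 = x
    return (x1 * x1, math.sqrt(2.0) * x1 * x2, x2 * x2)


def hinge_loss(y, score):
    """Classification loss; y is -1 or +1, score is the decision value f(x)."""
    return max(0.0, 1.0 - y * score)


def eps_insensitive_loss(y, pred, eps=0.1):
    """Regression loss; errors smaller than eps cost nothing."""
    return max(0.0, abs(y - pred) - eps)


# Kernel trick: the same similarity, computed with and without the feature map.
x, z = (1.0, 2.0), (3.0, -1.0)
implicit = poly2_kernel(x, z)                          # works in input space
explicit = dot(poly2_features(x), poly2_features(z))   # works in feature space
print(implicit, explicit)

# Hinge loss is zero once a point is on the correct side with margin >= 1.
print(hinge_loss(+1, 2.0), hinge_loss(-1, 0.5))

# Epsilon-insensitive loss ignores deviations inside the eps tube.
print(eps_insensitive_loss(1.0, 1.05), eps_insensitive_loss(1.0, 1.5))
```

The kernel version never builds the 3-D feature vector, which is the point of the trick: for kernels such as the Gaussian (RBF) kernel the corresponding feature space is infinite-dimensional, so only the implicit computation is practical.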