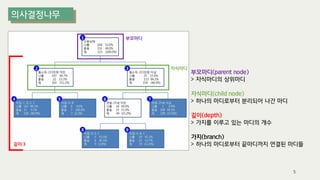

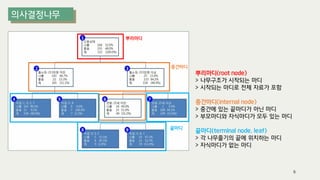

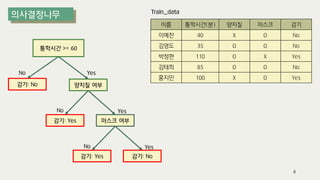

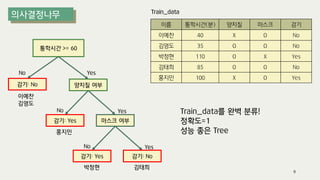

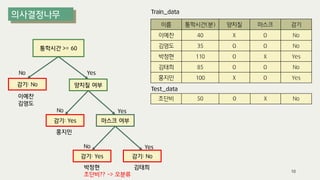

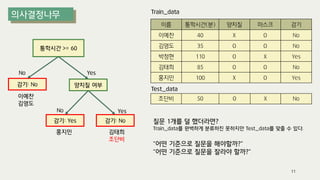

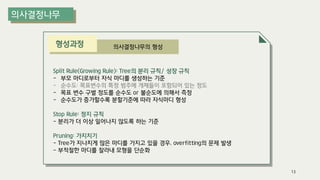

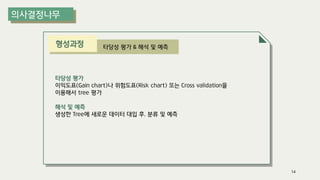

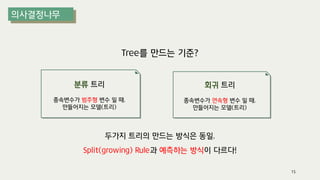

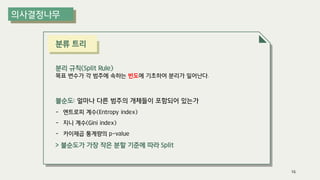

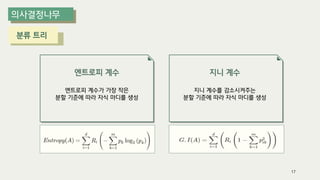

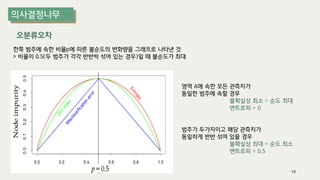

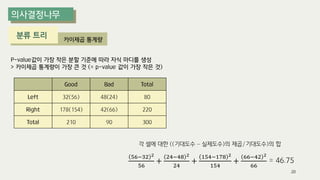

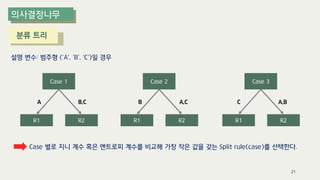

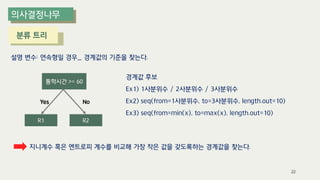

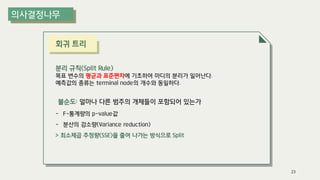

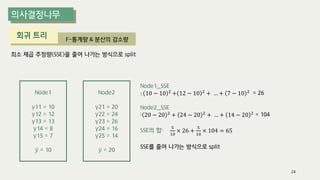

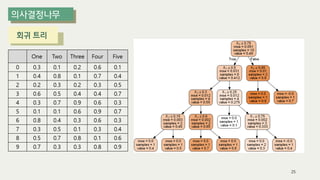

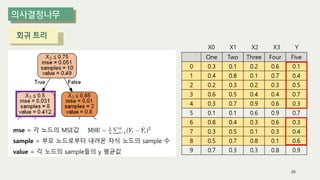

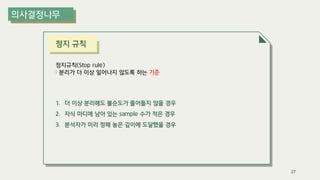

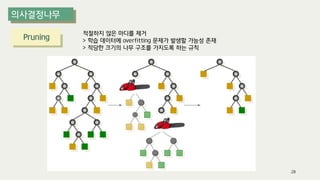

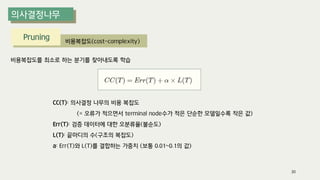

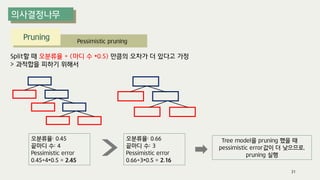

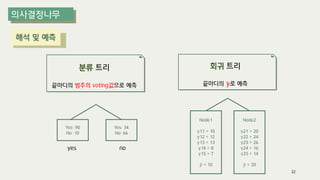

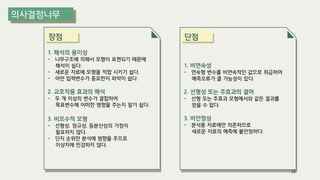

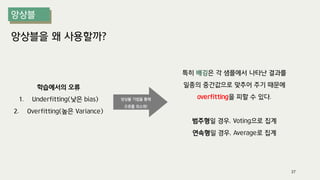

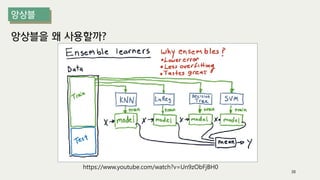

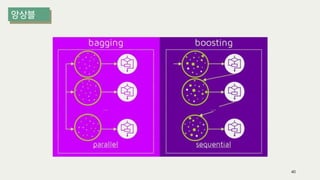

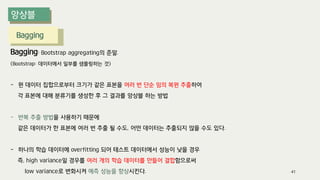

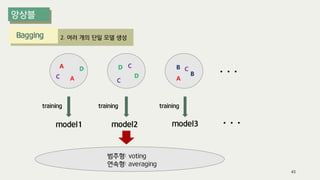

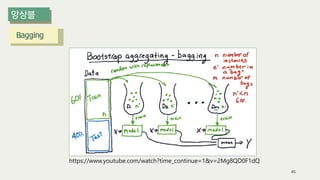

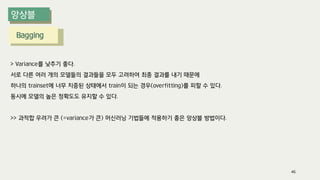

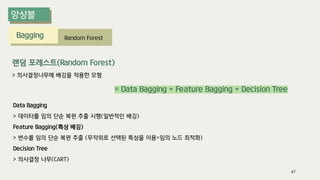

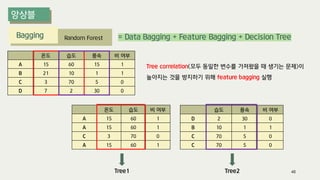

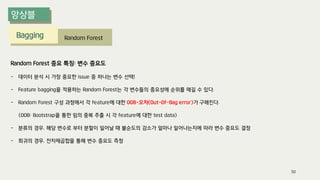

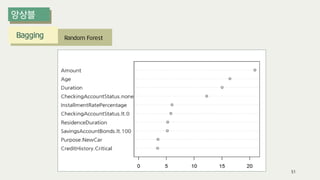

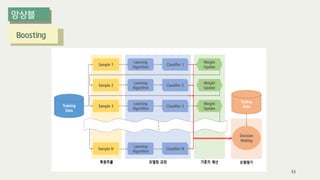

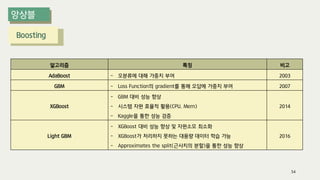

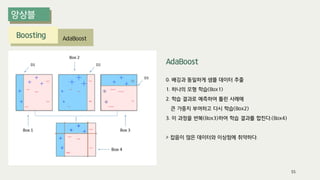

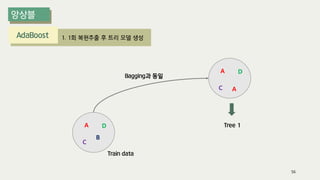

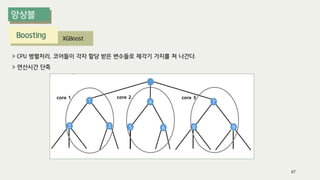

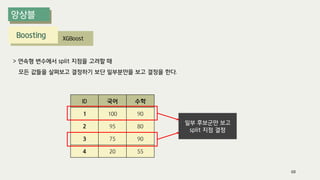

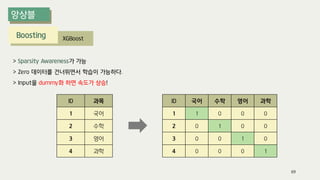

The document outlines how decision trees are built and applied to classification and prediction tasks. It covers their main uses (segmentation, classification, prediction, and identifying interaction effects) and their structure: nodes, branches, and depth. It also explains the rules for splitting, stopping, and pruning a tree to avoid overfitting, and argues that ensemble methods are needed to improve predictive power.
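To make the splitting, stopping, and ensemble ideas concrete, here is a minimal CART-style sketch in plain Python. It rests on several assumptions. The summary does not name a split criterion, so Gini impurity is used here. The stopping rules (`max_depth`, `min_samples`) and all function names are hypothetical. These stopping rules act as pre-pruning; post-pruning, such as cost-complexity pruning, is not shown. The ensemble is simple bagging: trees are trained on bootstrap samples and combined by majority vote.

```python
from collections import Counter
import random

def gini(labels):
    """Gini impurity of a list of class labels: 1 - sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def best_split(X, y):
    """Search every (feature, threshold) pair for the lowest weighted Gini impurity.
    Returns (impurity, feature, threshold), or None if no split yields two non-empty children."""
    best = None
    n = len(y)
    for f in range(len(X[0])):
        for t in sorted(set(row[f] for row in X)):
            left = [y[i] for i in range(n) if X[i][f] <= t]
            right = [y[i] for i in range(n) if X[i][f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if best is None or score < best[0]:
                best = (score, f, t)
    return best

def build_tree(X, y, depth=0, max_depth=3, min_samples=2):
    """Grow a tree recursively; the stopping rules below act as pre-pruning (hypothetical defaults)."""
    majority = Counter(y).most_common(1)[0][0]
    # Stop when the node is pure, holds too few samples, or has reached the depth limit.
    if gini(y) == 0.0 or len(y) < min_samples or depth >= max_depth:
        return {"leaf": majority}
    split = best_split(X, y)
    if split is None:
        return {"leaf": majority}
    _, f, t = split
    li = [i for i in range(len(y)) if X[i][f] <= t]
    ri = [i for i in range(len(y)) if X[i][f] > t]
    return {
        "feature": f,
        "threshold": t,
        "left": build_tree([X[i] for i in li], [y[i] for i in li], depth + 1, max_depth, min_samples),
        "right": build_tree([X[i] for i in ri], [y[i] for i in ri], depth + 1, max_depth, min_samples),
    }

def predict(node, x):
    """Follow branches from the root until a leaf (terminal node) is reached."""
    while "leaf" not in node:
        node = node["left"] if x[node["feature"]] <= node["threshold"] else node["right"]
    return node["leaf"]

def bagging(X, y, n_trees=5, seed=0, **tree_kwargs):
    """Train n_trees trees, each on a bootstrap sample drawn with replacement."""
    rng = random.Random(seed)
    n = len(y)
    trees = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]
        trees.append(build_tree([X[i] for i in idx], [y[i] for i in idx], **tree_kwargs))
    return trees

def predict_ensemble(trees, x):
    """Combine the trees by majority vote."""
    return Counter(predict(t, x) for t in trees).most_common(1)[0][0]

# Usage: a single feature that cleanly separates two classes.
X = [[1], [2], [3], [10], [11], [12]]
y = ["a", "a", "a", "b", "b", "b"]
tree = build_tree(X, y)
print(predict(tree, [2.5]), predict(tree, [11.5]))  # a b
```

On this toy data the root split is at threshold 3, which yields two pure children, so growth stops after one level. Lowering `max_depth` or raising `min_samples` forces earlier stopping, which trades training fit for a simpler tree that is less prone to overfitting.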