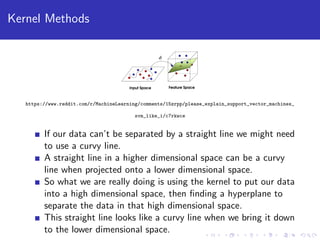

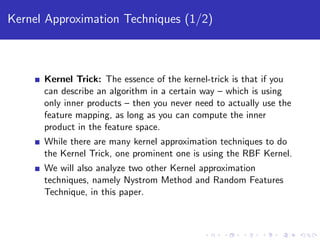

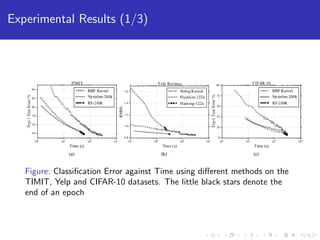

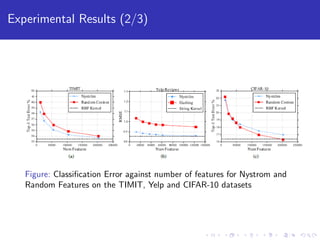

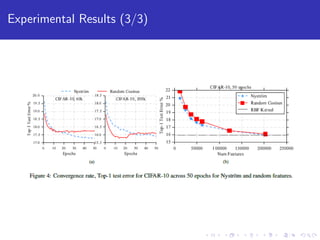

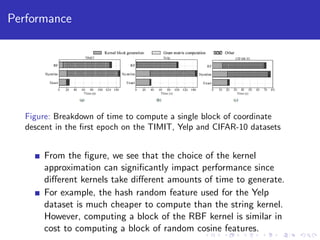

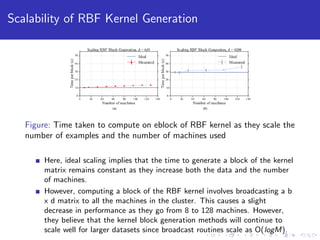

This paper explores block coordinate descent as a way to scale kernel learning methods to large datasets. It compares exact kernel methods with two approximation techniques, Nyström and random Fourier features, on speech, text, and image datasets. In the experiments, Nyström generally achieves better accuracy than random features but requires more iterations. The paper also analyzes the performance and scalability of computing kernel blocks in a distributed setting.
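To make the core idea concrete, here is a minimal single-machine sketch of block coordinate descent applied to kernel ridge regression. It is an illustration under stated assumptions, not the paper's implementation: the RBF kernel, the ridge objective, the function names `rbf_kernel` and `block_coordinate_descent_krr`, and all parameter values are assumptions chosen for the example. The point it shows is that each update needs only one block of kernel rows, so the full kernel matrix never has to be held in memory.

```python
import numpy as np


def rbf_kernel(X, Z, gamma):
    """RBF kernel matrix between the rows of X and the rows of Z."""
    sq_dists = (X ** 2).sum(1)[:, None] + (Z ** 2).sum(1)[None, :] - 2.0 * X @ Z.T
    return np.exp(-gamma * np.maximum(sq_dists, 0.0))


def block_coordinate_descent_krr(X, y, gamma=0.5, lam=1.0, block_size=20, epochs=300):
    """Approximately solve (K + lam * I) alpha = y one block of coordinates at a time.

    Hypothetical helper for illustration. Each step computes only the kernel
    rows for the current block, then solves a small |b| x |b| linear system
    (a block Gauss-Seidel update).
    """
    n = X.shape[0]
    alpha = np.zeros(n)
    blocks = [np.arange(s, min(s + block_size, n)) for s in range(0, n, block_size)]
    for _ in range(epochs):
        for b in blocks:
            K_b = rbf_kernel(X[b], X, gamma)  # shape (|b|, n): one block of rows
            K_bb = K_b[:, b]                  # diagonal block, shape (|b|, |b|)
            # Right-hand side with the contribution of all other blocks removed.
            rhs = y[b] - (K_b @ alpha - K_bb @ alpha[b])
            alpha[b] = np.linalg.solve(K_bb + lam * np.eye(len(b)), rhs)
    return alpha


def predict(X_train, alpha, X_new, gamma=0.5):
    """Evaluate the fitted kernel model at new points."""
    return rbf_kernel(X_new, X_train, gamma) @ alpha
```

On a small problem the result can be checked against a direct solve of the full system. In a distributed setting, the `rbf_kernel(X[b], X, gamma)` call is the step that would be spread across machines.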