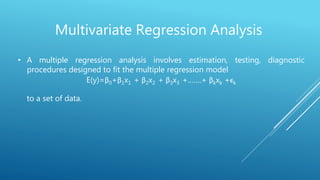

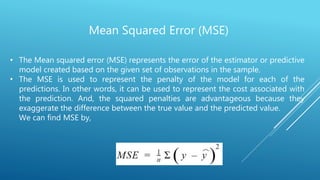

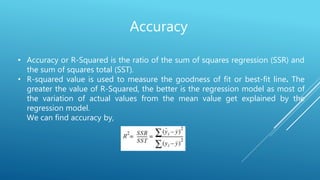

This document discusses multiple linear regression, which models a dependent variable as a linear function of more than one independent variable. It walks through the key steps of a multiple regression analysis: selecting features, normalizing them, choosing a loss function and a hypothesis, setting the hypothesis parameters, minimizing the loss function, and testing the resulting hypothesis. The main advantage of the method is that it shows how several independent variables relate to the dependent variable at the same time. Its disadvantages are added complexity and results that can be hard to interpret when the dataset is small.
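The steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the method from the original slides. Feature selection is assumed to be already done. Normalization is assumed to be z-score scaling. The hypothesis is assumed to be linear, h(x) = θ0 + θ1·x1 + ... + θn·xn. The loss is assumed to be mean squared error with the conventional 1/(2m) factor. The loss is minimized with batch gradient descent. Testing means measuring the loss on held-out rows that are scaled with the training statistics. The function names (`normalize`, `apply_normalization`, `predict`, `mse`, `fit`) and the synthetic data are hypothetical.

```python
def normalize(X):
    """Z-score scale each feature column (assumed normalization scheme).

    Returns the scaled rows and a (mean, std) pair per column so the same
    scaling can be reused on test data.
    """
    stats = []
    for j in range(len(X[0])):
        col = [row[j] for row in X]
        mean = sum(col) / len(col)
        # Fall back to 1.0 for a constant column to avoid dividing by zero.
        std = (sum((v - mean) ** 2 for v in col) / len(col)) ** 0.5 or 1.0
        stats.append((mean, std))
    return apply_normalization(X, stats), stats


def apply_normalization(X, stats):
    """Scale rows using previously computed (mean, std) pairs."""
    return [[(row[j] - m) / s for j, (m, s) in enumerate(stats)] for row in X]


def predict(theta, x):
    """Linear hypothesis: theta[0] is the intercept, theta[1:] the weights."""
    return theta[0] + sum(t * v for t, v in zip(theta[1:], x))


def mse(theta, X, y):
    """Mean squared error loss J(theta) = 1/(2m) * sum of squared residuals."""
    return sum((predict(theta, x) - t) ** 2 for x, t in zip(X, y)) / (2 * len(y))


def fit(X, y, lr=0.1, epochs=2000):
    """Minimize the MSE loss with batch gradient descent.

    The parameters start at zero and are updated with the full-batch gradient
    of J(theta).
    """
    m, n = len(y), len(X[0])
    theta = [0.0] * (n + 1)
    for _ in range(epochs):
        errors = [predict(theta, x) - t for x, t in zip(X, y)]
        grad = [sum(errors) / m] + [
            sum(e * x[j] for e, x in zip(errors, X)) / m for j in range(n)
        ]
        theta = [t - lr * g for t, g in zip(theta, grad)]
    return theta


if __name__ == "__main__":
    # Hypothetical noise-free data: y = 3 + 2*x1 - x2.
    X = [[i, (2 * i) % 5] for i in range(20)]
    y = [3 + 2 * a - b for a, b in X]
    X_train, X_test, y_train, y_test = X[:15], X[15:], y[:15], y[15:]

    X_train_s, stats = normalize(X_train)
    theta = fit(X_train_s, y_train)

    # Test the hypothesis on held-out rows scaled with the training statistics.
    X_test_s = apply_normalization(X_test, stats)
    print("test loss:", mse(theta, X_test_s, y_test))
```

Normalizing before gradient descent keeps the features on comparable scales, so a single learning rate works for every parameter. Reusing the training means and standard deviations on the test rows prevents information from the test set from leaking into the fitted model.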