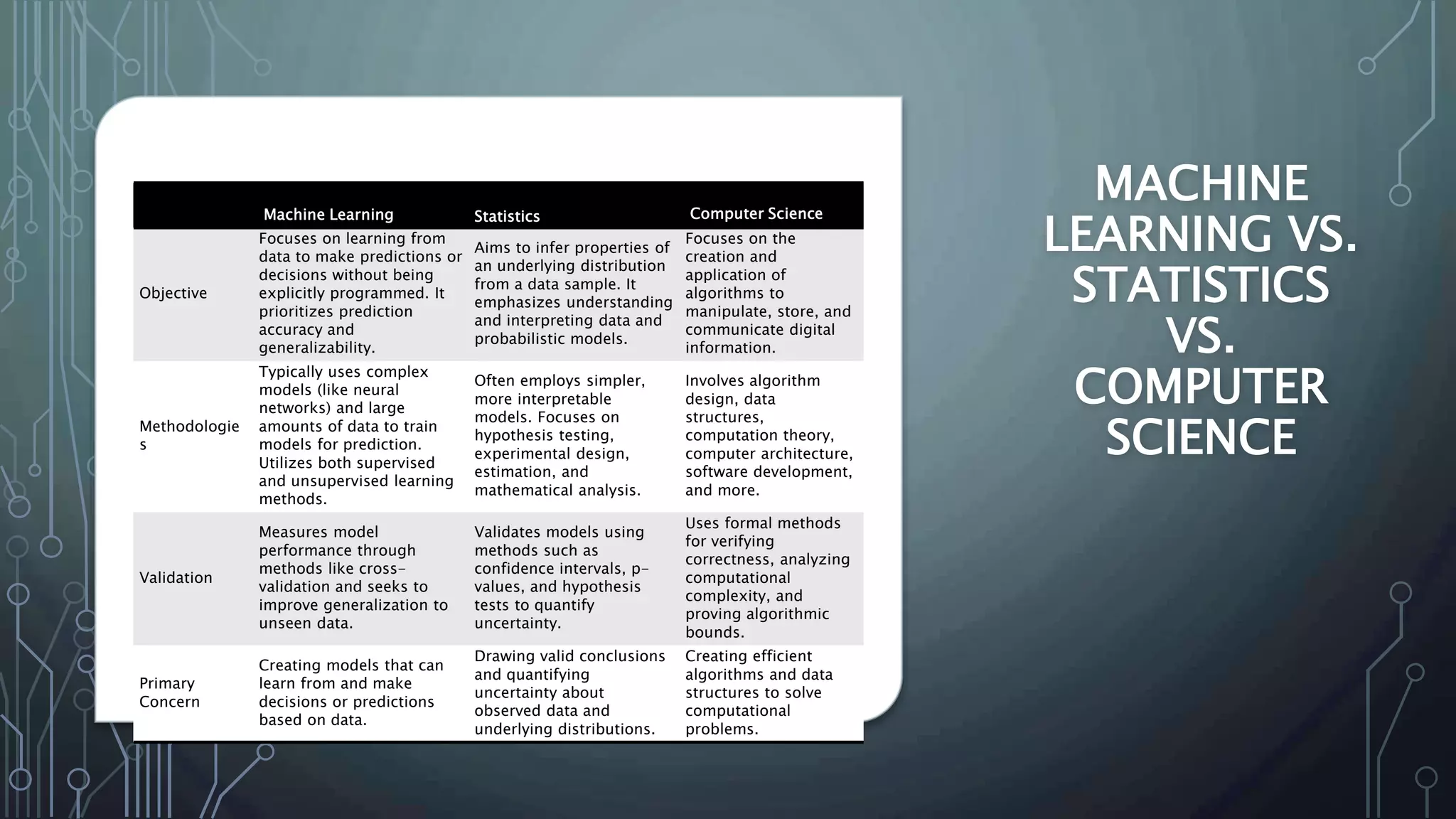

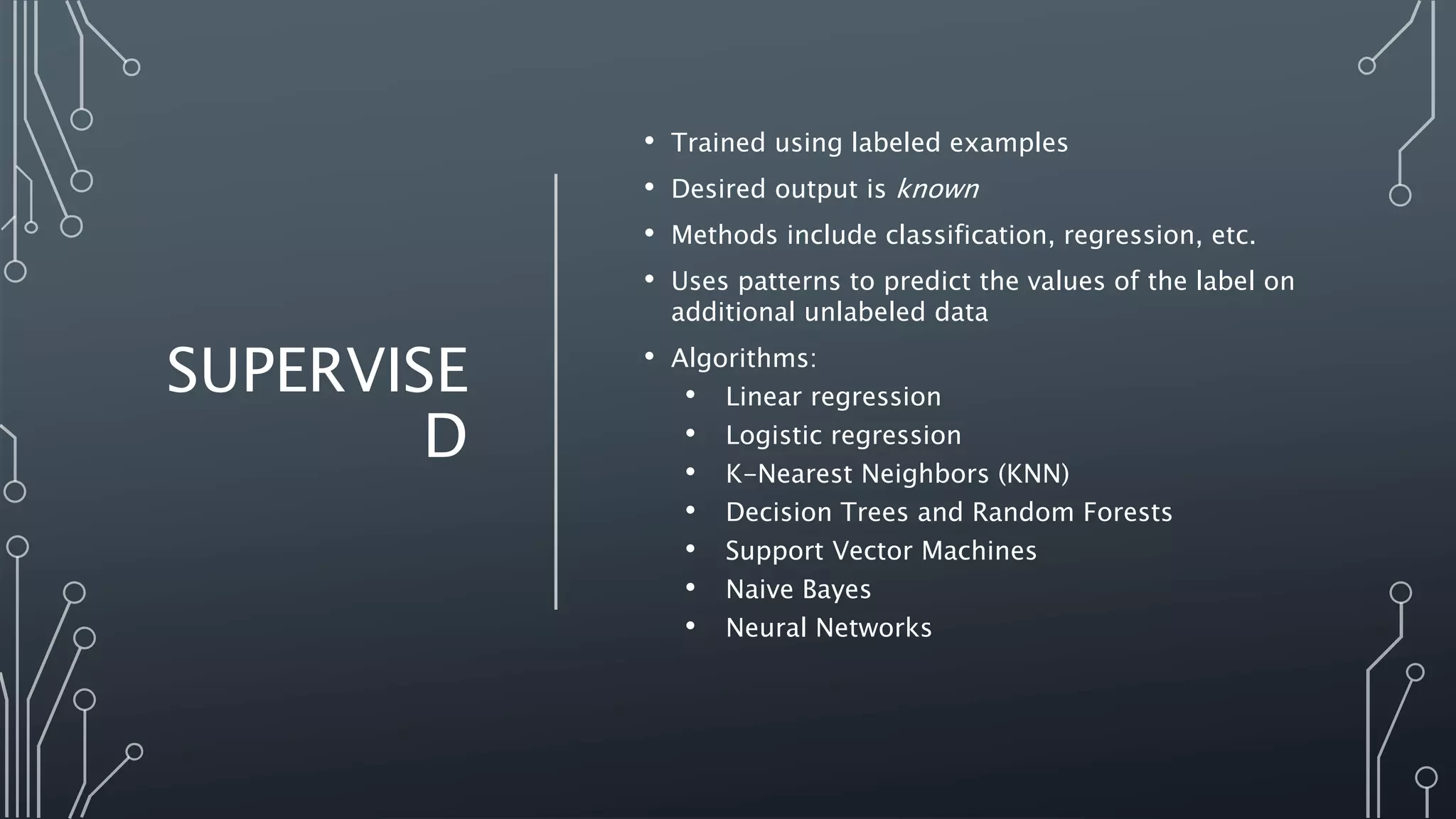

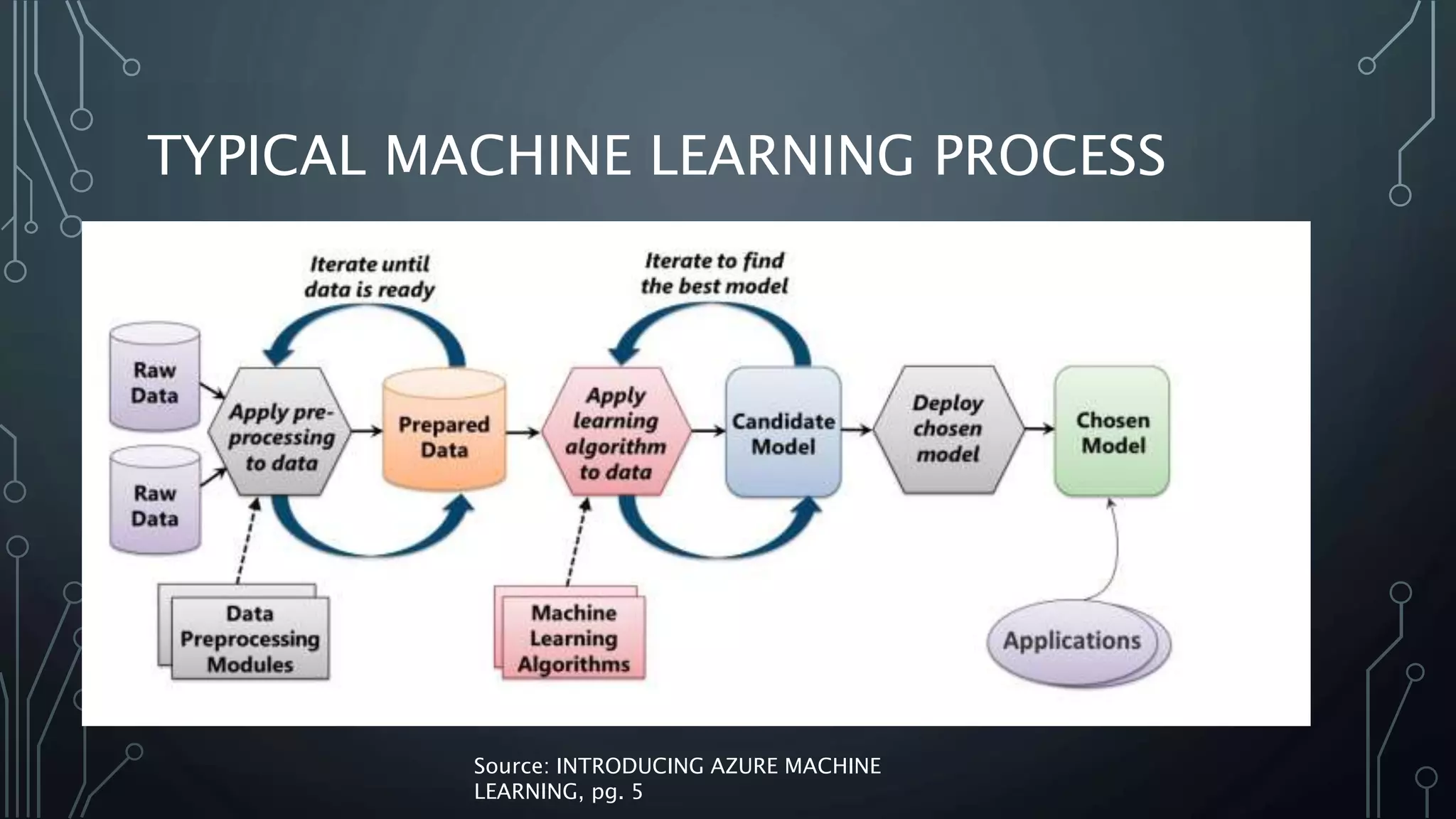

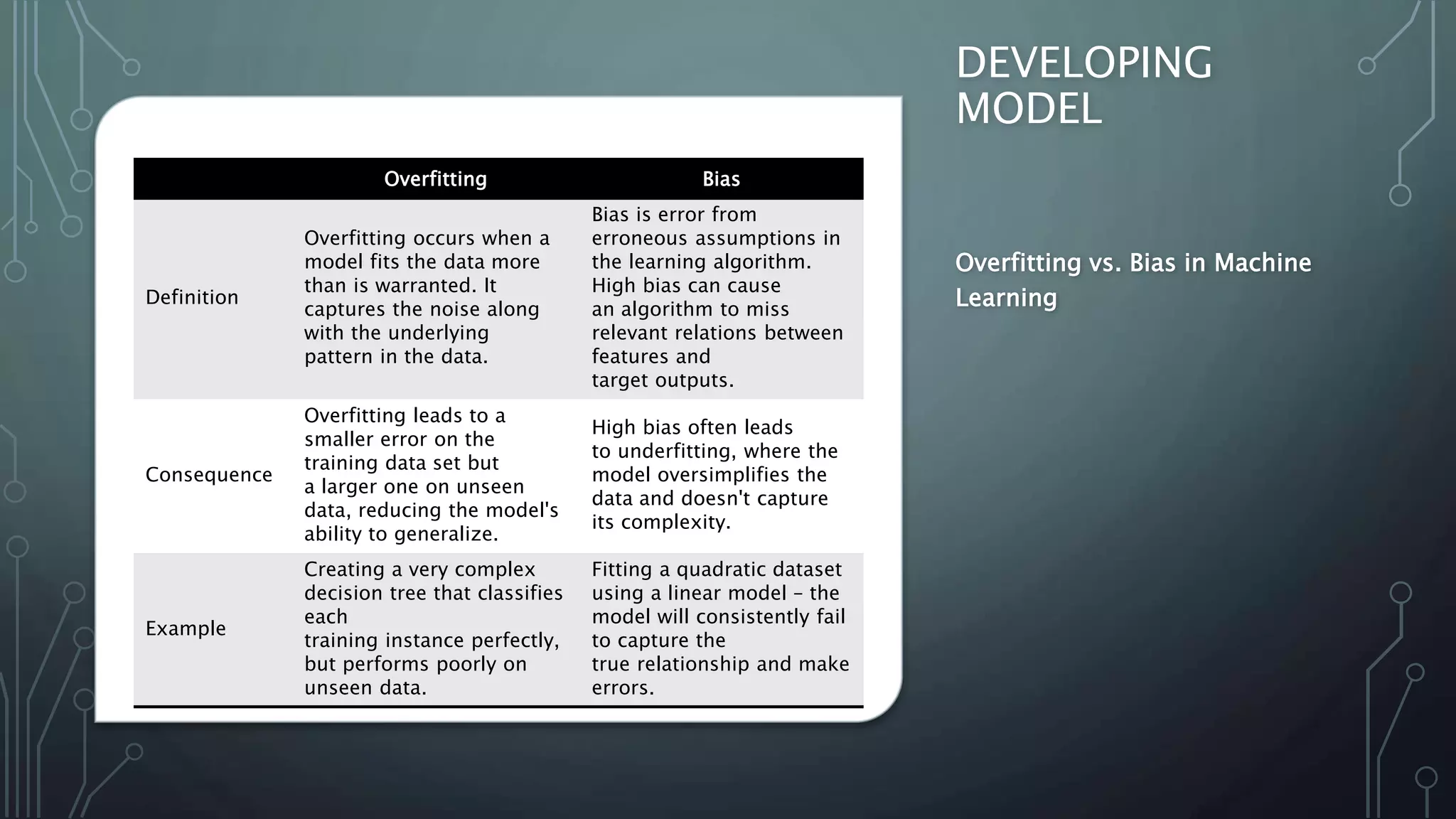

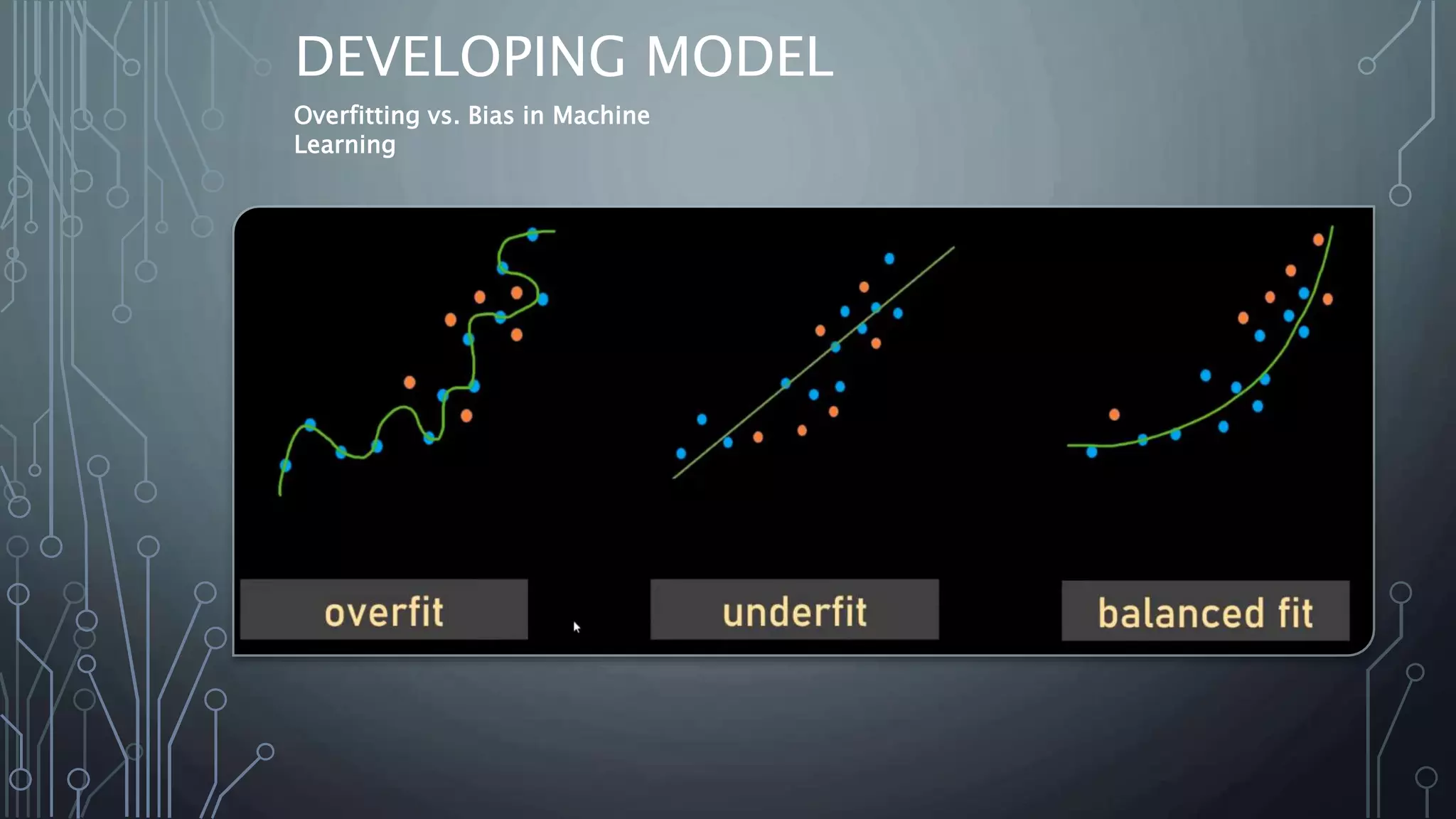

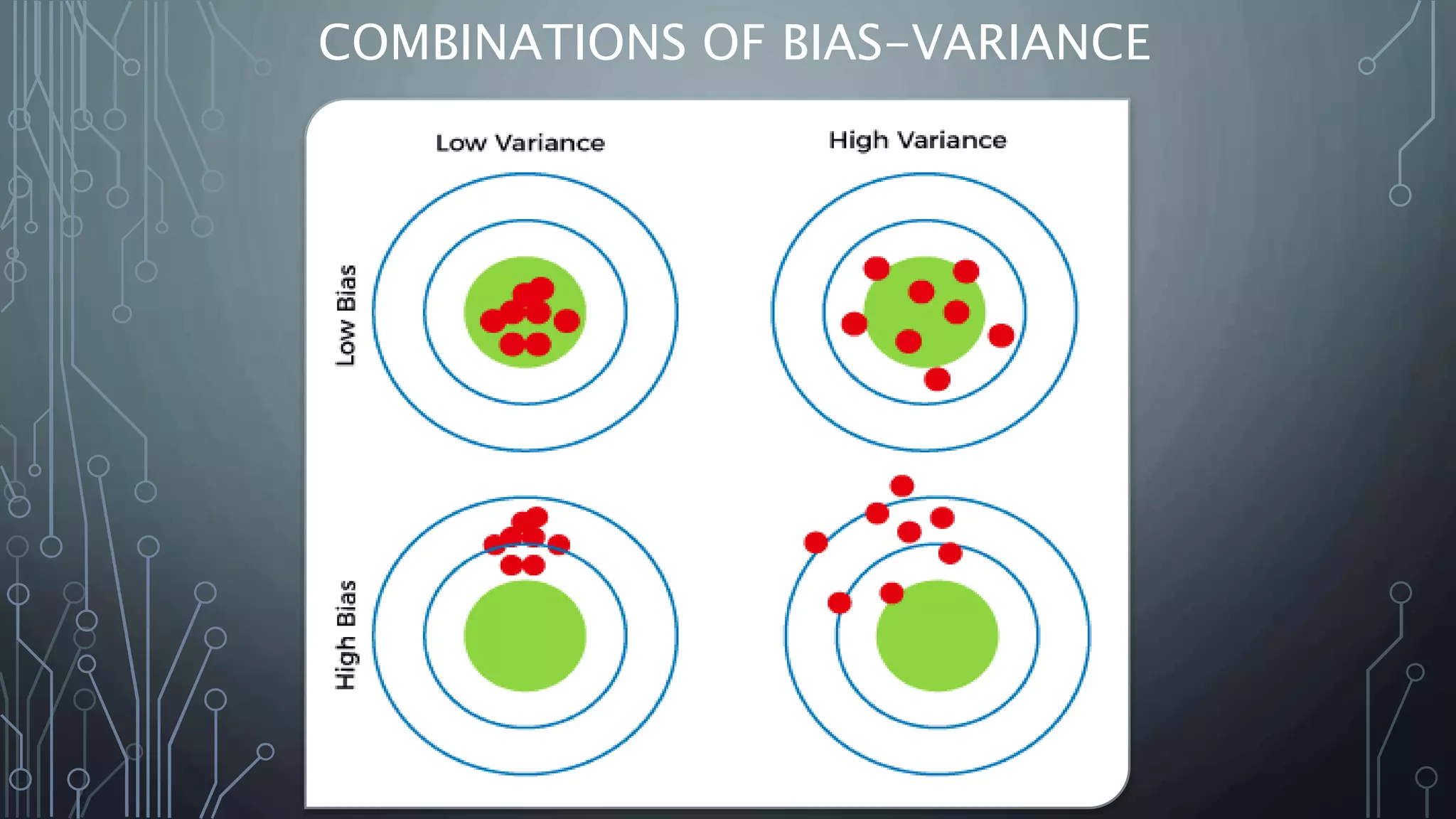

This document introduces machine learning: its definition, the main types of machine learning problems, common algorithms, and the typical machine learning process. It defines machine learning as a type of artificial intelligence that enables computers to learn from data without being explicitly programmed. The three main problem types are supervised learning (classification and regression), unsupervised learning (clustering and association), and reinforcement learning. Common algorithms are discussed alongside examples of their applications. The document concludes with an overview of a typical machine learning process: selecting and preparing data, developing and evaluating models, and interpreting results.
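To make the process described above concrete, here is a minimal sketch of a supervised classification workflow in plain Python: prepare data, hold out a test set, fit a model, and evaluate it. Everything in it is an illustrative assumption rather than material from the document: the synthetic two-cluster data, the choice of a 1-nearest-neighbor classifier, and the helper names `make_toy_data`, `train_test_split`, `predict_1nn`, and `accuracy` are all hypothetical.

```python
import random


def make_toy_data(n_per_class=40, seed=1):
    """Build a synthetic dataset: two Gaussian clusters labeled 'a' and 'b'."""
    rng = random.Random(seed)
    data = []
    for label, center in (("a", (0.0, 0.0)), ("b", (5.0, 5.0))):
        for _ in range(n_per_class):
            point = (rng.gauss(center[0], 1.0), rng.gauss(center[1], 1.0))
            data.append((point, label))
    return data


def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle a copy of the rows and split it into (train, test)."""
    rng = random.Random(seed)
    shuffled = rows[:]
    rng.shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


def predict_1nn(train, features):
    """Return the label of the training example closest to `features`."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    nearest = min(train, key=lambda row: sq_dist(row[0], features))
    return nearest[1]


def accuracy(train, test):
    """Fraction of held-out examples whose predicted label is correct."""
    correct = sum(
        1 for features, label in test if predict_1nn(train, features) == label
    )
    return correct / len(test)


# 1. Select and prepare data (synthetic here, purely for illustration).
data = make_toy_data()
# 2. Hold out part of the data so evaluation uses unseen examples.
train, test = train_test_split(data)
# 3. "Develop" the model (1-NN simply memorizes the training set)
#    and evaluate it on the held-out set.
score = accuracy(train, test)
print(f"held-out accuracy: {score:.2f}")
```

Evaluating on examples the model has not seen during training is the key design choice here; interpreting the resulting score (the final step of the process) depends on the problem and is not shown.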