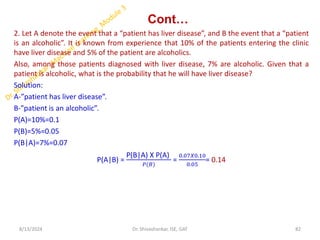

The document outlines a course on machine learning, focusing on regression techniques, algorithms like SVM and ANN, Bayesian methods, and reinforcement learning. It includes detailed explanations of conditional probability, Bayes' theorem, and various examples and problems related to calculating probabilities in different scenarios. Additionally, it discusses maximum likelihood and least-squared error hypotheses related to continuous-valued target functions in machine learning.

![NAÏVE BAYES CLASSIFIER

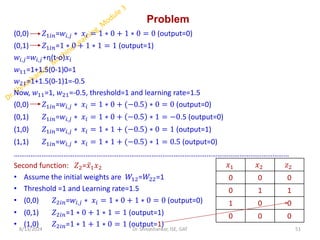

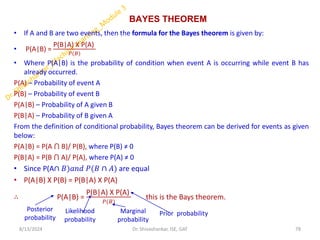

From Bays theorem

P(A|B) =

P(B|A) X P(A)

𝑃(𝐵)

this is the Bays theorem.

Data set

X={𝑥1, 𝑥2, … … … . . 𝑥𝑛) using these features compute output {y} features.

Multiple features

𝑓1, 𝑓2, 𝑓3, 𝑦

𝑥1, 𝑥2, 𝑥3, 𝑦1 --- (1 record)

𝑥1, 𝑥2, 𝑥3, 𝑦2 --- (2 record)

For this kind of data set how (Bayes theorem) equation changes

To compute these features in y using Bayes theorem

P(y|𝑥1, 𝑥2, 𝑥3,….. 𝑥𝑛) =

(P(𝑥1 𝑦 ∗P(𝑥2|𝑦) ∗ P(𝑥3|𝑦),……..∗ P(𝑥𝑛|𝑦)∗P(y))

𝑃 𝑥1 𝑃 𝑥2 𝑃 𝑥3 ,………….𝑃(𝑥𝑛)

=

𝑃 𝑌 ∗ς1=1

𝑛 𝑃(𝑥𝑖|𝑦)

𝑃 𝑥1 𝑃 𝑥2 𝑃 𝑥3 ,………….𝑃(𝑥𝑛)

P(y|𝑥1, 𝑥2, 𝑥3,….. 𝑥𝑛) 𝛼 𝑃 𝑌 ∗ ς1=1

𝑛

𝑃 𝑥𝑖 𝑦

Y= argmax

𝑦

[𝑃 𝑌 ∗ ς1=1

𝑛

𝑃 𝑥𝑖 𝑦 ]

8/13/2024 21

Dr. Shivashankar, ISE, GAT](https://image.slidesharecdn.com/module3machinelearning-240813084355-23ea13c2/85/Module-3_Machine-Learning-Bayesian-Learn-21-320.jpg)

![NAÏVE BAYES CLASSIFIER

From Bays theorem

P(A|B) =

P(B|A) X P(A)

𝑃(𝐵)

this is the Bays theorem.

Data set

X={𝑥1, 𝑥2, … … … . . 𝑥𝑛) using these features compute output {y} features.

Multiple features

𝑓1, 𝑓2, 𝑓3, 𝑦

𝑥1, 𝑥2, 𝑥3, 𝑦1 --- (1 record)

𝑥1, 𝑥2, 𝑥3, 𝑦2 --- (2 record)

For this kind of data set how (Bayes theorem) equation changes

To compute these features in y using Bayes theorem

P(y|𝑥1, 𝑥2, 𝑥3,….. 𝑥𝑛) =

(P(𝑥1 𝑦 ∗P(𝑥2|𝑦) ∗ P(𝑥3|𝑦),……..∗ P(𝑥𝑛|𝑦)∗P(y))

𝑃 𝑥1 𝑃 𝑥2 𝑃 𝑥3 ,………….𝑃(𝑥𝑛)

=

𝑃 𝑌 ∗ς1=1

𝑛 𝑃(𝑥𝑖|𝑦)

𝑃 𝑥1 𝑃 𝑥2 𝑃 𝑥3 ,………….𝑃(𝑥𝑛)

P(y|𝑥1, 𝑥2, 𝑥3,….. 𝑥𝑛) 𝛼 𝑃 𝑌 ∗ ς1=1

𝑛

𝑃 𝑥𝑖 𝑦

Y= argmax

𝑦

[𝑃 𝑌 ∗ ς1=1

𝑛

𝑃 𝑥𝑖 𝑦 ]

8/13/2024 89

Dr. Shivashankar, ISE, GAT](https://image.slidesharecdn.com/module3machinelearning-240813084355-23ea13c2/85/Module-3_Machine-Learning-Bayesian-Learn-89-320.jpg)