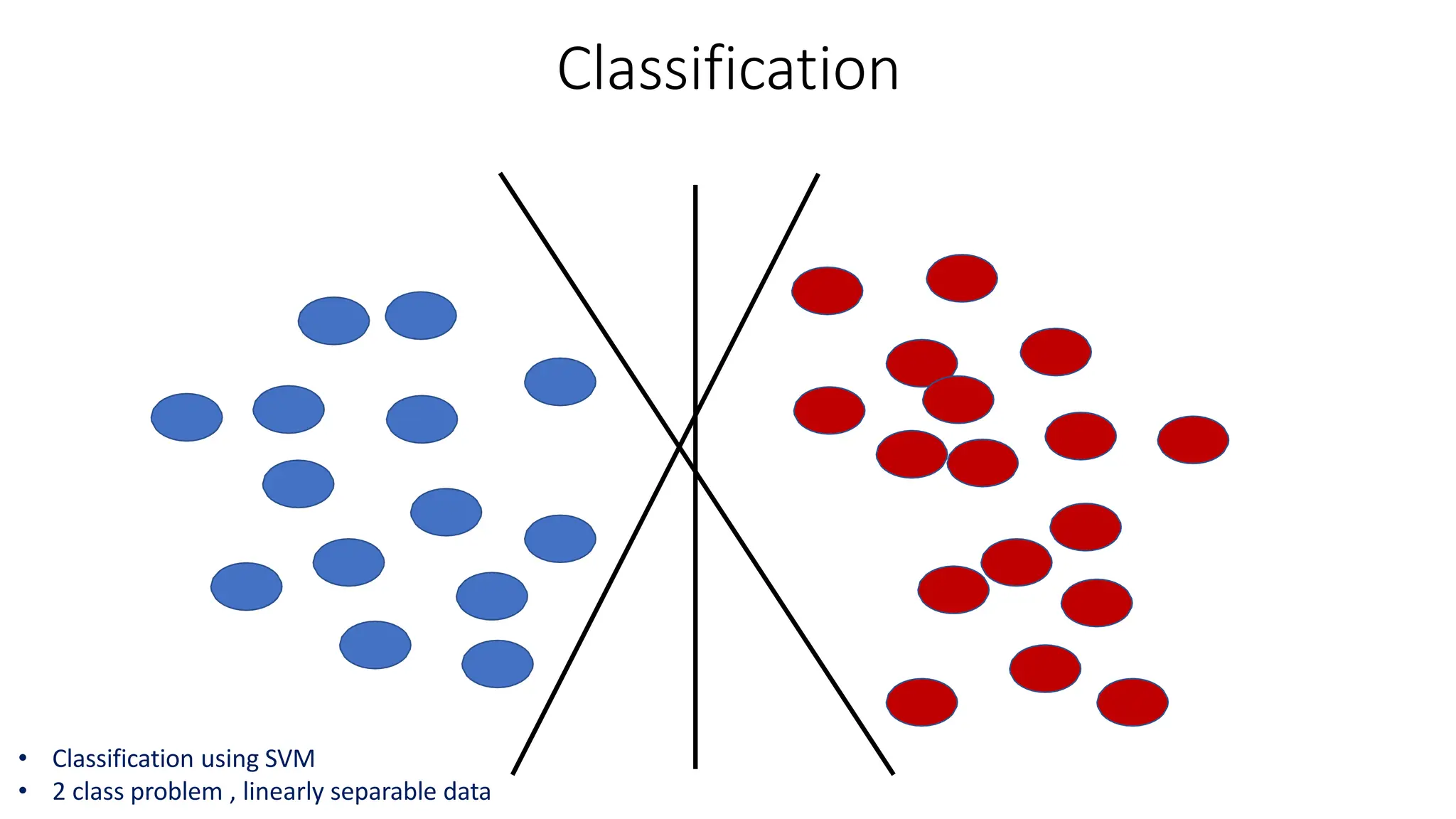

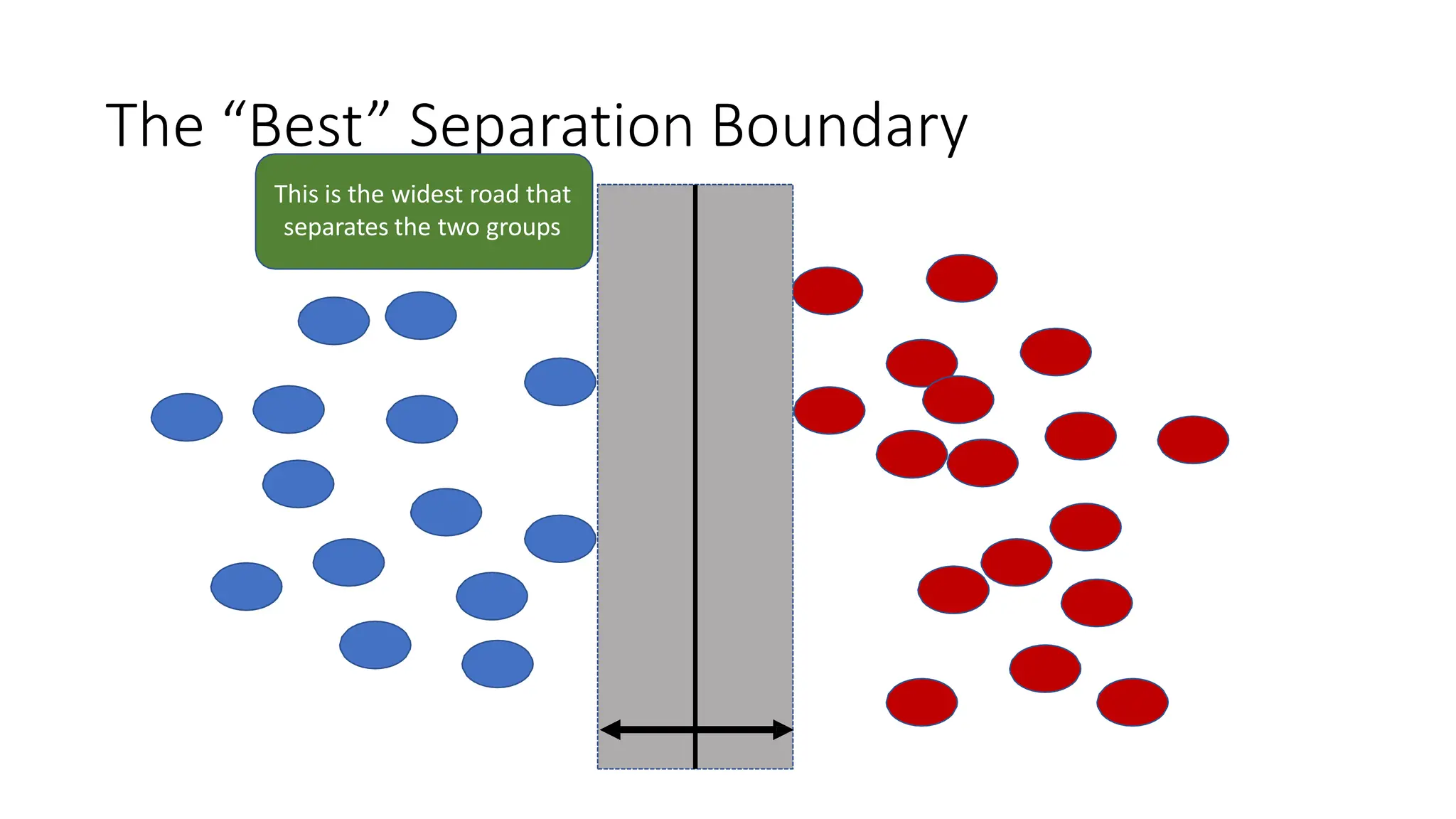

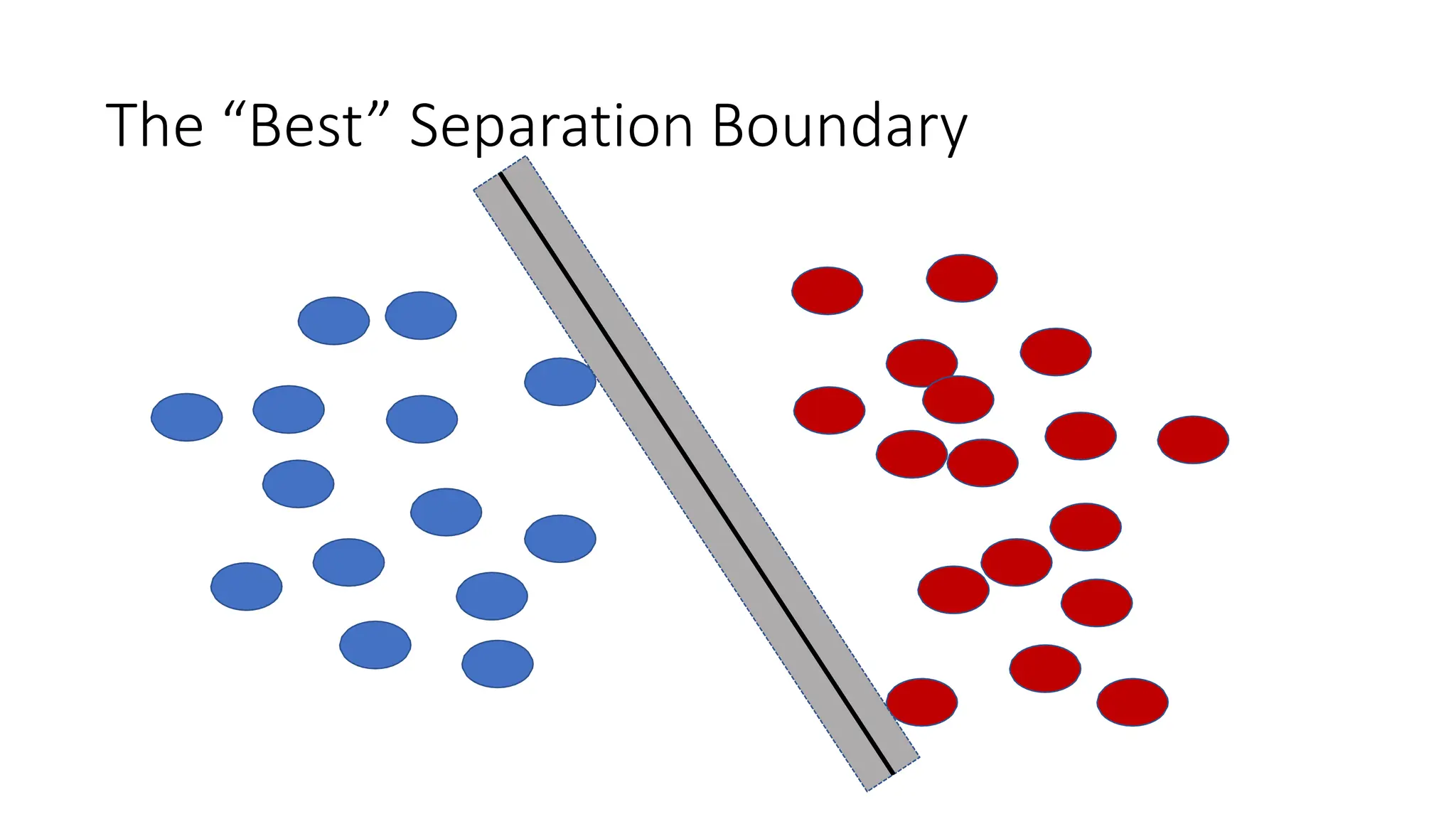

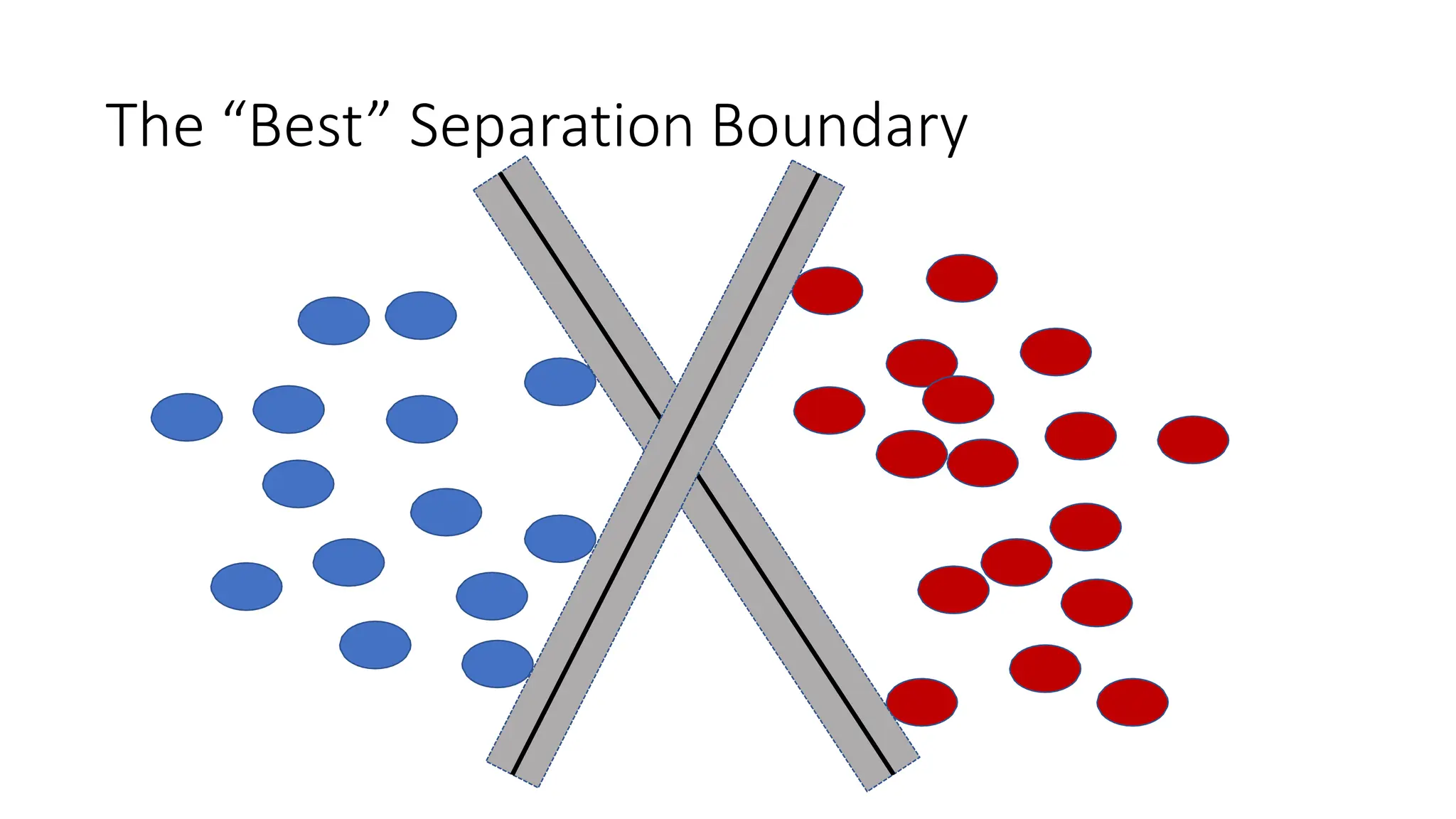

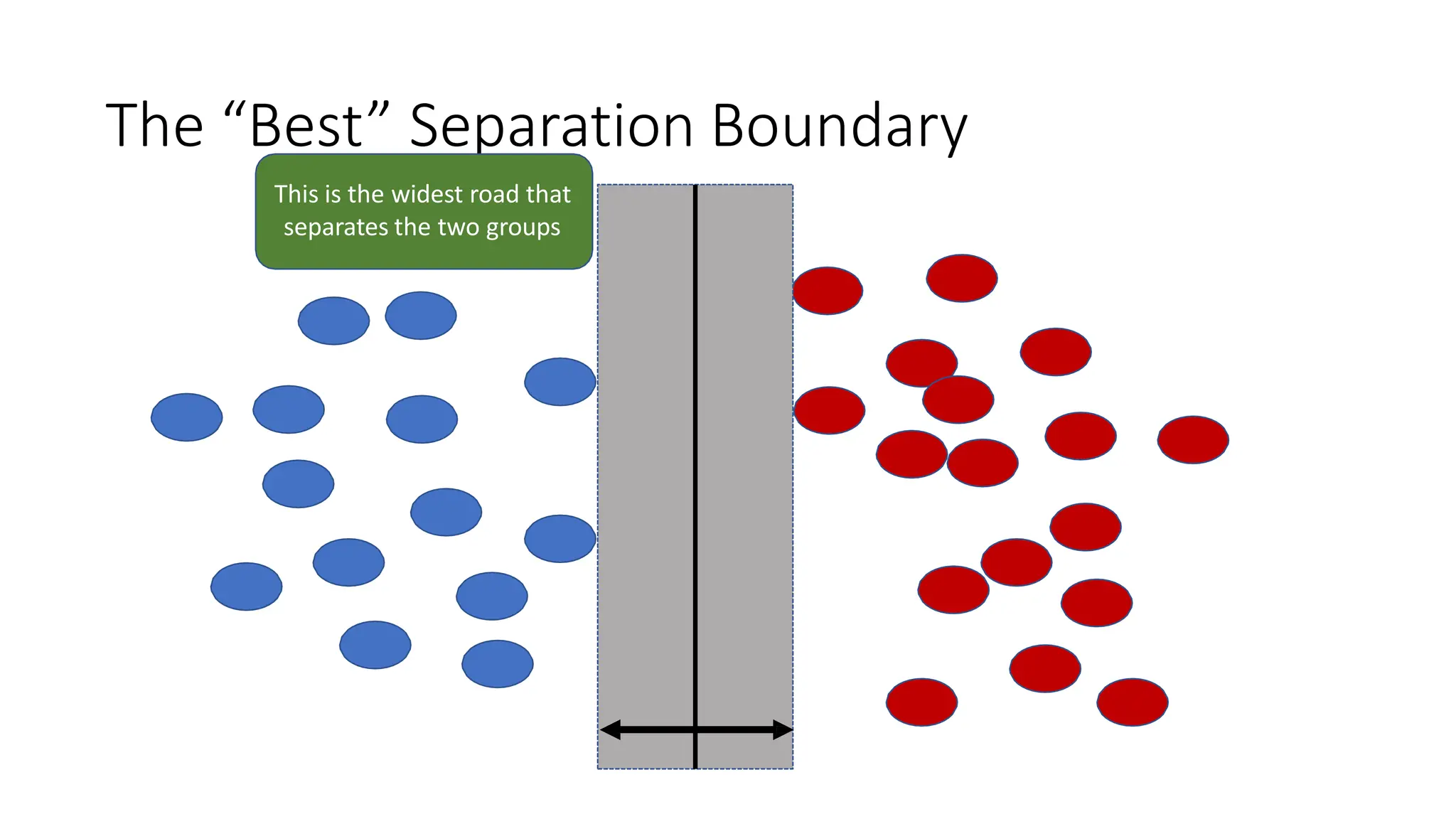

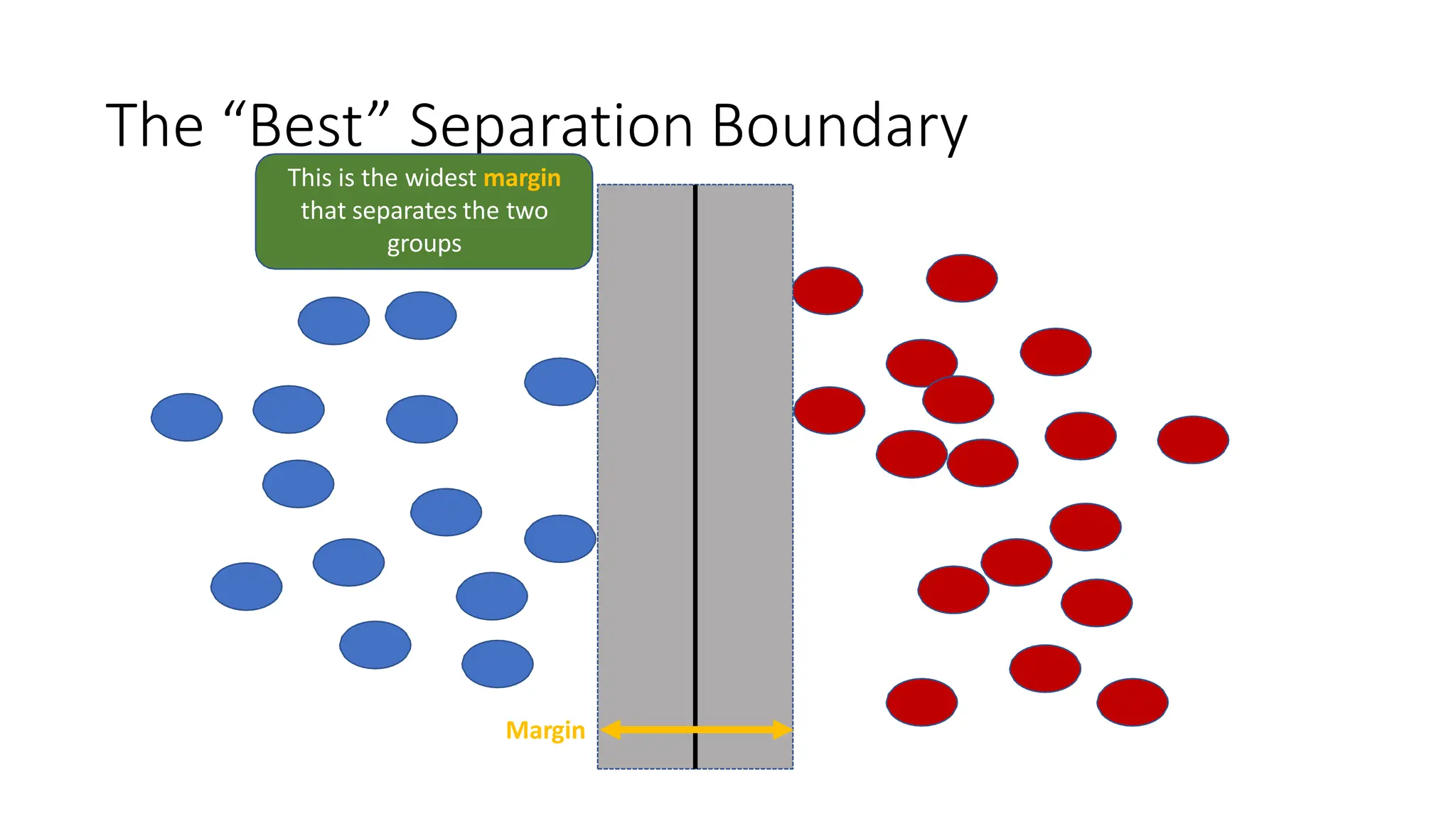

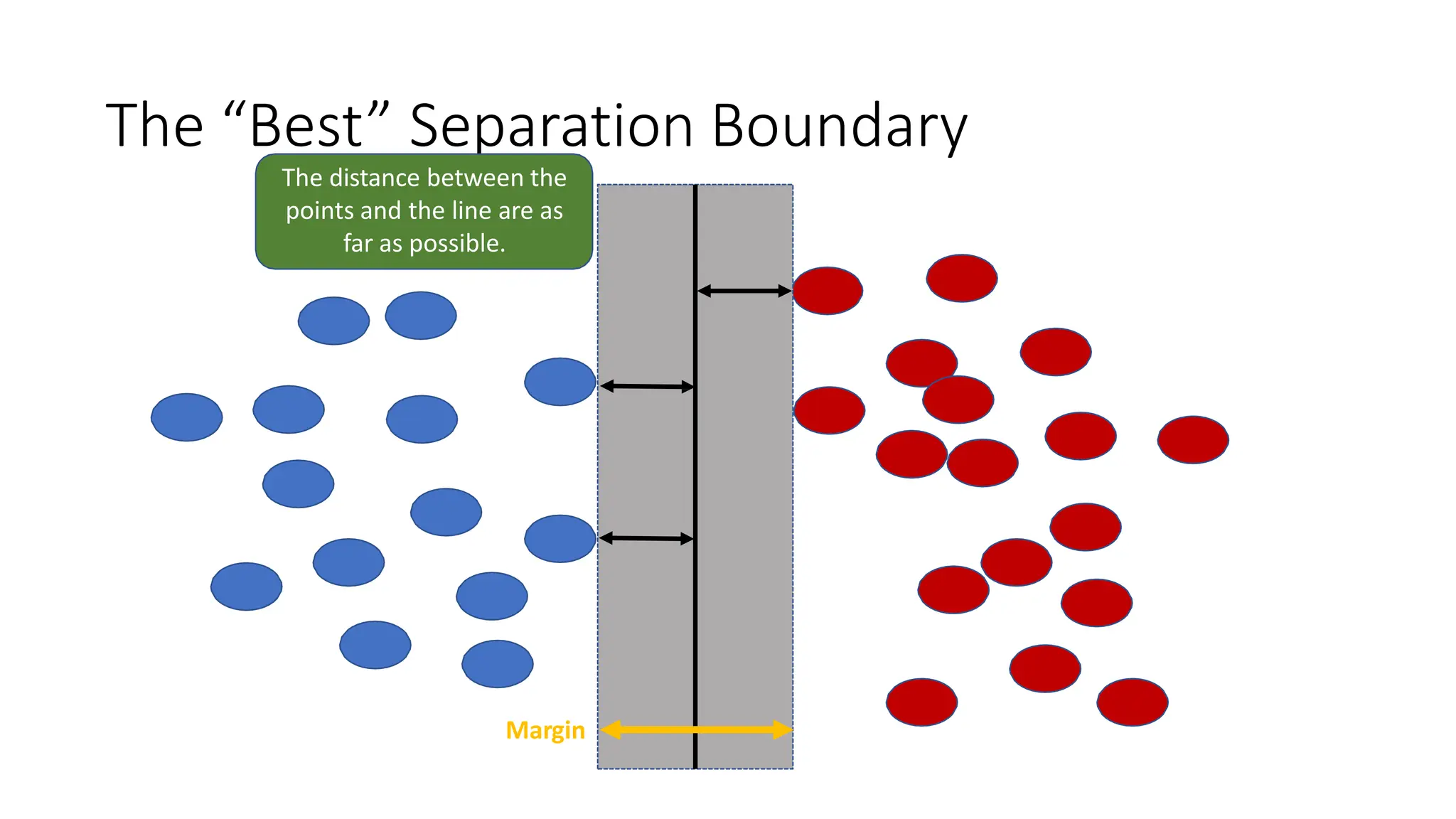

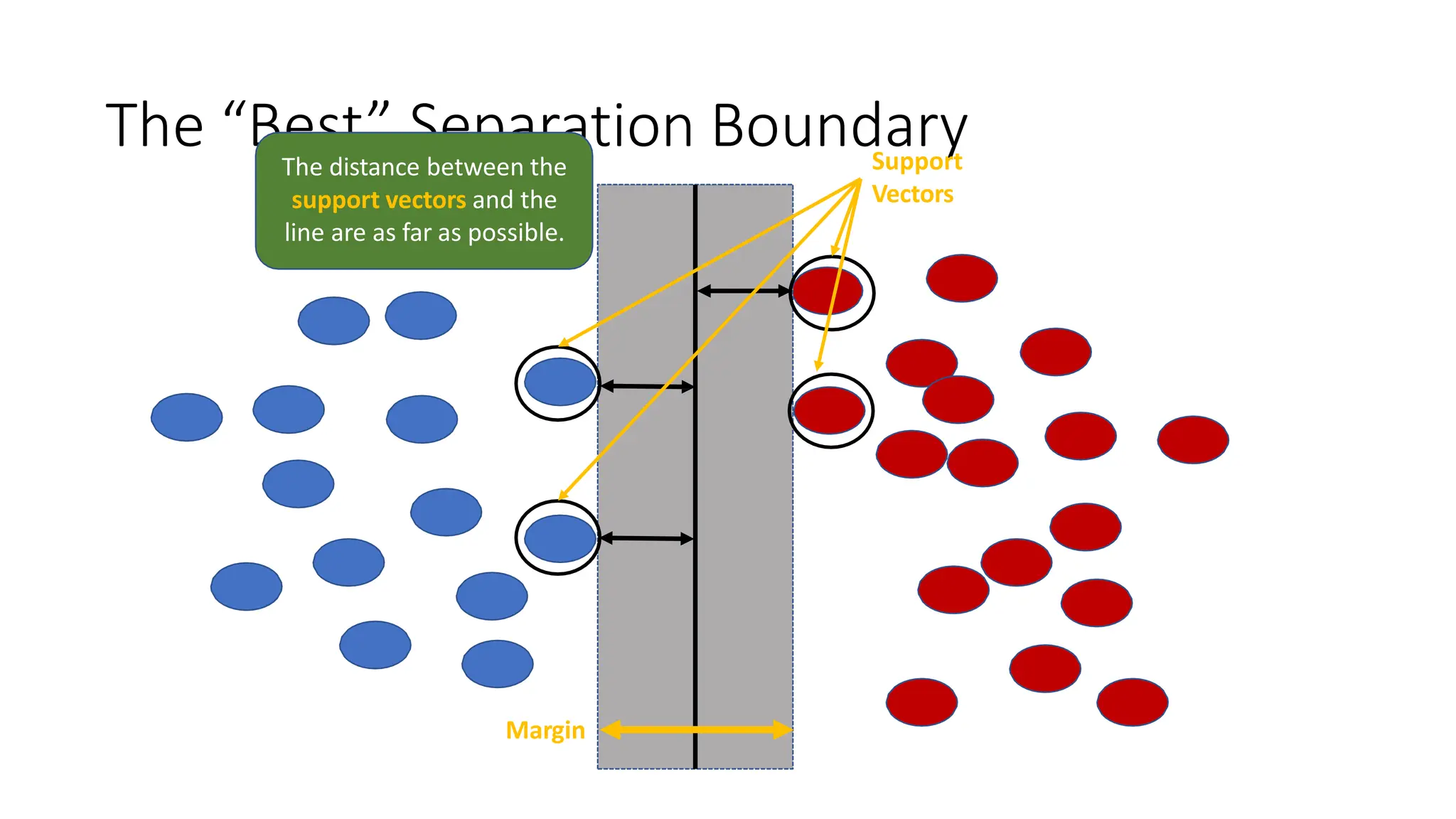

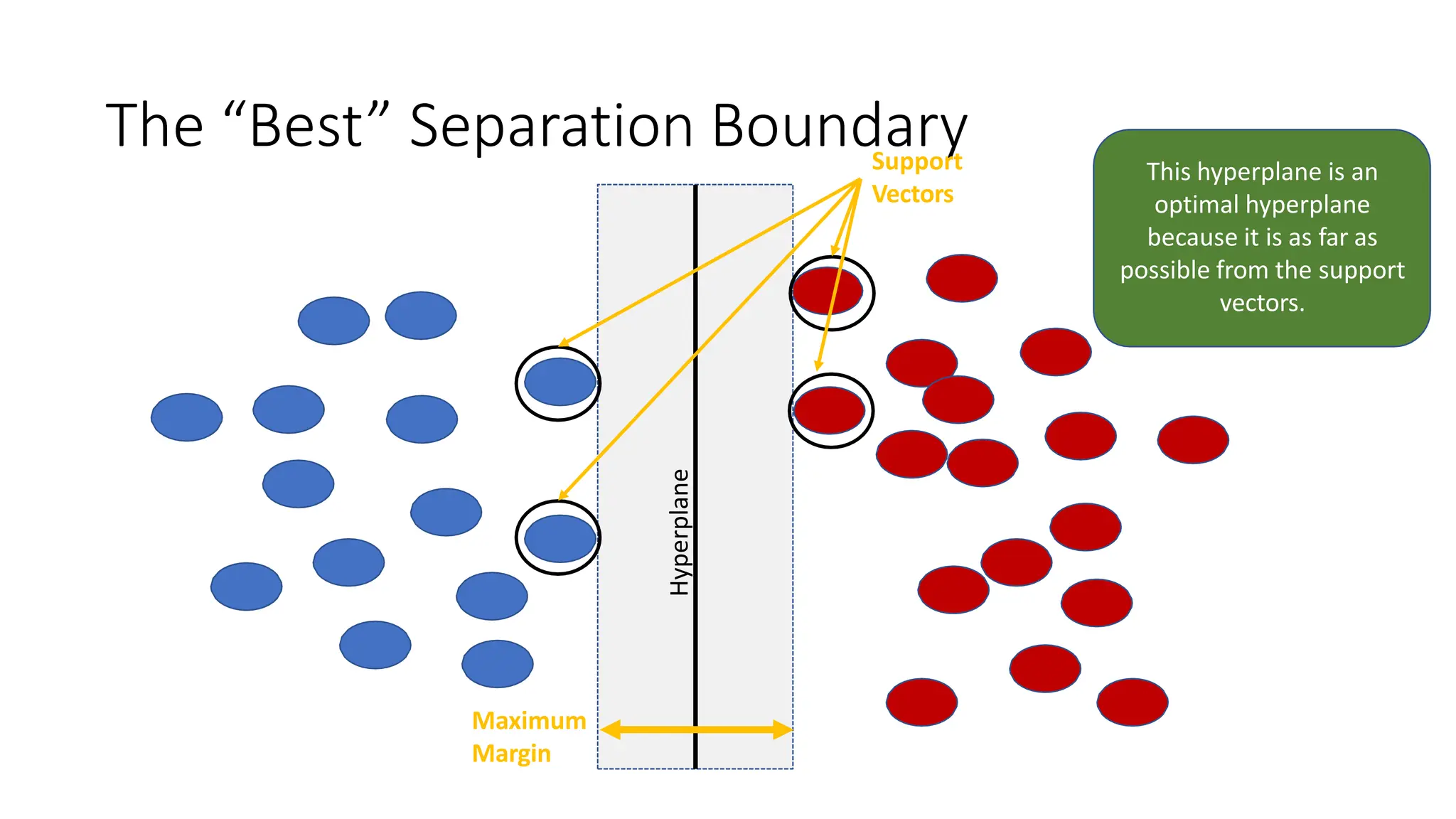

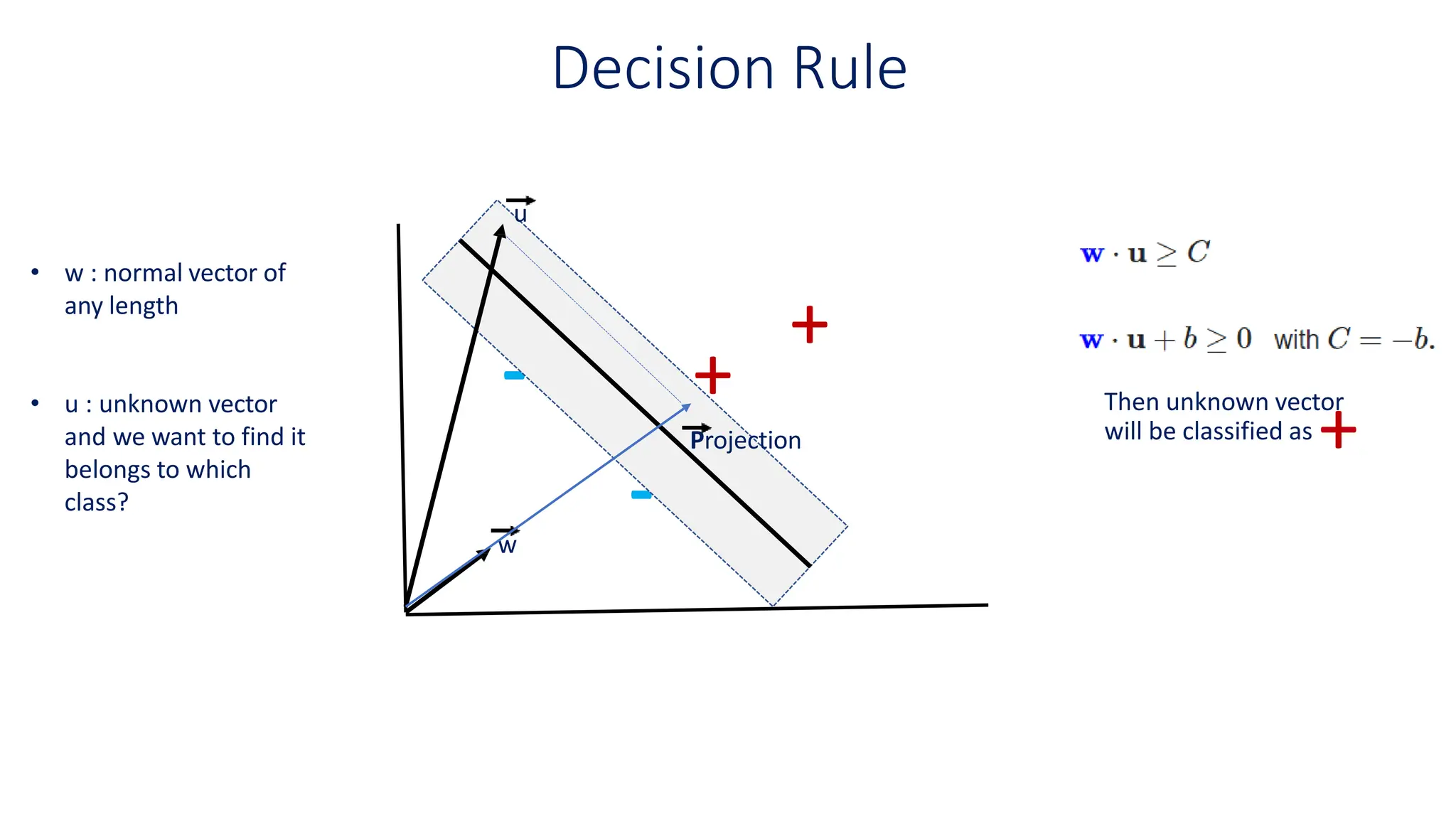

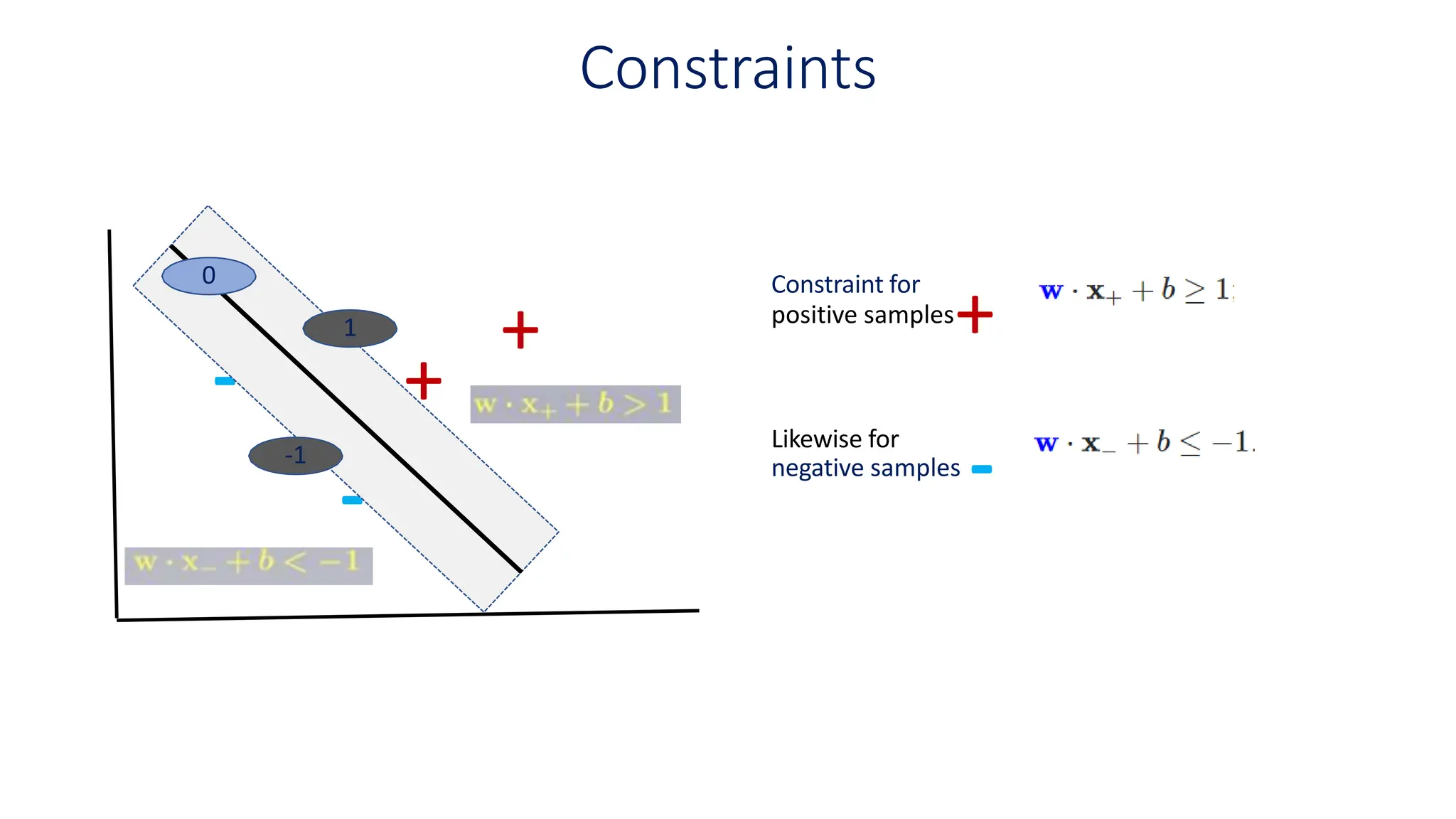

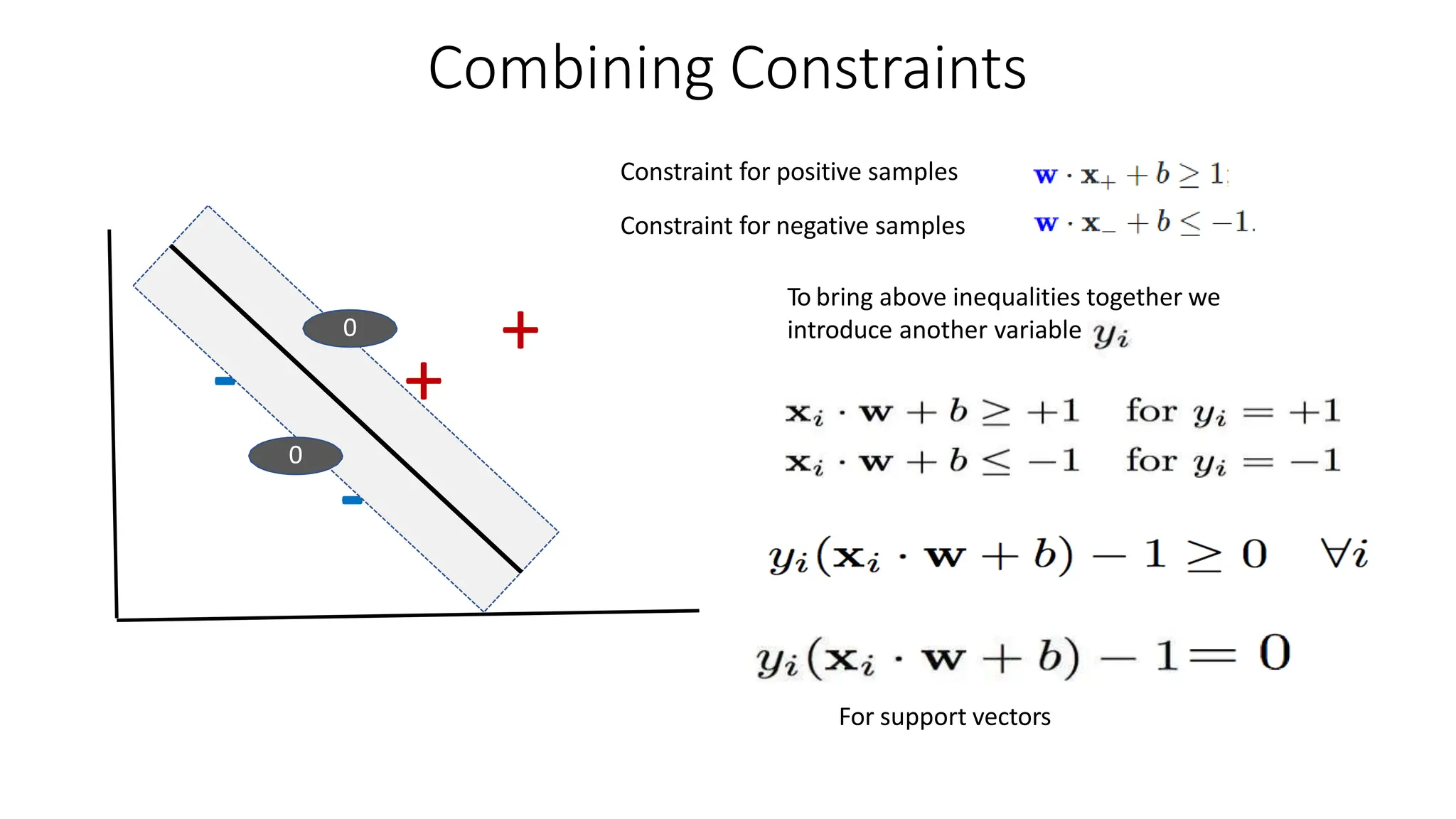

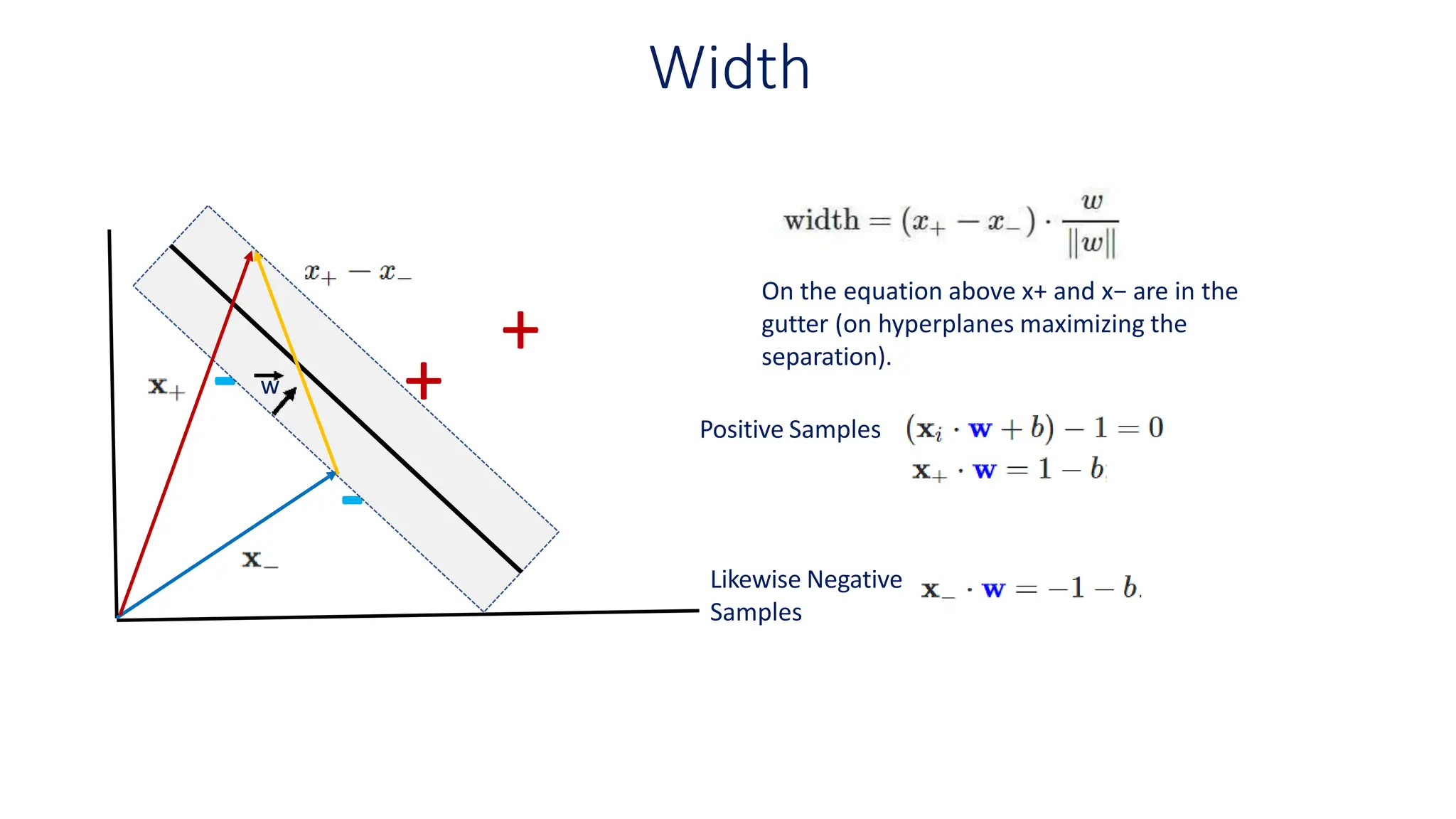

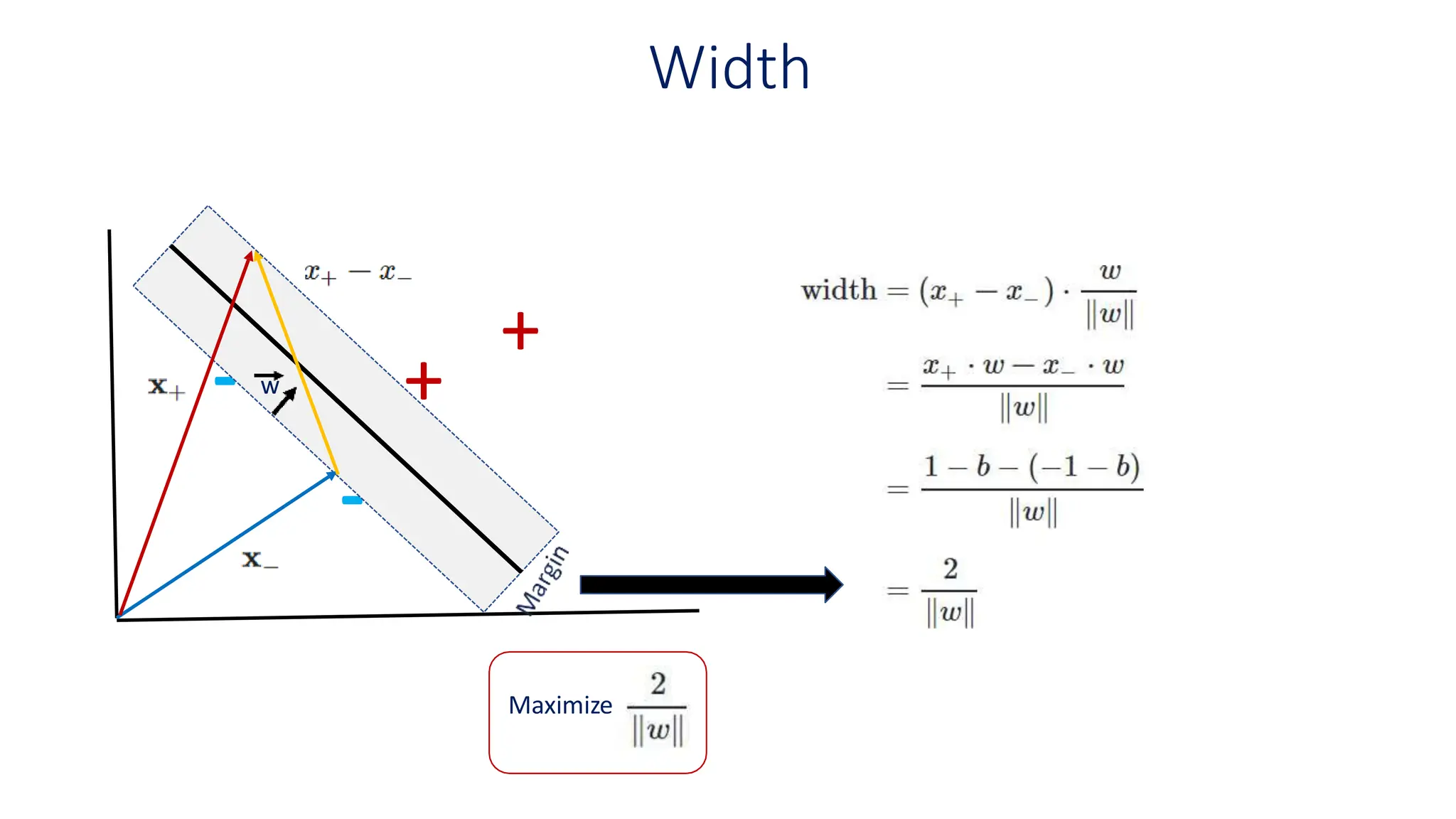

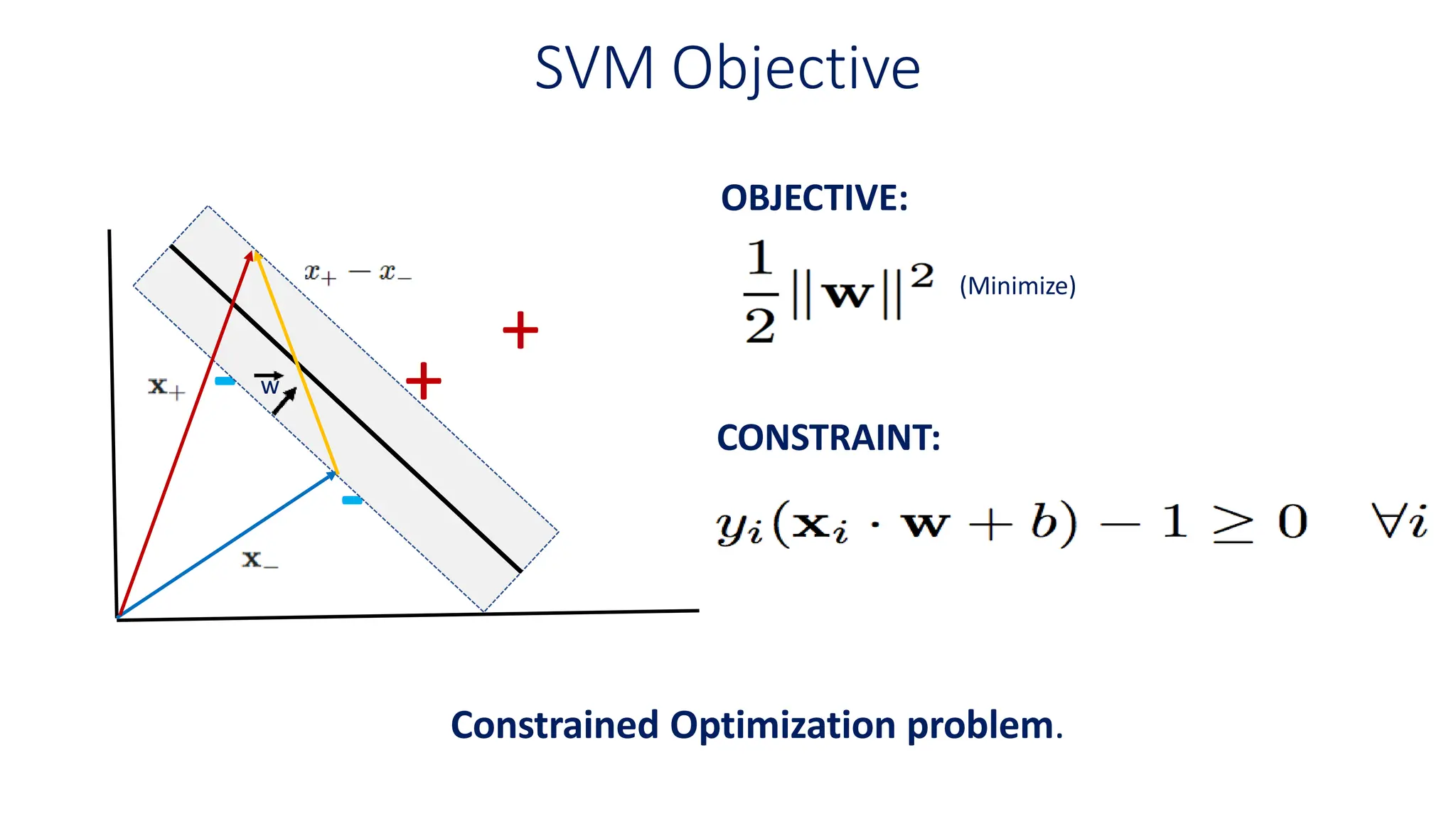

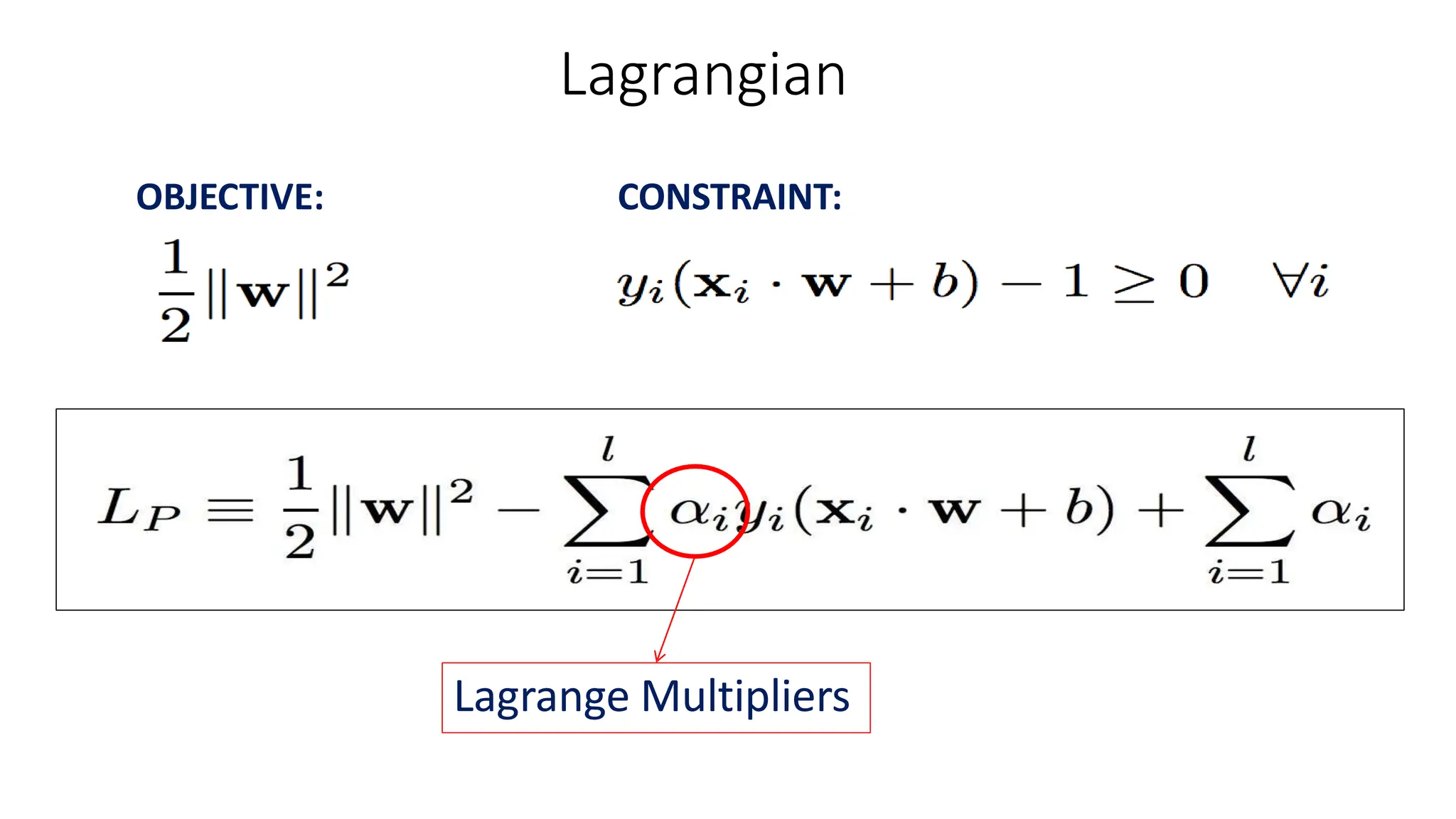

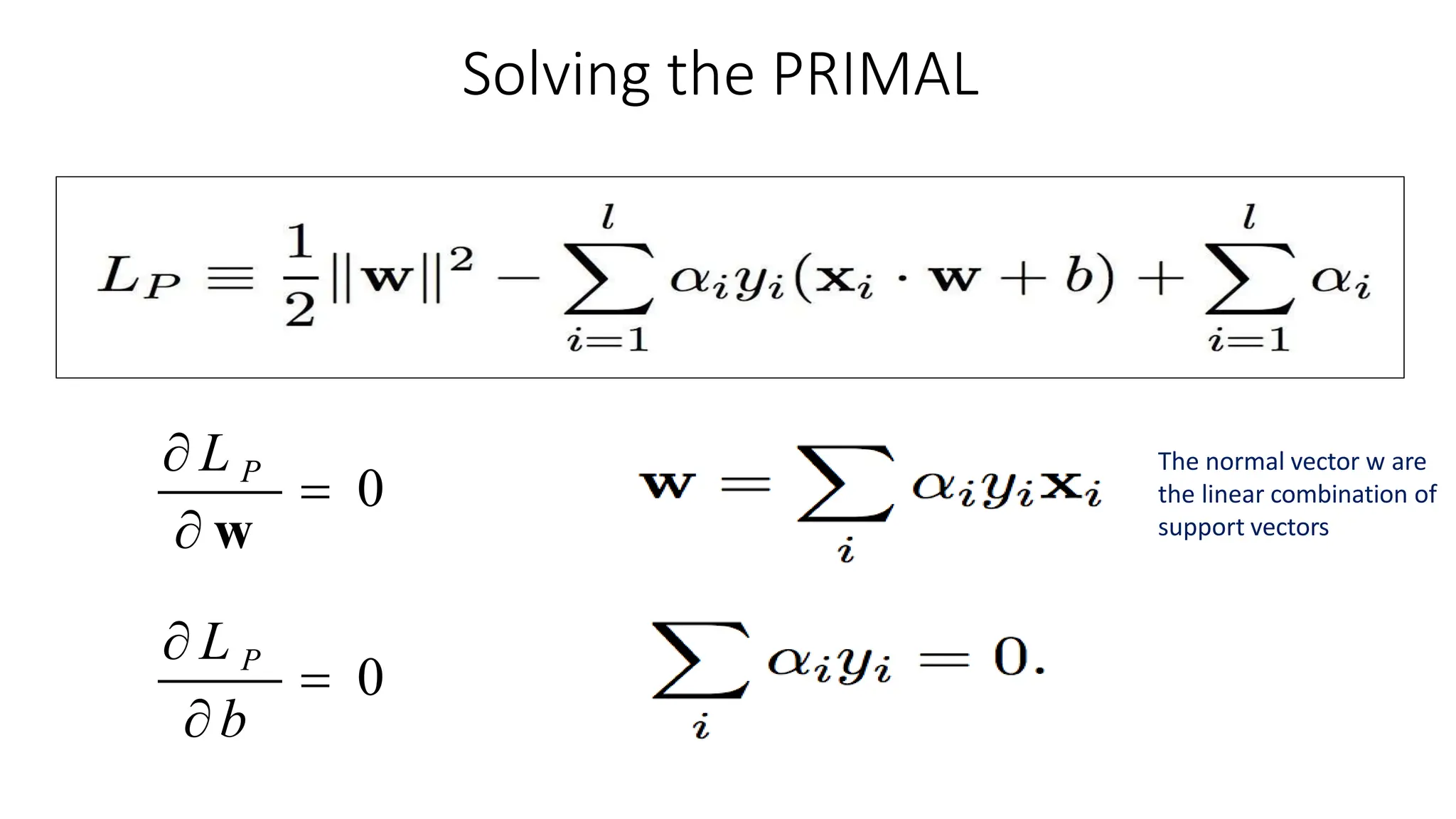

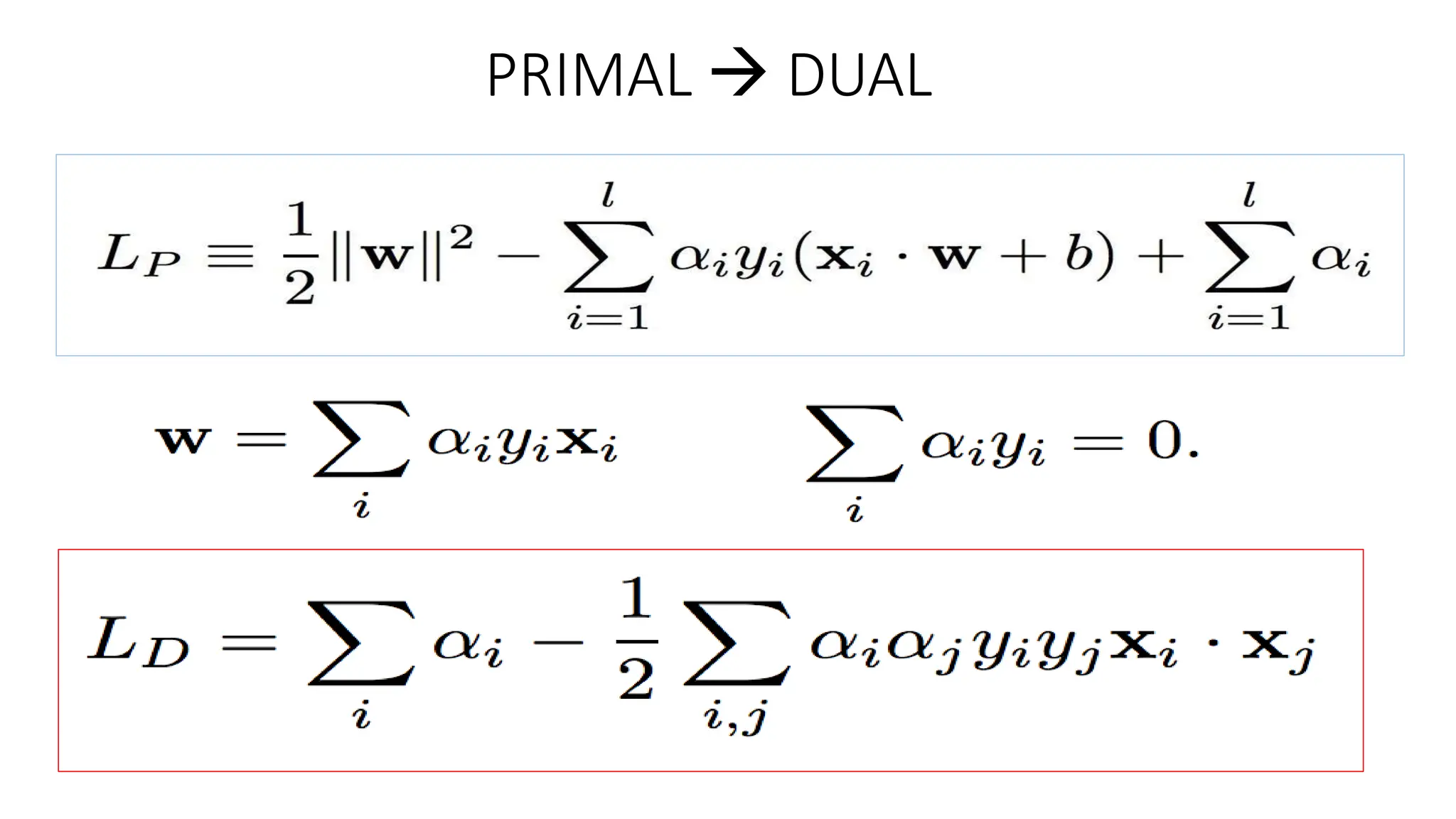

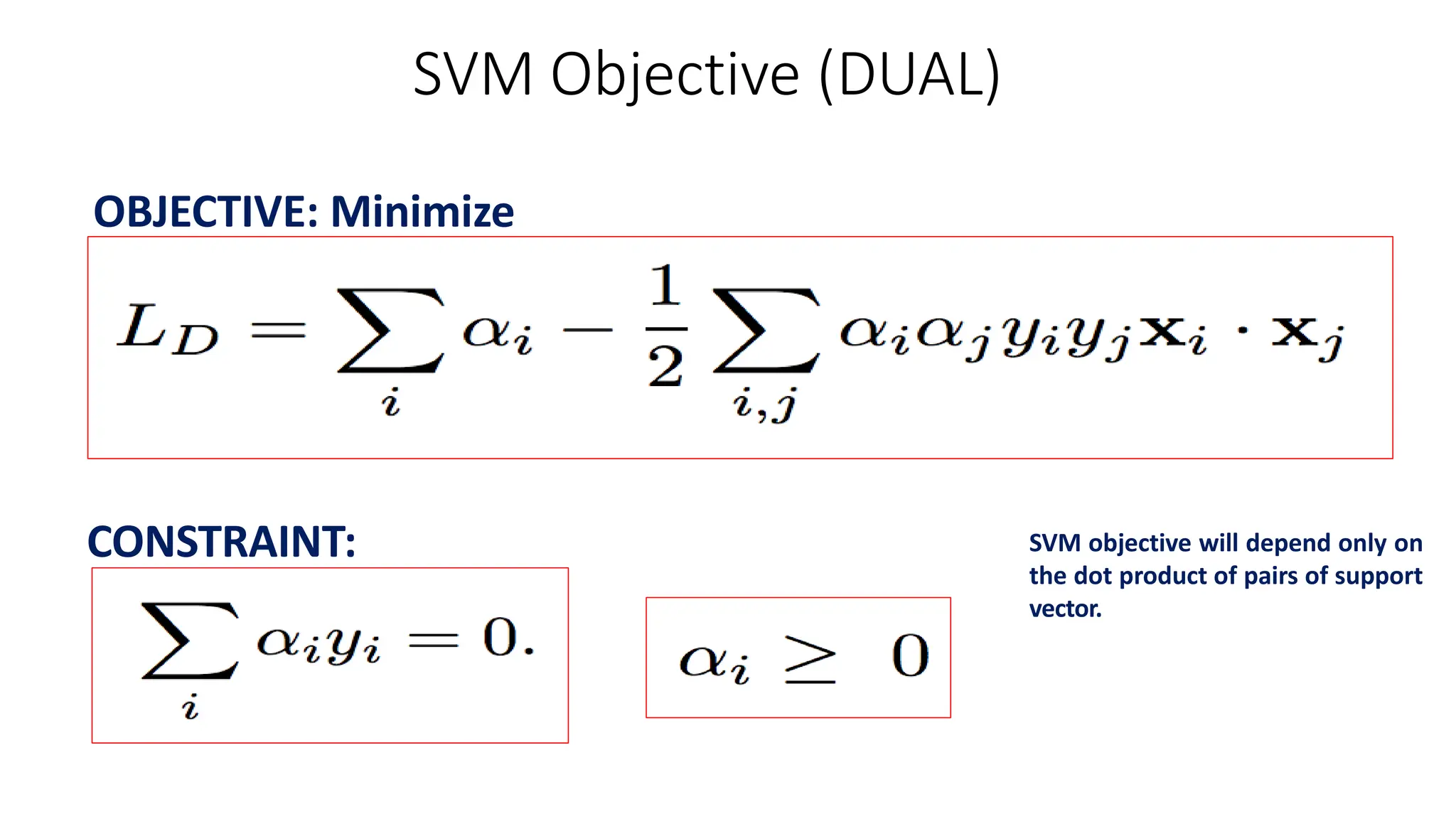

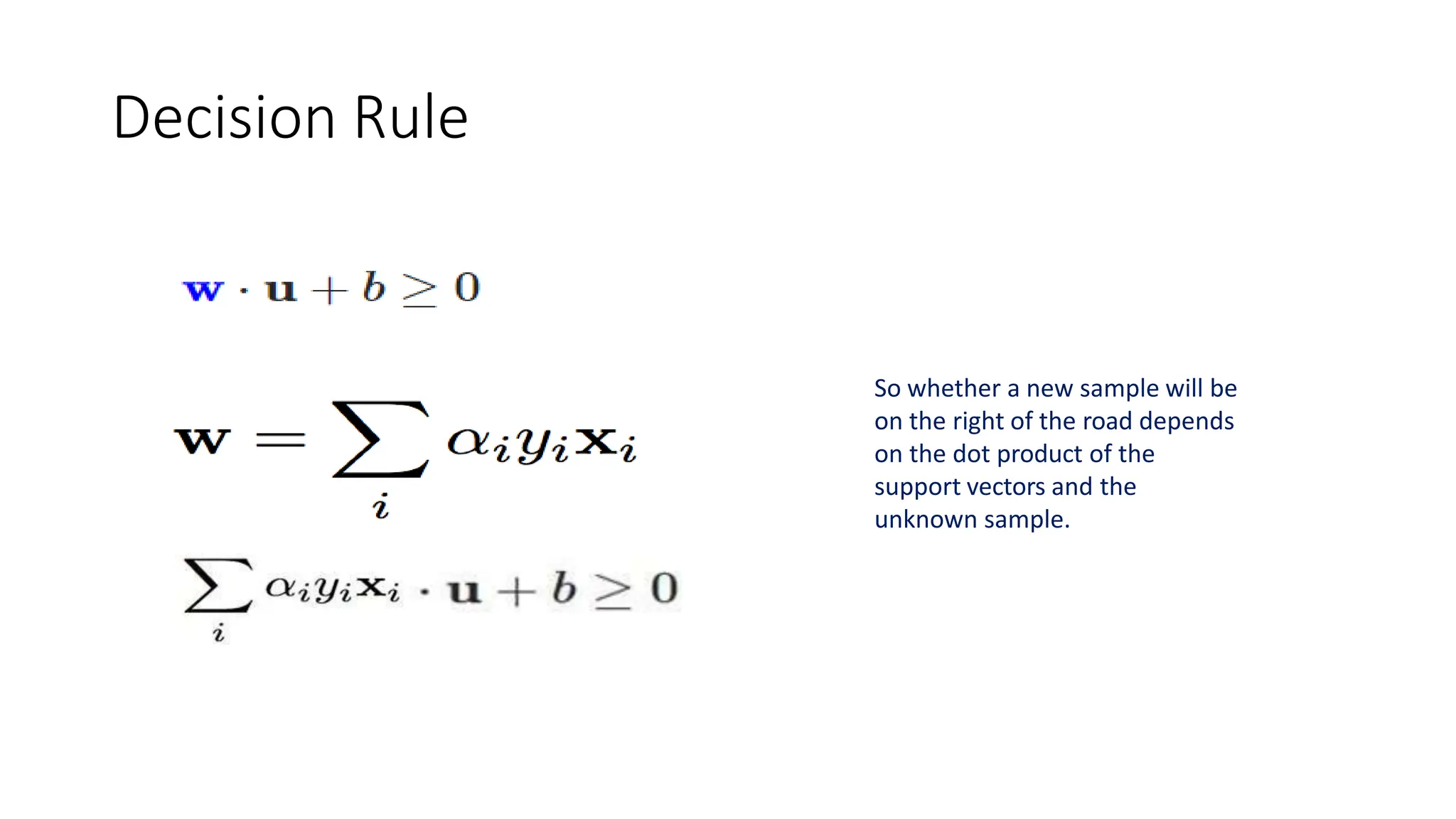

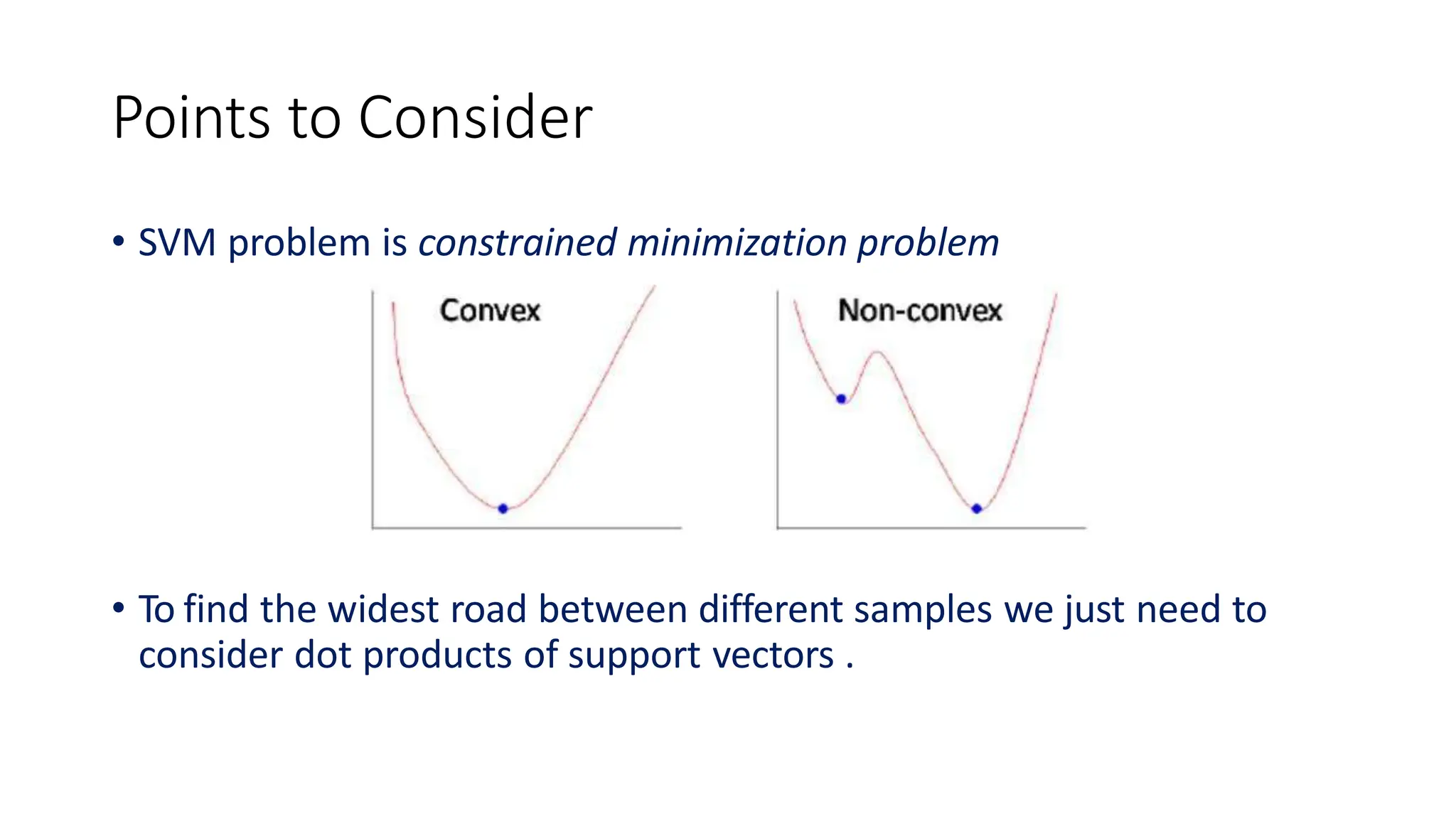

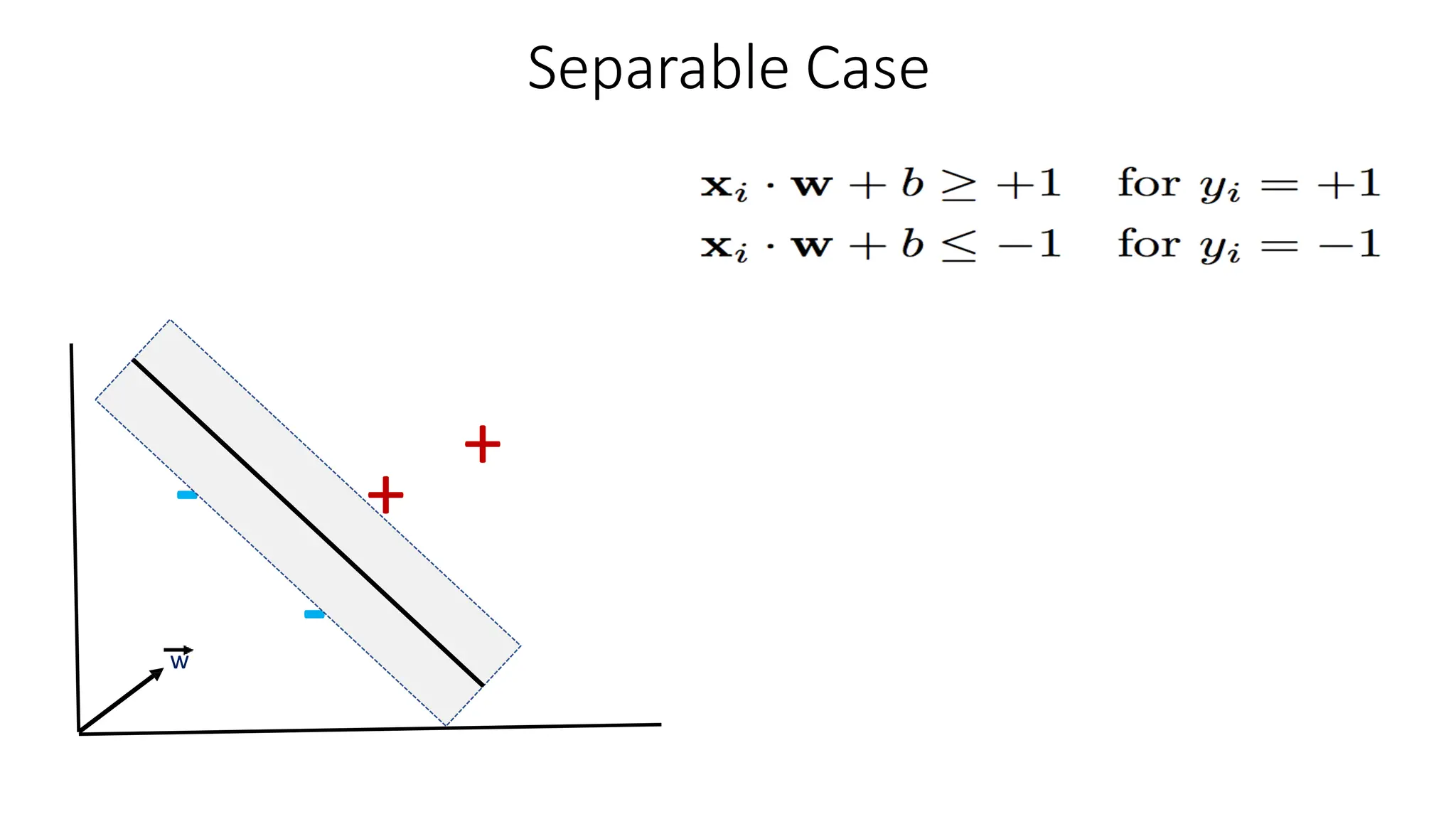

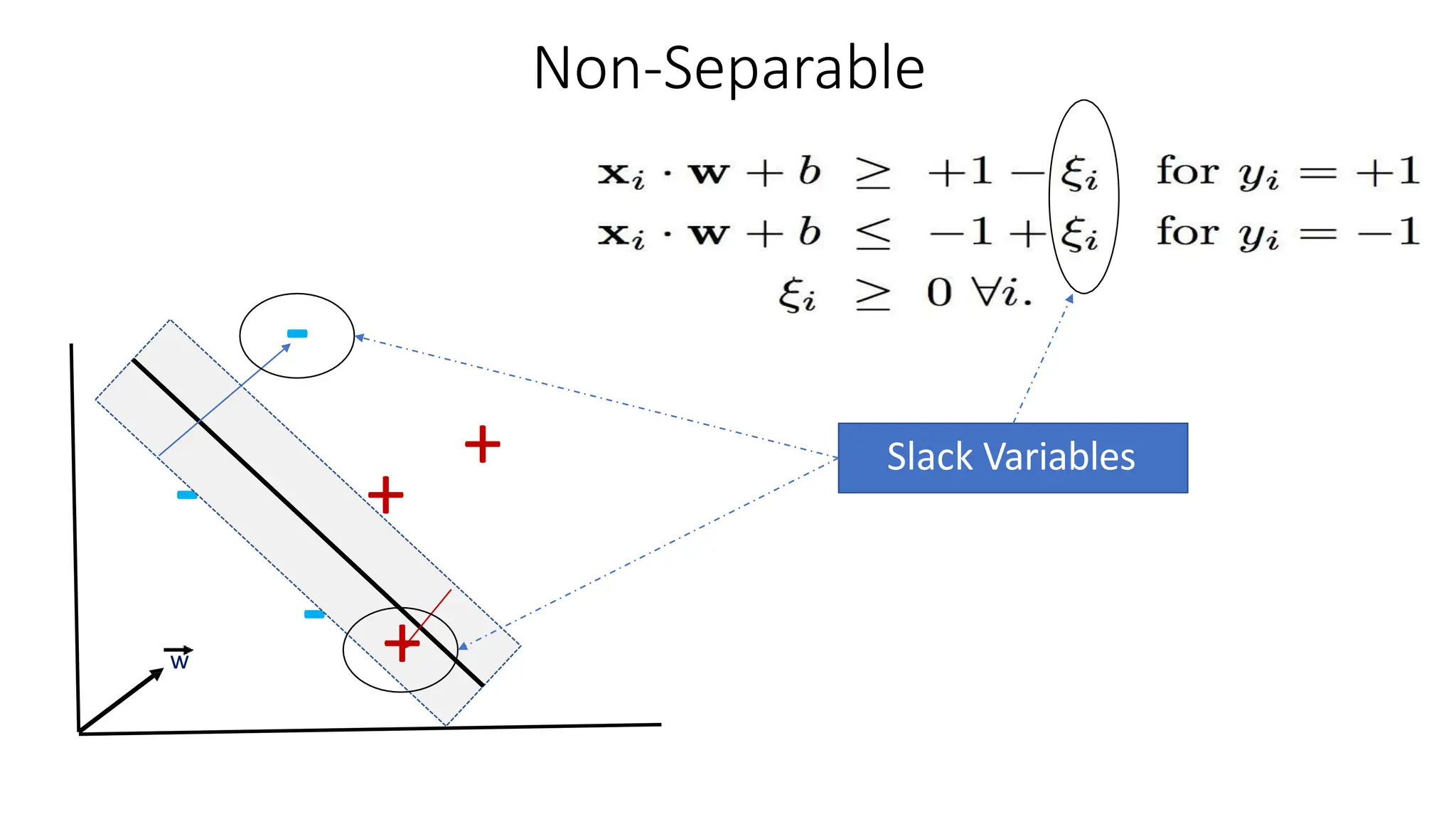

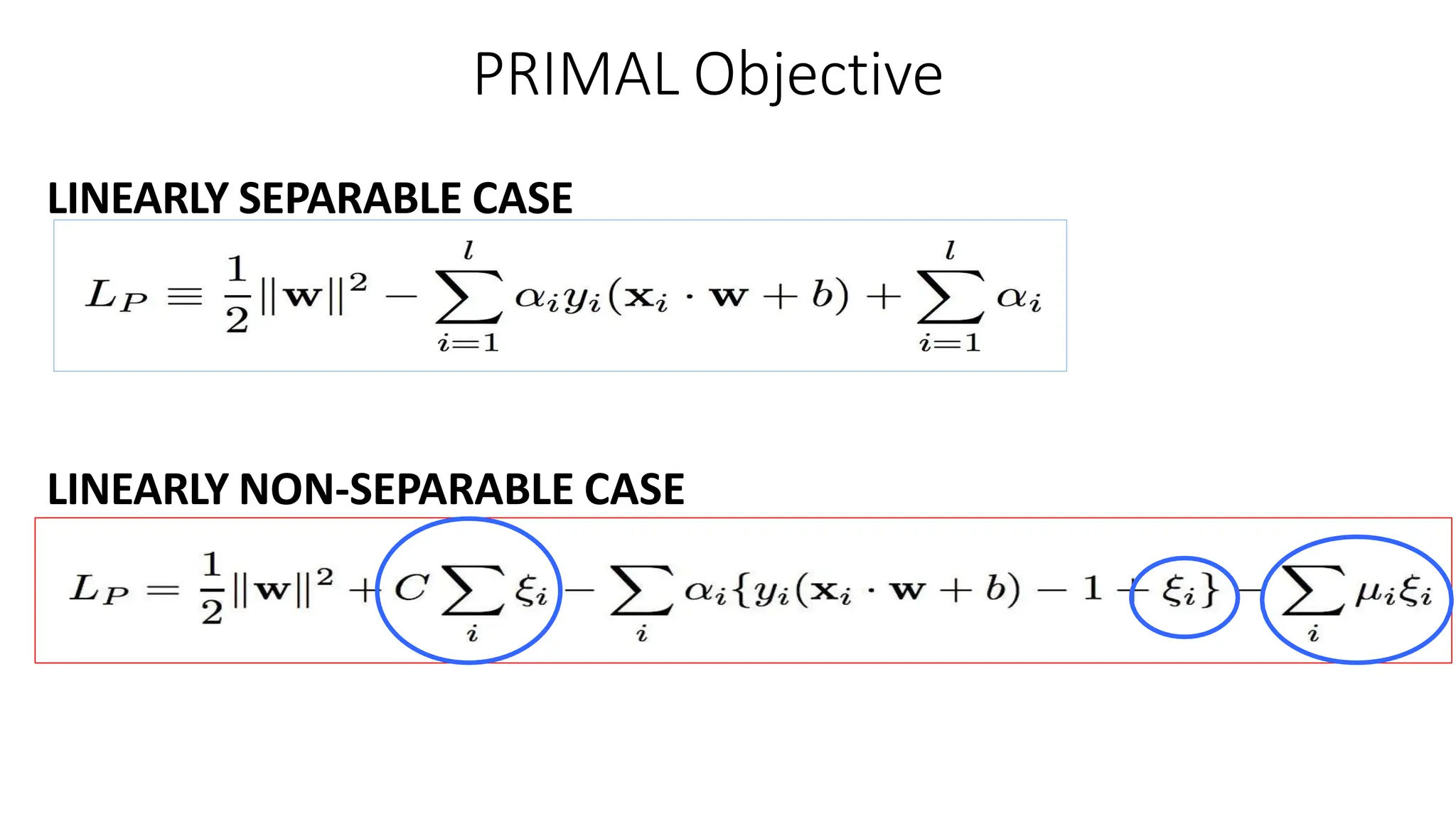

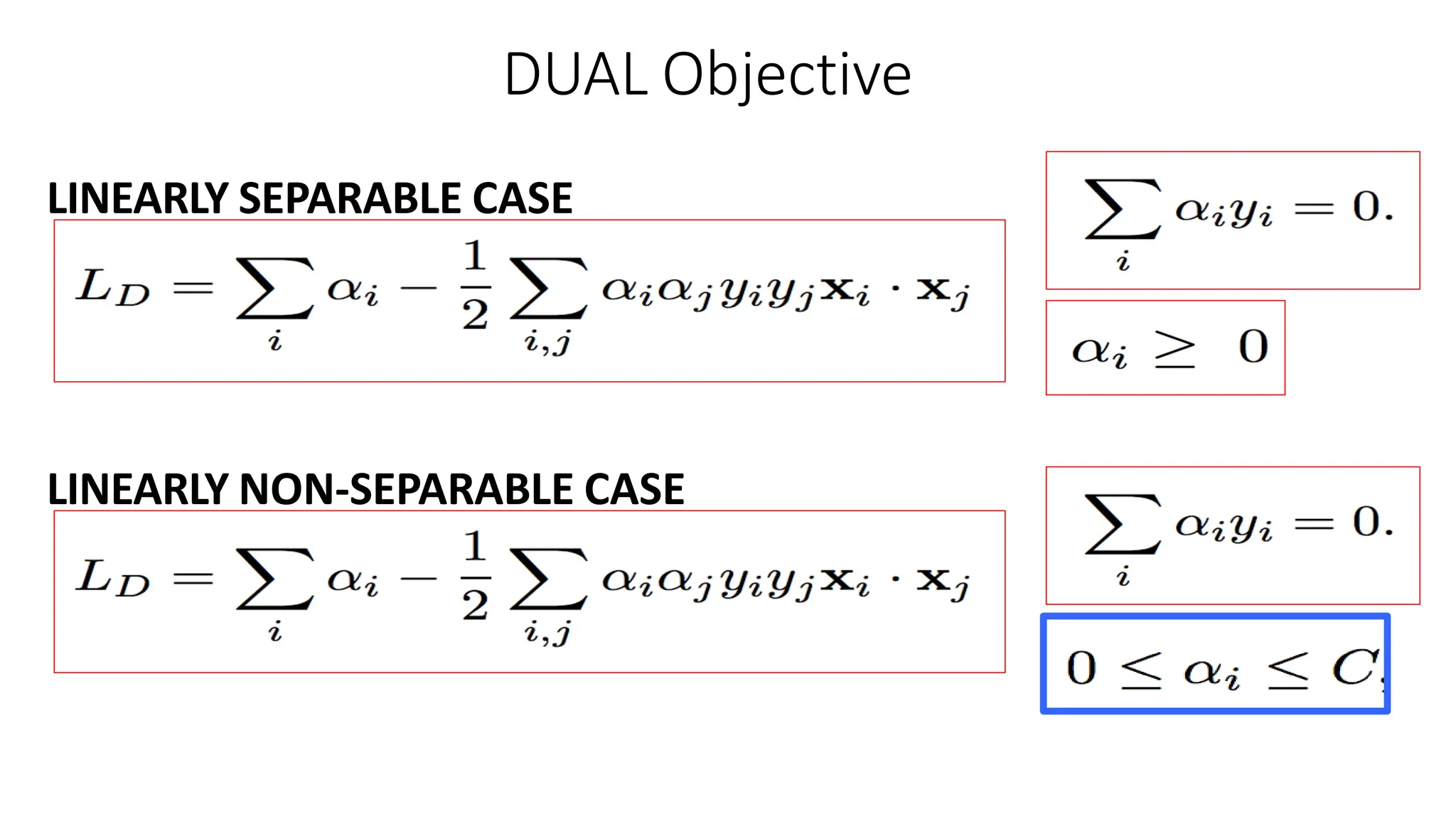

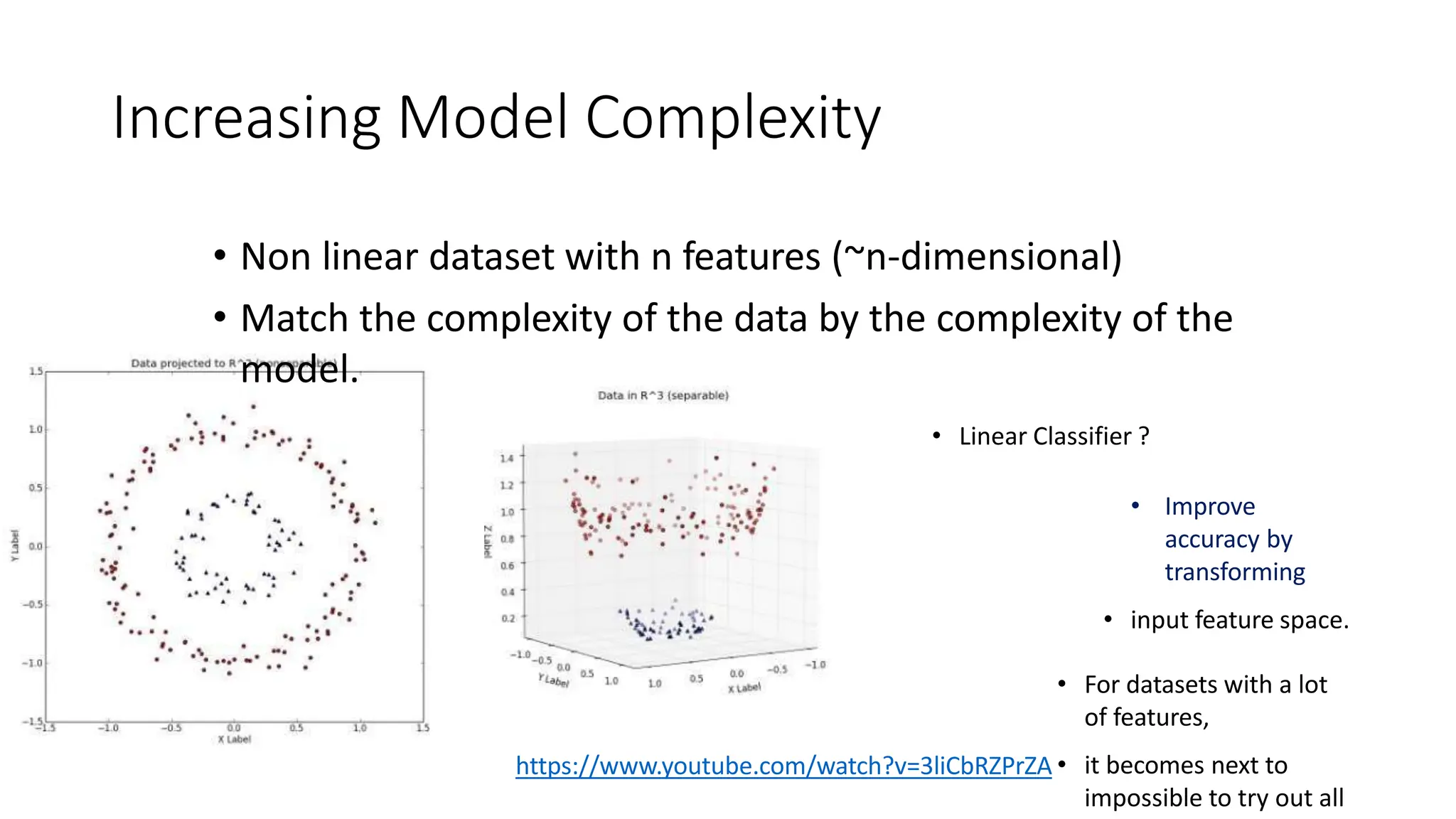

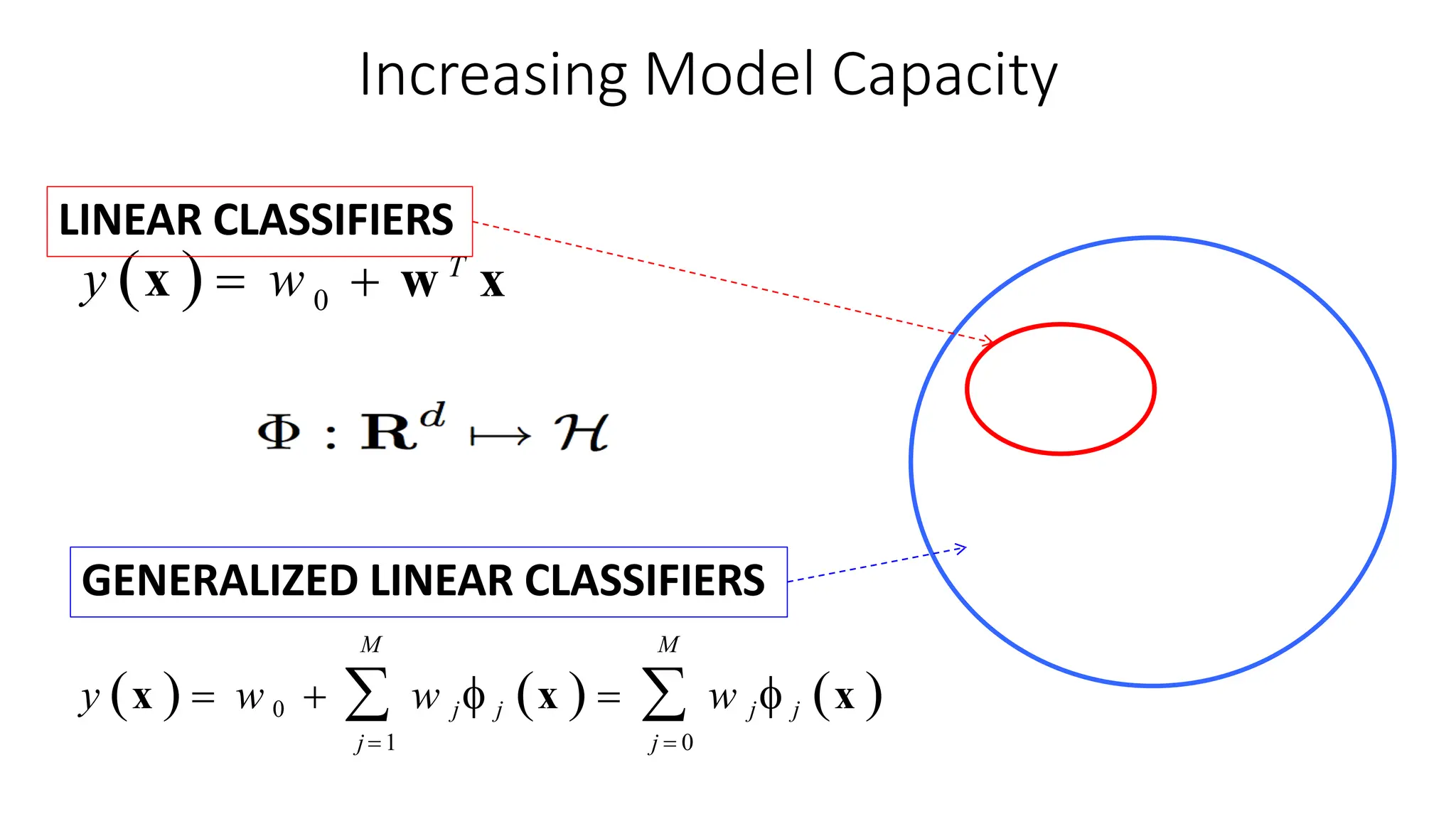

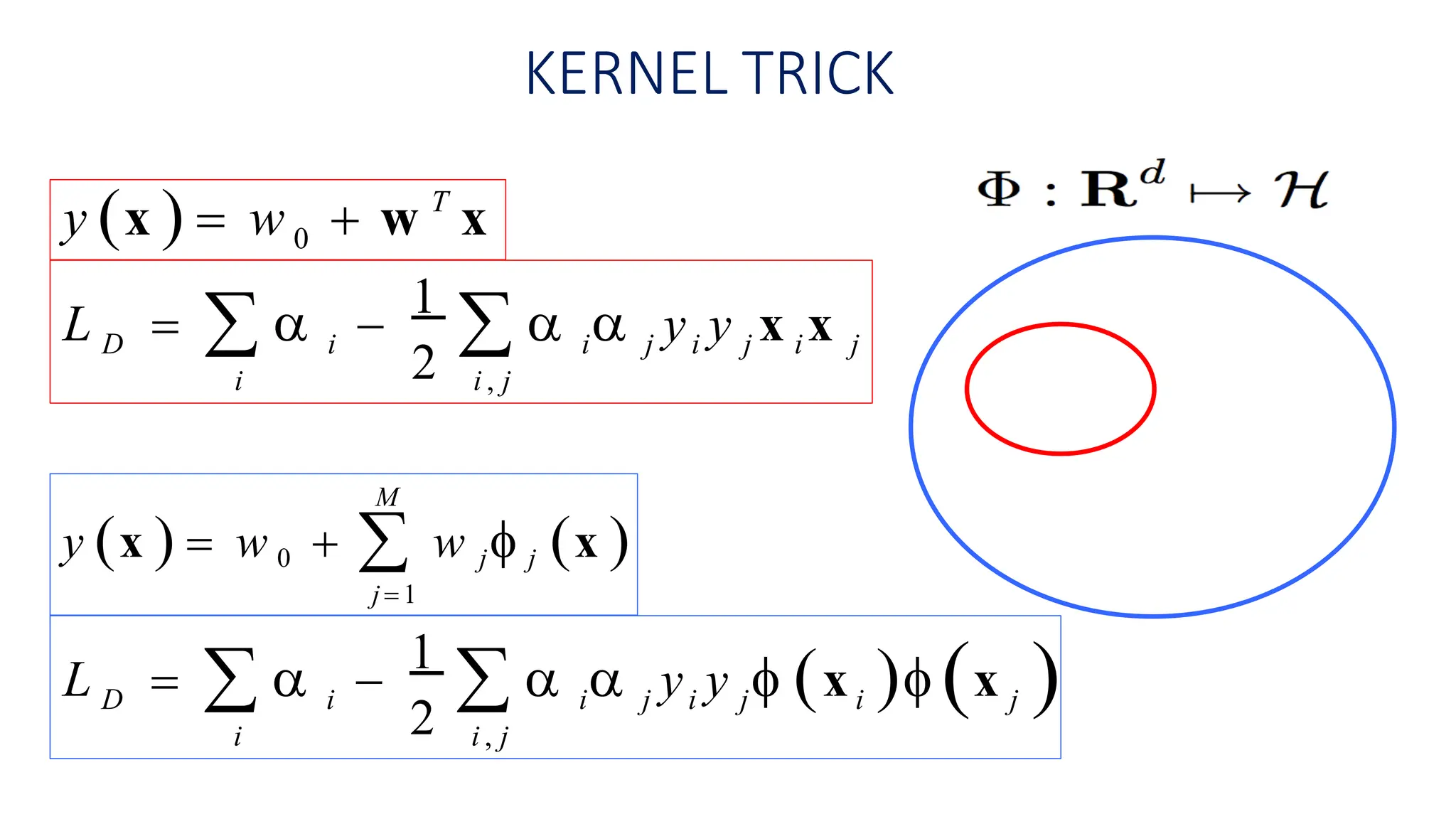

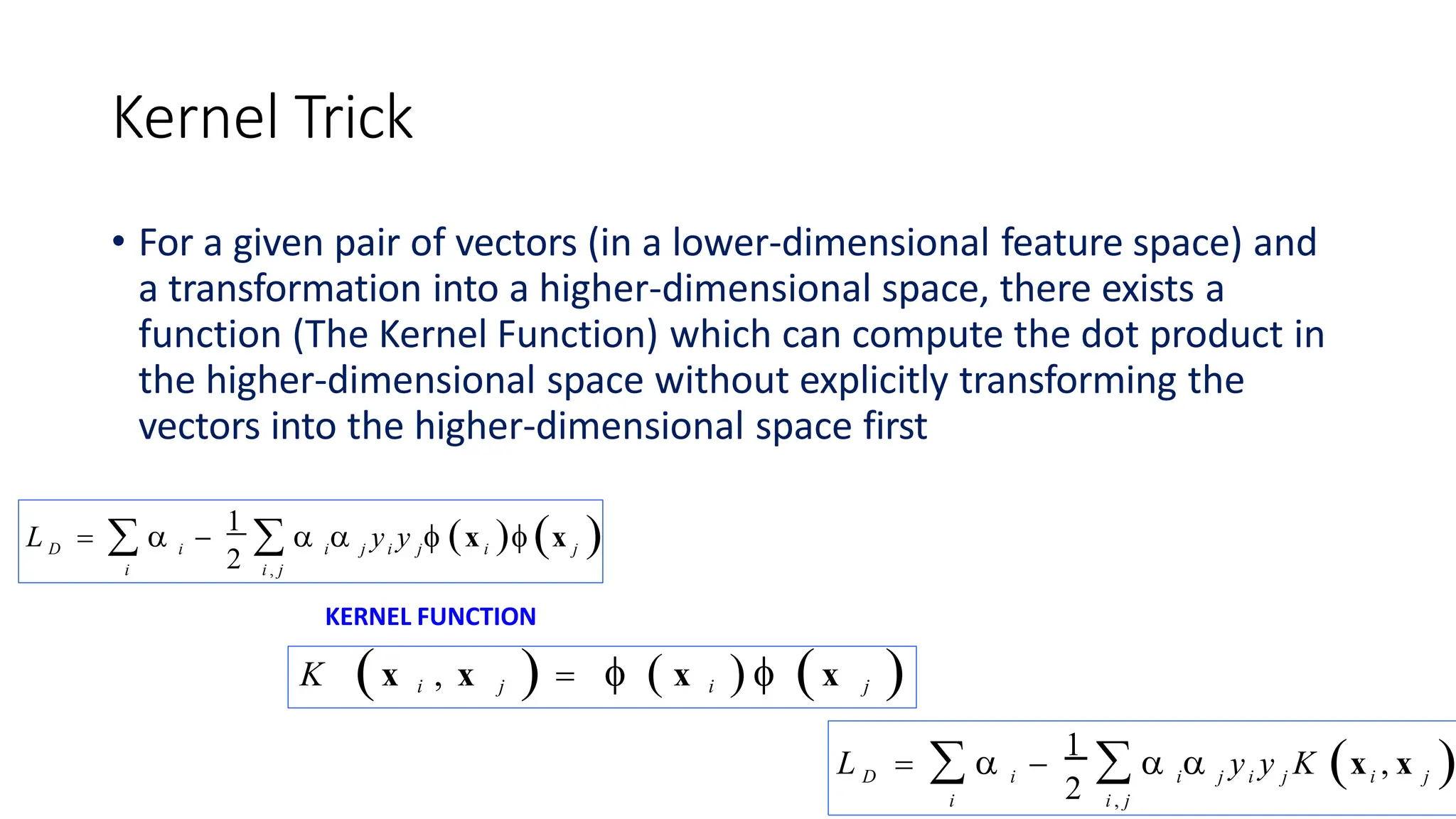

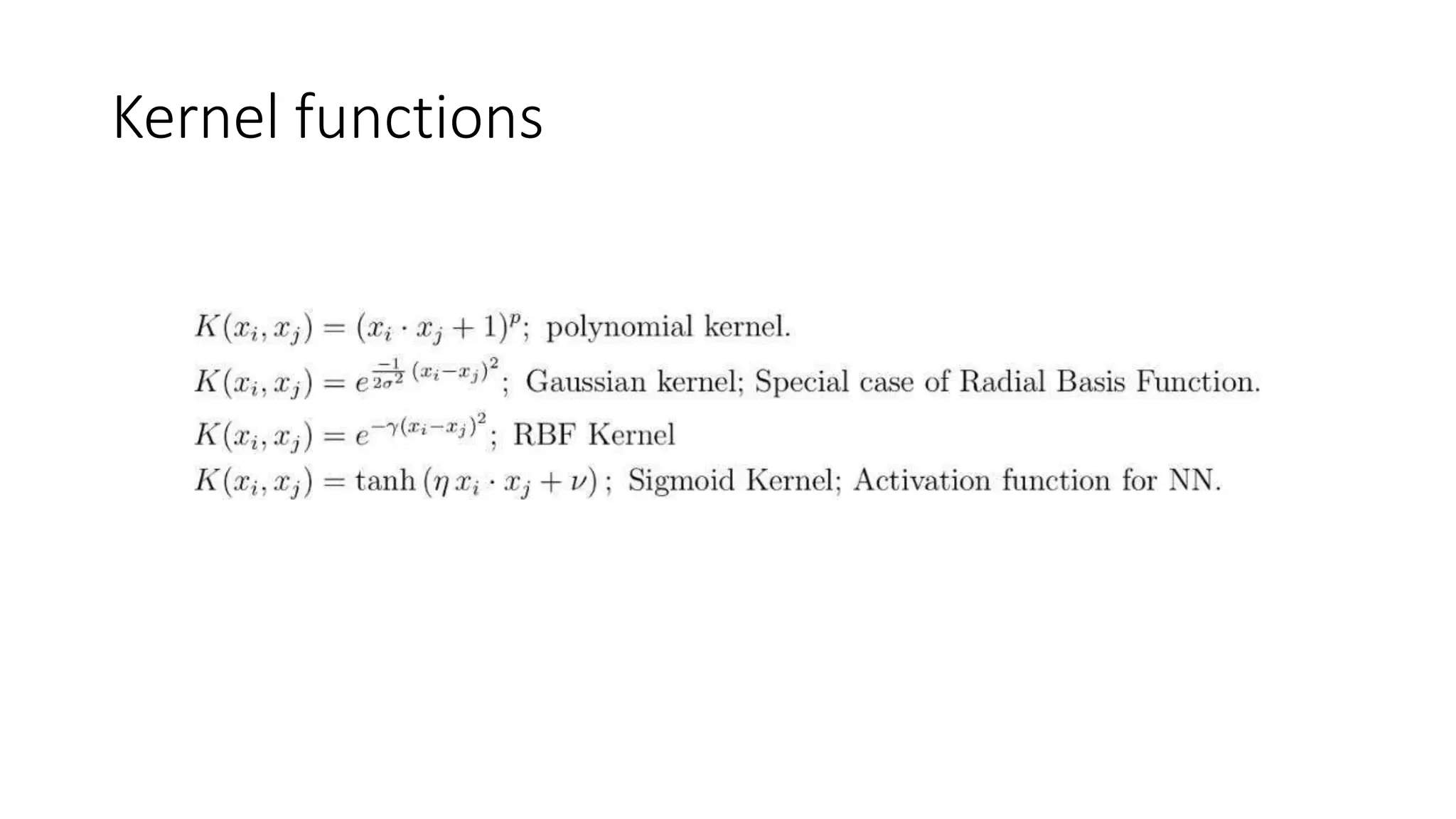

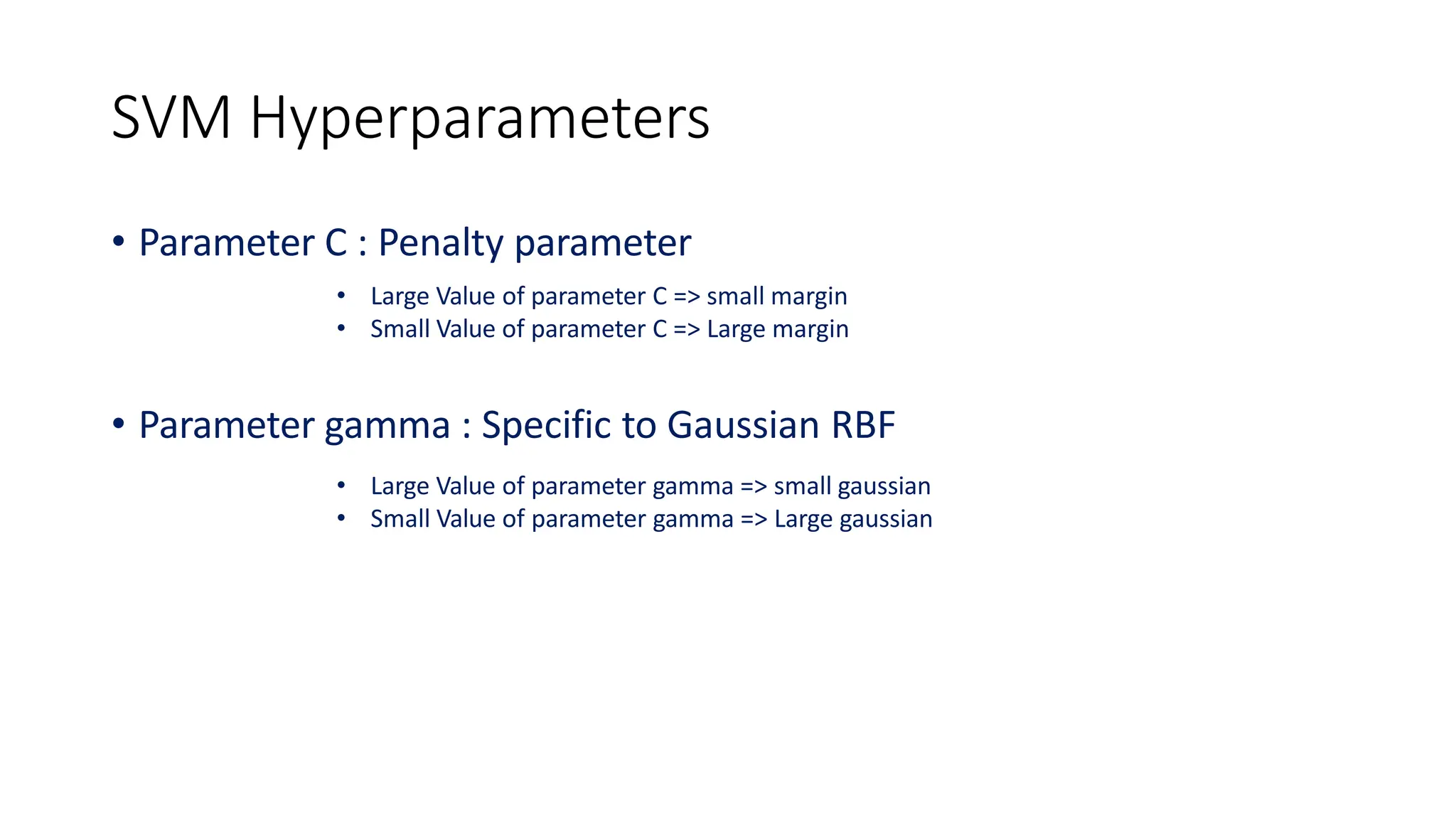

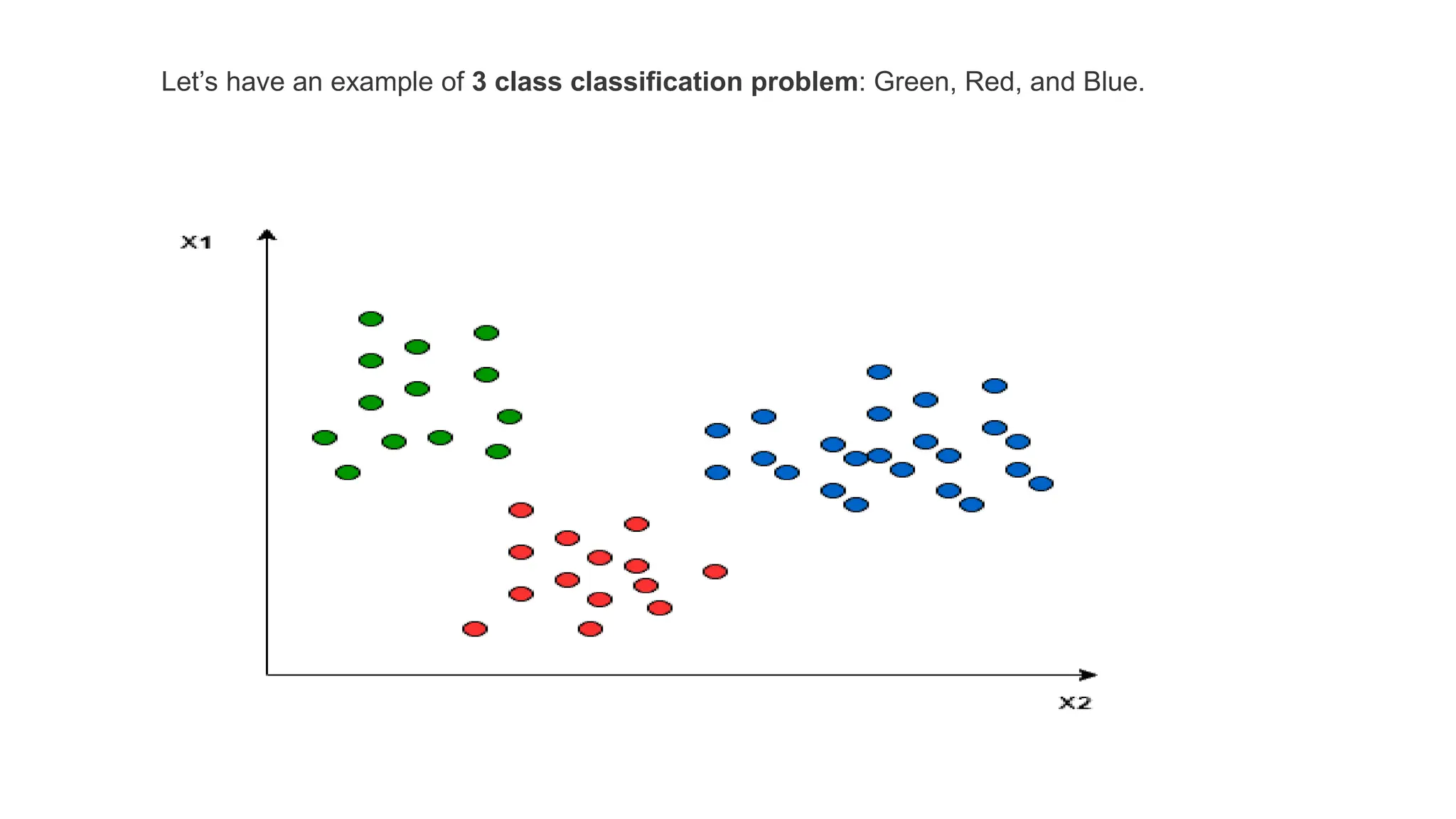

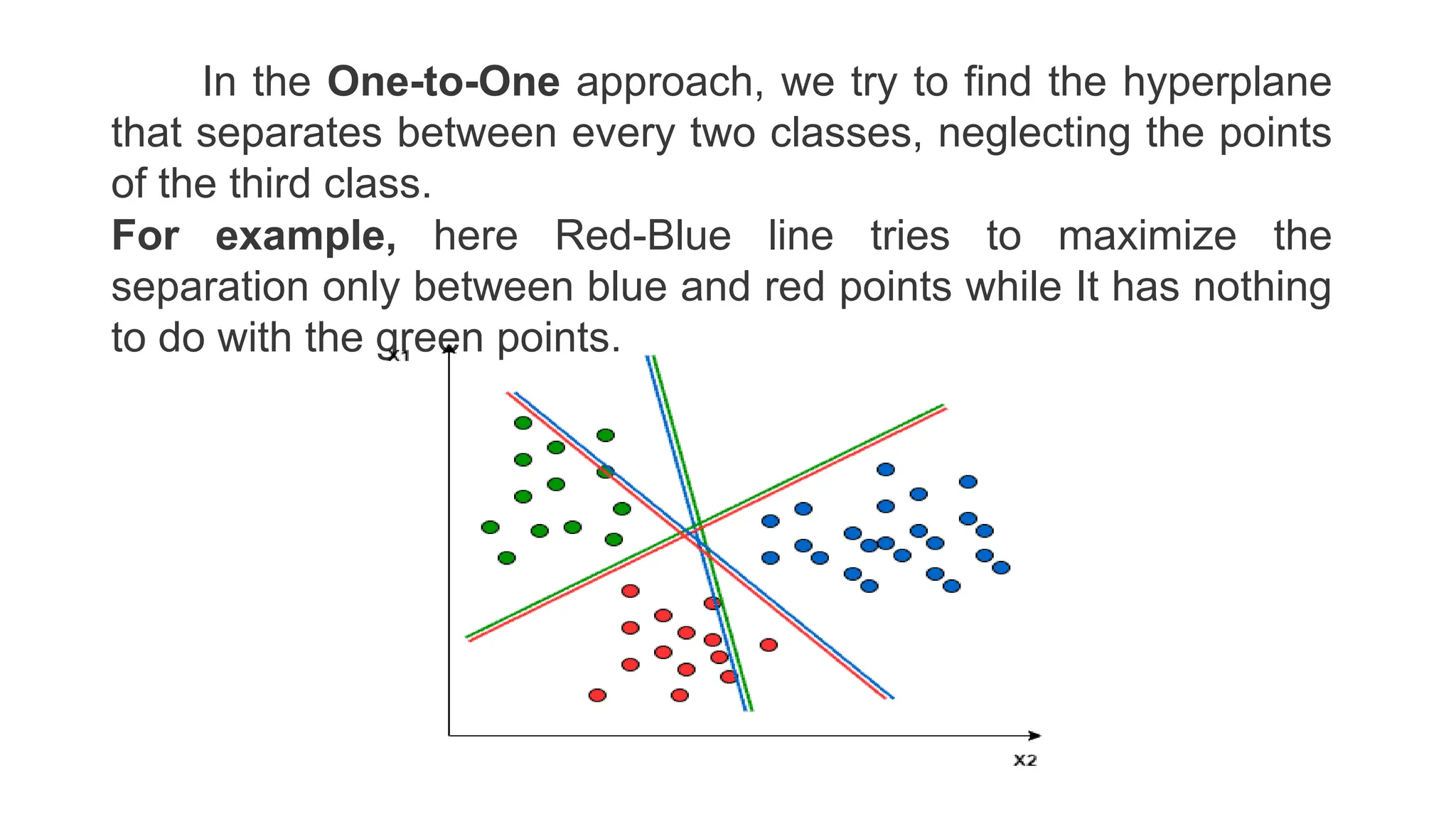

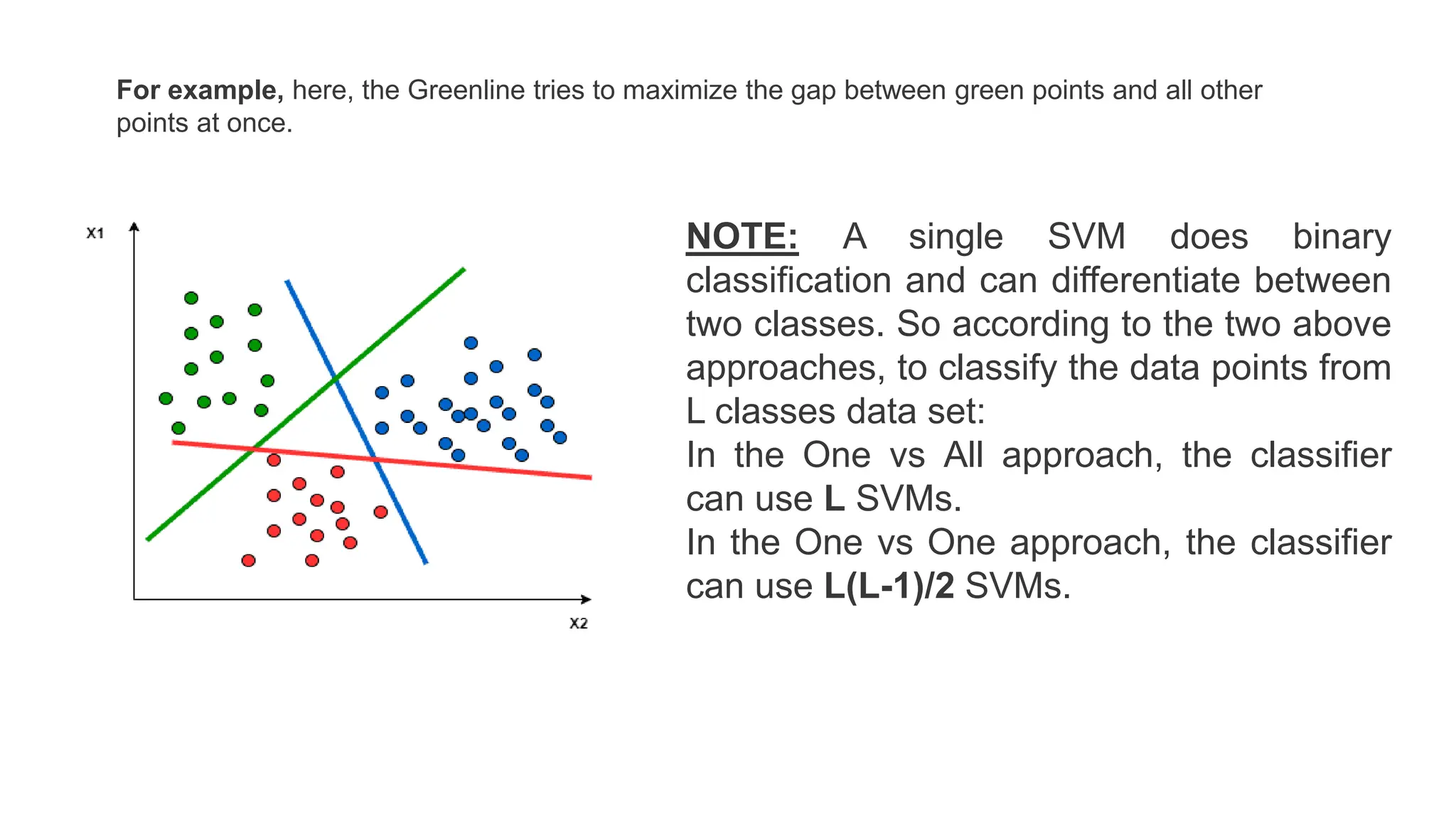

This document discusses support vector machines (SVMs), a supervised machine learning algorithm used for classification and regression. An SVM finds the optimal decision boundary, known as a hyperplane, that separates the classes with the maximum margin. When the data is not linearly separable, a kernel function implicitly maps it into a higher-dimensional space in which a separating hyperplane may exist. The document covers SVMs for both linearly separable and non-separable data, kernel functions, hyperparameters, and approaches to multiclass classification such as one-vs-one and one-vs-all.
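
The kernel idea summarized above can be illustrated with a minimal, standard-library-only sketch. It is not taken from the slides: the toy XOR-style data, the helper names (`phi`, `dot`, `poly_kernel`, `predict`), and the hand-picked hyperplane `w`, `b` are all assumptions chosen for illustration, and no SVM is actually trained here. The sketch only shows that points which no straight line can separate in the plane become separable after a degree-2 feature map, and that the polynomial kernel returns the same value as a dot product in that mapped space.

```python
import math

def dot(a, b):
    """Plain dot product of two equal-length tuples."""
    return sum(ai * bi for ai, bi in zip(a, b))

def phi(x):
    """Explicit degree-2 feature map for a 2-D point (illustrative helper)."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)

def poly_kernel(x, z):
    """Homogeneous polynomial kernel of degree 2: k(x, z) = (x . z)^2."""
    return dot(x, z) ** 2

# XOR-style toy data (assumed): no straight line in the plane separates the classes.
points = [(1.0, 1.0), (-1.0, -1.0), (1.0, -1.0), (-1.0, 1.0)]
labels = [1, 1, -1, -1]

# Hand-picked hyperplane in the mapped space (not learned): w . phi(x) + b = 0.
w = (0.0, 1.0, 0.0)
b = 0.0

def predict(x):
    """Classify by the side of the hyperplane on which phi(x) falls."""
    return 1 if dot(w, phi(x)) + b > 0 else -1

predictions = [predict(p) for p in points]

# Geometric margin of this hyperplane: smallest signed distance to any point.
w_norm = math.sqrt(dot(w, w))
margin = min(y * (dot(w, phi(p)) + b) / w_norm for p, y in zip(points, labels))

# The kernel computes the mapped-space dot product without ever building phi(x).
kernel_matches = all(
    math.isclose(poly_kernel(x, z), dot(phi(x), phi(z)), abs_tol=1e-12)
    for x in points
    for z in points
)
```

A real SVM would choose `w` and `b` by maximizing the margin over the training data rather than fixing them by hand, and would typically work with kernel values alone instead of the explicit map `phi`.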