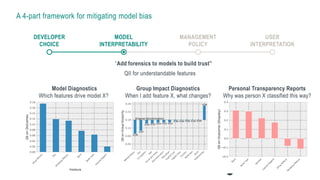

The document discusses model bias in artificial intelligence, emphasizing how biased AI models can perpetuate discrimination. It presents a four-part framework for mitigating model bias: developer choice, model interpretability, management policy, and user interpretation. It also highlights the importance of aligning AI systems with human values and ethics to promote fairness and transparency in AI decision-making.