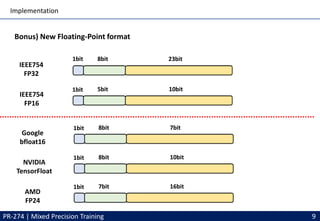

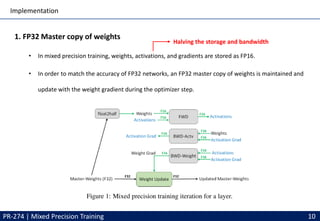

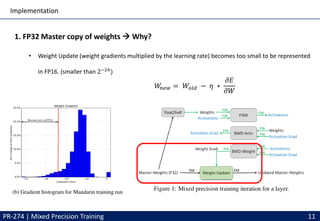

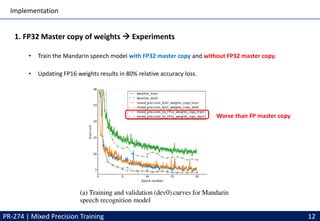

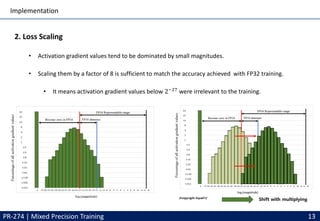

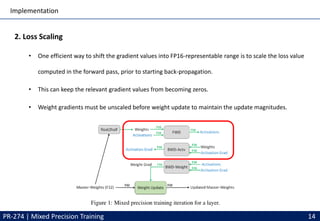

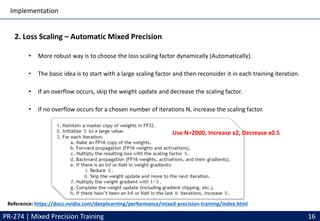

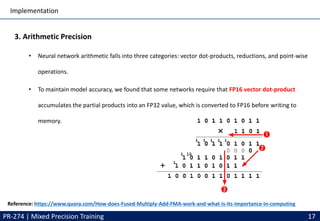

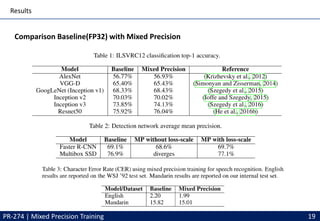

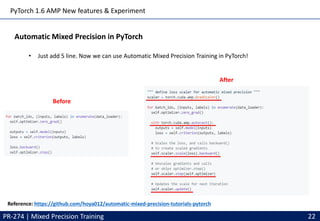

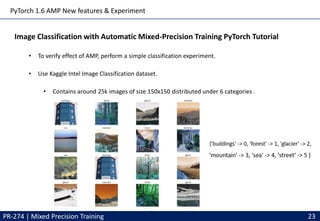

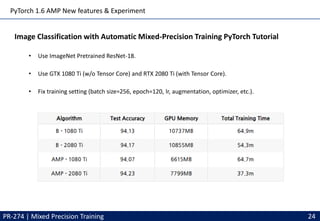

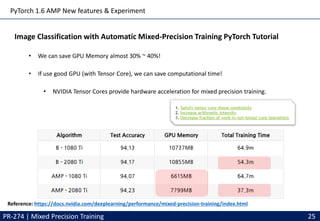

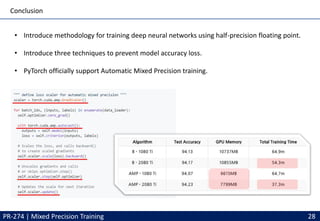

This document discusses mixed precision training for deep neural networks. It introduces three techniques for training models in half-precision (FP16) floating point without losing accuracy:

1. Maintaining an FP32 master copy of the weights, so that weight updates too small to register in FP16 are still accumulated.
2. Scaling the loss so that small gradient values do not underflow to zero in FP16.
3. Performing certain arithmetic in FP32, such as accumulating the partial products of dot products.

Experimental results show that these techniques let a variety of networks match the accuracy of FP32 training while reducing memory use and bandwidth. The document also discusses related work and PyTorch's new Automatic Mixed Precision (AMP) features.
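The three techniques can be illustrated without a GPU. The following is a minimal pure-Python sketch, not PyTorch's AMP implementation. It assumes that rounding a Python float through the `struct` module's `e` (binary16) and `f` (binary32) formats is a fair stand-in for FP16 and FP32 storage. Every function name in it (`to_fp16`, `train_fp16_only`, `train_with_master`, `unscaled_grad`, `dot`) is hypothetical. The gradient is a toy constant, and the loss scale of 2^16 is an illustrative choice, not a recommended value.

```python
import math
import struct
from typing import Sequence


def to_fp16(x: float) -> float:
    """Round x to the nearest IEEE 754 binary16 (FP16) value."""
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except OverflowError:
        # struct refuses finite values beyond the FP16 range; FP16 arithmetic
        # would overflow to infinity instead, so mimic that here.
        return math.copysign(math.inf, x)


def to_fp32(x: float) -> float:
    """Round x to the nearest IEEE 754 binary32 (FP32) value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


# 1) FP32 master weights ----------------------------------------------------
def train_fp16_only(w: float, grad: float, lr: float, steps: int) -> float:
    """Hypothetical toy SGD loop that stores and updates the weight in FP16."""
    w16 = to_fp16(w)
    for _ in range(steps):
        update = to_fp16(lr * to_fp16(grad))
        w16 = to_fp16(w16 - update)  # small updates can round away entirely
    return w16


def train_with_master(w: float, grad: float, lr: float, steps: int) -> float:
    """Same toy loop, but the update is applied to an FP32 master copy."""
    master = to_fp32(w)
    for _ in range(steps):
        # A real step would run forward/backward with to_fp16(master); the
        # toy gradient here is a constant, produced in FP16.
        g32 = to_fp32(to_fp16(grad))  # upcast the FP16 gradient to FP32
        master = to_fp32(master - to_fp32(lr * g32))
    return master


# 2) Loss scaling -------------------------------------------------------------
def unscaled_grad(true_grad: float, loss_scale: float) -> float:
    """Gradient recovered after an FP16 backward pass with a scaled loss.

    Multiplying the loss by loss_scale multiplies every backpropagated
    gradient by the same factor (chain rule), so this toy scales the
    gradient value directly, stores it in FP16, then unscales it in FP32.
    """
    scaled16 = to_fp16(true_grad * loss_scale)
    if math.isinf(scaled16):
        # A dynamic loss-scaling scheme would skip this step and lower the scale.
        raise OverflowError("scaled gradient overflowed FP16")
    return to_fp32(to_fp32(scaled16) / loss_scale)


# 3) FP32 accumulation --------------------------------------------------------
def dot(a: Sequence[float], b: Sequence[float], accumulate_fp32: bool) -> float:
    """Dot product of FP16 inputs with either an FP16 or an FP32 running sum."""
    acc = 0.0
    for x, y in zip(a, b):
        # The product of two FP16 values is exact in FP32 (11-bit significands).
        prod = to_fp16(x) * to_fp16(y)
        acc = to_fp32(acc + prod) if accumulate_fp32 else to_fp16(acc + prod)
    return to_fp16(acc)  # the result is written back to memory as FP16


if __name__ == "__main__":
    print(train_fp16_only(1.0, 1.0, 1e-4, 1000))    # 1.0: every update rounds away
    print(train_with_master(1.0, 1.0, 1e-4, 1000))  # close to 0.9
    print(unscaled_grad(1e-8, 1.0))                 # 0.0: underflows without scaling
    print(unscaled_grad(1e-8, 2.0**16))             # close to 1e-8
    ones = [1.0] * 4096
    print(dot(ones, ones, accumulate_fp32=False))   # 2048.0: FP16 sum stalls
    print(dot(ones, ones, accumulate_fp32=True))    # 4096.0
```

In this toy setting, the FP16-only weight never leaves 1.0. An update of 1e-4 is less than half the FP16 spacing around 1.0, so it rounds away every time. The FP32 master copy accumulates the same updates. A gradient of 1e-8 lies below the smallest FP16 subnormal (about 6e-8) and flushes to zero unless the loss is scaled first. An FP16 running sum stalls at 2048 because 2049 is not representable in FP16.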