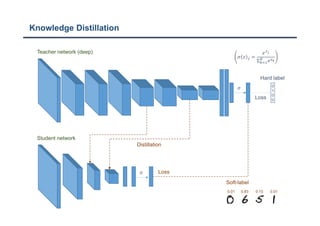

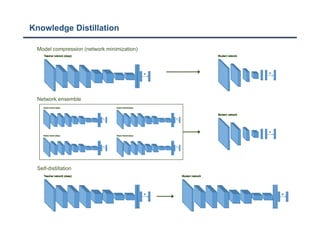

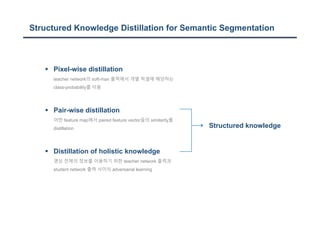

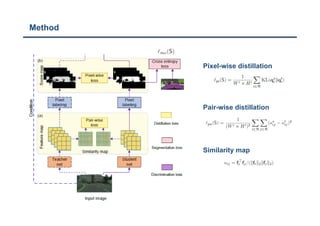

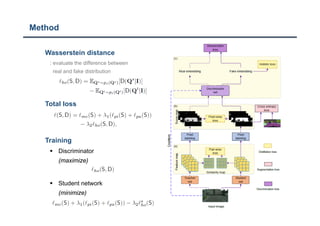

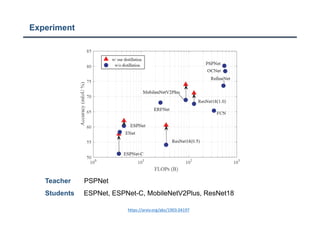

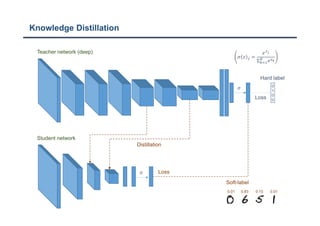

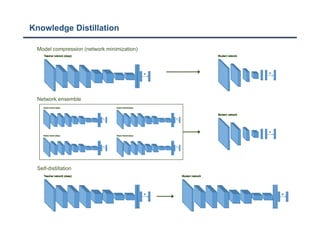

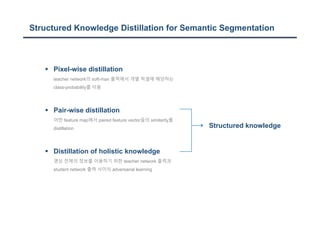

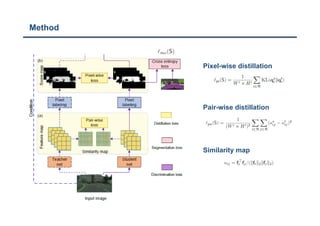

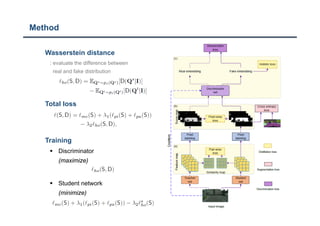

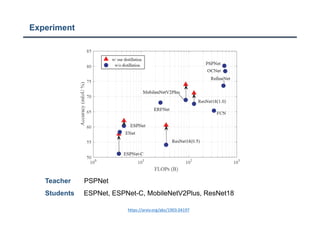

This presentation by Sang Jun Lee reviews a structured knowledge distillation approach for semantic segmentation published at CVPR 2019. It covers pixel-wise and pair-wise distillation, which transfer knowledge from a large teacher network to a compact student network, along with an adversarial learning scheme that further improves the student's accuracy. It also describes how the student network is kept small and which loss functions are combined to train it.
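To make the two distillation terms named in the summary more concrete, here is a minimal pure-Python sketch. It is not taken from the slides: the function names, the KL direction (teacher as reference), the use of cosine similarity between pixel features, and the averaging are all assumptions chosen for illustration, and the adversarial term is omitted.

```python
import math

def softmax(logits):
    """Numerically stable softmax over one pixel's class scores."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def pixelwise_loss(student_logits, teacher_logits):
    """Mean over pixels of KL(teacher || student) on class distributions.

    Assumption: the teacher distribution is the reference; each argument is
    a list of per-pixel class-score lists of equal shape.
    """
    total = 0.0
    for s, t in zip(student_logits, teacher_logits):
        p_s, p_t = softmax(s), softmax(t)
        total += sum(pt * math.log(pt / ps) for pt, ps in zip(p_t, p_s) if pt > 0)
    return total / len(student_logits)

def cosine(u, v):
    """Cosine similarity between two feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm

def pairwise_loss(student_feats, teacher_feats):
    """Mean squared difference between the student's and the teacher's
    pixel-to-pixel similarity matrices (assumed similarity: cosine)."""
    n = len(student_feats)
    total = 0.0
    for i in range(n):
        for j in range(n):
            diff = (cosine(student_feats[i], student_feats[j])
                    - cosine(teacher_feats[i], teacher_feats[j]))
            total += diff * diff
    return total / (n * n)

# Hypothetical toy data: 3 pixels, 2 classes, 2-dimensional features.
teacher_logits = [[2.0, 0.5], [0.1, 1.5], [1.0, 1.0]]
student_logits = [[1.0, 0.8], [0.3, 0.9], [0.2, 1.1]]
teacher_feats = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
student_feats = [[1.0, 0.2], [0.3, 1.0], [0.5, 1.0]]

pixel_term = pixelwise_loss(student_logits, teacher_logits)
pair_term = pairwise_loss(student_feats, teacher_feats)
```

In a training setup these terms would typically be added, with weighting factors, to the usual per-pixel cross-entropy loss on the ground-truth labels. The weights are hyperparameters and are not specified here.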