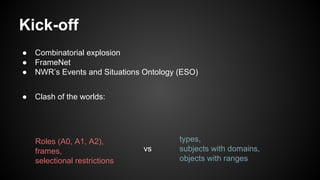

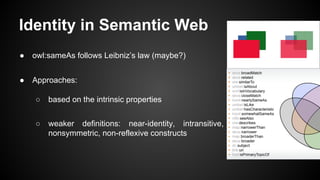

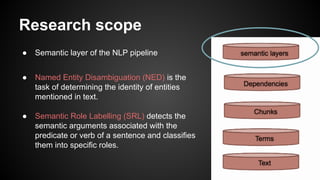

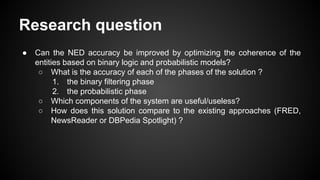

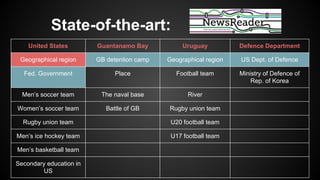

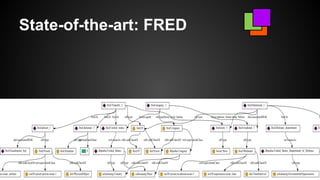

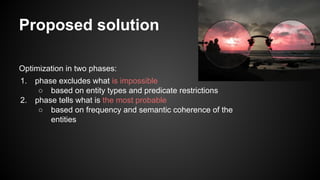

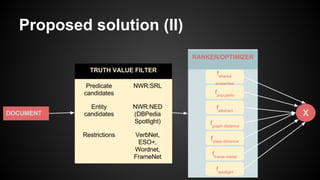

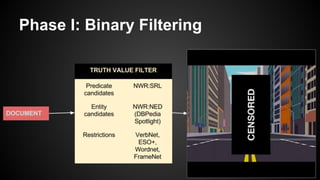

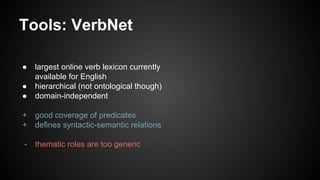

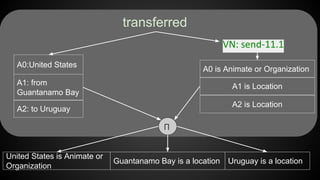

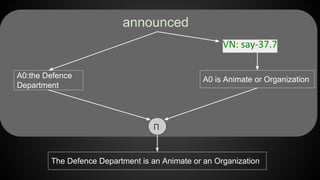

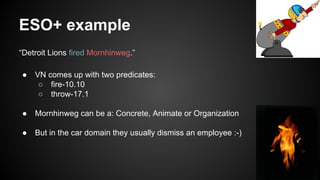

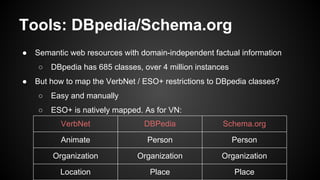

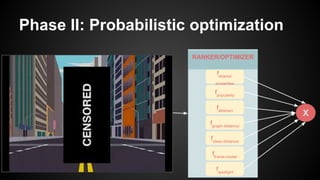

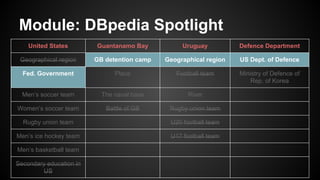

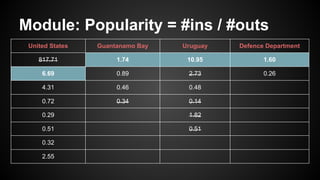

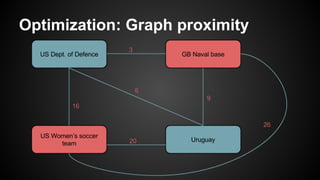

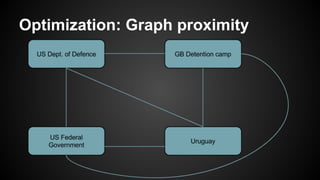

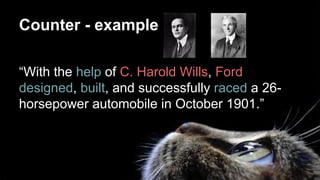

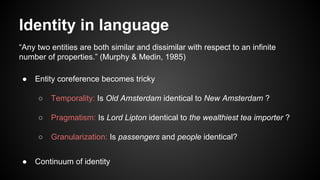

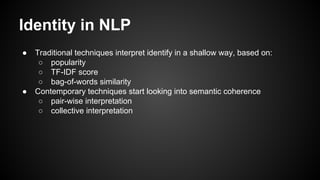

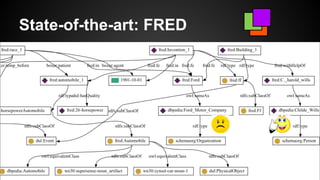

The document discusses the challenges of entity linking in natural language processing (NLP), emphasizing the need to handle ambiguity and context dependence in language. It presents a research study that aims to improve named entity disambiguation (NED) accuracy through a binary optimization phase and a probabilistic optimization phase, drawing on various linguistic resources and methodologies. The study evaluates existing approaches and proposes a solution intended to improve coherence and accuracy when identifying entities in text.
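
To make the ambiguity problem concrete, here is a minimal sketch of context-based disambiguation. It is not the study's binary or probabilistic optimization method, which the summary does not specify. It only illustrates how the same mention can resolve to different entities depending on the surrounding words. The knowledge base `KB`, its entries, and the helpers `tokenize` and `disambiguate` are hypothetical and invented for this illustration.

```python
from typing import Dict, List, Set

# Hypothetical toy knowledge base (an assumption for illustration only):
# each mention maps to candidate entities, each described by a few context words.
KB: Dict[str, Dict[str, Set[str]]] = {
    "paris": {
        "Paris (city, France)": {"france", "capital", "seine", "city"},
        "Paris Hilton": {"hotel", "heiress", "celebrity", "television"},
    },
}


def tokenize(text: str) -> List[str]:
    """Lowercase the text and strip surrounding punctuation from each word."""
    return [word.strip(".,;:!?\"'()").lower() for word in text.split()]


def disambiguate(mention: str, text: str) -> str:
    """Return the candidate whose context words overlap most with the text.

    Ties are broken in favor of the candidate listed first in KB.
    """
    context = set(tokenize(text))
    candidates = KB[mention.lower()]
    return max(candidates, key=lambda name: len(candidates[name] & context))


if __name__ == "__main__":
    print(disambiguate("Paris", "Paris is the capital of France."))
    print(disambiguate("Paris", "Paris appeared on a television show about a hotel heiress."))
```

A simple word-overlap score like this ignores coherence between multiple entities in the same text, which is the kind of limitation that more elaborate NED approaches try to address.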